What is Docker?

When I think back to my early days of learning any technology or tool, I remember being full of questions. Ah! Okay, I still am, but that is not what we are discussing today. Today I am going to share my thoughts and experiences from learning Docker and container technology.

When I first heard about Docker and containers, I asked the internet why we needed yet another tool and what its practical use cases were.

Consider the rise of microservices architecture: in our project, we have multiple small, independently deployable services. (I know you are a good developer, but) you are stuck on an issue and want help from your friend Vraj, who lives in a different city. Vraj will need your project, but every project has its own dependencies, libraries, and configurations, and it may need to run on different platforms like Linux or Windows.

Just because a project runs on your machine does not mean it will run on other machines without problems. Suppose your project runs on Java 8 and requires MySQL 8.2, and Vraj has different versions installed. In that case, he will not be able to run the project and help you. This is where Docker comes to the rescue. With Docker (container technology), we can package all our dependencies, libraries, configurations, and code into an image (more on this later). With this image, or multiple images, Vraj can run your project and help you.

This is one use case of Docker; now let’s look at its practical uses.

Practical Use of Docker

Docker makes the software development life cycle (SDLC), from building to shipping to running, easier to manage.

Docker helps us build images from our project, ship those images, and provides a container runtime to run applications.

Suppose you want to deploy your application to a cloud provider, and you have multiple servers in the cloud or on-premises. Without Docker, you would have to install all the dependencies, set up the runtime environment, and handle the rest of the configuration manually on each server. That takes time and has a learning curve.

With Docker, you only need to install Docker on the server, and you can run your application from its Docker image.

Deployment becomes even easier when you use CI/CD tools with Docker. I will write a separate blog about CI/CD, but for now let’s focus on Docker. A CI/CD pipeline makes it easy to keep track of changes to the project: whenever the project changes, the pipeline builds new Docker images and publishes them to your cloud servers.

Now imagine you are not using Docker and you change the Java version in your project: you would need to update the Java version on every server. Time-consuming work, right? With Docker, the Java version lives inside the image, so you only rebuild and redeploy the image.

Another question? What is an image, and how do you create one?

You are all becoming like me.

Docker Images

Think of a Docker image as a blueprint that contains everything your program (application) needs to run: the necessary tools, dependencies, configurations, and your code.

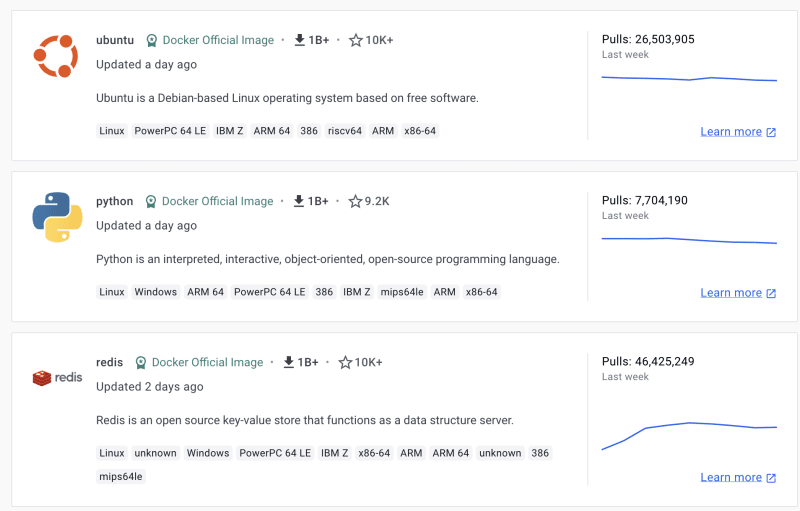

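These are some popular Docker images. You can run any of them with the docker run command without installing their dependencies yourself. The images come from Docker Hub (more on this later); if an image is not already on your machine, docker run pulls it automatically before starting it.

```shell
docker run --name mynginx1 -p 80:80 -d nginx
```

Here --name names the container mynginx1, -p 80:80 maps port 80 on your machine to port 80 inside the container, and -d runs the container in the background (detached mode).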

How to create these images?

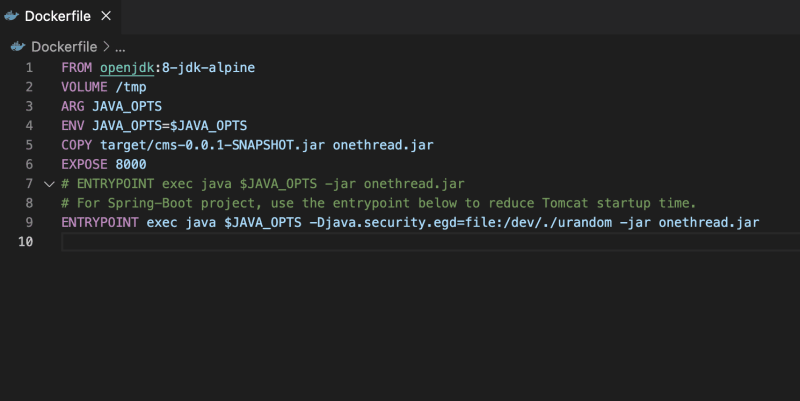

To create a Docker image, we first write a Dockerfile: a text file containing the step-by-step build instructions for the image. Below is an example of a Dockerfile.
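As a minimal sketch, here is what a Dockerfile for a small Java application could look like. It assumes the application has already been built into target/app.jar, runs on Java 8, and listens on port 8080; the base image eclipse-temurin:8-jre, the jar path, and the port are illustrative assumptions, not a specific project. The snippet writes the file from a shell using a heredoc, so you can paste it into a terminal in your project directory.

```shell
# Write a minimal Dockerfile for a hypothetical Java 8 app built to target/app.jar
cat > Dockerfile <<'EOF'
# Start from an image that already ships a Java 8 runtime
FROM eclipse-temurin:8-jre

# Run the following instructions inside /app in the image
WORKDIR /app

# Copy the (assumed) application jar from the build output into the image
COPY target/app.jar app.jar

# Document the port the app is assumed to listen on
EXPOSE 8080

# Command that runs when a container starts from this image
ENTRYPOINT ["java", "-jar", "app.jar"]
EOF
```

Each instruction is one build step: FROM picks the starting image, COPY brings your code in, and ENTRYPOINT defines what runs when a container starts.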

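To build a Docker image, run the command below from the directory where the Dockerfile is located:

```shell
docker build -t my-app .
```

The -t flag gives the image a name (my-app is just a placeholder), and the final . tells Docker to use the current directory as the build context.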

Don’t worry if this feels like a lot. You might think writing a Dockerfile is complicated, especially since every project is different. What if I told you that you can generate one with a few clicks?

Many code editors offer plugins that can generate a Dockerfile for you.

For example, in Visual Studio Code you can install the Docker extension and generate a Dockerfile for your project with a single command from the Command Palette.

Now you have a Docker image, but where do you store it, and how do you use it on other machines?

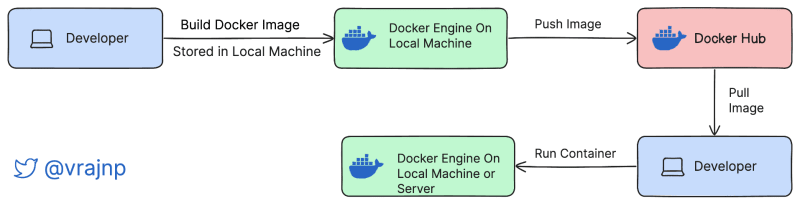

When you build a Docker image, it is stored locally by your Docker engine (in Docker Desktop, if that is what you use). You can run it on your own machine, but a remote machine cannot use it directly. This is where a Docker registry comes to the rescue. Another new term?

Docker Hub (Registry)

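Think of a registry as storage for images, just as GitHub is storage for our code. Docker Hub is Docker’s public registry, where you can store images and share them with the world. After logging in with docker login, you can push a local image to Docker Hub. Image names on Docker Hub start with your username, so first tag the image with that name, then push it:

```shell
docker tag my-app myusername/my-app:1.0
docker push myusername/my-app:1.0
```

Here myusername is a placeholder for your Docker Hub username, my-app is the local image you want to push, and 1.0 is a version tag. Repository names must be lowercase.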

Once your image is on Docker Hub, you can make it private or public. Public images can be used by anyone. To download an image to your local machine, use docker pull:

```shell
docker pull nginx
```

Then start a container from it with docker run, and the application will be running:

```shell
docker run --name mynginx1 -p 80:80 -d nginx
```

Complete Flow of Docker Image

Containers

A running instance of an image is called a container. Under the hood, a container is an isolated process running on the host machine. Run the following command to list your running containers (add -a to include stopped ones):

```shell
docker container ls
```

There are many more commands to explore in the Docker world, but as a beginner, focus on understanding the concepts and gradually apply them in your projects.

Advanced Topics

We have covered the basics of Docker; now let’s look at more advanced topics like Docker Compose and networking.

Let’s suppose we are building an application made of multiple microservices, and we want to run them with Docker. The first step is to create a Docker image for each service and push them to Docker Hub.

We could use docker run for each service, but that quickly becomes a problem. Each service needs its own configuration (ports, environment variables, networks), and managing all of that through separate run commands, while also starting the services together, is hard. This is where Docker Compose becomes our hero and comes to the rescue.

Docker Compose

Docker Compose is a tool for defining and running multi-container applications. You describe the configuration of all your services in a single YAML file and start them together with one command. I will cover YAML in a different blog.

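Here is a sample docker-compose file. By default, Docker Compose looks for a file named compose.yaml or docker-compose.yaml (the .yml extension works too), so name your file accordingly.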

Here I have two services, currency-exchange and currency-conversion, and you can specify all the configuration they need. Don’t worry if you don’t recognize everything in it; focus on the concepts. In this blog I will explain networks and depends_on, while environment variables you will pick up by building projects. So start creating projects; what are you waiting for?
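A minimal sketch of such a file, written from the shell with a heredoc, might look like this. The image names (myusername/currency-exchange:1.0 and myusername/currency-conversion:1.0), the ports, the CURRENCY_EXCHANGE_URL variable, and the network name are hypothetical placeholders.

```shell
# Write a hypothetical two-service Compose file; all names and ports are placeholders
cat > docker-compose.yaml <<'EOF'
services:
  currency-exchange:
    image: myusername/currency-exchange:1.0
    ports:
      - "8000:8000"
    networks:
      - currency-network

  currency-conversion:
    image: myusername/currency-conversion:1.0
    ports:
      - "8100:8100"
    environment:
      # On a shared network, the service name works as a hostname
      CURRENCY_EXCHANGE_URL: http://currency-exchange:8000
    depends_on:
      - currency-exchange
    networks:
      - currency-network

networks:
  currency-network:
EOF
```

With the file in place, docker compose up -d starts both services in the background, and docker compose down stops and removes them. Because both services share a network, currency-conversion can reach currency-exchange by its service name.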

Depends_on

Our services need to communicate with each other to work as a complete application. In the above image, you can see two services listed under depends_on, which means my service depends on them. Now imagine those two services are not running and I call the currency-exchange service: I will not get the expected result, right? So we declare them in depends_on, and Docker Compose starts them first. Note that by default Compose only waits for those containers to start, not for the applications inside them to be ready to accept requests.
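If a service must wait until its dependency is actually ready, Compose lets you attach a healthcheck to the dependency and use the long form of depends_on with condition: service_healthy. A minimal sketch follows; the images, the port, and the /health endpoint are hypothetical, and the healthcheck assumes curl is available inside the currency-exchange image.

```shell
# Write a hypothetical Compose file where currency-conversion waits for a healthy currency-exchange
cat > docker-compose.yaml <<'EOF'
services:
  currency-exchange:
    image: myusername/currency-exchange:1.0
    healthcheck:
      # Assumes curl exists in the image and the app serves /health on port 8000
      test: ["CMD", "curl", "-f", "http://localhost:8000/health"]
      interval: 10s
      timeout: 5s
      retries: 5

  currency-conversion:
    image: myusername/currency-conversion:1.0
    depends_on:
      currency-exchange:
        # Start only after the healthcheck passes, not just after the container starts
        condition: service_healthy
EOF
```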

Networking

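As mentioned, services need to communicate. Just as we use social networking platforms to communicate, containers need a networking mechanism. We can define networks in a docker-compose file and attach services to them. For example, this declares a network named currency-exchange:

```yaml
networks:
  currency-exchange:
```

Each service then lists the networks it should join under its own networks key.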

*Can we create multiple networks? Yes. But why do we need to?*

Let’s take an example: you have a front-end service, a back-end service, and a database service. If you put them all on one network, every service can communicate with every other service. But for security, you want only the back-end service to be able to reach the database. In that case, you need multiple networks.
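A minimal sketch of that setup in Compose could look like the following. The service names, the frontend and backend images, and the network names are hypothetical (mysql:8.0 is the official MySQL image); the point is that the backend joins both networks while the frontend and the database share none.

```shell
# Write a hypothetical Compose file that isolates the database from the frontend
cat > docker-compose.yaml <<'EOF'
services:
  frontend:
    image: myusername/frontend:1.0
    networks:
      - frontend-net

  backend:
    image: myusername/backend:1.0
    networks:
      # The backend joins both networks, so it can talk to the frontend and the database
      - frontend-net
      - backend-net

  database:
    image: mysql:8.0
    environment:
      # Placeholder password for local experiments only
      MYSQL_ROOT_PASSWORD: example
    networks:
      # Only the backend shares this network, so the frontend cannot reach the database
      - backend-net

networks:
  frontend-net:
  backend-net:
EOF
```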

Refer to the image below for more explanation.

Docker Challenges

Although Docker is a great containerization tool, it has some limitations. Let’s discuss the challenges we face when we deploy applications with Docker alone.

Orchestration

When we run many services at a large scale, managing all the containers by hand becomes hard. With a microservice architecture, we need to scale containers up and down based on the load the application is getting, and Docker on its own does not scale containers automatically, so managing deployments at that scale quickly becomes impractical. For that, we need an orchestration tool like Kubernetes or Docker Swarm. I will write a separate blog about orchestration.

Service Discovery

In a microservice architecture, you would otherwise need an extra service in your project for service discovery. This is one more reason to use Kubernetes (k8s): it provides service discovery out of the box, so we do not have to build and manage it ourselves.

Application Monitoring and Logging

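We also need an application monitoring tool. Docker gives you container logs (for example, through the docker logs command), but that is not enough for monitoring applications in production. You can use dedicated tools such as Prometheus for metrics-based monitoring, alongside a log aggregation tool for logs.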

I know it can feel overwhelming to learn all of these technologies, but take them one at a time and start practicing by building projects.

Let me know if anything is unclear. Until next time, may your code be error-free.
