🎯 The Problem

As a developer, I faced this question every day:

"Can we ship this release to production?"

We had test results, coverage metrics, SonarQube reports... but no single source of truth to answer this simple question.

So I built QualityHub - an AI-powered platform that analyzes your quality metrics and gives you instant go/no-go decisions.

🚀 What is QualityHub?

QualityHub is an open-source quality intelligence platform that:

- 📊 Aggregates test results from any framework (Jest, JUnit, JaCoCo...)

- 🤖 Analyzes quality metrics with AI

- ✅ Decides if you can ship to production

- 📈 Tracks trends over time in a beautiful dashboard

The Stack

- Backend: TypeScript + Express + PostgreSQL + Redis

- Frontend: Next.js 14 + Tailwind CSS

- CLI: TypeScript with parsers for Jest, JaCoCo, JUnit

- Deployment: Docker Compose (self-hostable)

- License: MIT

💡 How It Works

1. Universal Format: qa-result.json

Instead of forcing you to use specific tools, QualityHub uses an open standard format:

```json
{
  "version": "1.0.0",
  "project": {
    "name": "my-app",
    "version": "2.3.1",
    "commit": "a3f4d2c",
    "branch": "main",
    "timestamp": "2026-01-31T14:30:00Z"
  },
  "quality": {
    "tests": {
      "total": 1247,
      "passed": 1245,
      "failed": 2,
      "skipped": 0,
      "duration_ms": 45230,
      "flaky_tests": ["UserAuthTest.testTimeout"]
    },
    "coverage": {
      "lines": 87.3,
      "branches": 82.1,
      "functions": 91.2
    }
  }
}
```

This format works with any test framework.
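The types below are a minimal sketch of that format. They model only the fields in the example above. The `validateQAResult` helper is hypothetical and is not part of QualityHub. It assumes that `passed + failed + skipped` should equal `total`, which holds for the example (1245 + 2 + 0 = 1247), and that coverage values are percentages.

```typescript
// Types mirroring the qa-result.json example (only the fields shown there).
interface QAProject {
  name: string;
  version: string;
  commit: string;
  branch: string;
  timestamp: string; // ISO 8601, e.g. "2026-01-31T14:30:00Z"
}

interface QATests {
  total: number;
  passed: number;
  failed: number;
  skipped: number;
  duration_ms: number;
  flaky_tests: string[];
}

interface QACoverage {
  lines: number; // percentages, 0-100 (assumption)
  branches: number;
  functions: number;
}

interface QAResult {
  version: string;
  project: QAProject;
  quality: { tests: QATests; coverage: QACoverage };
}

// Hypothetical sanity check: test counts must add up, coverage must be a valid percentage.
function validateQAResult(r: QAResult): string[] {
  const errors: string[] = [];
  const t = r.quality.tests;
  const sum = t.passed + t.failed + t.skipped;
  if (sum !== t.total) {
    errors.push(`tests: passed + failed + skipped (${sum}) != total (${t.total})`);
  }
  for (const key of ["lines", "branches", "functions"] as const) {
    const pct = r.quality.coverage[key];
    if (pct < 0 || pct > 100) errors.push(`coverage.${key} out of range: ${pct}`);
  }
  return errors;
}
```

Any tool that can emit this shape can feed the backend. A check like this lets a third-party parser catch malformed output before pushing it.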

2. CLI Parsers

The CLI automatically converts your test results:

```bash
# Jest (JavaScript/TypeScript)
qualityhub parse jest ./coverage

# JaCoCo (Java)
qualityhub parse jacoco ./target/site/jacoco/jacoco.xml

# JUnit (Java/Kotlin/Python)
qualityhub parse junit ./build/test-results/test
```
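The post does not say which Jest output `qualityhub parse jest ./coverage` reads. Here is one plausible sketch. It assumes Istanbul's `json-summary` report, which Jest writes to `coverage/coverage-summary.json` when `"json-summary"` is listed in `coverageReporters`, and maps its `total` entry onto the `coverage` section. The function names are hypothetical.

```typescript
// One metric in Istanbul's json-summary output.
interface IstanbulMetric {
  total: number;
  covered: number;
  skipped: number;
  pct: number | string; // Istanbul reports "Unknown" when total is 0
}

interface IstanbulSummary {
  total: {
    lines: IstanbulMetric;
    branches: IstanbulMetric;
    functions: IstanbulMetric;
    statements: IstanbulMetric;
  };
}

// Recompute the percentage from covered/total, rounded to one decimal.
// Treating "nothing to cover" as 100% is a judgment call made for this sketch.
function percent(m: IstanbulMetric): number {
  return m.total === 0 ? 100 : Math.round((m.covered / m.total) * 1000) / 10;
}

// Hypothetical mapping into the qa-result.json "coverage" section.
function toQaCoverage(summary: IstanbulSummary) {
  const t = summary.total;
  return {
    lines: percent(t.lines),
    branches: percent(t.branches),
    functions: percent(t.functions),
  };
}
```

In Node, you could call it as `toQaCoverage(JSON.parse(readFileSync("coverage/coverage-summary.json", "utf8")))`. Recomputing from `covered`/`total` avoids relying on the `"Unknown"` string case.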

3. Risk Analysis Engine

The backend analyzes your results and calculates a Risk Score (0-100):

Risk factors analyzed:

- Test pass rate

- Code coverage (lines, branches, functions)

- Flaky tests

- Coverage trends

- Code quality metrics (if available)

Output:

```json
{
  "risk_score": 85,
  "status": "SAFE",
  "decision": "PROCEED",
  "reasoning": "Test pass rate: 99.8%. Coverage: 87.3%. No critical issues.",
  "recommendations": []
}
```
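The example output shows only one status/decision pair (`SAFE` / `PROCEED` at 85). The sketch below shows how a score might map to a status and decision and how the reasoning string could be assembled. The `WARNING`/`CRITICAL` and `REVIEW`/`BLOCK` values and the 80/50 thresholds are hypothetical. The reasoning format is copied from the example, and with the sample data (1245 of 1247 tests passing, 87.3% line coverage) it reproduces that string exactly.

```typescript
// Only "SAFE" and "PROCEED" appear in the post; the other values are hypothetical.
type Status = "SAFE" | "WARNING" | "CRITICAL";
type Decision = "PROCEED" | "REVIEW" | "BLOCK";

// Illustrative thresholds (higher score = safer), not taken from QualityHub.
function classify(score: number): { status: Status; decision: Decision } {
  if (score >= 80) return { status: "SAFE", decision: "PROCEED" };
  if (score >= 50) return { status: "WARNING", decision: "REVIEW" };
  return { status: "CRITICAL", decision: "BLOCK" };
}

// Builds a reasoning string in the same format as the example output.
function buildReasoning(passed: number, total: number, lineCoverage: number, issues: string[]): string {
  const passRate = total === 0 ? 0 : (passed / total) * 100;
  const tail = issues.length === 0 ? "No critical issues." : issues.join(" ");
  return `Test pass rate: ${passRate.toFixed(1)}%. Coverage: ${lineCoverage.toFixed(1)}%. ${tail}`;
}
```

Keeping the status mapping separate from the scoring rules means a team could move the thresholds without touching how the score is computed.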

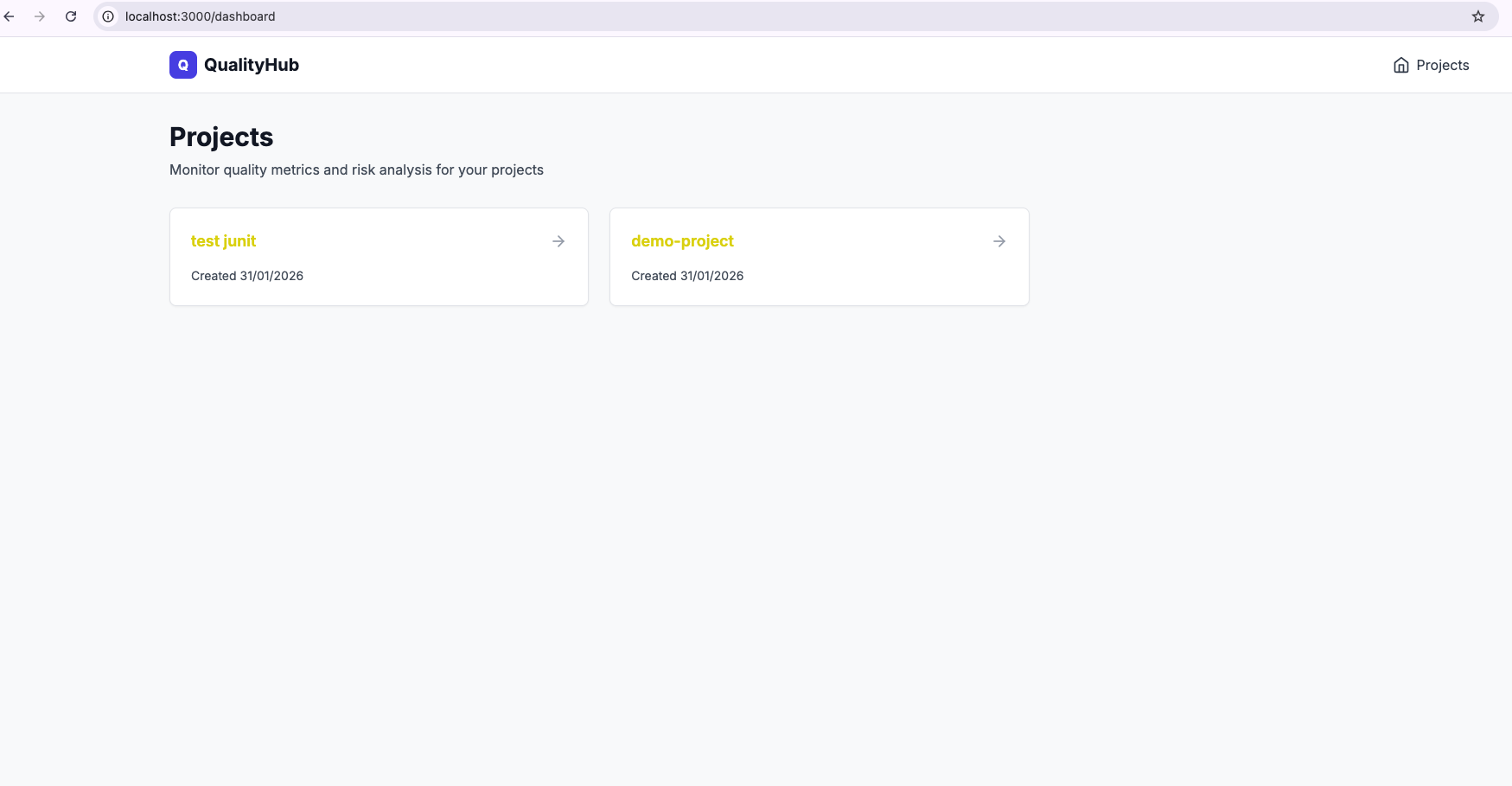

4. Beautiful Dashboard

Track metrics over time, see trends, and make informed decisions.

🔧 Quick Start

Self-Hosted (5 minutes)

```bash
# Clone repo
git clone https://github.com/ybentlili/qualityhub.git
cd qualityhub

# Start everything with Docker
docker-compose up -d

# ✅ Backend: http://localhost:8080
# ✅ Frontend: http://localhost:3000
```

Use the CLI

```bash
# Install
npm install -g qualityhub-cli

# Initialize
qualityhub init

# Parse your test results
qualityhub parse jest ./coverage

# Push to QualityHub
qualityhub push qa-result.json
```

Done! Your metrics appear in the dashboard instantly.

🎨 Why I Built This

The Pain Points

Fragmented Tools: Jest for tests, JaCoCo for coverage, SonarQube for quality... each tool has its own UI and format.

No Single Answer: "Can we ship?" required checking 5 different tools and making a gut decision.

No History: Hard to track quality trends over time.

Manual Process: No automation, no CI/CD integration.

The Solution

QualityHub aggregates everything into one dashboard and uses AI to make the decision for you.

🏗️ Architecture

```text
┌─────────────┐
│     CLI     │  ← Parse test results
└──────┬──────┘
       │ POST /api/v1/results
       ↓
┌─────────────────────────────┐
│        Backend (API)        │
│  • Express + TypeScript     │
│  • PostgreSQL + Redis       │
│  • Risk Analysis Engine     │
└──────────────┬──────────────┘
               │
               ↓
┌─────────────────────────────┐
│    Frontend (Dashboard)     │
│  • Next.js 14               │
│  • Real-time metrics        │
└─────────────────────────────┘
```
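The CLI-to-backend hop in the diagram is a single `POST /api/v1/results`. The hypothetical helper below builds that request from a `qa-result.json` object, using the backend URL from the Quick Start. The post does not describe authentication, so no auth header is included. The payload is assumed to be the qa-result.json document sent as-is.

```typescript
// Hypothetical request shape for the push step; auth is omitted (not described in the post).
interface PushRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildPushRequest(baseUrl: string, result: unknown): PushRequest {
  // Strip trailing slashes so "http://localhost:8080/" and "http://localhost:8080" behave the same.
  const base = baseUrl.replace(/\/+$/, "");
  return {
    url: `${base}/api/v1/results`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(result),
  };
}
```

On Node 18+ you could send it with `const { url, ...init } = buildPushRequest("http://localhost:8080", result); await fetch(url, init);`.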

📊 Technical Deep Dive

1. The Parser Architecture

Each parser extends a base class:

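```typescript
// Stand-in for the full qa-result.json type.
type QAResult = Record<string, unknown>;

export abstract class BaseParser {
  constructor(protected readonly projectInfo: { name: string }) {}

  // Each framework parser reads its report and returns a qa-result.json object.
  abstract parse(filePath: string): Promise<QAResult>;

  protected buildBaseResult(adapterName: string) {
    return {
      version: '1.0.0',
      project: {
        name: this.projectInfo.name,
        commit: process.env.GIT_COMMIT || 'unknown',
        timestamp: new Date().toISOString(),
      },
      metadata: {
        // Auto-detect CI/CD environment
        ci_provider: this.detectCIProvider(),
        adapters: [adapterName],
      },
    };
  }

  // Simplified, illustrative detection via variables that common CI services set.
  protected detectCIProvider(): string {
    if (process.env.GITHUB_ACTIONS) return 'github-actions';
    if (process.env.GITLAB_CI) return 'gitlab-ci';
    if (process.env.JENKINS_URL) return 'jenkins';
    return process.env.CI ? 'other' : 'local';
  }
}
```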

This makes adding new parsers trivial. Want pytest support? Extend BaseParser and implement parse().
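pytest can already emit JUnit-style XML with `pytest --junitxml=report.xml`. The core of a `parse()` for that format might therefore look like the hypothetical function below, which sums the counts on each `<testsuite>` element. To stay dependency-free it uses regular expressions instead of an XML library. That means it assumes flat (non-nested) `<testsuite>` elements with double-quoted attributes. It also counts errors as failures, which is a choice made for this sketch.

```typescript
interface TestCounts {
  total: number;
  passed: number;
  failed: number;
  skipped: number;
}

// Sums tests/failures/errors/skipped across all <testsuite> elements.
function countJUnitTests(xml: string): TestCounts {
  const counts: TestCounts = { total: 0, passed: 0, failed: 0, skipped: 0 };
  // `\b` stops this from matching the <testsuites> wrapper element.
  const suiteRe = /<testsuite\b([^>]*)>/g;
  let suite: RegExpExecArray | null;
  while ((suite = suiteRe.exec(xml)) !== null) {
    // Collect numeric attributes (non-numeric ones like name become NaN and are ignored).
    const attrs: Record<string, number> = {};
    const attrRe = /(\w+)="([^"]*)"/g;
    let attr: RegExpExecArray | null;
    while ((attr = attrRe.exec(suite[1])) !== null) {
      attrs[attr[1]] = Number(attr[2]);
    }
    const tests = attrs.tests ?? 0;
    const failed = (attrs.failures ?? 0) + (attrs.errors ?? 0);
    const skipped = attrs.skipped ?? 0;
    counts.total += tests;
    counts.failed += failed;
    counts.skipped += skipped;
    counts.passed += tests - failed - skipped;
  }
  return counts;
}
```

A subclass of `BaseParser` would read the file, call a function like this, and place the result under `quality.tests` on top of `buildBaseResult(...)`.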

2. Risk Scoring Algorithm (MVP)

The current version uses rule-based scoring:

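```typescript
// Minimal input types for the rule-based scorer (field names follow qa-result.json).
interface TestResults { failed: number }
interface Coverage { lines: number }

function computeRiskScore(tests: TestResults, coverage: Coverage, flakyTests: string[]): number {
  let score = 100;

  // Test failures: -5 per failed test
  if (tests.failed > 0) {
    score -= tests.failed * 5;
  }

  // Coverage thresholds: -15 when line coverage is below 70%
  if (coverage.lines < 70) {
    score -= 15;
  }

  // Flaky tests: -3 per flaky test
  if (flakyTests.length > 0) {
    score -= flakyTests.length * 3;
  }

  // Ensure 0-100 range
  return Math.max(0, Math.min(100, score));
}
```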
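Coverage trends appear in the list of risk factors, but the rules above only look at a single run. The sketch below shows one way a trend factor could work. It compares current line coverage with the average of the previous runs and turns the drop into a penalty. The window size, the 1-point tolerance, the 2-points-per-point rate and the cap of 20 are all hypothetical values chosen for illustration.

```typescript
// Drop (in percentage points) of current line coverage vs. the average of recent runs.
function coverageDrop(history: number[], current: number, window = 5): number {
  const recent = history.slice(-window);
  if (recent.length === 0) return 0; // no history yet, nothing to compare against
  const avg = recent.reduce((sum, v) => sum + v, 0) / recent.length;
  return Math.max(0, avg - current);
}

// Illustrative rule: ignore drops up to 1 point, then -2 per point, capped at -20.
function trendPenalty(history: number[], current: number): number {
  const drop = coverageDrop(history, current);
  return drop <= 1 ? 0 : Math.min(20, Math.round((drop - 1) * 2));
}
```

The penalty would simply be subtracted from the score before the final clamp to 0-100, like the other rules.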

Future: Replace with AI-powered analysis (Claude API) for contextual insights.
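One way that AI step could sit on top of the rule-based engine is sketched below. The score stays the source of truth, and the model is only asked to explain it. This is a hypothetical design, not QualityHub's implementation. The request follows Anthropic's Messages API (`POST /v1/messages` with `x-api-key` and `anthropic-version` headers and a `model`/`max_tokens`/`messages` body). The model id is left to the caller, and the `max_tokens` value is arbitrary.

```typescript
interface AnalysisInput {
  riskScore: number;
  passRate: number; // percent
  lineCoverage: number; // percent
  flakyTests: string[];
}

// Builds a Messages API request asking the model to explain an existing score.
function buildClaudeRequest(input: AnalysisInput, model: string, apiKey: string) {
  const prompt = [
    `Rule-based risk score: ${input.riskScore}/100.`,
    `Test pass rate: ${input.passRate.toFixed(1)}%.`,
    `Line coverage: ${input.lineCoverage.toFixed(1)}%.`,
    `Flaky tests: ${input.flakyTests.length ? input.flakyTests.join(", ") : "none"}.`,
    "Explain in two or three sentences where the release risk is, without changing the score.",
  ].join("\n");

  return {
    url: "https://api.anthropic.com/v1/messages",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: JSON.stringify({
      model,
      max_tokens: 300,
      messages: [{ role: "user", content: prompt }],
    }),
  };
}
```

Keeping the numeric decision rule-based and using the model only for the explanation keeps the go/no-go logic auditable.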

3. Database Schema

Simple and efficient:

```sql
CREATE TABLE projects (
  id UUID PRIMARY KEY,
  name VARCHAR(255) UNIQUE NOT NULL,
  created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

CREATE TABLE qa_results (
  id UUID PRIMARY KEY,
  project_id UUID REFERENCES projects(id),
  version VARCHAR(50),
  commit VARCHAR(255),
  branch VARCHAR(255),
  timestamp TIMESTAMP,
  metrics JSONB, -- Flexible JSON storage
  created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

CREATE TABLE risk_analyses (
  id UUID PRIMARY KEY,
  qa_result_id UUID REFERENCES qa_results(id),
  risk_score INTEGER,
  status VARCHAR(50),
  reasoning TEXT,
  risks JSONB,
  recommendations JSONB,
  decision VARCHAR(50)
);
```

JSONB allows flexible metric storage without schema migrations.
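The sketch below shows how a result could be written to and read back from that schema. It assumes that the `metrics` column holds the `quality` section of `qa-result.json`, which the post does not state. It also assumes node-postgres-style `$1..$n` placeholders. The helper and constant names are hypothetical.

```typescript
// Assumption: JSONB "metrics" holds the "quality" section of qa-result.json.
interface QAResultDoc {
  project: { version: string; commit: string; branch: string; timestamp: string };
  quality: Record<string, unknown>;
}

// Parameterized insert; column order matches qaResultParams below.
const INSERT_QA_RESULT = `
  INSERT INTO qa_results (id, project_id, version, commit, branch, timestamp, metrics)
  VALUES ($1, $2, $3, $4, $5, $6, $7)`;

// Reading a nested metric straight out of JSONB, e.g. for a coverage trend chart.
const COVERAGE_TREND = `
  SELECT timestamp, (metrics->'coverage'->>'lines')::numeric AS line_coverage
  FROM qa_results
  WHERE project_id = $1
  ORDER BY timestamp`;

// Builds the parameter list for INSERT_QA_RESULT.
function qaResultParams(id: string, projectId: string, doc: QAResultDoc): unknown[] {
  const p = doc.project;
  // Sent as JSON text; PostgreSQL converts it to JSONB because the target column is JSONB.
  return [id, projectId, p.version, p.commit, p.branch, p.timestamp, JSON.stringify(doc.quality)];
}
```

With node-postgres this would be `pool.query(INSERT_QA_RESULT, qaResultParams(randomUUID(), projectId, doc))`, where `randomUUID` comes from Node's `crypto` module. New metric types then only change the JSON, not the table.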

🚀 What's Next?

v1.1 (Planned)

- 🤖 AI-Powered Analysis with Claude API

- 📊 Trend Detection (coverage dropping over time)

- 🔔 Slack/Email Notifications

- 🔌 GitHub App (comments on PRs)

v1.2 (Future)

- 📈 Advanced Analytics (benchmarking, predictions)

- 🔐 SSO & RBAC for enterprise

- 🌍 Multi-language support

- 🎨 Custom dashboards

💡 Lessons Learned

1. Open Standards Win

Making qa-result.json an open standard was key. Now anyone can build parsers or integrations.

2. Developer Experience Matters

The CLI must be dead simple:

```bash
qualityhub parse jest ./coverage # Just works
```

No config files, no setup, just works.

3. Self-Hosting is a Feature

Many companies can't send their metrics to external SaaS. Docker Compose makes self-hosting trivial.

🤝 Contributing

QualityHub is 100% open-source (MIT License).

Want to contribute?

- 🧪 Add parsers (pytest, XCTest, Rust...)

- 🎨 Improve the dashboard

- 🐛 Fix bugs

- 📚 Write docs

Check out: Contributing Guide

🔗 Links

- GitHub: ybentlili/qualityhub

- CLI: qualityhub-cli

- npm: qualityhub-cli

🎯 Try It Now

```bash
# Self-host in 5 minutes
git clone https://github.com/ybentlili/qualityhub.git
cd qualityhub
docker-compose up -d

# Or just the CLI
npm install -g qualityhub-cli
qualityhub parse jest ./coverage
```

💬 What do you think?

Would you use this? What features would you like to see?

Drop a ⭐ on GitHub if you find this useful!

Built with ❤️ in TypeScript

Top comments (5)

Here's the new version 1.1.0: dev.to/younes_bentlili_9480340f/i-...

This hits a very real pain point. That “can we ship or not?” question exists in almost every team, and it’s wild how often the answer is basically vibes + five dashboards + whoever speaks loudest in the release meeting.

I really like the idea of treating quality as decision intelligence, not just metrics. The qa-result.json move is especially smart — once you normalize the inputs, you can evolve the analysis without forcing teams to change how they test. That’s the kind of boring-but-powerful abstraction that actually sticks.

The risk score being explicit (and explainable) is another big win. Even if people disagree with the weighting, at least the logic is visible and debatable, instead of hidden in tribal knowledge or a Sonar gate nobody fully trusts.

Also +1 on self-hosting. A lot of orgs simply can’t ship test data to SaaS, no matter how nice the UI is. Docker Compose as a first-class path makes this usable in the real world, not just demos.

One thought for the future: I could see this becoming really strong as a PR companion rather than a replacement for humans. Something that says “this is technically safe, but here’s why and where the risk actually is,” so teams can make informed tradeoffs instead of blindly following a green light.

Overall, this feels pragmatic in a good way. Not “AI for the sake of AI,” but AI as a way to reduce cognitive load and make decisions less subjective. Solid work — definitely the kind of tool I’d want in a CI pipeline rather than another chart to ignore.

Really appreciate this thoughtful feedback! You nailed exactly why I built this.

This was literally my experience. We had GitLab, SonarQube, JaCoCo reports, and manual testing logs spread across 4 different tools. Every release meeting was "does anyone see any red flags?" followed by awkward silence and then "...ship it?"

Thanks! The goal is that even if teams switch from Jest to Vitest, or add pytest coverage later, the format doesn't change. It's also easier to build integrations when you have a stable contract.

100%. Right now it's rule-based (which is simple but limited), but the plan is to add AI-powered analysis that can explain why something is risky in natural language. Like "coverage dropped 5% on the auth module, which had 3 incidents in the last 6 months" instead of just "score: 72".

This is exactly where I want to take it. The vision is a GitHub App that comments on PRs with context like:

"UserAuthTest.testTimeout has failed in 3 of the last 10 runs"

So humans make the final call, but with way better information.

Really glad this resonates. If you have specific use cases or pain points from your team, I'm all ears — trying to make sure v1.1 solves real problems, not imaginary ones.

Also if you want to try it out and have feedback, happy to jump on a quick call to get your thoughts!

That release-meeting moment you described is painfully universal 😅

Once you’ve lived through a few “awkward silence → ship it?” decisions, you realize how fragile that process actually is.

I like that you’re keeping the current scoring rule-based for now. That’s underrated. It keeps trust high. People can argue with weights and thresholds, but at least they’re arguing about something concrete. Jumping straight to opaque AI scoring would probably kill adoption early.

The PR-companion direction feels exactly right. Not replacing judgment, just making risk legible at the moment decisions are made. The examples you gave are perfect because they’re actionable without being prescriptive — “here’s what changed, here’s why it might matter.”

One thing I’d be curious about as this evolves: how teams tune risk tolerance over time. Early-stage teams ship riskier by necessity, while later-stage teams optimize for stability. If QualityHub can reflect that reality instead of enforcing a one-size-fits-all definition of “safe,” it becomes a lot more than a gate.

Either way, this is solving a real problem, not inventing one. If v1.1 keeps leaning into explainability and context instead of magic scores, I think you’re on a strong path.

Happy to keep watching this grow — and yeah, I may take you up on that call once we get a chance to try it in anger 🙂

📦 100+ npm downloads on the first day: npmjs.com/package/qualityhub-cli