Cline is an autonomous coding agent plugin for VS Code. It's currently the best open-source equivalent to Cursor.

TL;DR: Cline is a beast with Claude—but getting results from local models is more challenging.

I put Cline to the test on a real-world project: dockerizing my Retrieval-Augmented Generation (RAG) system—a FastAPI app that runs on Google Cloud.

Here's the video version of this blog post:

🎥 Watch the Video

🪷 Get the Source Code

https://github.com/zazencodes/zazenbot-5000

🐋 The Demo: Dockerizing a Python API

The goal today is to take a Python function and Dockerize it:

- Add an API layer using FastAPI

- Build a Dockerfile and Docker Compose

- Update my

README.mdwith usage instructions

Long story short: Cline did this wonderfully.

But all great victories are earned through great struggle.

In this post I'll first talk through my failed attempts at using Cline with Ollama local models like Gemma 2 and Qwen 2.5-coder, and then walk you through the grand success that I had with Claude 3.7.

🧪 Installing Cline & First Tests with Local Models via Ollama

Setting up Cline couldn't be easier.

I opened my project (ZazenBot 5000) in VS Code, popped open the Extensions Marketplace, and installed Cline.

Once installed, I selected a model to use with Cline. For the first attempt, I picked Ollama, running Gemma 2—a 9B parameter model.

I used the default connection information for Ollama. I just had to make sure that it was running on my computer (this is done outside of VS Code).

Then I gave Cline the prompt:

Use a fastapi in order to make

@/zazenbot5k/query_rag_with_metadata

available with HTTP. Using a POST request sending the question in the data

Dockerize this application. Use docker compose and create a Dockerfile as well.

Update the README with instructions to run it.

Using Cline's prompt context system, I attached the key file: query_rag_with_metadata.py (as seen above with the @ notation).

This file contains the

process_question()function—the core of the app that takes a user question, enriches it with metadata and a timestamp, and returns a string. This is the function that I want to make available with an API and then Dockerize.

Cline took in all that info and got to work.

The result?

At 100% GPU usage, system memory maxed out, and Cline was reduced to a crawl.

Gemma 2 --- at 9B params --- struggled to run on my system. My GPU monitoring CLI tool nvtop showed my system under duress as Cline worked.

And in the end, the task failed to complete. It seems that Cline and Gemma 2 just don't get along well.

On the bright side, I had time to examine the prompts that Cline was writing:

I loved the use of HTML tags to structure prompt sections, and the particularly amazing thing I noticed was how Cline uses HTML attributes to specify metadata about the section.

In the screenshot above, you can see it specify the file path path="zazenbot5k/query_rag_with_metada?2.py" in this way. How cool it that?

🔁 Swapping to Qwen 2.5-Coder Models

Test #2: Qwen 0.5B

I decided to switch things up and use a local model that was intended for coding.

So I installed and spun up a very tiny model: Qwen 2.5 Coder --- 0.5B.

I queued up the same prompt and awwwway we went.

My laptop had no problem running this one as it started outputting a string of seemingly intelligent code and instructions.

However it was in fact doing noting whatsoever. Cline wasn't making any changes to my code, and the outputs --- on closer investigation --- were largely incomprehensible.

This was likely due to the model failing to generate outputs in the format the Cline was expecting.

Test #3: Qwen 14B

The final Ollama model that I tried was the 14B variant of Qwen 2.5 Coder.

As expected, my GPU maxed out again.

The model slowly started generating output, but Cline never successfully created any of the files I asked for.

At this point, the writing was on the wall:

Local models + "limited" hardware --- M4 chip 24gb RAM --- are not powerful enough for the autonomous, multi-file, full-stack generation Cline is built to do.

⚡️ Switching to Claude 3.7 — The Game Changer

Once I swapped to Claude 3.7 Sonnet via the Anthropic API, it was like unlocking Cline's final form.

I kicked off the exact same prompt as before—no changes whatsoever—and Cline immediately sprang into action.

The difference was obvious. And awesome:

- Context window tracking came online

- Output tokens logging started streaming in real time

- Input/output costs started updating

And most importantly:

- Files were being created and updated autonomously --- and it was working at blazing speed

And yes, Claude is expensive— as mentioned, Cline showed me the cost in real-time.

- $0.10 in API charges before I could blink.

- $0.30 in API charges by the time everything was done.

But that cost came with excellent results (on the first try):

- Generated a proper

Dockerfile - Created a

docker-compose.yml - Wrote a FastAPI wrapper around the

process_question()function - Added a

README.mdsection with example usage - Built a test script to verify the endpoint

- Handled environment variables and credential mounting

- And did all of it autonomously

🎯 Final Outputs

Here's a look at the generated Dockerfile:

FROM python:3.10-slim

WORKDIR /app

# Copy requirements file

COPY requirements.txt .

# Install system dependencies

RUN apt-get update && apt-get install -y curl && rm -rf /var/lib/apt/lists/*

# Install dependencies

RUN pip install --no-cache-dir -r requirements.txt

RUN pip install --no-cache-dir fastapi uvicorn

# Copy application code

COPY zazenbot5k/ /app/zazenbot5k/

# Set Python path to include the application directory

ENV PYTHONPATH=/app

WORKDIR /app/zazenbot5k

EXPOSE 8000

CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8000"]

And the corresponding docker-compose.yml:

version: '3.8'

services:

zazenbot-api:

build:

context: .

dockerfile: Dockerfile

ports:

- "8000:8000"

environment:

- GCP_PROJECT_ID=${GCP_PROJECT_ID}

- GCP_LOCATION=${GCP_LOCATION}

- GOOGLE_APPLICATION_CREDENTIALS=/app/credentials/google-credentials.json

volumes:

- ./credentials:/app/credentials

restart: unless-stopped

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8000/health"]

interval: 30s

timeout: 10s

retries: 3

start_period: 10s

And here's the FastAPI application app.py:

import logging

from fastapi import FastAPI, HTTPException, Request

from fastapi.responses import PlainTextResponse

from pydantic import BaseModel

from query_rag_with_metadata import process_question

# Configure logging

logging.basicConfig(

level=logging.INFO,

format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",

datefmt="%Y-%m-%d %H:%M:%S",

)

logger = logging.getLogger(__name__)

app = FastAPI(

title="ZazenBot 5000 API",

description="API for querying RAG with metadata",

version="1.0.0",

)

class QuestionRequest(BaseModel):

question: str

@app.post("/query", response_class=PlainTextResponse)

async def query(request: QuestionRequest):

"""

Process a question through the RAG system and enhance with metadata and timestamp

"""

try:

logger.info(f"Received question: {request.question}")

response = process_question(request.question)

return response

except Exception as e:

logger.error(f"Error processing question: {e}")

raise HTTPException(status_code=500, detail=str(e))

@app.get("/health")

async def health():

"""

Health check endpoint

"""

return {"status": "healthy"}

if __name__ == "__main__":

import uvicorn

uvicorn.run(app, host="0.0.0.0", port=8000)

😤 Running Cline's Dockerized app

Running this API with Docker was a pretty special moment. It worked flawlessly.

I ran:

docker-compose up -d

And boom—everything built and ran clean. The /health endpoint returned {"status": "healthy"}, and my POST /query endpoint responded with it's RAG glory.

Cline setup the proper environment variables for my Docker app and even mounted my Google application credentials with a volume, exactly like I would have done.

ical.

There was one little problem: I was initially missing credentials. But since Cline handled things nicely by mounting them in a volume, this fix was trivial. It took me about 10 seconds.

🧠 Final Verdict on Cline — Powerful, But Practical?

After watching Cline go from zero to working API using Claude 3.7, I was thoroughly impressed. It handled everything from environment setup to testing, with zero manual coding on my part.

But here's the truth: Cline is pretty damn expensive.

Claude comes at a cost

I paid roughly $0.30 for this one task. That's whacked. Sure, it was worthwhile in this case. But this doesn't scale out to real-world workflows.Local Models Fall Short

Despite Cline supporting any model backend (Ollama, etc), none of the local models came close. Either they crashed, lagged, or suffered from incomprehensible output. I believe that further testing would yield some working results, but the speed would likely continue to be a limiting factor.

🆚 Cursor vs Cline: What's Better?

Cline absolutely blew me away when paired with Claude --- but I'm not convinced that it's better than Cursor.

Cursor offers a better UX, and is likely to be much cheaper overall, making it more practical for daily development.

👋 Wrapping Up

If you're a builder, experimenter, or just curious about agent-powered development, give Cline a spin.

I love it, and it's a powerful tool to have in my back pocket.

📌 My newsletter is for AI Engineers. I only send one email a week when I upload a new video.

As a bonus: I'll send you my Zen Guide for Developers (PDF) and access to my 2nd Brain Notes (Notion URL) when you sign up.

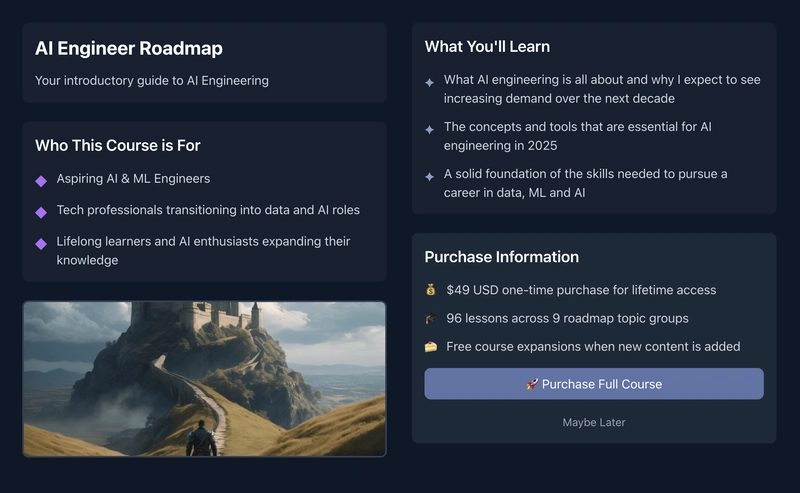

🚀 Level-up in 2025

If you're serious about AI-powered development, then you'll enjoy my new AI Engineering course which is live on ZazenCodes.com.

It covers:

- AI engineering fundamentals

- LLM deployment strategies

- Machine learning essentials

Thanks for reading and happy coding!

Top comments (0)