Welcome to the SQL showdown series!

What is this and how does it work?

I'm committing to publishing a SQL challenge every day for 30 days. In each post I'll describe my solution to the last day's challenge. I'll follow that up with a full description of the challenge for the next day.

Write your own solution in the comments! Let's see who can come up with the most creative solutions.

Challenge #15: Fuzzy Matching

Today I'd like to tackle the subject of fuzzy matching in SQL. Not every problem is as black and white as a simple equality check. You might have used the SQL LIKE operator before, but there are times when even that won't suffice.

Imagine you have two totally separate data-sets that represent the same entities, and you want to join them together. Because they come from different sources, they don't share any kind of reliable key. You may need to join them using names, addresses, or other such "noisy" information.

For this challenge, I've populated two tables with data about movies. Both tables contain movie titles, director names, and names from the cast.

Here's the challenge itself:

What fuzzy matching methods can be used to join the movie datasets together on keys such as the director name?

I won't show a desired output for this challenge because it's more of an open-ended invitation to experiment. Tomorrow I'll show a number of ways you can fuzzy match in PostgreSQL.

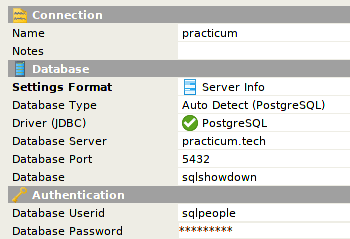

Sandbox Connection Details

I have a PostgreSQL database ready for you to play with.

The password is the same as the username! To query the movie table:

SELECT * FROM day15.movie;

Solution to Challenge #14: UFO Sightings

If you'll recall yesterday's challenge, we were trying to decipher any patterns that might exist when analyzing UFO sightings over time.

This was the challenge from yesterday:

Given UFO sightings from 1906 to present day, can you produce a monthly count of sightings by shape?

And as a reminder, this was the format of the output we were after:

It seems like July and August are a busy time for UFO sightings!

Here's the SQL that produces the above:

SELECT

shape,

sum(CASE WHEN sighting_m = 1 THEN 1 END) AS "Jan",

sum(CASE WHEN sighting_m = 2 THEN 1 END) AS "Feb",

sum(CASE WHEN sighting_m = 3 THEN 1 END) AS "Mar",

sum(CASE WHEN sighting_m = 4 THEN 1 END) AS "Apr",

sum(CASE WHEN sighting_m = 5 THEN 1 END) AS "May",

sum(CASE WHEN sighting_m = 6 THEN 1 END) AS "Jun",

sum(CASE WHEN sighting_m = 7 THEN 1 END) AS "Jul",

sum(CASE WHEN sighting_m = 8 THEN 1 END) AS "Aug",

sum(CASE WHEN sighting_m = 9 THEN 1 END) AS "Sep",

sum(CASE WHEN sighting_m = 10 THEN 1 END) AS "Oct",

sum(CASE WHEN sighting_m = 11 THEN 1 END) AS "Nov",

sum(CASE WHEN sighting_m = 12 THEN 1 END) AS "Dec"

FROM (

SELECT

date_sighted,

shape,

duration,

EXTRACT(MONTH FROM date_sighted) AS sighting_m

FROM day14.ufo WHERE shape IN (

SELECT shape FROM day14.ufo GROUP BY shape HAVING count(*) >= 3000

)

) AS sightings GROUP BY shape;

The main trick here, similar to the solution found in day 5, is to turn rows into columns using CASE WHEN statements, so that I can show the months across the top.

Some databases, such as SQL Server, provide a built-in PIVOT function that makes this a bit easier (or at least less verbose). In PostgreSQL, we'll have to settle for the CASE WHEN trick.

Each month has a sum with a conditional inside of it that produces a 1 only if the month of the input row matches. Whenever the row doesn't match, the output of the conditional is NULL, which is evaluated as zero in the sum.

Selecting from a subquery instead of directly from the UFO table is optional, and I do that only so I can avoid repeating the month extraction over and over in the CASE WHEN statements. Likewise, the WHERE clause is optional too, and just serves to eliminate a lot of noisy one-off shapes from the data.

Good luck!

Have fun, and I can't wait to see what you come up with! I'll be back tomorrow with a solution to this problem and a new challenge.

Top comments (0)