In machine learning, as in real life, some patterns pay off and others don't. As individuals, we tend to lean towards the former and stay away from the latter. Often, people get comfortable enough with one pattern that works well that they stop exploring other variations. The same issue shows up in many machine learning systems: they have to balance mining regions that are known to perform well (exploitation) against the diversity that comes from looking for better alternatives (exploration).

Problem definition

The exploration-exploitation dilemma is a general problem that can arise whenever there is a feedback loop between data gathering and decision-making, that is, whenever a model goes from being a spectator to being an actor in the collection of its own data.

To better understand this idea, let’s look at two example models:

- A model that predicts whether an image contains a cat or a dog. In this case, all the required data is collected first, and then the model is trained. After training, the model only makes predictions and collects no further data, so no exploration is needed.

- A model that predicts click-through rate (CTR) for some ads. In this case, the model is trained on some initial data and is then continuously updated whenever a user interacts with an ad. Because the model decides which ads are shown, it becomes an actor in the data collection process, so the trade-off between exploration and exploitation needs to be carefully considered.

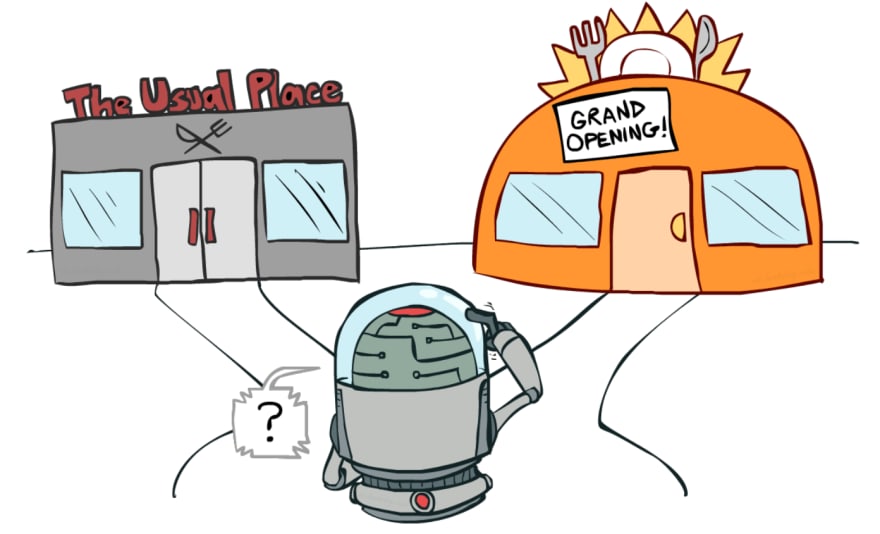

Before going forward it’s worth mentioning some real-life examples of the exploration-exploitation dilemma:

- Go to your favorite restaurant, or try a new one?

- Keep your current job or hunt around?

- Take the normal route home or try another?

Handling the dilemma

Greedy approach

As the name suggests, this approach simply consists of taking the best action according to the actor's current knowledge. This strategy is full exploitation. The drawback is that we never explore, so we may keep making sub-optimal decisions because we never get the chance to discover better options elsewhere in the problem space.
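To make the drawback concrete, here is a minimal sketch of a purely greedy actor choosing between two ads. The setup is an assumption made for illustration: the click rates (0.3 and 0.7), the zero initial estimates, and the helper names `greedy_action` and `run_greedy` are all hypothetical.

```python
import random

def greedy_action(estimates):
    # Pure exploitation: pick the action with the highest current estimate.
    # Ties resolve to the lowest index.
    return max(range(len(estimates)), key=lambda a: estimates[a])

def run_greedy(true_rates, steps, seed=0):
    # true_rates are hypothetical click probabilities, unknown to the actor.
    rng = random.Random(seed)
    estimates = [0.0] * len(true_rates)  # current knowledge about each action
    counts = [0] * len(true_rates)       # how often each action was taken
    for _ in range(steps):
        action = greedy_action(estimates)
        reward = 1.0 if rng.random() < true_rates[action] else 0.0
        counts[action] += 1
        # Incremental running mean of the observed rewards for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_greedy([0.3, 0.7], steps=1000)
print(counts)  # prints [1000, 0]
```

Under these assumptions the second ad is never shown, even though it is the better one: its estimate stays at zero, so the greedy rule has no reason to try it.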

Ɛ-greedy approach

The Ɛ-greedy algorithm is a simple, yet very effective variation of the greedy approach. With probability (1-Ɛ) it chooses the best action according to the current knowledge (exploitation), and with probability Ɛ it chooses a completely random action (exploration). The Ɛ parameter sets the balance between the two: a high value of Ɛ yields a more explorative approach, while a low value emphasizes exploitation. This approach is widely used in Reinforcement Learning, for example as the action-selection rule in Q-learning.
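The selection rule described above can be sketched in a few lines. This is an illustrative example, not a reference implementation: the two hypothetical click rates (0.3 and 0.7), the value Ɛ = 0.1, and the function names are assumptions.

```python
import random

def epsilon_greedy_action(estimates, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        # Exploration: a uniformly random action.
        return rng.randrange(len(estimates))
    # Exploitation: best action under current estimates (ties -> lowest index).
    return max(range(len(estimates)), key=lambda a: estimates[a])

def run_epsilon_greedy(true_rates, steps, epsilon, seed=0):
    # true_rates are hypothetical click probabilities, unknown to the actor.
    rng = random.Random(seed)
    estimates = [0.0] * len(true_rates)
    counts = [0] * len(true_rates)
    for _ in range(steps):
        action = epsilon_greedy_action(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < true_rates[action] else 0.0
        counts[action] += 1
        # Incremental running mean of the observed rewards for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_epsilon_greedy([0.3, 0.7], steps=5000, epsilon=0.1)
print(counts, [round(e, 2) for e in estimates])
```

Because a random action is taken about 10% of the time, every action keeps receiving some trials, so its estimate can be corrected if the early observations were misleading.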

Ɛ-decaying greedy

Introducing exploration is an improvement, but ideally we would stop exploring once we have found the best strategy. In practice, it is very difficult to tell when that has happened. To approximate this behavior, we can start with some value of the Ɛ parameter and slowly decrease it as we explore the problem space. The right decay rate depends heavily on the problem we are trying to solve, so it has to be tuned to find the value that yields the best results.
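One common way to decrease Ɛ is an exponential schedule with a lower bound, sketched below. The schedule shape and the values of `start`, `end`, and `decay` are assumptions chosen for illustration; the text above does not prescribe a particular schedule.

```python
import random

def decayed_epsilon(step, start=1.0, end=0.01, decay=0.995):
    # Multiply epsilon by `decay` at every step, but never go below `end`,
    # so a small amount of exploration always remains.
    return max(end, start * decay ** step)

def choose_action(estimates, step, rng):
    # Epsilon-greedy selection where epsilon shrinks as `step` grows.
    epsilon = decayed_epsilon(step)
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))  # explore
    return max(range(len(estimates)), key=lambda a: estimates[a])  # exploit

# Show how the exploration rate shrinks over time.
for step in (0, 100, 500, 2000):
    print(step, round(decayed_epsilon(step), 3))
```

A `decay` closer to 1 keeps exploration going for longer, while a smaller value commits to the current best action sooner. Linear or step-wise schedules are equally reasonable alternatives.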

Those were just a few insights into the Exploration-Exploitation dilemma. At aiflow.ltd, we try to automate as many processes as possible, to make sure we find the best balance between Exploration and Exploitation. If you’re curious to find out more, subscribe to our newsletter.
