We will explore how to provision, back up, restore & monitor MySQL instances on Kubernetes the easy way, using the MySQL Operator.

“Running databases in Kubernetes is one shiny disco ball that attracts a lot of limelight. Databases are stateful by their very nature, whereas Kubernetes is more inclined towards running stateless & ephemeral applications, which makes them two worlds apart. To bring them together ❤ we have the MySQL Operator. A great amount of work has been done in the open-source community by Oracle, Presslabs & Percona, who provide rich MySQL Operators that make running MySQL on Kubernetes a hassle-free experience.”

What is the MySQL Operator?

Operators are applications written on top of Kubernetes that automate challenging, domain-specific operations and make them easy. We are choosing the *MySQL Operator* from *Presslabs*, which makes running **MySQL as a service** with built-in High-Availability, Scalability & Monitoring quite simple. A single MySQL cluster definition can include all the information needed for backups and storage, along with the mysqld configuration.

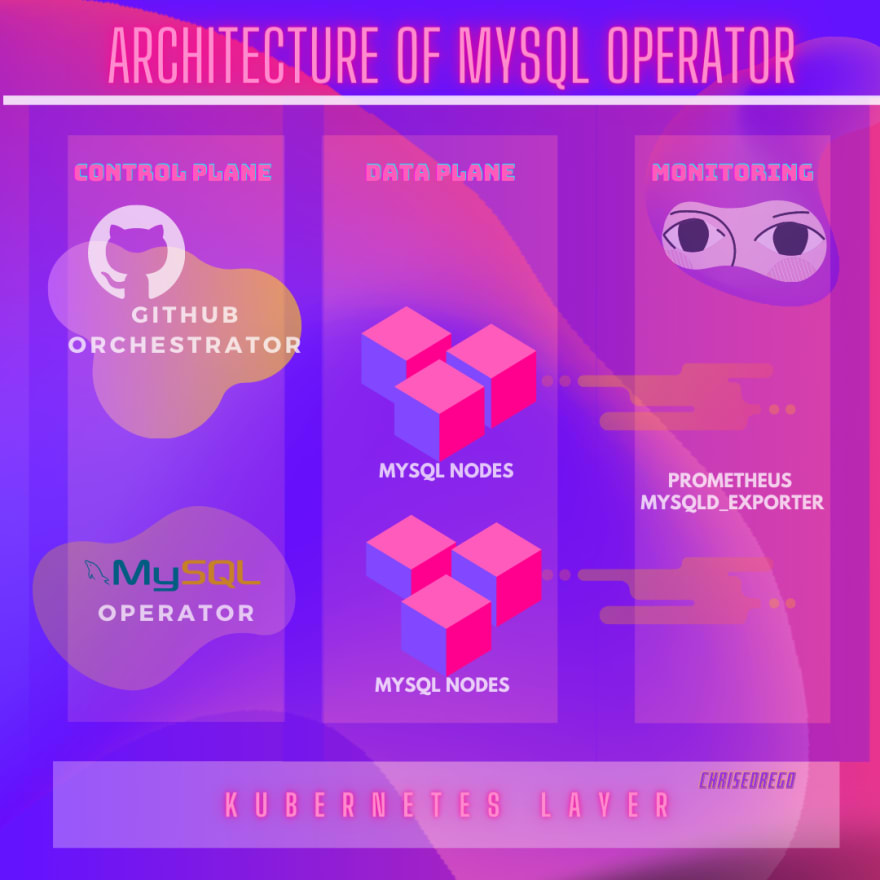

Architecture of the MySQL Operator

The whole infrastructure runs on top of Kubernetes. It includes **GitHub Orchestrator**, an open-source tool that provides a pretty intuitive UI, and the MySQL Operator, which does the actual heavy lifting & provisions the various MySQL nodes & services. What’s even better is that each MySQL instance has a mysqld-exporter service running, which can be used for monitoring.

Reasons to use the Presslabs MySQL Operator

Automatic on-demand & scheduled backups.

Initializing new clusters from existing backups.

Built-in High-Availability, Scalability, Self-Healing & Monitoring.

Save money & avoid vendor lock-in.

Well suited for microservice architectures.

Prerequisites

Basic understanding of MySQL, Kubernetes & Kubernetes Operators.

Installing the MySQL Operator using Helm

Installing mysql-operator with Helm is simple & straightforward. Run the following commands, depending on the version of Helm installed on your machine.
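Here is a rough sketch of those commands. It assumes the Presslabs chart repository at `https://presslabs.github.io/charts`; the project has since been renamed Bitpoke, so check where the chart lives today. It also assumes a release and namespace both named `mysql-operator`, which is my choice and not a requirement. With `DRY_RUN=1`, the function prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: install the Presslabs mysql-operator chart with Helm 2 or Helm 3.
# Assumptions: the chart repo URL, release name and namespace below.
REPO_URL="https://presslabs.github.io/charts"
RELEASE="mysql-operator"
NAMESPACE="mysql-operator"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# $1 is the major version of your helm client: 2 or 3.
install_mysql_operator() {
  local helm_major="$1"
  case "$helm_major" in
    2|3) ;;
    *) echo "unsupported helm major version: $helm_major" >&2; return 1 ;;
  esac
  run helm repo add presslabs "$REPO_URL"
  run helm repo update
  if [ "$helm_major" = "2" ]; then
    # Helm 2 takes the release name via --name and needs Tiller in the cluster.
    run helm install presslabs/mysql-operator --name "$RELEASE" --namespace "$NAMESPACE"
  else
    # Helm 3 takes the release name as the first argument; --create-namespace
    # (Helm 3.2+) creates the namespace if it does not exist yet.
    run helm install "$RELEASE" presslabs/mysql-operator --namespace "$NAMESPACE" --create-namespace
  fi
}
```

For example, `install_mysql_operator 3` installs with Helm 3, while `DRY_RUN=1 install_mysql_operator 2` only prints the Helm 2 variant.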

Provisioning a MySQL Cluster

Once we are done installing the MySQL Operator, the next step is to provision a MySQL cluster instance with credentials, storage & MySQL configuration.

In this case, we have configured a root password, which needs to be base64-encoded; we can do that with a tool such as base64encode. Along with it, we can configure the mysqld settings and a persistent volume claim for storage.
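Below is a minimal sketch of such a definition. It assumes a cluster named `mycluster` in the `default` namespace, matching the hostname used when connecting, and the root password `changme` from the connection example. The replica count, volume size and `max_connections` value are illustrative only. The script writes the manifests to a file, which can then be applied with `kubectl apply -f mycluster.yaml`.

```shell
# Sketch: Secret + MysqlCluster for the Presslabs operator.
# Assumptions (illustrative): names "mycluster"/"mycluster-secret", 2 replicas,
# a 1Gi ReadWriteOnce volume and the max_connections value.

# Secret `data` values must be base64-encoded; printf avoids a trailing newline
# and tr strips the line wrapping some base64 implementations add.
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

ROOT_PASSWORD_B64="$(b64 'changme')"

cat > mycluster.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: mycluster-secret
type: Opaque
data:
  # The operator reads the MySQL root password from this key.
  ROOT_PASSWORD: ${ROOT_PASSWORD_B64}
---
apiVersion: mysql.presslabs.org/v1alpha1
kind: MysqlCluster
metadata:
  name: mycluster
spec:
  replicas: 2
  secretName: mycluster-secret
  # Extra mysqld settings added to the generated MySQL configuration.
  mysqlConf:
    max_connections: "200"
  volumeSpec:
    persistentVolumeClaim:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
EOF
```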

List & describe the available MySQL instances

```shell
kubectl get mysql
kubectl describe mysql/<NAME_OF_MYSQL_CLUSTER>
```

Connecting to a MySQL Instance

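```shell
# Start a temporary pod with the MySQL client and open a shell in it
kubectl -n mysql-operator run mysql-client --image=mysql:5.7 -it --rm --restart=Never -- /bin/bash

# Inside that pod, connect to the cluster's MySQL service
mysql -uroot -p'changme' -h 'mycluster-mysql.default.svc.cluster.local' -P3306
```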

Exposing the MySQL Cluster Publicly using Ingress

Exposing a TCP service such as MySQL needs some minor tweaks in the Ingress configuration, such as mapping the port number to the service being exposed. I have already addressed this in a separate blog post:

Setting up a Standalone MYSQL Instance on Kubernetes & exposing it using Nginx Ingress Controller.
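With the NGINX Ingress Controller, the usual approach is a `tcp-services` ConfigMap that maps an external port to `<namespace>/<service>:<port>`. The sketch below makes three assumptions:

- the controller runs in the `ingress-nginx` namespace;
- it was started with `--tcp-services-configmap=ingress-nginx/tcp-services`;
- the operator created the `mycluster-mysql` service in `default`.

```shell
# Sketch: expose the MySQL service on TCP port 3306 through ingress-nginx.
# Assumptions: controller namespace, ConfigMap name and target service below.
cat > tcp-services.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  # "<external port>": "<namespace>/<service>:<service port>"
  "3306": "default/mycluster-mysql:3306"
EOF
```

The controller's own Service also has to expose port 3306 for this mapping to be reachable from outside the cluster.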

Backups

Backups can be automated pretty easily, either on demand or on a schedule. They are stored in object storage such as AWS S3, Google Cloud Storage buckets, Google Drive or Azure Blob Storage, and are uploaded using rclone.

Creating Backups

For backups to be uploaded to object storage, we need to provide the storage credentials in a Kubernetes Secret, whose values need to be base64-encoded.
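Below is a sketch of a backup secret plus an on-demand `MysqlBackup`, assuming S3 as the backend. The bucket path `s3://my-backup-bucket/mycluster/` and the resource names are hypothetical. The `AWS_*` key names follow the operator's S3 example as I understand it, so verify them for your operator version. Credentials are taken from the environment when set; otherwise placeholders are written.

```shell
# Sketch: S3 credentials secret + on-demand backup of "mycluster".
# Assumptions: bucket path, resource names and the AWS_* key names.
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

cat > mycluster-backup.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: mycluster-backup-secret
type: Opaque
data:
  # Placeholders are used when the environment variables are not set.
  AWS_ACCESS_KEY_ID: $(b64 "${AWS_ACCESS_KEY_ID:-REPLACE_ME_KEY_ID}")
  AWS_SECRET_ACCESS_KEY: $(b64 "${AWS_SECRET_ACCESS_KEY:-REPLACE_ME_SECRET}")
  AWS_REGION: $(b64 "${AWS_REGION:-us-east-1}")
---
apiVersion: mysql.presslabs.org/v1alpha1
kind: MysqlBackup
metadata:
  name: mycluster-backup-1
spec:
  clusterName: mycluster
  backupURL: s3://my-backup-bucket/mycluster/
  backupSecretName: mycluster-backup-secret
EOF
```

Applying the file with `kubectl apply -f mycluster-backup.yaml` should start a backup Job for `mycluster`.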

We can list backups or view their status. Each MysqlBackup actually runs as a Job inside the Kubernetes cluster.

```shell
kubectl get MysqlBackup
kubectl describe MysqlBackup/<BACKUP_NAME>
```

Creating Scheduled Backups

The operator provides a way to back up clusters periodically, at regular intervals, by specifying a cron expression.
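Below is a sketch of a schedule written as a merge patch for the existing `mycluster` cluster. It assumes a backup secret named `mycluster-backup-secret` and a hypothetical bucket path. As far as I know, the operator expects a six-field cron expression with seconds first, so `0 0 0 * * *` should mean daily at midnight; check the documentation for your operator version.

```shell
# Sketch: add a daily backup schedule to the "mycluster" MysqlCluster.
# Assumptions: six-field cron (seconds first), bucket path and secret name.
cat > backup-schedule-patch.yaml <<'EOF'
spec:
  backupSchedule: "0 0 0 * * *"
  backupURL: s3://my-backup-bucket/mycluster/
  backupSecretName: mycluster-backup-secret
EOF
```

The patch can then be applied with `kubectl patch mysql mycluster --type merge -p "$(cat backup-schedule-patch.yaml)"`.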

Restoring Backups

Initializing a cluster from existing backups

In a microservice environment, there is often a need to spawn databases restored from a backup or snapshot taken at a given point in time. The MySQL Operator makes this pretty easy: set initBucketURL to point to the backup archive file, and initBucketSecretName to the secret used to access that file.
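Below is a sketch of a new cluster seeded from a backup archive. The archive URL and all names are hypothetical. Point `initBucketURL` at a real file written by an earlier backup, and `initBucketSecretName` at a secret holding credentials for that bucket. `secretName` must reference a secret that provides this cluster's own `ROOT_PASSWORD`.

```shell
# Sketch: a new MysqlCluster initialized from an existing backup archive.
# Assumptions: the archive URL and the secret names below are hypothetical.
cat > mycluster-restored.yaml <<'EOF'
apiVersion: mysql.presslabs.org/v1alpha1
kind: MysqlCluster
metadata:
  name: mycluster-restored
spec:
  replicas: 1
  secretName: mycluster-restored-secret
  # Archive to restore from, and the secret used to read it.
  initBucketURL: s3://my-backup-bucket/mycluster/mycluster-backup.xbackup.gz
  initBucketSecretName: mycluster-backup-secret
EOF
```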

Monitoring & Visualization

The MySQL Operator comes with built-in monitoring & visualization, with the help of Orchestrator and the mysqld-exporter service.

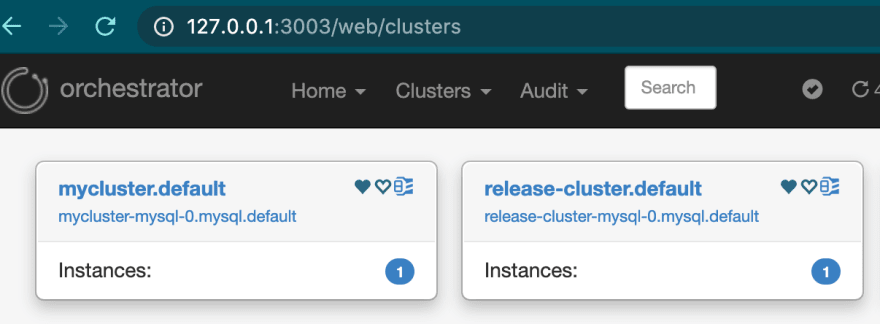

Orchestrator

Orchestrator, developed by GitHub, provides replication topology control, high availability and a bird’s-eye view of the whole MySQL cluster farm.

To access Orchestrator, we can port-forward its service and then browse to http://localhost:3003.

```shell
kubectl port-forward svc/mysql-operator -n mysql-operator 3003:80
```

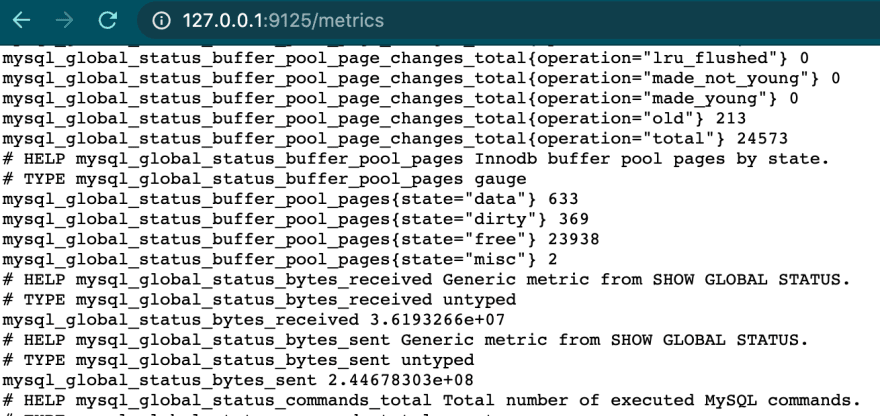

mysqld-exporter

Each provisioned MySQL cluster has a mysqld-exporter pod, which captures metrics about that individual cluster. Prometheus can scrape these metrics to provide valuable insight into the database.

We need to define a Service that exposes the mysqld-exporter pod, which listens on port 9125.
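Below is a minimal sketch of such a Service, named `mycluster-exporter-svc` to match the port-forward command. The selector label is a hypothetical placeholder, so replace it with the labels your cluster's MySQL pods actually carry (`kubectl get pods --show-labels`). The `prometheus.io/*` annotations are a common convention that only take effect if your Prometheus scrape configuration looks for them.

```shell
# Sketch: a Service in front of the exporter on port 9125.
# Assumption: the selector label below is hypothetical; use your pods' real labels.
cat > mycluster-exporter-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: mycluster-exporter-svc
  annotations:
    # Only honored if Prometheus is configured to use these annotations.
    prometheus.io/scrape: "true"
    prometheus.io/port: "9125"
spec:
  selector:
    mysql.presslabs.org/cluster: mycluster
  ports:
    - name: metrics
      port: 9125
      targetPort: 9125
EOF
```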

To access the mysqld-exporter, let’s port-forward the service:

```shell
kubectl port-forward svc/mycluster-exporter-svc 9125:9125
```
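With the port-forward running, the metrics can be read over plain HTTP. This small sketch assumes the exporter serves the standard Prometheus text format at `/metrics`. It uses two common mysqld_exporter metric names, `mysql_up` and `mysql_global_status_threads_connected`, as examples. The hypothetical `mysql_metrics` helper keeps only those lines from whatever is piped into it.

```shell
# Sketch: keep selected mysqld_exporter metrics from Prometheus text on stdin.
# Assumption: metric names chosen as examples; extend the pattern as needed.
mysql_metrics() {
  # Match the metric name followed by a space (no labels) or "{" (labels).
  grep -E '^(mysql_up|mysql_global_status_threads_connected)( |\{)'
}
```

Usage: `curl -s http://localhost:9125/metrics | mysql_metrics`. A `mysql_up` value of 1 indicates the exporter can reach the MySQL server.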

Final Notes

Although we have learned to provision, scale & monitor MySQL instances to run production databases on Kubernetes, the most important thing to look out for is the underlying storage for the Persistent Volumes. A couple of storage backends, such as EFS and NFS, support ReadWriteMany (RWX) but often don’t work well. We can certainly opt for EBS, Azure Disk or local disks, although we can still run into problems; for example, EBS doesn’t support ReadWriteMany. In that case, we can prefer options such as Ceph, GlusterFS or Rook, which are production-ready but complex to set up & maintain.

“If you found this article useful, feel free to show some ❤️: click on ❤️ many times or share it with your friends.”
