This article originally appeared on Akvo's blog

At Akvo, we believe that open source software provides substantial benefits for organisations in the international development sector. Nowadays, we expect high quality and a rich feature set from any software that we use.

Thanks to open source software, relatively small organisations and development teams are able to build sophisticated, feature-rich applications by gluing together pre-existing open source components in unique or specific ways.

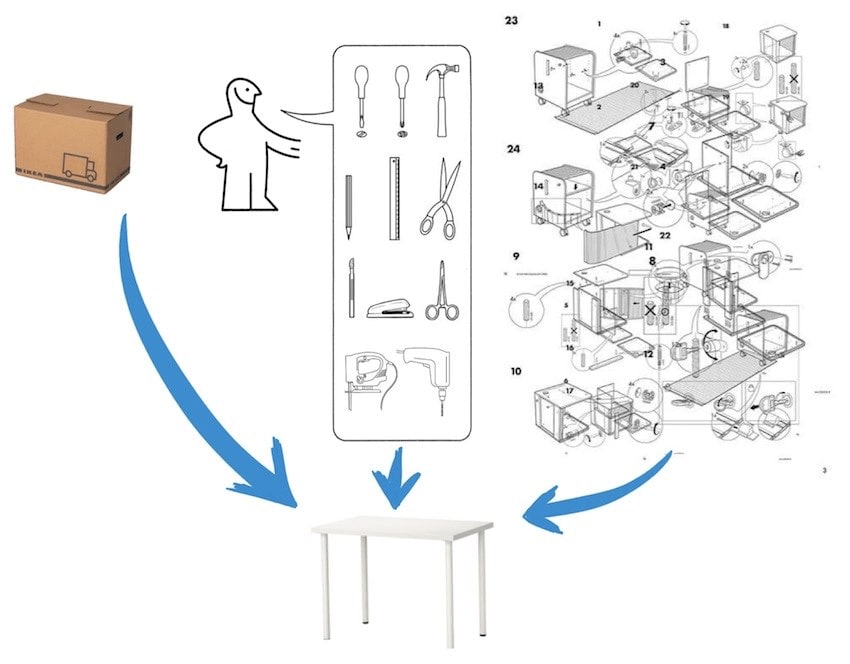

To be able to reuse all those pieces is awesome, as it is a lot less complex to assemble existing components than it is to create them from scratch.

But that assembly can become a beast from your worst IKEA nightmare:

If you had to follow these instructions once in a lifetime, it probably wouldn’t be too bad. But software developers have to follow those instructions regularly, as we need to recreate, rebuild and reassemble our applications whenever we need to add new components, update versions of those components, or build new features.

Due to the nature of software, the changes that developers need to make (or the pieces that we need to reassemble) are sometimes drastic. An analogy would be turning that basic IKEA desk into a motorised adjustable standing desk.

As with any other set of instructions, there is always something that doesn’t work. Maybe you missed a step, lost a component or didn’t have all the tools required. Maybe the instructions were wrong, misleading or ambiguous, or maybe you were reading them upside down.

The result of any of these issues is the same: a big waste of time.

The problem with instructions

For many years, the IT industry has tried to ease this pain by providing more accurate sets of instructions, but those instructions are still written, read and interpreted by humans, and we all know that human communication is messy and far from accurate.

At Akvo, we want to make it easy for anybody to contribute to our open source software. For that reason, we have decided to invest in making our open source applications as simple as possible to assemble, not by improving on the assembly instructions, but by completely eliminating them.

Making a fresh start

As human communication is imperfect, we had to look for another tool; one that would eliminate any ambiguity. And there is nothing like a computer to follow instructions in a very literal way.

Even a perfect set of instructions blindly followed by a machine is not enough. Computers have a tendency to accumulate entropy, so even two computers of the same brand, bought at the same shop at the same time, after some weeks of use, can behave differently. Maybe I configured my locale to Klingon while you installed Antivirus-X. That difference is enough to cause the assembly instructions to fail.

So apart from some machine-readable instructions, we need a way of making sure that we always have a fresh start. If every developer starts from the same point, the instructions will always arrive at exactly the same result. And here is where software virtualisation technology shines, as it has the capability to create virtual computers inside the developer’s computer. In a nutshell, virtualisation provides a very easy way of creating a new empty cosmos that we can use as the starting point for our set of instructions.

The Docker Platform

We have chosen the Docker Platform as the virtualisation technology that gives us both things we need: a repeatable, machine-readable set of instructions and a consistent starting point.

The two tools of the Docker Platform that we use are Docker and Docker Compose. Below is an example of a file you’d give to Docker to create a computer:

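```dockerfile
# Starting point: a minimal Alpine Linux image with a Java 8 runtime
FROM openjdk:8-jre-alpine

# Run the remaining instructions, and the final command, from /app
# so that the relative path in CMD resolves
WORKDIR /app

COPY target/akvo-flow-maps.jar /app/akvo-flow-maps.jar
COPY maybe-import-and-java-jar.sh /app/maybe-import-and-java-jar.sh
RUN chmod 777 /app/maybe-import-and-java-jar.sh

CMD ./maybe-import-and-java-jar.sh
```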

The most interesting bit here is the “FROM” line, which tells Docker what our desired starting point is. Thanks again to the open source community, there are thousands of starting points to choose from on Docker Hub, which makes it a lot easier to bootstrap our custom virtual computers. The remaining lines are the set of instructions to follow from that starting point.
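As a rough sketch of how such a file is put to use: assuming it is saved as `Dockerfile` in the current directory and the jar has already been built into `target/`, the standard `docker build` and `docker run` commands turn it into a running virtual computer. The image name `flow-maps-example` and the function name are made up for this example; they are not taken from the Akvo repositories.

```shell
# Hypothetical image name, chosen only for this example.
IMAGE="flow-maps-example"

# Build an image from the Dockerfile in the current directory,
# then start a throwaway container from it.
build_and_run() {
  # -t gives the resulting image a name we can refer to afterwards.
  docker build -t "$IMAGE" . || return 1
  # --rm deletes the container once it exits, so nothing accumulates between runs.
  docker run --rm "$IMAGE"
}
```

Calling `build_and_run` from the directory that holds the Dockerfile follows every instruction in the file, starting from the same base image each time.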

Where Docker allows us to create virtual computers, Docker Compose allows us to create a virtual cosmos, where a set of computers will live, isolated from any other cosmos. Here’s an example:

```yaml
services:
  postgres:
    build:
      dockerfile: Dockerfile-postgres
    expose:
      - 5432
  flow-maps:
    build:
      dockerfile: Dockerfile-dev
    ports:
      - "47480:47480"
      - "3000:3000"
  redis:
    image: redis:3.2.9
```

The first thing to notice is that the starting point for Docker Compose is always the void, the nothing, la nada. After that, we specify which computers should exist in that universe: in this case, a Postgres database, a Redis database and the Akvo Flow Maps application. Those computers are created either from Dockerfiles like the one we just explained or, as with Redis, from a ready-made image.
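A minimal sketch of bringing such a cosmos to life, assuming the file above is saved as `docker-compose.yml` in the current directory and that the Docker installation provides the `docker compose` subcommand (older installations ship a separate `docker-compose` binary instead). The function name is our own invention for this example.

```shell
# Create the cosmos described in docker-compose.yml and list its computers.
start_cosmos() {
  # --build (re)builds the images from their Dockerfiles first;
  # -d starts the containers in the background.
  docker compose up --build -d || return 1
  # Show the state of every service defined in the file.
  docker compose ps
}
```

Nothing outside the Compose file needs to be installed or configured by hand: the databases and the application all come from the same description.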

Results

So what happens when we apply this technology to Akvo Lumen, Akvo’s data visualisation and analysis tool? Contributing to Lumen goes from this… to this:

Two tools, Git and Docker, and two commands make it extremely easy to build and assemble a working Akvo Lumen development environment. Besides easing the open source contribution process by removing the onerous task of setting up a working environment, we find two additional benefits of using this technology:

- It encourages innovation. As it is extremely easy to “start from scratch”, we are free to experiment with new components, without fear of breaking things or ending up in an unrecoverable situation.

- It reduces wasted time. Whenever a new team member joins, new components are added to the project, we switch between projects, or something just stops working, we are two commands away from a known and valid starting point.
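To make "getting back to a known starting point" more concrete, here is a hedged sketch built from standard Docker Compose commands. It assumes a `docker-compose.yml` in the current directory and the `docker compose` subcommand; the function name is hypothetical, and these are not necessarily the exact commands used in the Lumen repository.

```shell
# Throw the current cosmos away and recreate it from its description.
reset_cosmos() {
  # Stop and delete the containers and networks; --volumes also deletes
  # their data volumes, and --remove-orphans cleans up containers for
  # services that are no longer listed in the file.
  docker compose down --volumes --remove-orphans || return 1
  # Rebuild the images and start everything again from scratch.
  docker compose up --build -d
}
```

Because the data volumes are deleted as well, anything stored in the databases is lost, which is exactly the point when the goal is a fresh start.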

Want better instructions? Strive for no instructions.

Top comments (2)

C++ desktop application development doesn't really lend itself to this approach, per se, but we do follow a somewhat similar process.

Our build system is a combination of CMake and Makefiles. This means that if someone knows what they're doing, they still have total control over the build process. Meanwhile, the rest of us don't have to bother with it, because 99% of it is automated.

So, for most people:

1. Make sure you have a C++ compiler and CMake installed.
2. Clone the repository for whichever of our projects you are building.
3. Clone our dependency repositories in the same directory as the one you cloned in Step 2. That's key to the magics.
4. Run 'make ready' in each repository, working your way up the dependency chain, as outlined in the main project's `README.md` and `BUILDING.md` files.
5. On the last one, you can run 'make ready' for the default build, or 'make tester' if you want to test it out instead.

How does that work, exactly? Pretty simple.

First, we keep a repository of all our dependencies (`libdeps`), containing the latest version we've tested against, so you can either use that for the easy way, or bring your own, which is a little harder, but gives you more control. We provide a template for the very simple `.config` file, which is used to tell the build system for the given repository where to find its dependencies.

Second, we structured the folders so that CMake runs an out-of-place build in a dedicated temporary folder within the source directory! This means that we can write custom Makefiles in the parent directories, including the repository root, for executing the most common CMake commands. That little bit of witchery has been called both "brilliant" and "convoluted," but either way, I can't take credit for it. I learned the trick from CPGF's build system. Love it or hate it, it really is the best of both worlds; if you don't want to run CMake directly, `make ready` does it for you!

Those short instructions, including where to get each dependency, are listed in the `BUILDING.md` file in each repository.

As a side effect, nearly all of the build system's code never needs to be altered. There are two specific places in `CMakeLists.txt` that have to be changed as the project does - one for new dependencies, and one for new files within the project. Each change is only 2-3 lines, and always follows the same pattern.

Of course, I do have the entire system documented in painstaking detail elsewhere, for ongoing maintenance, but I've had to modify it all of...once since I devised it? It's pretty neat.

The cool part is, it has worked on every system we've tried it on, flawlessly, every time. Even our CI, Jenkins, loves it. (We haven't yet added Windows support. We CAN, we just don't care enough about that platform yet.)

I definitely agree with all of this.