This post was originally published on my personal site.

Over the weekend, I spent a few hours building a GitHub action to automatically detect potentially toxic comments and PR reviews.

It uses TensorFlow.js and its toxicity pre-trained model to assess the level of toxicity, based on the following 7 categories:

- Identity attack

- Insult

- Obscene

- Severe toxicity

- Sexual explicit

- Threat

- Toxicity

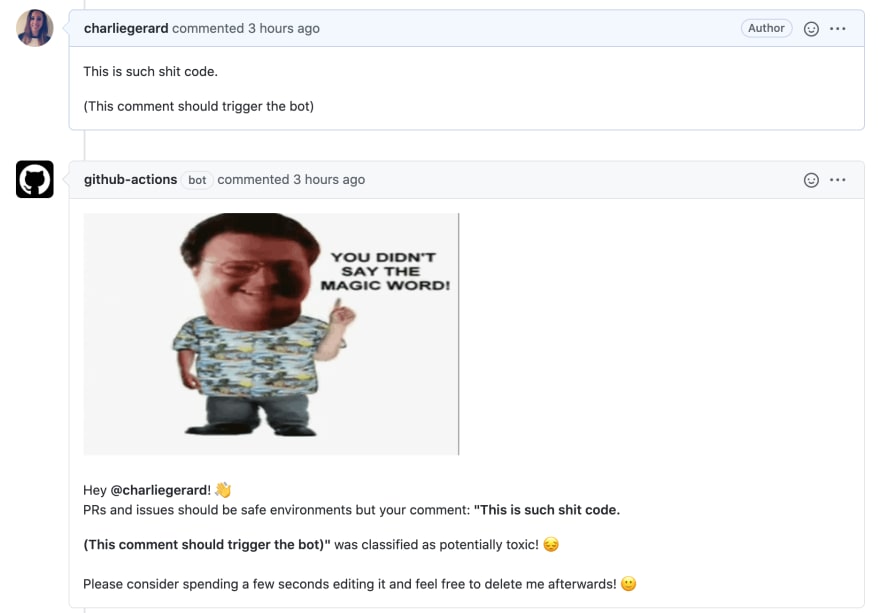

When a user posts a new comment or reviews a PR, the action is triggered. If there is a high probability that the content would be classified as toxic, the bot creates a comment tagging the author and advising them to update the content.
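To make "high probability" a bit more concrete, here is a minimal sketch of how a threshold can turn a pair of probabilities into a verdict. It reflects my understanding of how the toxicity model reports a match per category (true, false, or null when the model is not confident either way). The function name matchFor is made up for illustration and is not part of the library.

```javascript
// Hypothetical helper, not part of @tensorflow-models/toxicity.
// probabilities[0] = probability the text is NOT in the category,
// probabilities[1] = probability the text IS in the category.
function matchFor(probabilities, threshold) {
  const [notToxic, toxic] = probabilities;
  // Neither side is confident enough: no verdict.
  if (Math.max(notToxic, toxic) <= threshold) {
    return null;
  }
  return toxic > notToxic;
}

console.log(matchFor([0.03, 0.97], 0.9)); // true
console.log(matchFor([0.95, 0.05], 0.9)); // false
console.log(matchFor([0.6, 0.4], 0.9)); // null
```

With a threshold of 0.9, only the first example would lead the bot to post a warning.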

Here's a quick demo:

Setup

Before we dive into the code, it is probably important to note that this is a JavaScript action. Actions can also run in Docker containers, but for simplicity I stuck with JS.

First, I created an action.yml file at the root of my project folder.

Inside this file, I wrote the following code:

    name: "Safe space"
    description: "Detect the potential toxicity of PR comments"
    inputs:
      GITHUB_TOKEN:
        required: true
      message:
        required: false
      toxicity_threshold:
        required: false
    runs:
      using: "node12"
      main: "dist/index.js"

The first couple of lines are self-explanatory. Then, the inputs property contains 3 different elements.

- The GITHUB_TOKEN is a secret token required to authenticate in your workflow run and is automatically generated.

- The message property is optional and can be used by people if they want to customise the content of the comment posted by the bot if the action detects toxic comments.

- The toxicity_threshold property is also optional and allows people to set a custom threshold that will be used by the machine learning model when making predictions about a comment.

Finally, the settings under runs indicate the version of Node.js we want our action to run with, as well as the file the action code lives in.
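As a rough sketch of how these three inputs might be consumed, the helper below resolves them with sensible defaults. The names resolveInputs and DEFAULT_THRESHOLD are hypothetical, and getInput stands in for core.getInput from @actions/core, which returns inputs as strings and gives back an empty string when an input is not set. The 0.9 default matches the one used later in this post.

```javascript
// Hypothetical helper: `getInput` stands in for core.getInput.
const DEFAULT_THRESHOLD = 0.9;

function resolveInputs(getInput) {
  const token = getInput("GITHUB_TOKEN");
  if (!token) {
    throw new Error("GITHUB_TOKEN is required");
  }

  // Inputs are strings, so the threshold has to be parsed.
  const parsed = parseFloat(getInput("toxicity_threshold"));
  // Fall back to the default when the input is missing or not a valid probability.
  const threshold =
    Number.isFinite(parsed) && parsed > 0 && parsed <= 1
      ? parsed
      : DEFAULT_THRESHOLD;

  // An empty message means "use the built-in warning".
  const message = getInput("message") || null;

  return { token, threshold, message };
}

// Usage with a fake input source instead of the real Actions runtime:
const fakeInputs = { GITHUB_TOKEN: "abc", toxicity_threshold: "0.7" };
console.log(resolveInputs((name) => fakeInputs[name] || ""));
// { token: 'abc', threshold: 0.7, message: null }
```

Passing getInput in as a parameter is only a design choice to keep the sketch runnable outside a workflow.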

Action code

To create a JavaScript action, you need to install and require at least 2 Node.js modules: @actions/core and @actions/github. As this particular action uses a TensorFlow.js model, I also installed and required @tensorflow-models/toxicity and @tensorflow/tfjs.

Then, in my dist/index.js file, I started writing my action code.

The core setup could look something like this:

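    const core = require("@actions/core");
    const github = require("@actions/github");

    async function run() {
      const tf = require("@tensorflow/tfjs");
      const toxicity = require("@tensorflow-models/toxicity");
      // Run the model on the CPU backend.
      await tf.setBackend("cpu");

      try {
        // Read the inputs declared in action.yml.
        const githubToken = core.getInput("GITHUB_TOKEN");
        const customMessage = core.getInput("message");
        const toxicityThreshold = core.getInput("toxicity_threshold");
        // Details about the event that triggered the action.
        const { context } = github;
      } catch (error) {
        core.setFailed(error.message);
      }
    }

    run();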

There is a main run function that requires the packages needed and sets the backend for TensorFlow.js. Then in a try/catch statement, the code gets the 3 parameters mentioned a bit earlier that we'll be using soon.

Finally, we get the context of the event when the action is triggered.

Creating a bot comment when a user posts a comment on an issue or PR

A few different events can trigger a GitHub action. As this action is interested in getting the comments posted on an issue or PR, we need to start by looking at the payload of the event and see if the property comment is defined. Then we can also look at the type of action (here created and edited), to run the predictions only when a new comment is added or one is edited, but not deleted for example.

More details are available on the official GitHub documentation.

I then access a few parameters needed to target the right issue or PR and load the machine learning model. If the property match is true on one of the results coming back from the predictions, the comment has been classified as toxic and I generate a new comment with the warning message.

    if (context.payload.comment) {
      // Only react to new or edited comments, not deleted ones.
      if (
        context.payload.action === "created" ||
        context.payload.action === "edited"
      ) {
        const issueNumber = context.payload.issue.number;
        const repository = context.payload.repository;
        const octokit = new github.GitHub(githubToken);
        // Inputs are read as strings, so convert the threshold to a number.
        const threshold = toxicityThreshold ? parseFloat(toxicityThreshold) : 0.9;
        const model = await toxicity.load(threshold);
        // classify() expects an array of sentences.
        const latestComment = [context.payload.comment.body];
        const latestCommentObject = context.payload.comment;
        let toxicComment = undefined;

        model.classify(latestComment).then((predictions) => {
          predictions.forEach((prediction) => {
            // Skip the remaining categories once a warning has been posted.
            if (toxicComment) {
              return;
            }
            prediction.results.forEach((result) => {
              if (toxicComment) {
                return;
              }
              // match is true when the comment is classified as toxic for this category.
              if (result.match) {
                const commentAuthor = latestCommentObject.user.login;
                toxicComment = latestComment[0];
                const message = customMessage
                  ? customMessage
                  : `<img src="https://media.giphy.com/media/3ohzdQ1IynzclJldUQ/giphy.gif" width="400"/> <br/>
    Hey @${commentAuthor}! 👋 <br/> PRs and issues should be safe environments but your comment: <strong>"${toxicComment}"</strong> was classified as potentially toxic! 😔<br/>
    Please consider spending a few seconds editing it and feel free to delete me afterwards! 🙂`;

                return octokit.issues.createComment({
                  owner: repository.owner.login,
                  repo: repository.name,
                  issue_number: issueNumber,
                  body: message,
                });
              }
            });
          });
        });
      }
    }
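The nested forEach loops above boil down to one question: did any category come back with match set to true? As a minimal sketch, that check could be pulled into a small pure function. The name findToxicLabels is hypothetical, and the sample data is hand-written in the shape classify() returns for a single sentence, not real model output.

```javascript
// `predictions` has one entry per category, each with a results array
// containing one result per classified sentence.
function findToxicLabels(predictions) {
  return predictions
    .filter((prediction) =>
      prediction.results.some((result) => result.match === true)
    )
    .map((prediction) => prediction.label);
}

// Hand-written sample data, not real model output.
const samplePredictions = [
  { label: "insult", results: [{ probabilities: [0.04, 0.96], match: true }] },
  { label: "threat", results: [{ probabilities: [0.98, 0.02], match: false }] },
  { label: "obscene", results: [{ probabilities: [0.55, 0.45], match: null }] },
];

console.log(findToxicLabels(samplePredictions)); // [ 'insult' ]
```

If the returned array is not empty, the bot would post its warning once, which removes the need for the toxicComment flag.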

Creating a bot comment when a user submits a PR review

The code to run checks on PR reviews is very similar; the main difference is in the first couple of lines. Instead of looking for the comment property on the payload, we look for review, and the action I am interested in is submitted.

    if (context.payload.review) {
      // Only react when a review is submitted.
      if (context.payload.action === "submitted") {
        const issueNumber = context.payload.pull_request.number;
        const repository = context.payload.repository;
        const octokit = new github.GitHub(githubToken);
        // Inputs are read as strings, so convert the threshold to a number.
        const threshold = toxicityThreshold ? parseFloat(toxicityThreshold) : 0.9;
        const model = await toxicity.load(threshold);
        // classify() expects an array of sentences.
        const reviewComment = [context.payload.review.body];
        const reviewObject = context.payload.review;
        let toxicComment = undefined;

        model.classify(reviewComment).then((predictions) => {
          predictions.forEach((prediction) => {
            // Skip the remaining categories once a warning has been posted.
            if (toxicComment) {
              return;
            }
            prediction.results.forEach((result) => {
              if (toxicComment) {
                return;
              }
              // match is true when the review is classified as toxic for this category.
              if (result.match) {
                const commentAuthor = reviewObject.user.login;
                toxicComment = reviewComment[0];
                const message = customMessage
                  ? customMessage
                  : `<img src="https://media.giphy.com/media/3ohzdQ1IynzclJldUQ/giphy.gif" width="400"/> <br/>
    Hey @${commentAuthor}! 👋 <br/> PRs and issues should be safe environments but your comment: <strong>"${toxicComment}"</strong> was classified as potentially toxic! 😔<br/>
    Please consider spending a few seconds editing it and feel free to delete me afterwards! 🙂`;

                return octokit.issues.createComment({
                  owner: repository.owner.login,
                  repo: repository.name,
                  issue_number: issueNumber,
                  body: message,
                });
              }
            });
          });
        });
      }
    }
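Since the two handlers only differ in where they read the text, author, and issue number from, one option would be to normalise both payloads first and share the rest. The sketch below is one way to do that under the payload shapes used above. The name extractTarget is hypothetical, and the empty-body guard is my own addition for reviews submitted without a message.

```javascript
// Hypothetical helper that maps both event payloads to one shape.
function extractTarget(payload) {
  const { action, comment, review, issue, pull_request: pullRequest } = payload;
  let target = null;

  if (comment && (action === "created" || action === "edited")) {
    target = {
      text: comment.body,
      author: comment.user.login,
      issueNumber: issue.number,
    };
  } else if (review && action === "submitted") {
    target = {
      text: review.body,
      author: review.user.login,
      issueNumber: pullRequest.number,
    };
  }

  // Nothing to classify for ignored events or empty bodies.
  return target && target.text ? target : null;
}

// Hand-written sample payload, trimmed to the fields used here.
const sampleReview = {
  action: "submitted",
  review: { body: "Please rename this variable", user: { login: "octocat" } },
  pull_request: { number: 12 },
};
console.log(extractTarget(sampleReview).issueNumber); // 12
```

The classification and createComment call could then run once on the returned object instead of being duplicated per event type.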

Using the action

To use an action in a repository, we need to create a workflow file.

First, the repository needs to have a .github folder with a workflows folder inside. Then, we can add a new .yml file with the details for the action we want to run.

    on: [issue_comment, pull_request_review]

    jobs:
      toxic_check:
        runs-on: ubuntu-latest
        name: Safe space
        steps:
          - uses: actions/checkout@v2
          - name: Safe space - action step
            uses: charliegerard/safe-space@master
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

In this code sample, we indicate that we want to trigger this action when events happen around comments in an issue and when a pull request review event happens.

Then, the job starts with the default actions/checkout@v2 action and finally runs this toxicity classification action with some additional parameters, including the required GITHUB_TOKEN one.

If you wanted to use the optional properties message and toxicity_threshold, you could do so like this:

    on: [issue_comment, pull_request_review]

    jobs:
      toxic_check:
        runs-on: ubuntu-latest
        name: Safe space
        steps:
          - uses: actions/checkout@v2
          - name: Safe space - action step
            uses: charliegerard/safe-space@master
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
              message: "Hello"
              toxicity_threshold: 0.7

If you are developing your own action, you can test it by changing the line

uses: charliegerard/safe-space@master

to

uses: ./

One important thing to note if you want to build your own GitHub action: when using the issue_comment and pull_request_review events, you need to push your code to your default branch (often called "master") before you can test that it works from another branch. If you develop everything in a separate branch, the action will not be triggered when writing a comment or reviewing a PR.

And that's it! 🎉

Potential improvements

At the moment, I invite the user to manually delete the note from the bot once they've updated the content of their toxic comment. However, I think this could be done automatically on edit: when the user edits a comment, I could run the check again and, if it is predicted as safe, automatically remove the bot comment so users don't have to do it.
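A rough sketch of what that cleanup could look like is below. None of this exists in the action today. BOT_MARKER is a hypothetical hidden marker the bot would have to append to its warnings so it can recognise them later, warningsToRemove and removeWarnings are made-up names, and octokit is assumed to expose issues.listComments and issues.deleteComment as the Octokit REST client does.

```javascript
// Hypothetical marker appended to every warning the bot posts.
const BOT_MARKER = "<!-- safe-space-bot -->";

// Pure helper: ids of bot warnings that mention the given author.
function warningsToRemove(comments, author) {
  return comments
    .filter(
      (comment) =>
        comment.body.includes(BOT_MARKER) &&
        comment.body.includes(`@${author}`)
    )
    .map((comment) => comment.id);
}

// Would be called after an edited comment is re-classified as safe.
async function removeWarnings(octokit, { owner, repo, issueNumber, author }) {
  const { data: comments } = await octokit.issues.listComments({
    owner,
    repo,
    issue_number: issueNumber,
  });
  const ids = warningsToRemove(comments, author);
  for (const id of ids) {
    await octokit.issues.deleteComment({ owner, repo, comment_id: id });
  }
  return ids;
}
```

One limitation of this sketch is that it removes every warning addressed to that author on the issue, so an author with two flagged comments would lose both warnings after fixing only one. Storing the id of the flagged comment in the marker would be a way around that.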
