In case you were wondering, Kafdrop has an official Docker image. Which is great; it means you don't have to clone the repo, mess around with Maven builds or even install the JVM.

Docker images are updated automatically with each release build of Kafdrop, courtesy of the public Travis build pipeline. The latest tag on DockerHub always points to the most recent stable build.

Getting started

To run Kafdrop, run the following:

    docker run -it -p 9000:9000 \
        -e KAFKA_BROKERCONNECT=host:port,host:port \
        obsidiandynamics/kafdrop:latest

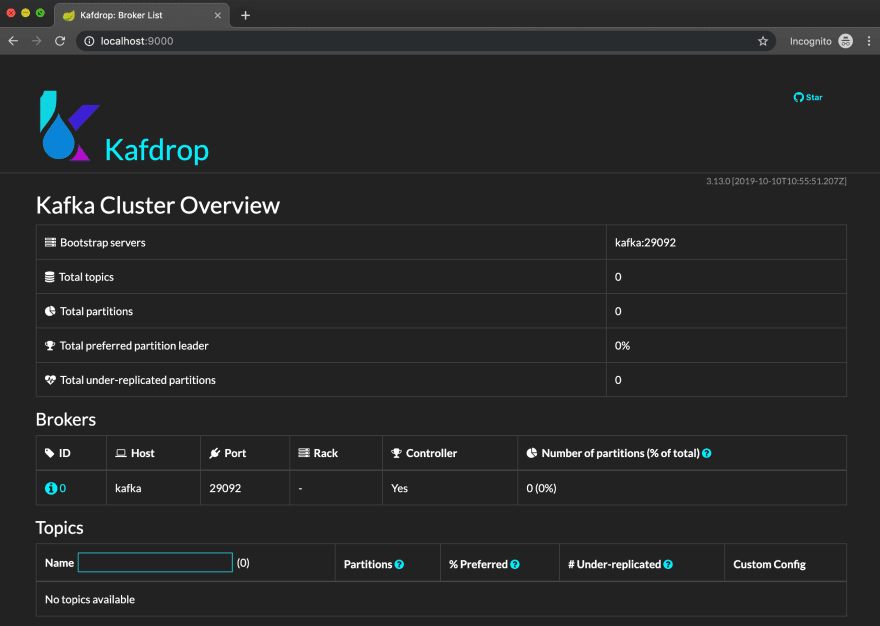

Replace host:port,host:port with the bootstrap list of Kafka host/port pairs. Once the container boots up, navigate to localhost:9000 in your browser. You should see the Kafdrop landing screen:

Hey there! We hope you really like Kafdrop! Please take a moment to ⭐ the repo or Tweet about it.

Configuration

The Kafdrop Docker image is configured by simply passing in environment variables:

- KAFKA_BROKERCONNECT: Bootstrap list of Kafka host/port pairs.

- JVM_OPTS: JVM options.

- JMX_PORT: Port to use for JMX. No default; if unspecified, JMX will not be exposed.

- HOST: The hostname to report for the RMI registry (used for JMX). Default: localhost.

- SERVER_PORT: The web server port to listen on. Default: 9000.

- SERVER_SERVLET_CONTEXTPATH: The context path to serve requests on (must end with a /). Default: /.

- KAFKA_PROPERTIES: Additional properties to configure the broker connection (base-64 encoded).

- KAFKA_TRUSTSTORE: Certificate for broker authentication (base-64 encoded). Required for TLS/SSL.

- KAFKA_KEYSTORE: Private key for mutual TLS authentication (base-64 encoded).
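To illustrate how these variables combine, here is a minimal sketch that serves Kafdrop on a non-default port and context path. The broker names, the port 9001, the /kafdrop/ path and the helper functions run and start_kafdrop are all made up for illustration. Note that when SERVER_PORT changes, the -p mapping needs to target the same container port.

```shell
#!/bin/sh
# Hypothetical values; substitute your own.
BROKERS="kafka-1:9092,kafka-2:9092"   # bootstrap list for KAFKA_BROKERCONNECT
PORT=9001                             # passed to Kafdrop as SERVER_PORT
CONTEXT_PATH="/kafdrop/"              # must end with a /

# Prints the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# The container listens on SERVER_PORT, so the published port targets it too.
start_kafdrop() {
  run docker run -d --rm \
    -p "${PORT}:${PORT}" \
    -e "KAFKA_BROKERCONNECT=${BROKERS}" \
    -e "SERVER_PORT=${PORT}" \
    -e "SERVER_SERVLET_CONTEXTPATH=${CONTEXT_PATH}" \
    obsidiandynamics/kafdrop:latest
}

start_kafdrop
```

Run as-is, the script only prints the command so you can review it first. With DRY_RUN=0 it starts the container, and under these assumed values the UI would be reachable at localhost:9001/kafdrop/.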

Connecting to a secure cluster

Need to connect to a secure Kafka cluster? No problem; Kafdrop supports TLS (SSL) and SASL connections for encryption and authentication. This can be configured by providing a combination of the following files:

- Truststore: specifying the certificate for authenticating brokers, if TLS is enabled.

- Keystore: specifying the private key to authenticate the client to the broker, if mutual TLS authentication is required.

- Properties file: specifying the necessary configuration, including key/truststore passwords, cipher suites, enabled TLS protocol versions, username/password pairs, etc. When supplying the truststore and/or keystore files, the ssl.truststore.location and ssl.keystore.location properties will be assigned automatically.

These files are supplied in base-64-encoded form via environment variables:

    docker run -it -p 9000:9000 \
        -e KAFKA_BROKERCONNECT=host:port,host:port \
        -e KAFKA_PROPERTIES="$(cat kafka.properties | base64)" \
        -e KAFKA_TRUSTSTORE="$(cat kafka.truststore.jks | base64)" \
        -e KAFKA_KEYSTORE="$(cat kafka.keystore.jks | base64)" \
        obsidiandynamics/kafdrop

The KAFKA_TRUSTSTORE and KAFKA_KEYSTORE lines are optional; include them only when your cluster needs a truststore or keystore.
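If you have not prepared a properties file before, the sketch below shows one way it might look for a SASL/PLAIN cluster over TLS, and checks that the base-64 encoding decodes back to the original. The username, password and temporary directory are placeholders; the property names are standard Kafka client settings, but the right set depends on how your brokers are configured.

```shell
#!/bin/sh
set -eu

# Scratch directory so the example does not touch real files.
workdir=$(mktemp -d)
props="$workdir/kafka.properties"

# Hypothetical credentials; replace with the ones for your cluster.
cat > "$props" <<'EOF'
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="alice" password="alice-secret";
EOF

# GNU base64 wraps its output by default; strip newlines to get one line.
encoded=$(base64 < "$props" | tr -d '\n')

# Round trip: decode and compare with the original file contents.
decoded=$(printf '%s' "$encoded" | base64 --decode)
if [ "$decoded" = "$(cat "$props")" ]; then
  echo "kafka.properties encodes cleanly (${#encoded} base64 characters)"
else
  echo "round trip failed" >&2
  exit 1
fi
```

The resulting value can then be passed to the container with -e KAFKA_PROPERTIES="$encoded".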

Running Kafdrop in Kubernetes

You can also launch Kafdrop in Kubernetes with the aid of a Helm chart.

Clone the repository (if necessary):

git clone https://github.com/obsidiandynamics/kafdrop && cd kafdrop

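Install (or upgrade) the chart. Replace 3.x.x with the latest stable release and <host:port,host:port> (angle brackets included) with your broker list. Helm's --set treats an unescaped comma as a separator, so write the comma between brokers as \,. The properties, truststore and keystore settings are only needed for a secure cluster.

    helm upgrade -i kafdrop chart --set image.tag=3.x.x \
        --set kafka.brokerConnect=<host:port,host:port> \
        --set kafka.properties="$(cat kafka.properties | base64)" \
        --set kafka.truststore="$(cat kafka.truststore.jks | base64)" \
        --set kafka.keystore="$(cat kafka.keystore.jks | base64)"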

A list of settable values can be found in values.yaml.
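As an alternative to a long chain of --set flags, the same overrides can be kept in a small values file and passed with Helm's -f option. The sketch below assumes the value paths shown in the command above (image.tag, kafka.brokerConnect); the file name and broker addresses are made up, and 3.x.x is still a placeholder for a real release.

```shell
#!/bin/sh
set -eu

# Hypothetical location for the override file.
values_file="${TMPDIR:-/tmp}/kafdrop-values.yaml"

# Keys mirror the --set paths; commas need no escaping in a values file.
cat > "$values_file" <<'EOF'
image:
  tag: 3.x.x   # replace with the latest stable release
kafka:
  brokerConnect: "kafka-1:9092,kafka-2:9092"   # hypothetical brokers
EOF

echo "Wrote $values_file"

# Then, from the root of the cloned repository:
#   helm upgrade -i kafdrop chart -f "$values_file"
```

Keeping overrides in a file also makes them easy to review and put under version control, though base-64 encoded secrets are probably better supplied by other means.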

I'm one of the maintainers of Kafdrop and a bit of a Kafka enthusiast. I'm also an avid crusader for microservices and event-driven architecture. If you're interested in these topics, or just have a question to ask, grab me on Twitter.
