In this Story, I have a super quick tutorial showing you how to create a multi-agent chatbot using LangGraph, Deepseek-R1, function calling, and Agentic RAG to build a powerful agent chatbot for your business or personal use.

In my last Story, I talked about LangChain and Deepseek-R1. One of the viewers asked if I could do it with LangGraph.

I have fulfilled that request — but not only that, I have also enhanced the chatbot with function calling and Agentic RAG.

“But Gao, Deepseek-R1 doesn’t support function calls!”

Yes, you’re right — but let me tell you, I came up with a clever idea. If you stay until the end of the video, I’ll show you how to do it, too!

I have mentioned the function call many times in my previous article, we already know that the function call is a technique that allows LLM to autonomously select and call predefined functions based on the conversation content. These functions can be used to perform various tasks.

Agentic RAG is a type of RAG that improves the problems of general RAG by using agents. The problem with conventional RAG is that the AI processes everything “in order”, so if an error occurs, such as not being able to retrieve data, all subsequent processing becomes meaningless.

Agentic RAG addresses these issues by using an “agent.” The agent not only chooses the tool to go to but also allows for thought loops, so it can loop through multiple processes until it gets the information it needs and meets the user’s expectations.

So, let me give you a quick demo of a live chatbot to show you what I mean.

We have two different databases, research and development, where we can get our answers. I have created some example questions that you can test. Let me enter the question: ‘What is the status of Project A?’ If you look at how the chatbot generates the output,

It uses RecursiveCharacterTextSplitter to split a text into smaller chunks and convert it into a document using splitter.create_documents(). These documents were stored as vector embeddings in ChromaDB for efficient similarity search.

A retriever was created using create_retriever_tool in LangChain, and an AI agent was developed to classify user queries as research or development, connect to DeepSeek-R1 (temperature = 0.7) for responses, and retrieve relevant documents.

One of the big problems I faced when I developed a chatbot was that DeepSeek-R1 does not support function calling like OpenAI, so I created a text-based command system instead of using function calls. Rather than forcing DeepSeek-R1 to use functions directly, I designed it to output specific text formats.

Then, I built a wrapper to convert these text commands into tool actions. This way, we get the same functionality as function calling, but in a way, DeepSeek-R1 can handle it.

A grading function checks if retrieved documents exist — if found, the process continues; if not, the query is rewritten for clarity. A generation function summarizes retrieved documents using DeepSeek-R1 and formats the response.

A decision function determines whether tools are needed based on the message content, and a LangGraph workflow was created to manage the process, starting with the agent, routing queries to retrieval if needed, generating an answer when documents are found, or rewriting the question and retrying if necessary. Finally, it generates the final answer in a green box.

By the end of this video, you will understand what is Agentic RAG, why we need Agentic RAG and how a function call + DeepSeek-R1 can be used to create a super AI Agent.

Before we start! 🦸🏻♀️

If you like this topic and you want to support me:

like my article; that will really help me out.👏

Follow me on my YouTube channel

Subscribe to me to get the latest articles.

What is Agentic RAG?

Agentic RAG is an RAG that integrates the capabilities of Agent, and the core capabilities of Agent are autonomous reasoning and action.

Therefore, Agentic RAG brings the autonomous planning capabilities of AI agents (such as routing, action steps, reflection, etc.) into traditional RAG to adapt to more complex RAG query tasks.

RAG = LLM + Knowledge Base + Retriever

Why do we need Agentic RAG❓

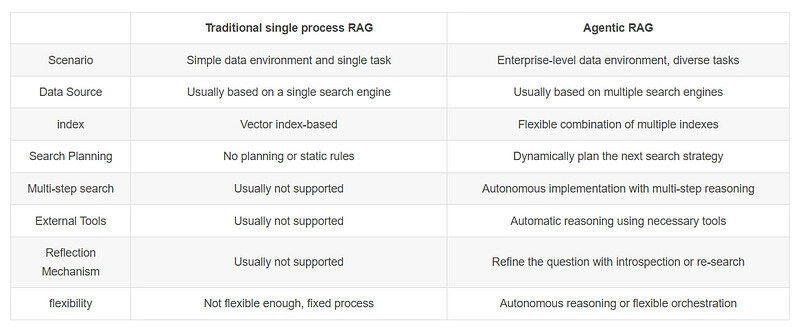

Agentic RAG's “agent” feature is mainly reflected in the retrieval stage. Compared to the retrieval process in traditional RAG, Agentic RAG is more capable of:

Deciding whether to search (autonomous decision-making)

Choosing which search engine to use (autonomous planning)

Evaluating the retrieved context and deciding whether to re-search (self-planning)

Determining whether to use external tools

Agentic RAG VS Traditional RAG

Let’s start coding

Now, let’s get on with the guide on how to build an AI chatbot using LangGraph + DeepSeek-R1 + Function Call + Agentic RAG. When it comes to creating this chatbot, we will create an ideal environment for the code to work. We need to install the necessary Python libraries. For this, we will do a pip install of the libraries below.

pip install -r requirements.txt

Once installed, we import the important dependencies like langchian, Langgraph and Streamlit

from langchain_openai import OpenAIEmbeddings

from langchain_community.vectorstores import Chroma

from langchain_core.messages import HumanMessage, AIMessage, ToolMessage

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langgraph.graph import END, StateGraph, START

from langchain.tools.retriever import create_retriever_tool

from langgraph.prebuilt import ToolNode

from langgraph.graph.message import add_messages

from typing_extensions import TypedDict, Annotated

from typing import Sequence

from langchain_openai import OpenAIEmbeddings

import re

import os

import requests

import streamlit as st

I created two databases. The first set is called research_texts, and it includes some research-related statements, like reports and papers about AI and machine learning.

The second set, development_texts, includes statements about ongoing projects, such as UI design, testing features, and optimization before a product release.

But feel free to customize the data depending on your use case

# Create Dummy Data

research_texts = [

"Research Report: Results of a New AI Model Improving Image Recognition Accuracy to 98%",

"Academic Paper Summary: Why Transformers Became the Mainstream Architecture in Natural Language Processing",

"Latest Trends in Machine Learning Methods Using Quantum Computing"

]

development_texts = [

"Project A: UI Design Completed, API Integration in Progress",

"Project B: Testing New Feature X, Bug Fixes Needed",

"Product Y: In the Performance Optimization Stage Before Release"

]

Let’s process the data by splitting it into smaller parts, converting it into document objects, and then creating vector embeddings.

I used the RecursiveCharacterTextSplitter to break long pieces of text into smaller chunks, ensuring that each chunk has a maximum of 100 characters.

Then, I converted the split texts into document objects using splitter.create_documents(). These document objects will be useful later when working with Deepseek_R1 for searching

Text splitting settings

splitter = RecursiveCharacterTextSplitter(chunk_size=100, chunk_overlap=10)

Generate Document objects from text

research_docs = splitter.create_documents(research_texts)

development_docs = splitter.create_documents(development_texts)

Create vector stores

embeddings = OpenAIEmbeddings(

model="text-embedding-3-large",

# With the `text-embedding-3` class

# of models, you can specify the size

# of the embeddings you want returned.

# dimensions=1024

)

We store research and development documents as vector embeddings and make them searchable using retrievers.

First, I use Chroma.from_documents() to create a vector store for research_docs, where the embedding=embeddings converts text into numerical vectors, and collection_name="research_collection" ensures the documents are stored in a dedicated collection for efficient retrieval.

Once both research and development texts are stored as vectors we use retrieval to find and retrieve the most relevant document from a vector store.

research_vectorstore = Chroma.from_documents(

documents=research_docs,

embedding=embeddings,

collection_name="research_collection"

)

development_vectorstore = Chroma.from_documents(

documents=development_docs,

embedding=embeddings,

collection_name="development_collection"

)

research_retriever = research_vectorstore.as_retriever()

development_retriever = development_vectorstore.as_retriever()

Then, we provide an AI agent with a tool, You first need to create a retriever and then import the tool.

I use the create_retriever_tool function in LangChain, which creates a tool for retrieving documents. The documents retrieved by this tool can be extracted from the return value of the function that this tool wraps,

research_tool = create_retriever_tool(

research_retriever, # Retriever object

"research_db_tool", # Name of the tool to create

"Search information from the research database." # Description of the tool

)

development_tool = create_retriever_tool(

development_retriever,

"development_db_tool",

"Search information from the development database."

)

# Combine the created research and development tools into a list

tools = [research_tool, development_tool]

I developed an agent function as a smart router for user questions. I extract the user’s message and use a prompt to categorize it as either research or development-related.

I connect to DeepSeek AI with a temperature of 0.7 for balanced responses. When the API responds, I check if it’s a research or development query, then use the appropriate retriever to find relevant documents. I format everything into a standard message format, including both the query and results.

If it doesn’t fit either category, I return a direct answer. Think of it as a traffic controller — directing questions to the right database for the best answers.

def agent(state: AgentState):

print("---CALL AGENT---")

messages = state["messages"]

if isinstance(messages[0], tuple):

user_message = messages[0][1]

else:

user_message = messages[0].content

# Structure prompt for consistent text output

prompt = f"""Given this user question: "{user_message}"

If it's about research or academic topics, respond EXACTLY in this format:

SEARCH_RESEARCH: <search terms>

If it's about development status, respond EXACTLY in this format:

SEARCH_DEV: <search terms>

Otherwise, just answer directly.

"""

headers = {

"Accept": "application/json",

"Authorization": f"Bearer sk-1cddf19f9dc4466fa3ecea6fe10abec0",

"Content-Type": "application/json"

}

data = {

"model": "deepseek-chat",

"messages": [{"role": "user", "content": prompt}],

"temperature": 0.7,

"max_tokens": 1024

}

response = requests.post(

"https://api.deepseek.com/v1/chat/completions",

headers=headers,

json=data,

verify=False

)

if response.status_code == 200:

response_text = response.json()['choices'][0]['message']['content']

print("Raw response:", response_text)

# Format the response into expected tool format

if "SEARCH_RESEARCH:" in response_text:

query = response_text.split("SEARCH_RESEARCH:")[1].strip()

# Use direct call to research retriever

results = research_retriever.invoke(query)

return {"messages": [AIMessage(content=f'Action: research_db_tool\n{{"query": "{query}"}}\n\nResults: {str(results)}')]}

elif "SEARCH_DEV:" in response_text:

query = response_text.split("SEARCH_DEV:")[1].strip()

# Use direct call to development retriever

results = development_retriever.invoke(query)

return {"messages": [AIMessage(content=f'Action: development_db_tool\n{{"query": "{query}"}}\n\nResults: {str(results)}')]}

else:

return {"messages": [AIMessage(content=response_text)]}

else:

raise Exception(f"API call failed: {response.text}")

Then, we designed a grading function that checks if our search was successful. I look at the last message received and check if it contains any retrieved documents.

If I find documents (marked by “Results: [Document”), I signal to move forward with generating an answer.

If I don’t find any documents, I suggest rewriting the question for better results. It’s like a quality check — making sure we have the right materials before proceeding.

def simple_grade_documents(state: AgentState):

messages = state["messages"]

last_message = messages[-1]

print("Evaluating message:", last_message.content)

# Check if the content contains retrieved documents

if "Results: [Document" in last_message.content:

print("---DOCS FOUND, GO TO GENERATE---")

return "generate"

else:

print("---NO DOCS FOUND, TRY REWRITE---")

return "rewrite"

I developed a generation function that creates the final answer from our found documents. I start by getting the original question and any documents we found in our search.

I extract the document content and create a prompt that asks DeepSeek-R1 to summarize the findings, focusing on key research advancements. I set up the API call with specific headers and a temperature of 0.7 for balanced creativity.

Once I get a response, I format it into a user-friendly message. If something goes wrong with the API call, It let you know about the error.

def generate(state: AgentState):

print("---GENERATE FINAL ANSWER---")

messages = state["messages"]

question = messages[0].content if isinstance(messages[0], tuple) else messages[0].content

last_message = messages[-1]

# Extract the document content from the results

docs = ""

if "Results: [" in last_message.content:

results_start = last_message.content.find("Results: [")

docs = last_message.content[results_start:]

print("Documents found:", docs)

headers = {

"Accept": "application/json",

"Authorization": f"Bearer sk-1cddf19f9dc4466fa3ecea6fe10abec0",

"Content-Type": "application/json"

}

prompt = f"""Based on these research documents, summarize the latest advancements in AI:

Question: {question}

Documents: {docs}

Focus on extracting and synthesizing the key findings from the research papers.

"""

data = {

"model": "deepseek-chat",

"messages": [{

"role": "user",

"content": prompt

}],

"temperature": 0.7,

"max_tokens": 1024

}

print("Sending generate request to API...")

response = requests.post(

"https://api.deepseek.com/v1/chat/completions",

headers=headers,

json=data,

verify=False

)

if response.status_code == 200:

response_text = response.json()['choices'][0]['message']['content']

print("Final Answer:", response_text)

return {"messages": [AIMessage(content=response_text)]}

else:

raise Exception(f"API call failed: {response.text}")

I designed a rewrite function that helps improve unclear questions. I take the original question and ask DeepSeek to make it more specific and clearer. I set up the API call with custom headers and a balanced temperature of 0.7, then asked the model to rewrite the question. When I get a response, I check if it worked properly — if it did, I return the improved question; if not, I will print the error

def rewrite(state: AgentState):

print("---REWRITE QUESTION---")

messages = state["messages"]

original_question = messages[0].content if len(messages)>0 else "N/A"

headers = {

"Accept": "application/json",

"Authorization": f"Bearer sk-1cddf19f9dc4466fa3ecea6fe10abec0",

"Content-Type": "application/json"

}

data = {

"model": "deepseek-chat",

"messages": [{

"role": "user",

"content": f"Rewrite this question to be more specific and clearer: {original_question}"

}],

"temperature": 0.7,

"max_tokens": 1024

}

print("Sending rewrite request...")

response = requests.post(

"https://api.deepseek.com/v1/chat/completions",

headers=headers,

json=data,

verify=False

)

print("Status Code:", response.status_code)

print("Response:", response.text)

if response.status_code == 200:

response_text = response.json()['choices'][0]['message']['content']

print("Rewritten question:", response_text)

return {"messages": [AIMessage(content=response_text)]}

else:

raise Exception(f"API call failed: {response.text}")

I created a decision-making function that checks if we need to use any tools based on the message content. I look at the last message and check if it matches our tool pattern (which looks for “Action:” at the start).

If it matches, I signal that we should retrieve information using our tools. If it doesn’t match, I signal that we should end the process.

def custom_tools_condition(state: AgentState):

messages = state["messages"]

last_message = messages[-1]

content = last_message.content

print("Checking tools condition:", content)

if tools_pattern.match(content):

print("Moving to retrieve...")

return "tools"

print("Moving to END...")

return END

Let’s create a workflow system that connects all our functions in a logical sequence. I used a LangGraph where each function is a node, starting with the agent that first receives the question. I set up special paths using edges — when the agent needs tools, it goes to retrieve information; if not, it ends. After retrieving, I check the documents: good results go to generate an answer, while poor results go back to rewrite the question

workflow = StateGraph(AgentState)

# Define the workflow using StateGraph

workflow.add_node("agent", agent)

retrieve_node = ToolNode(tools)

workflow.add_node("retrieve", retrieve_node)

workflow.add_node("rewrite", rewrite)

workflow.add_node("generate", generate)

# Define nodes

workflow.add_edge(START, "agent")

# If the agent calls a tool, proceed to retrieve; otherwise, go to END

workflow.add_conditional_edges(

"agent",

custom_tools_condition,

{

"tools": "retrieve",

END: END

}

)

# After retrieval, determine whether to generate or rewrite

workflow.add_conditional_edges("retrieve", simple_grade_documents)

workflow.add_edge("generate", END)

workflow.add_edge("rewrite", "agent")

# Compile the workflow to make it executable

app = workflow.compile()

Let’s make a function that manages how questions flow through our system. I take a user’s question and process it through the workflow app, collecting every event (like searching, finding documents, or generating answers) into a list.

def process_question(user_question, app, config):

"""Process user question through the workflow"""

events = []

for event in app.stream({"messages":[("user", user_question)]}, config):

events.append(event)

return events

Then, we use Streamlit to create a clean layout and put database content in a sidebar for easy reference. In the main area, I created a text input box for questions and split the screen into two columns. In the first column, I handle the question processing: when you click the “Get Answer” button, I show a loading spinner and process your question through the workflow.

I display any found documents in expandable sections and show the final answer with a green success box. In the second column, I added helpful instructions and example questions.

def main():

st.set_page_config(

page_title="AI Research & Development Assistant",

layout="wide",

initial_sidebar_state="expanded"

)

# Custom CSS

st.markdown("""

<style>

.stApp {

background-color: #f8f9fa;

}

.stButton > button {

width: 100%;

margin-top: 20px;

}

.data-box {

padding: 20px;

border-radius: 10px;

margin: 10px 0;

}

.research-box {

background-color: #e3f2fd;

border-left: 5px solid #1976d2;

}

.dev-box {

background-color: #e8f5e9;

border-left: 5px solid #43a047;

}

</style>

""", unsafe_allow_html=True)

# Sidebar with Data Display

with st.sidebar:

st.header("📚 Available Data")

st.subheader("Research Database")

for text in research_texts:

st.markdown(f'<div class="data-box research-box">{text}</div>', unsafe_allow_html=True)

st.subheader("Development Database")

for text in development_texts:

st.markdown(f'<div class="data-box dev-box">{text}</div>', unsafe_allow_html=True)

# Main Content

st.title("🤖 AI Research & Development Assistant")

st.markdown("---")

# Query Input

query = st.text_area("Enter your question:", height=100, placeholder="e.g., What is the latest advancement in AI research?")

col1, col2 = st.columns([1,2])

with col1:

if st.button("🔍 Get Answer", use_container_width=True):

if query:

with st.spinner('Processing your question...'):

# Process query through workflow

events = process_question(query, app, {"configurable":{"thread_id":"1"}})

# Display results

for event in events:

if 'agent' in event:

with st.expander("🔄 Processing Step", expanded=True):

content = event['agent']['messages'][0].content

if "Results:" in content:

# Display retrieved documents

st.markdown("### 📑 Retrieved Documents:")

docs_start = content.find("Results:")

docs = content[docs_start:]

st.info(docs)

elif 'generate' in event:

st.markdown("### ✨ Final Answer:")

st.success(event['generate']['messages'][0].content)

else:

st.warning("⚠️ Please enter a question first!")

with col2:

st.markdown("""

### 🎯 How to Use

1. Type your question in the text box

2. Click "Get Answer" to process

3. View retrieved documents and final answer

### 💡 Example Questions

- What are the latest advancements in AI research?

- What is the status of Project A?

- What are the current trends in machine learning?

""")

if __name__ == "__main__":

main()

Conclusion :

Agentic RAG and DeepSeek-R1 Function Call are innovative information retrieval and generation technologies that can take action autonomously. They can even complete complex tasks by breaking them down into smaller steps and calling the appropriate tools when needed, pushing the boundaries of AI in real-time decision-making and dynamic content generation. As these technologies evolve, their applications will become more diverse, driving innovation across numerous industries.

If this article might be helpful to your friends, please forward it to them.

Reference :

🧙♂️ I am an AI Generative expert! If you want to collaborate on a project, drop an inquiry here or book a 1-on-1 Consulting Call With Me.

I would highly appreciate it if you

❣ join to my Patreon: https://www.patreon.com/GaoDalie_AI

Book an Appointment with me:https://topmate.io/gaodalie_ai

Support the Content (every Dollar goes back into the video):https://buymeacoffee.com/gaodalie98d

Subscribe to the Newsletter for free:https://substack.com/@gaodalie

Top comments (0)