You’ll often notice in various projects that code behaves differently depending on the environment. That is not ideal: code should behave the same in every environment, because that makes it easier to debug issues, test, and refactor.

In our case, we stored uploaded images in an AWS S3 bucket on the production site. In staging, however, we stored them on DigitalOcean, and locally in the public folder, somehow. The code for that looked like this:

This is how we improved it.

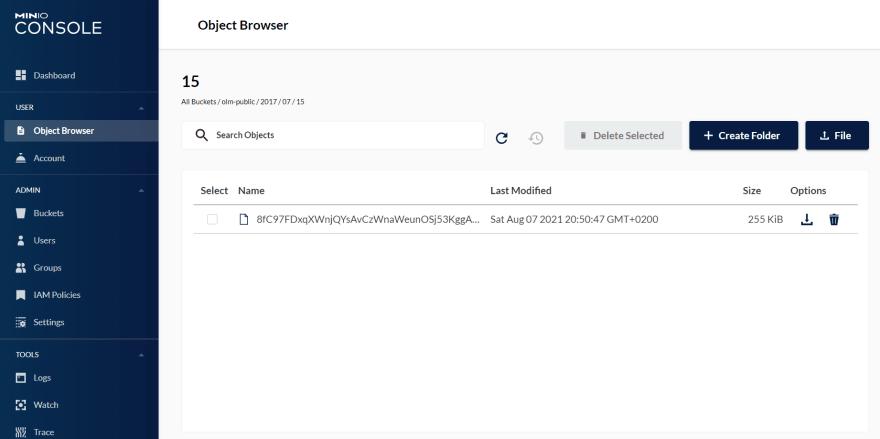

Install and configure Minio

Minio is an open-source object storage server with an Amazon S3 compatible API. If you’re using Homestead as your working environment, you’re super lucky; Minio is pretty easy to install, barely an inconvenience. Follow the Minio instructions in the Laravel Homestead docs, and you should be ready for the next step.

Refactor the uploading code

Minio will allow you to work with the same ‘interface’ as S3 when uploading files. You only need to apply different configuration values to your environments. This is the Laravel way. This is the way xD. With that said, our code now looks like this.
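As a rough sketch of the idea (not the project's actual code), uploading through Laravel's `s3` disk could look something like the helper below. The function name `storeUploadedImage` and the `images` directory are hypothetical; the sketch assumes a Laravel application with the default `s3` disk configured.

```php
<?php

use Illuminate\Http\UploadedFile;
use Illuminate\Support\Facades\Storage;

/**
 * Hypothetical helper: store an uploaded image on the "s3" disk and return its URL.
 * The same call talks to Minio locally and to S3 (or DigitalOcean) elsewhere,
 * depending only on the AWS_* values in each environment's .env file.
 */
function storeUploadedImage(UploadedFile $file): string
{
    $disk = Storage::disk('s3');

    // putFile() generates a unique filename and returns the stored path,
    // or false if the write fails.
    $path = $disk->putFile('images', $file, 'public');

    if ($path === false) {
        throw new RuntimeException('Could not store the uploaded image.');
    }

    // The URL format depends on the disk configuration (endpoint, bucket, path style).
    return $disk->url($path);
}
```

In a controller this might be called as `storeUploadedImage($request->file('photo'))`, where `photo` is an assumed form field name. The point is that there are no environment checks left in the code itself.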

Two little gotchas

You might run into some issues on the first try. According to the docs, you should be able to access Minio on http://localhost:9600. If that doesn't work, try http://192.168.10.10:9600, and set AWS_ENDPOINT=http://192.168.10.10:9600 in your .env as well.

Secondly, the docs say you should use the 'use_path_style_endpoint' => true configuration. If you're not sure what that means, it simply puts the bucket name in the URL path. Locally, your URLs will look like this: http://192.168.10.10:9600/bucket/image.jpg. On production, they will look like this: https://s3.eu-west-1.amazonaws.com/bucket/image.jpg. You might not want that, especially if your existing images already use a different URL format.

What worked for us was just removing the use_path_style_endpoint configuration key. This way, the URLs had the bucket name in the path on our local environments, and in the domain part on staging and production, like this: https://bucket.s3.eu-west-1.amazonaws.com/image.jpg. And yes, you can also use DigitalOcean with the same configuration.
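For reference, here is roughly what the `s3` disk in `config/filesystems.php` might look like with that key removed. This is a sketch based on Laravel's default disk layout, not the project's exact file; the values come from each environment's .env.

```php
<?php

// config/filesystems.php (excerpt, assuming Laravel's default s3 disk layout)
return [
    'disks' => [
        's3' => [
            'driver'   => 's3',
            'key'      => env('AWS_ACCESS_KEY_ID'),
            'secret'   => env('AWS_SECRET_ACCESS_KEY'),
            'region'   => env('AWS_DEFAULT_REGION'),
            'bucket'   => env('AWS_BUCKET'),
            'url'      => env('AWS_URL'),
            // Left unset for AWS S3 itself; points at Minio locally,
            // e.g. http://192.168.10.10:9600 on Homestead.
            'endpoint' => env('AWS_ENDPOINT'),
            // 'use_path_style_endpoint' is intentionally left out here.
        ],
    ],
];
```

With this in place, only the AWS_* values differ between local, staging, and production.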

The code used for illustration is taken from the OpenLitterMap project. They’re doing a great job creating the world’s most advanced open database on litter, brands & plastic pollution. The project is open-sourced and would love your contributions, both as users and developers.

Originally published at https://genijaho.dev.
