Reap What You Sow

IaC, Terraform, & Azure DevOps

The Waiting Game

In the past I have worked in environments that took ages to provision infrastructure. I’m not talking days here; I mean it took a minimum of six months – and that’s if you had a lot of political capital to spend. The majority of requests took about a year and required a minimum of twelve meetings and four 30-page packets of paperwork that needed to be signed by multiple directors.

After everything was approved, the virtual machines were manually provisioned to the minimal specifications. This was true even for development servers. Once provisioned, it was not unusual for the servers to be configured incorrectly. I have seen everything fat-fingered, from RAM settings to time zones.

Well, guess what? There is a much better way to provision infrastructure in modern environments.

Infrastructure as Code

Infrastructure as Code, or IaC, is the process of provisioning and managing infrastructure through descriptive code that defines exactly what should be created, updated, or destroyed, instead of using traditional manual configuration tools.

By bringing current best practices of software engineering into the infrastructure world, IaC offers many benefits over the “old way” of doing things.

- Reduced Risk

- Consistency

- Increased Speed

- Decreased Cost

By removing the human factor from the provisioning process and putting the code in a repository with a peer-review process, you begin to eliminate the mistakes that people are prone to make. Using the same code base and automated process to deploy your infrastructure – every time – increases consistency, while also increasing the speed of provisioning. This in turn frees up your engineers to focus on solving more complex problems, which reduces cost. Engineers aren’t cheap, so the less time they spend on menial work, the better.

Terraform

In this post I will be using Terraform to provision and manage my infrastructure in Azure. Terraform is an open source tool for creating, updating, and versioning infrastructure safely and efficiently. It supports Microsoft Azure, Amazon Web Services, Google Cloud Platform, IBM Cloud, VMware vSphere and many others.

Getting Started

Install the latest version of Terraform from the official Terraform downloads page.

Install the latest version of the Azure CLI from the official Microsoft documentation.

Once those are installed, make your way over to Azure DevOps. This is where we will store our code and create our build/release pipeline. If you have never used Azure DevOps I highly recommend that you give it a try. I’m not going to go over Azure DevOps in detail as it is outside of the scope of this post.

Deploying infrastructure into Azure from Azure DevOps requires an Azure Resource Manager service connection. Follow the Azure DevOps documentation on service connections to create one.

Create a new repository and select Terraform as the gitignore file:

Clone this repository to your computer and create a new file called main.tf. Write the following code:

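    terraform {
      required_version = ">= 0.11"
      # Store Terraform state in an Azure Blob Storage container.
      backend "azurerm" {
        # Values wrapped in "__" are tokens replaced by the release pipeline.
        storage_account_name = "__terraformstorageaccount__"
        container_name       = "terraform"
        key                  = "terraform.tfstate"
        access_key           = "__storagekey__"
      }
    }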

This block tells Terraform to use version 0.11 or later and to store its state in Azure Blob Storage through the azurerm backend. You can read more about storing Terraform state in Azure in the official documentation. Some of the values have “__” as a prefix and suffix; that is how we mark the tokens that will be replaced with variables stored in our pipeline.

Write the below code:

    resource "azurerm_resource_group" "rg" {
      name     = "__resource_group__"
      location = "__location__"
    }

This block tells Terraform to create a new resource group, with the name and location supplied by pipeline variables.

Write the below code:

    resource "azurerm_storage_account" "functions_storage" {
      name                     = "__functions_storage__"
      resource_group_name      = "${azurerm_resource_group.rg.name}"
      location                 = "${azurerm_resource_group.rg.location}"
      account_tier             = "Standard"
      account_replication_type = "LRS"
    }

The above block tells Terraform to create a new storage account. If you look closely you will see that we are setting the resource_group_name and location a little differently. What we are doing is using configuration values from the previously created resource group to define configuration values on our storage account. We want everything in the same location to reduce network traffic and we want to use the resource group that we just created in the previous block of code.

The gist of this is pretty simple: you can access the configuration values and outputs of created resources in other parts of your code.
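
To make that idea concrete, here is a toy Python model of how a reference such as ${azurerm_resource_group.rg.name} could be resolved against the attributes of a resource that already exists. It is only a sketch of the concept and not how Terraform is actually implemented; the `created` dictionary, the `resolve` helper, and the values demo-rg and centralus are all made up for illustration.

```python
import re

# Hypothetical attributes of a resource group that has already been created.
created = {
    "azurerm_resource_group.rg": {"name": "demo-rg", "location": "centralus"},
}

# Matches references of the form ${type.name.attribute}.
REFERENCE = re.compile(r"\$\{(\w+\.\w+)\.(\w+)\}")


def resolve(value, state):
    """Replace each ${type.name.attribute} reference with the stored attribute."""
    return REFERENCE.sub(lambda m: state[m.group(1)][m.group(2)], value)


# The two settings the storage account borrows from the resource group.
storage_account = {
    "resource_group_name": "${azurerm_resource_group.rg.name}",
    "location": "${azurerm_resource_group.rg.location}",
}

resolved = {key: resolve(value, created) for key, value in storage_account.items()}
print(resolved)
```

Because the storage account refers to the resource group, Terraform can also infer that the resource group has to be created first.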

Write the below block of code:

    resource "azurerm_app_service_plan" "functions_appservice" {
      name                = "__functions_appservice__"
      location            = "${azurerm_resource_group.rg.location}"
      resource_group_name = "${azurerm_resource_group.rg.name}"
      kind                = "FunctionApp"
      sku {
        tier = "Dynamic"
        size = "Y1"
      }
    }

We are now provisioning a new App Service Plan in Azure, configured with the SKU for the consumption-based plan (tier Dynamic, size Y1), since that is the plan our Azure Functions will run on.

Write the below block of code:

    resource "azurerm_application_insights" "functions_appinsights" {
      name                = "__functions_appinsights__"
      location            = "${azurerm_resource_group.rg.location}"
      resource_group_name = "${azurerm_resource_group.rg.name}"
      application_type    = "Web"
    }

Now we are provisioning Application Insights to provide metrics and error logging for our Azure Functions.

Write the below block of code:

    resource "azurerm_function_app" "function_app" {
      name                      = "__puzzleplates_functions__"
      location                  = "${azurerm_resource_group.rg.location}"
      resource_group_name       = "${azurerm_resource_group.rg.name}"
      app_service_plan_id       = "${azurerm_app_service_plan.functions_appservice.id}"
      storage_connection_string = "${azurerm_storage_account.functions_storage.primary_connection_string}"
      # Point the Functions App at the Application Insights resource created above.
      app_settings = {
        "APPINSIGHTS_INSTRUMENTATIONKEY" = "${azurerm_application_insights.functions_appinsights.instrumentation_key}"
      }
    }

This section creates the actual Azure Functions App and adds the instrumentation key for the previously created AppInsights.

Commit and push your changes back to the remote repository.

Back To Azure DevOps

In Azure DevOps create a new build pipeline and select “use the classic editor” at the bottom.

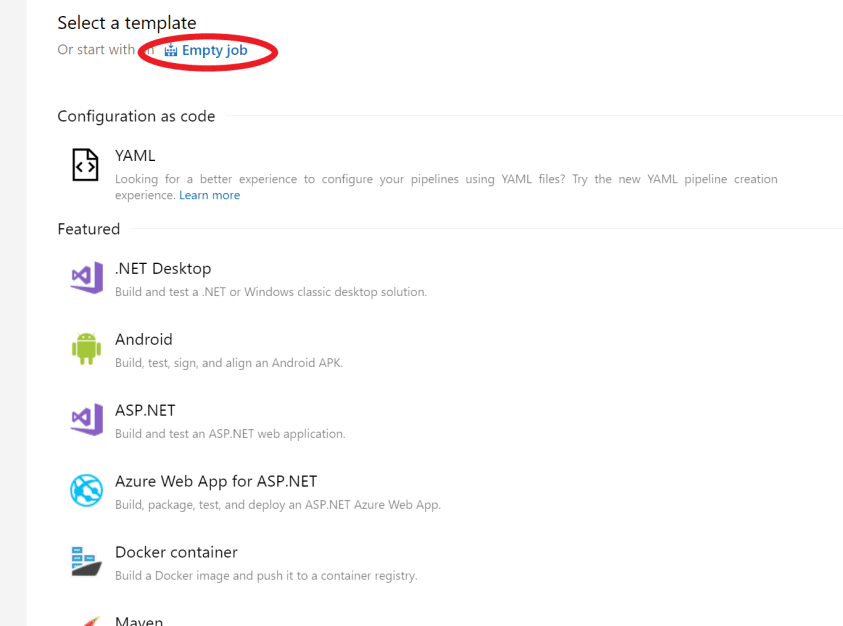

Select the Empty Job template at the top:

Add the Copy Files task to Agent job 1 with the same settings:

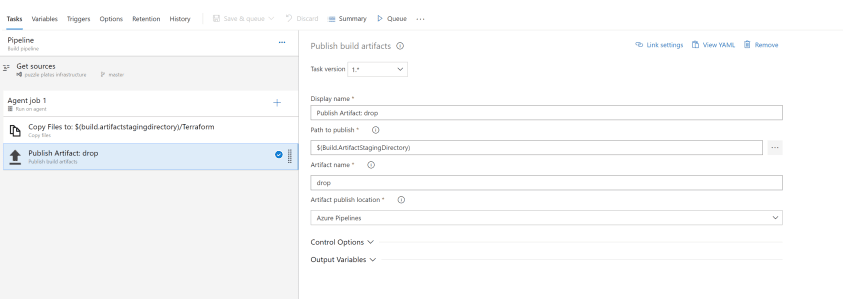

Add the Publish Artifact – drop task:

Go to Triggers and check Enable Continuous Integration. With CI enabled, every code change pushed to the master branch will trigger this build:

Hit Save & Queue. This will save your pipeline and kick off a build. Once the build is successful, hit the Release button in the upper right corner:

In Stage 1 select “1 job, 0 task” to edit the tasks in Stage 1 of the release pipeline:

Add the Azure CLI task with the below settings. For the Azure Subscription make sure you use the service connection you created earlier:

Add the following code to the inline script:

    call az group create --location centralus --name $(terraformstoragerg)
    call az storage account create --name $(terraformstorageaccount) --resource-group $(terraformstoragerg) --location centralus --sku Standard_LRS
    call az storage container create --name terraform --account-name $(terraformstorageaccount)

This code creates the resource group, storage account, and blob container that store Terraform’s state.

Afterwards add the Azure PowerShell task with the following settings:

Add the below code to the inline script:

    $key = (Get-AzureRmStorageAccountKey -ResourceGroupName $(terraformstoragerg) -AccountName $(terraformstorageaccount)).Value[0]
    Write-Host "##vso[task.setvariable variable=storagekey]$key"

This code saves the storage key into the release pipeline.
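
The Write-Host line works because it prints an Azure DevOps logging command, which the agent picks up from the task output. As a rough illustration of the string format only, the Python sketch below builds the same kind of command. The `set_variable_command` helper and the example value are hypothetical, and the pipeline in this post uses the PowerShell version above, not this code.

```python
def set_variable_command(name, value, secret=False):
    """Build a task.setvariable logging command string for Azure DevOps."""
    properties = f"variable={name}"
    if secret:
        # issecret=true is a documented property that masks the value in logs.
        properties += ";issecret=true"
    return f"##vso[task.setvariable {properties}]{value}"


# Printing the command from a task is what hands the value to the pipeline.
print(set_variable_command("storagekey", "example-key-value"))
```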

Add the Replace Tokens task (installed from the Marketplace) to the pipeline. This task will replace every value that has the “__” prefix and suffix with the matching variable from the release pipeline:
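
If you are curious what that replacement amounts to, the Python sketch below mimics the idea on a small piece of text. It is not the Marketplace task's code; the `replace_tokens` function and the sample values are hypothetical, and leaving unknown tokens untouched is simply a choice made for this sketch.

```python
import re

# A token is a name wrapped in double underscores, for example __location__.
TOKEN = re.compile(r"__(\w+?)__")


def replace_tokens(text, variables):
    """Swap each __name__ token for variables[name]; keep unknown tokens as-is."""
    def substitute(match):
        name = match.group(1)
        return variables.get(name, match.group(0))
    return TOKEN.sub(substitute, text)


template = 'name     = "__resource_group__"\nlocation = "__location__"'
variables = {"resource_group": "demo-rg", "location": "centralus"}
print(replace_tokens(template, variables))
```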

Then add three Run Terraform tasks: one for Init, one for Plan, and one for Apply. Terraform Init initializes Terraform on the agent. Terraform Plan compares existing infrastructure to what is in the code you are deploying and determines if it needs to create, update, or destroy resources. Terraform Apply applies the plan to the infrastructure.
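
To get a feel for what Plan is deciding, here is a deliberately simplified Python sketch that compares a "current" and a "desired" set of resources and labels each one create, update, or destroy. Real Terraform does far more than this (it reads its state file, tracks dependencies, and sometimes has to replace a resource instead of updating it), so treat the `plan` function and the sample data as hypothetical.

```python
def plan(current, desired):
    """Label each resource create, update, or destroy by comparing two states."""
    actions = {}
    for name, config in desired.items():
        if name not in current:
            actions[name] = "create"
        elif current[name] != config:
            actions[name] = "update"
    for name in current:
        if name not in desired:
            actions[name] = "destroy"
    return actions


# Made-up example: one changed resource, one new, one no longer in the code.
current = {
    "rg": {"tags": {"env": "dev"}},
    "old_storage": {"tier": "Standard"},
}
desired = {
    "rg": {"tags": {"env": "prod"}},
    "functions_storage": {"tier": "Standard"},
}
print(plan(current, desired))
```

Apply then carries out whatever the plan lists, which is why it is worth reading the Plan output before you approve a release.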

Terraform Init:

Next you will have to create and set your release variables:

Afterwards hit Save in the upper right corner:

Click on the Continuous Deployment Trigger (Lightning Bolt) under Artifacts. Then enable continuous deployment. Now every time your build creates a new artifact a release will deploy your infrastructure into Azure:

Click Save again and then click Create release to the right of the save button:

If everything went according to plan, you should now have the following deployed in Azure:

- Resource Group

- Storage Account

- App Service Plan

- Application Insights

- Azure Functions App
