This is a submission for the Algolia MCP Server Challenge

What I Built

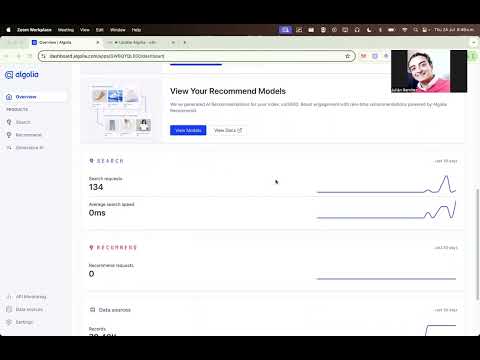

The primary use case is automating the enrichment of Inc5000 company data stored in an Algolia index. Inc5000 is the annual list of the fastest-growing private companies in the United States, published by Inc. Magazine. The data includes attributes such as company name, industry, growth rate, and years on the list (yrs_on_list). The goal is to enhance this dataset with AI-generated insights to make it more valuable for search, analysis, or business intelligence. The enrichment is powered by Anthropic's Claude model, integrated via API calls within an n8n workflow.

Demo

Repo

Algolia Node.js MCP – n8n Integration Guide

Watch the video above for a walkthrough of the n8n workflow integration process.

🚀 Quick Start: n8n Workflow Integration

This guide explains how to connect Algolia Node.js MCP to your custom n8n workflows, enabling you to trigger automations from Claude Desktop or any MCP-compatible client.

1. Clone and Install MCP

git clone https://github.com/Juls95/algoliaMCP.git

cd algoliaMCP

npm install

2. Configure and Start MCP

- Authenticate with Algolia:

node src/app.ts authenticate

- Start the MCP server:

node src/app.ts start-server

3. Configure Claude Desktop (or MCP Client)

Add this to your Claude Desktop configuration:

{
  "mcpServers": {
    "algolia-mcp": {
      "command": "<PATH_TO_BIN>/node",
      "args": [
        "--experimental-strip-types",
        "--no-warnings=ExperimentalWarning",
        "<PATH_TO_PROJECT>/src/app.ts"
      ]
    }
  }
}

Restart Claude Desktop after saving changes.

🆕 How the n8n Workflow Tool Works

- The tool `triggerN8nWorkflow` is included by default and registered automatically.

- …

How I Utilized the Algolia MCP Server

- Algolia MCP Server: A local server that exposes Algolia's APIs (search, update, analysis) as tools accessible via natural language.

- Claude Desktop: Anthropic's app that integrates MCP as a custom tool, allowing users to converse with Claude and invoke Algolia actions dynamically.

- Launch MCP Server: Run the Algolia MCP locally, configured with Algolia API keys. It listens for prompts and translates them into API calls.

- Integrate with Claude Desktop: Add MCP as a tool in Claude Desktop's settings, enabling Claude to use it for tasks involving Algolia.

- Issue Natural Language Commands: In Claude Desktop, prompt something like: "Search Algolia for companies with yrs_on_list:17, enrich with research on trends and categories, then update the index."

- Claude Processes the Prompt:

  - Claude interprets the intent.

  - Invokes MCP tools: first a search tool to fetch the data, then it parses the results and iterates over each item.

  - Uses its own reasoning or API calls for enrichment (e.g., generating summaries/trends).

  - Calls MCP's update tool to push changes back to Algolia.

Key Takeaways

How It Worked Step by Step

The system operates in two main phases: the core n8n workflow that fetches and updates data from Algolia, and the integration with Claude Desktop + Algolia MCP for agentic enhancements. Below is a detailed, sequential breakdown.

- Setup Overview:

  - Wait for the message from Claude with the commands from the user.

  - Link to n8n: The agentic flow can trigger or extend the n8n workflow. For example, Claude sends information to n8n using the MCP for further agentic processing.

- Fetching Data from Algolia:

  - An HTTP Request node sends a POST request to Algolia's search endpoint: `/1/indexes/inc5000/query`.

  - The request body includes filters like `"yrs_on_list:17"` to target companies with exactly 17 years on the list.

  - Additional parameters:

    - `distinct=1`: Ensures only one record per company (deduplication).

    - Custom ranking: `desc(year)` to prioritize the most recent entries.

    - `hitsPerPage:5`: Limits results per page for manageable batches.

    - `attributesToRetrieve: ["objectID", "company_name", "industry", "growth_rate", "yrs_on_list"]`: Retrieves only essential fields to optimize performance.

  - Pagination is handled by starting with `page=0`. After processing a page, an IF condition checks if `nbHits > (page+1)*hitsPerPage`; if true, the page increments, and the workflow loops back to fetch the next page.
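As a rough sketch of the request body and the pagination check described above, assuming the search parameters are sent as a JSON object in the POST body. The helper names `buildSearchBody` and `hasNextPage` and the `SearchBody` interface are hypothetical, and the `desc(year)` custom ranking is assumed to be configured on the index rather than passed with the query.

```typescript
// Search parameters named in the post; the interface itself is illustrative.
interface SearchBody {
  filters: string;
  distinct: number;
  hitsPerPage: number;
  page: number;
  attributesToRetrieve: string[];
}

// Hypothetical helper: builds the JSON body for POST /1/indexes/inc5000/query.
function buildSearchBody(page: number, hitsPerPage: number = 5): SearchBody {
  return {
    filters: "yrs_on_list:17",
    distinct: 1,
    hitsPerPage,
    page,
    attributesToRetrieve: [
      "objectID",
      "company_name",
      "industry",
      "growth_rate",
      "yrs_on_list",
    ],
  };
}

// Mirrors the IF node: more pages remain while nbHits exceeds the hits covered so far.
function hasNextPage(nbHits: number, page: number, hitsPerPage: number): boolean {
  return nbHits > (page + 1) * hitsPerPage;
}
```

With 12 matching records and 5 hits per page, the check is true after pages 0 and 1 and false after page 2, so three pages are fetched in total.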

- Iterating Over Fetched Records:

  - The fetched data (an array of "hits") is split using an Item Lists node to break it into individual items.

  - A Loop Over Items node (using splitInBatches v3) processes each item one by one with `batchSize=1`. This ensures sequential, reliable handling, avoiding parallel API overloads.
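To illustrate what `batchSize=1` means for the loop, here is a minimal stand-in for the batching step. It is not n8n's implementation; the function `splitInBatches` below only borrows the node's name to show how a list of hits turns into one-item batches.

```typescript
// Illustrative stand-in for the Loop Over Items node: chunk items into batches.
function splitInBatches<T>(items: T[], batchSize: number): T[][] {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new Error("batchSize must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += batchSize) {
    // slice never reads past the end, so the last batch may be shorter.
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}
```

With `batchSize` set to 1, every batch holds exactly one record, so each downstream call (Claude, then Algolia) handles a single company at a time.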

- Enriching Each Company with Claude API:

  - For each company record, an HTTP Request node calls Anthropic's Claude API: POST `/v1/messages`.

  - Model used: `claude-3-5-sonnet-20240620`.

  - The prompt instructs Claude to generate:

    - A 100-word summary based on the company's details (e.g., name, industry, growth rate).

    - Predicted trends (e.g., market expansions or challenges).

    - Categories (e.g., sector classifications).

    - Tags and scores (e.g., 'Fast-Growing' with a score of 8/10, 'Tech Innovator' with 7/10).

  - The prompt specifies JSON output for easy parsing.
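The enrichment call could be assembled roughly as follows. This is a sketch, not the workflow's actual node configuration: the prompt wording, the `max_tokens` value, the `Company` interface, and the helper name `buildClaudeRequest` are all assumptions. The endpoint, model name, and the `x-api-key` and `anthropic-version` headers follow Anthropic's Messages API.

```typescript
// Fields retrieved from Algolia in the post; the value types are assumed.
interface Company {
  objectID: string;
  company_name: string;
  industry: string;
  growth_rate: number | string;
  yrs_on_list: number;
}

// Hypothetical helper: describes the HTTP request without sending it.
function buildClaudeRequest(company: Company, apiKey: string) {
  // Illustrative prompt; the post does not show the exact wording used.
  const prompt = [
    "Return only a JSON object with the keys summary, trends, categories, and tags.",
    "summary: about 100 words. tags: objects with a name and a score out of 10.",
    `Company: ${company.company_name}`,
    `Industry: ${company.industry}`,
    `Growth rate: ${company.growth_rate}`,
    `Years on the Inc5000 list: ${company.yrs_on_list}`,
  ].join("\n");

  return {
    url: "https://api.anthropic.com/v1/messages",
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: {
      model: "claude-3-5-sonnet-20240620",
      max_tokens: 1024, // assumed limit, not taken from the post
      messages: [{ role: "user", content: prompt }],
    },
  };
}
```

Asking for JSON only in the prompt keeps the later parsing step simple, though the response should still be validated before it is written back to the index.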

- Parsing the Claude Response:

  - A Set node extracts fields from Claude's JSON response: `summary`, `trends`, `categories`, `objectID`.

  - This node merges the new enriched data with the original Algolia record.
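A minimal sketch of the parsing and merge step, assuming Claude returns its JSON inside the first text block of the Messages API response and that `trends` and `categories` are arrays of strings. The names `parseEnrichment` and `mergeRecord` are hypothetical; in the workflow this logic lives in a Set node.

```typescript
// Subset of the Messages API response used here.
interface ClaudeMessage {
  content: { type: string; text?: string }[];
}

// Assumed shape of the enrichment; the post does not specify field types.
interface Enrichment {
  summary: string;
  trends: string[];
  categories: string[];
}

// Pulls the JSON payload out of the first text block and normalizes it.
function parseEnrichment(response: ClaudeMessage): Enrichment {
  const block = response.content.find((b) => b.type === "text");
  if (!block || typeof block.text !== "string") {
    throw new Error("Claude response contains no text block");
  }
  const parsed = JSON.parse(block.text);
  return {
    summary: String(parsed.summary ?? ""),
    trends: Array.isArray(parsed.trends) ? parsed.trends.map(String) : [],
    categories: Array.isArray(parsed.categories) ? parsed.categories.map(String) : [],
  };
}

// Merges the enrichment into the original record, keeping its objectID.
function mergeRecord<T extends { objectID: string }>(original: T, enrichment: Enrichment): T & Enrichment {
  return { ...original, ...enrichment };
}
```

Keeping the `objectID` from the original record rather than from Claude's output avoids updating the wrong object if the model echoes it back incorrectly.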

- Updating Algolia with Enriched Data:

  - Another HTTP Request node uses Algolia's partialUpdateObject API (single-object endpoint) for reliability.

  - It sends updates one by one using the Admin API Key.

  - This avoids batch failures; if one update fails, others continue.

  - Key fix: Ensured the JSON body is correctly formatted without unnecessary `JSON.parse` on arrays.
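The single-object update can be pictured like this. It is a sketch under assumptions: `buildPartialUpdateRequest` is a hypothetical helper, and the application ID and key are placeholders supplied by the caller. The path `/1/indexes/{indexName}/{objectID}/partial` and the two `X-Algolia-*` headers follow Algolia's REST API.

```typescript
// Hypothetical helper: describes one partial update without sending it.
function buildPartialUpdateRequest(
  appId: string,
  adminApiKey: string,
  indexName: string,
  objectID: string,
  attributes: Record<string, unknown>
) {
  return {
    url:
      `https://${appId}.algolia.net/1/indexes/` +
      `${encodeURIComponent(indexName)}/${encodeURIComponent(objectID)}/partial`,
    method: "POST",
    headers: {
      "X-Algolia-Application-Id": appId,
      "X-Algolia-API-Key": adminApiKey,
      "content-type": "application/json",
    },
    // Serialize once: arrays such as trends stay real JSON arrays, not strings.
    body: JSON.stringify(attributes),
  };
}
```

Serializing the whole attribute object in one place is one way to avoid the double-encoding problem mentioned above, where an array ends up in the index as a quoted string.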

- Aggregating Enriched Records Across Iterations and Pages:

  - As the loop processes items and pages, a Merge node (in "All Item Data" mode) accumulates all enriched records into a global array.

  - A Set node helps manage this aggregation, ensuring data from multiple pages and iterations is collected without loss.
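The accumulation across pages can be sketched as a plain loop. This is a synchronous stand-in for the n8n Merge and Set nodes, not their implementation: `collectAllPages` and the `fetchPage` callback are hypothetical, and a real workflow would await an HTTP call per page.

```typescript
// Minimal shape of one search result page.
interface Page<T> {
  hits: T[];
  nbHits: number;
}

// Walks pages from 0 upward and appends every hit to one array.
function collectAllPages<T>(
  fetchPage: (page: number) => Page<T>,
  hitsPerPage: number
): T[] {
  const all: T[] = [];
  let page = 0;
  while (true) {
    const result = fetchPage(page);
    all.push(...result.hits);
    // Stop on an empty page or once nbHits no longer exceeds the hits covered.
    if (result.hits.length === 0 || result.nbHits <= (page + 1) * hitsPerPage) {
      break;
    }
    page += 1;
  }
  return all;
}
```

The stop condition is the negation of the `nbHits > (page+1)*hitsPerPage` check used for pagination, with an extra guard against an empty page.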

- Sending Completion Email:

  - Once all processing is done, a Send Email node (v2.1) uses SMTP (e.g., Gmail with App Password) to notify the user.

Key Challenges Resolved During Implementation

- Iteration Issues: Initial problems with looping over arrays were fixed by using Item Lists to split hits and setting batchSize=1 for per-item processing.

- Aggregation: Switched to "All Item Data" mode in Merge nodes, with careful pagination accumulation to build a complete list.

- Update Failures: Batch updates were unreliable, so switched to single-object endpoints; also corrected JSON body handling.

- Email Formatting: Used array methods (.map/.join) to create readable lists in HTML.
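The `.map`/`.join` approach to the email body might look like the following. The record shape, the HTML layout, and the helper names `escapeHtml` and `buildEmailHtml` are assumptions for illustration; the post does not show the actual template.

```typescript
// Assumed subset of an enriched record used in the email.
interface EnrichedCompany {
  company_name: string;
  summary: string;
  categories: string[];
}

// Escapes the characters that would otherwise break the HTML.
function escapeHtml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// One <li> per company via map, glued together with join.
function buildEmailHtml(records: EnrichedCompany[]): string {
  const items = records
    .map(
      (r) =>
        `<li><strong>${escapeHtml(r.company_name)}</strong> ` +
        `(${r.categories.map(escapeHtml).join(", ")}): ${escapeHtml(r.summary)}</li>`
    )
    .join("");
  return `<p>Enriched records: ${records.length}</p><ul>${items}</ul>`;
}
```

Escaping matters here because the summaries are model-generated text and may contain characters such as `&` or `<`.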

Thanks for reading!
