Let me start by stating: I neither dismiss AI as a fad nor deny the astonishing progress the field and its researchers have made. Compare the old Google Translate with today's version and the advances are obvious at once. This text is only about good, healthy skepticism and being aware of the current limitations.

AI is everywhere.

Every blog post seems to be about AI.

Every video seems to be about AI.

Every new tool seems to be using AI.

With ChatGPT's public release in November 2022, things moved even faster.

The goal of this article is to take a step back and separate fiction from reality, and hype from real-world business cases.

One note: my work focuses on regulated industries in the EU, e.g., banks and insurance companies. So some concerns I raise may not apply to you.

Topics we ignore

First things first. AI is a complex and vast topic. Its proponents and critics pull the conversation anywhere from "silver bullet and panacea" to "existential risk".

We exclude the following trains of argument from the rest of the article:

- Ethics: AI is tricky from an ethical point of view. Consider face recognition: innocent enough in a photo application, but potentially problematic when used to find dissidents in a crowd.

- Control problem: building an AI is one thing. Making sure it benefits humanity and helps us prosper is another.

- Artificial General Intelligence: current AIs are rather narrow in application and scope. They can play Go. They can generate funny images. But they cannot do everything. An Artificial General Intelligence could tackle a wide range of tasks, surpassing human capabilities. The implications would be significant and are outside the scope of this article.

- Existential risk: The fear that "we might be to an AI as an ant is to us" runs deep. Let's just point to Bostrom's book Superintelligence: Paths, Dangers, Strategies and to Harris' podcast Making Sense, both of which explore this in depth.

These issues cannot be dismissed easily. They are urgent and important. Here, however, we want to focus on a different angle. The references above should serve as a good starting point for exploring their implications.

The AI hype-train

AI has seen ups and downs since its inception in computer science research.

Since the 2010s, with giant data sets and computing power of unprecedented scale, AI has seen an incredible boost. Methods like deep learning and deep reinforcement learning promise things undreamed of before. Andrew Ng, known for his extensive work in the field and as a co-founder of Coursera, wrote in Harvard Business Review in 2016:

If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future. (Andrew Ng)

With the release of ChatGPT in November 2022, the general public jumped on the hype train. Google Trends data for "Künstliche Intelligenz" (German for "artificial intelligence") shows a dramatic spike in interest since the release.

And the promises just keep growing. At the beginning of April 2023, the German IT news portal Golem published an article citing a research paper. The claim: the paper's author was able to predict stock market movements using nothing but ChatGPT.

According to the same article, Goldman Sachs estimates that 35% of all jobs in the financial industry are jeopardized by AI.

The consulting company McKinsey compared the economic impact of AI to the invention of the steam engine.

Together these elements may produce an annual average net contribution of about 1.2 percent of activity growth between now and 2030. The impact on economies would be significant if this scenario were to materialize. In the case of steam engines, it has been estimated that, between 1850 and 1910, they enabled productivity growth of 0.3 percent per year.

Just imagine: the annual impact of AI might be four times that of the steam engine (1.2 versus 0.3 percent per year), the technology that revolutionized the world economy.
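The "four times" compares annual rates. How much each rate adds up to also depends on how long it holds. The sketch below compounds both rates; the 1850 to 1910 window comes from the quote, while 2018 as the start of the AI window is an assumption (the quote only says "between now and 2030").

```python
"""Compare annual rates and cumulative effects of two productivity-growth estimates.

Rates come from the quoted McKinsey passage. The 60-year steam window is from the
quote; the 12-year AI window (2018 to 2030) is an assumption for illustration.
"""


def cumulative_growth(annual_rate: float, years: int) -> float:
    """Return total growth after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years - 1.0


AI_RATE = 0.012      # 1.2 percent per year (McKinsey estimate for AI)
STEAM_RATE = 0.003   # 0.3 percent per year (steam engines, 1850-1910)

AI_YEARS = 2030 - 2018      # assumption: the window starts in 2018
STEAM_YEARS = 1910 - 1850   # 60 years, per the quote

annual_ratio = AI_RATE / STEAM_RATE
ai_total = cumulative_growth(AI_RATE, AI_YEARS)
steam_total = cumulative_growth(STEAM_RATE, STEAM_YEARS)

print(f"annual ratio:    {annual_ratio:.1f}x")
print(f"AI, {AI_YEARS} years:    {ai_total:.1%}")
print(f"steam, {STEAM_YEARS} years: {steam_total:.1%}")
```

Under these assumptions the AI estimate compounds to roughly 15 percent and the steam-engine figure to roughly 20 percent: a fourfold annual rate does not automatically mean a fourfold total impact.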

Last but not least, research using the newest generation of GPT, GPT-4, claims to have found "sparks of artificial general intelligence".

So, this is it. AI is finally on the verge of the dominance it promised for so long. Do data and compute power equal the singularity we dreamed of (or feared)?

Over-promise and under-deliver

Herb Simon, a pioneer in AI research, predicted:

Machines will be capable, within twenty years, of doing any work man can do. (Herb Simon)

This was in 1965. We must not ridicule Simon for a bold prediction. The point is that we tend to overestimate (and underestimate) the impact of the trends we are working on.

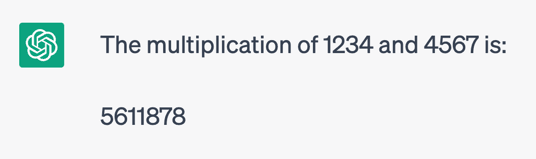

Going back to ChatGPT, its limitations are easy to see. Asking it to multiply two numbers produces a puzzling answer: according to ChatGPT, 1234 times 4567 is 5611878. Close, but wrong; the correct result is 5635678.
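Checking the quoted answer needs no AI at all. A few lines of ordinary integer arithmetic show exactly how far off it is:

```python
# Check the product ChatGPT reported for 1234 x 4567 with exact integer arithmetic.
chatgpt_answer = 5_611_878   # value quoted in the text
exact = 1234 * 4567          # Python integers are exact, no rounding involved

difference = exact - chatgpt_answer
relative_error = abs(difference) / exact

print(f"exact:   {exact:,}")
print(f"ChatGPT: {chatgpt_answer:,}")
print(f"off by:  {difference:,} ({relative_error:.2%})")
```

The answer misses by 23,800, or about 0.4 percent: close enough to look plausible, but not the result of an actual computation.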

Underneath ChatGPT is a language model, not understanding. ChatGPT does not understand multiplication, text, or even language. It has a complex internal model that it applies to prompts to generate answers. In that sense, it resembles an idiot savant.

ChatGPT is kind of this idiot savant but it really doesn't understand about truth. It's been trained on inconsistent data. It's trying to predict what they'll say next on the web, and people have different opinions. (Geoffrey Hinton)
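To make "predicting what comes next" concrete, here is a toy bigram model. It is a deliberately crude stand-in, not how GPT models work internally (they use large neural networks over subword tokens); it only shares the basic objective of predicting the next token from what came before. Fed an inconsistent corpus, it returns the most frequent continuation, with no notion of which one is true.

```python
"""A toy bigram 'language model': it predicts the next word purely from counts.

Drastically simplified illustration only; it shares with real LLMs just the
training objective of predicting what comes next.
"""
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if none was seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, deliberately inconsistent corpus: "people have different opinions".
corpus = [
    "the moon is made of rock",
    "the moon is made of cheese",
    "the moon is made of cheese",
]

model = train_bigrams(corpus)
print(predict_next(model, "of"))  # the majority continuation, true or not
```

The model answers "cheese" simply because that continuation appears more often, which is the point of Hinton's remark about inconsistent training data.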

The AI does not comprehend context, history, or abstract relations. A fun experiment is to paste in a legal text, say the GPL, and then ask questions about it.

Again, not to ridicule, but to point out the limitations.

So why the hype? Why is every second post on LinkedIn about the amazing powers of ChatGPT, and of AI in general?

There are many factors, but predominantly:

- Marketing: If your product or service is driven by AI, it gets attention. The same happened with the terms Agile and Cloud. "Agile Cloud Foobar" attracts more customers than plain "Foobar".

- Lack of understanding: AI is complex, and so are GANs, machine learning, and LLMs. Judging their capabilities and limitations is difficult, if not impossible, without understanding how these methods work.

- Media and the news cycle: The media work by grabbing attention. Every tiny research step ends up in a news report blown out of proportion.

Let's go back to the Golem headline above. Reading the underlying research and references carefully reveals an interesting detail:

He used ChatGPT to parse news headlines for whether they’re good or bad for a stock, and found that ChatGPT’s ability to predict the direction of the next day’s returns were much better than random, he said in a recent unreviewed paper. (CNBC)

The paper had not been peer-reviewed, and its results had not been challenged when the article was published. Reporting on it is like reporting on an arbitrary blog post: diligence gives way to attention-grabbing. ChatGPT is the hot topic, and predicting stocks with it sounds magical, so journalistic duties take a back seat.

The point is: we must look behind the ads. We need to read and analyze the references and the actual data.
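Whether a hit rate is "much better than random" is something a reader can check once the numbers are on the table. A minimal sketch using only Python's standard library: an exact one-sided binomial test against a coin flip. The counts are hypothetical and are not taken from the cited paper.

```python
"""Is a directional hit rate 'much better than random'? An exact binomial test.

The counts below are made up for illustration; they are NOT from the cited paper.
Null hypothesis: each up/down call is a coin flip (p = 0.5).
"""
from math import comb


def binomial_p_value(hits: int, trials: int, p: float = 0.5) -> float:
    """One-sided p-value: probability of at least `hits` successes by pure chance."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(hits, trials + 1)
    )


# Hypothetical example: 56 correct up/down calls out of 100 headlines.
hits, trials = 56, 100
print(f"hit rate: {hits / trials:.0%}, p-value: {binomial_p_value(hits, trials):.3f}")
```

With these made-up numbers, a 56 percent hit rate on 100 calls gives a p-value of roughly 0.14, which is not significant at conventional levels; the same hit rate on far more headlines could be. If SciPy is available, `scipy.stats.binomtest(k, n, p, alternative="greater")` performs the same test. Even a significant result says nothing about trading costs or whether the effect replicates.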

"Economic"-AI

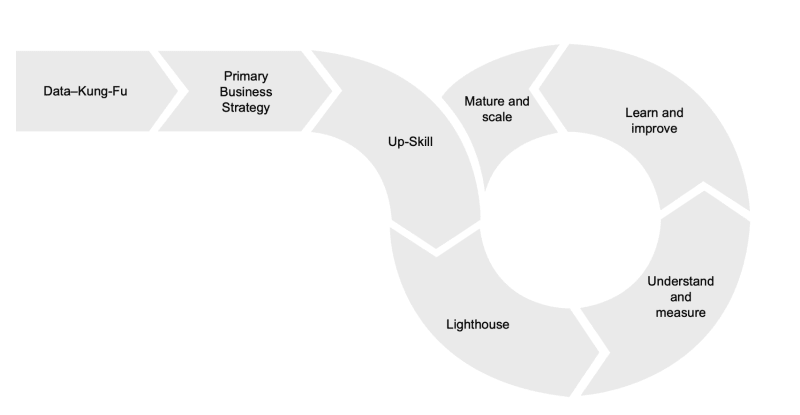

Instead of falling for false and overblown promises, we could go a different route, which may be called economic AI. The following illustration visualizes the underlying idea.

We use a narrow, focused process that starts with a good, business-driven reason for adopting AI methods and tools (primary business strategy). But there is one more essential step even before that: getting your data in order and accessible, i.e., data Kung-Fu.

Data Kung-Fu is about making business data flow between stances: accessible, agile, and bendable. We often see organizations either building hidden data silos without any APIs or easy means of access, or going the opposite way and building expensive, ungoverned, and ultimately useless data lakes. Both tend to go nowhere. A "data-as-a-product" approach, in contrast, leads to more promising results. Whatever we do, getting the data in shape is the foundation of any AI strategy.
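What a data-as-a-product descriptor might contain can be sketched in a few lines: a named owner, a documented access point, and a schema consumers can validate against. Every field name, the placeholder endpoint URL, and the validation rule here are hypothetical; actual data catalogs and data-contract tools define their own formats.

```python
"""Sketch of a data-product descriptor, as a data-as-a-product team might publish it.

All field names, the example endpoint, and the validation rule are hypothetical;
real data catalogs and data-contract tools define their own formats.
"""
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DataProduct:
    name: str
    owner: str                 # an accountable team, not "IT" in general
    endpoint: str              # how consumers access it (API, table, topic, ...)
    schema: dict[str, type]    # column name -> expected Python type
    tags: tuple[str, ...] = ()

    def validate(self, record: dict) -> list[str]:
        """Return the schema violations for one record (empty list if valid)."""
        problems: list[str] = []
        for column, expected in self.schema.items():
            if column not in record:
                problems.append(f"missing column: {column}")
            elif not isinstance(record[column], expected):
                problems.append(
                    f"{column}: expected {expected.__name__}, "
                    f"got {type(record[column]).__name__}"
                )
        return problems


customers = DataProduct(
    name="customer-master",
    owner="retail-banking-data-team",
    endpoint="https://data.example.com/customer-master/v1",  # placeholder URL
    schema={"customer_id": str, "country": str, "risk_score": float},
)

print(customers.validate({"customer_id": "C-001", "country": "DE", "risk_score": 0.2}))
print(customers.validate({"customer_id": "C-002", "risk_score": "high"}))
```

The first record passes; the second is reported as missing `country` and carrying a string where a float is expected. The design choice is that the owning team publishes the contract alongside the data, so consumers do not have to reverse-engineer a silo or dig through a lake.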

Now we can upskill the people in the organization so they become familiar with data methods like deep learning and with the relevant tooling and frameworks. This is essential for judging the applicability and results of different AI approaches.

Next, we build a lighthouse solution. This has to be a real, business-driven, and impactful first vertical slice. The goal is to prove that AI is an asset for the organization. Anybody can build an image-labeling application today, but building a machine-learning-supported loan application tool is a different animal. Governance, security, and compliance all come into play here and need to be solved. Only then do we know what we are dealing with.

Finally, we enter the improvement and growth loop. We understand and measure the impact of our solution, learn, improve our setup, and mature and scale the use of AI within the organization.

In essence, this leads to narrow, targeted applications of AI in a concrete business domain. These can range from automatically identifying customers in KYC (know your customer) processes to automatically processing incoming documents (intelligent document processing, IDP). None of these use cases will get you a front-page headline, but each can deliver a significant ROI.

Challenges: Trust and bias

Although economic AI gives us a realistic replacement for the inflated promises of AI, two central concerns remain:

- the trust in the results of an AI solution

- the bias sometimes found in an AI solution

Both are related (how can we trust a biased AI?), but need to be addressed differently.

Human-centric AI

The core idea is to move towards human-centric AI.

If we want an AI solution to be accepted, it has to meet three base requirements:

- we need the solution to be reliable

- we need the solution to be safe

- we need the solution to be trustworthy

This idea is visualized in the next diagram.

Human-centric AI supports full automation but ultimately leaves control and verification to humans. The human always stays in charge.
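One simple way to implement "automation, with the human in charge" is confidence-based routing: the model decides only when it is confident, everything else goes to a person, and every decision is logged for later verification. The threshold, the `Decision` record, and its field names are illustrative assumptions. Model confidence scores are often poorly calibrated, so a real threshold would need to be validated and monitored.

```python
"""Human-in-the-loop routing: automate confident cases, send the rest to a person.

The threshold, the Decision type, and the field names are hypothetical; in a
regulated setting the threshold would be set and monitored under governance.
"""
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: str          # what the model proposes, e.g. "approve" or "reject"
    confidence: float   # the model's score in [0, 1]
    decided_by: str     # "model" or "human-review"


def route(case_id: str, label: str, confidence: float,
          threshold: float = 0.9) -> Decision:
    """Accept the model's proposal only at or above `threshold`; otherwise escalate."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be in [0, 1], got {confidence}")
    decided_by = "model" if confidence >= threshold else "human-review"
    return Decision(case_id, label, confidence, decided_by)


# Every decision keeps the model's proposal and who decided, so humans can audit it.
audit_log = [
    route("loan-001", "approve", 0.97),
    route("loan-002", "reject", 0.62),
]
for decision in audit_log:
    print(decision)
```

Even the automated decisions stay verifiable: the log records what the model proposed and how confident it was, so a reviewer can audit them after the fact.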

This is what we call the AI-as-a-valet analogy. Instead of meeting an AI eye to eye, or even regarding it as a human partner, we reduce AI to what it is meant to be: a very powerful tool, but in the end a tool.

Imagine our phones equipped with a multitude of narrow, specialized AIs, each excelling at one particular purpose: monitoring our health, optimizing our calendar and mail, or improving the photos we take.

Maybe this sounds banal or boring. But at least it seems realistic for now.

Bias and diversity as a solution

This leaves the second, related challenge: bias. It may turn out to be the most important near-term challenge for AI and for computer science as a field.

There have been many shocking reports of biased AIs producing terrible results. Remember the gender bias in Google's image search, or the racial bias in the same engine. Both have since been addressed, sort of, but haphazardly rather than by fixing the underlying core problem: the data you feed into an AI determines the results.

So, what can be done?

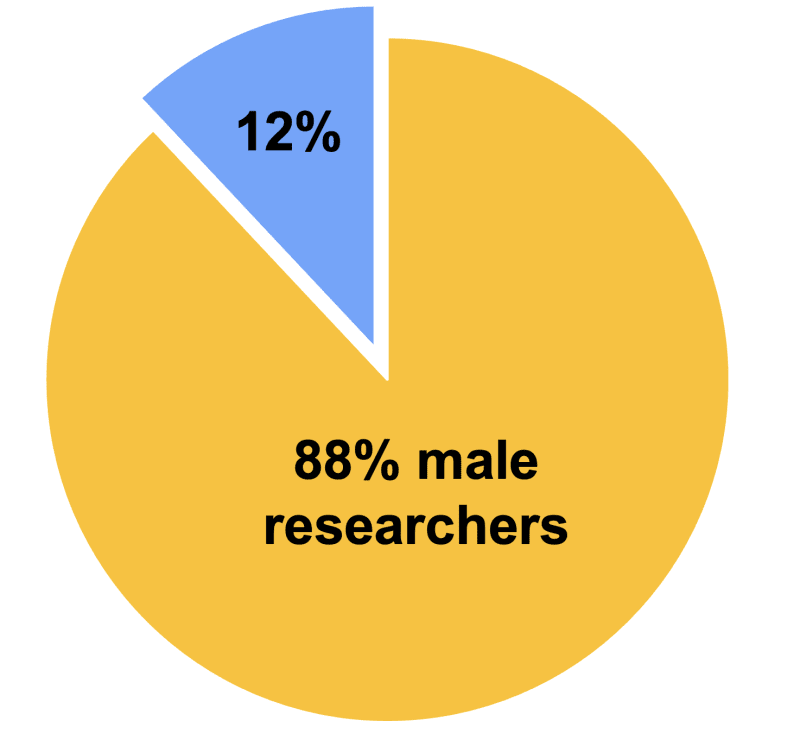

A Wired article from 2018 states that more than 88% of AI researchers are male.

Whether that exact ratio is correct is beside the point: researchers and practitioners in the AI field do not reflect society as a whole. If AI transforms society as profoundly as we all expect, this is a very problematic situation.

But the problem is not limited to AI. A study by the German "Gesellschaft für Informatik" ("Society for Computer Science") produced distressing results.

The following graph illustrates the interest of boys and girls in computer science over time.

Both groups start with a similar level of interest and curiosity, but over time this changes dramatically. The study suggests many reasons, for example the stereotypical "IT nerd". Watch any IT-related show and the problem is immediately apparent.

And let's not get started on the under-representation of groups such as trans people.

The solutions to the lack of diversity in AI and computer science are difficult and are being worked on. A short-term fix seems unlikely, however. This means we have to be very aware of bias in our data sets and AI solutions. If we build an AI-driven product (or any data-driven product, for that matter), we must set up tests to discover unintentional bias before using it for real-world problems.
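A minimal sketch of such a test: compare the rate of positive outcomes across groups on a labeled test set. It uses the disparate impact ratio (lowest group rate divided by highest) and, as an example threshold, the US "four-fifths" rule of thumb. The data are invented, and the right metric and threshold for a given product are domain, legal, and ethical decisions that this snippet does not settle.

```python
"""A minimal bias check: compare positive-outcome rates across groups.

Uses the disparate impact ratio (lowest group rate / highest group rate) with the
US "four-fifths" rule of thumb (0.8) as an example threshold. The data and the
threshold are illustrative assumptions.
"""
from __future__ import annotations

from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, outcome) pairs."""
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes: list[tuple[str, bool]]) -> float:
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody gets a positive outcome, so rates are equal
    return min(rates.values()) / highest


# Illustrative model decisions on a test set: (group, approved?)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 35 + [("group_b", False)] * 65
)

ratio = disparate_impact_ratio(decisions)
print(f"rates: {selection_rates(decisions)}, ratio: {ratio:.2f}")
if ratio < 0.8:
    print("potential bias: investigate before real-world use")
```

Here group_b is approved at 35 percent versus 60 percent for group_a, a ratio of about 0.58, so the check flags it. Passing a single metric does not prove a system is unbiased; it is one test among several that belong in the pipeline before release.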

Summary

Let's be clear. AI and its sub-disciplines will transform society. New jobs will appear. Old jobs will disappear. We will work and live differently.

But not overnight.

Things are rarely revolutionized overnight. More often than not, progress comes by evolution rather than revolution.

We need to educate ourselves on the tools and methods around AI to understand their impact. Without that understanding, we are left to believe the snake-oil vendors and the fearmongers. Let's look behind the media outbursts, dissect the actual research, and not fall blindly for cheap scams.

If AI is one of the things changing the world fundamentally over the next years (or decades), then we need to pay attention. Diversity in research and data is of the utmost importance. Bias must be avoided. Trust must be built. Otherwise, AI won't be embraced and won't live up to its promise.

- Title picture: Photo by Andy Kelly on Unsplash