What is MCP and Why Should You Care?

If you've ever used AI-powered tools like Cursor or other virtual assistants, you may have noticed that they are limited to specific tasks. Cursor, for example, mainly helps with code-related queries and has no built-in access to real-time external data.

This happens because AI models rely on pre-trained knowledge and don't automatically fetch live data. This is where Model Context Protocol (MCP) servers come in.

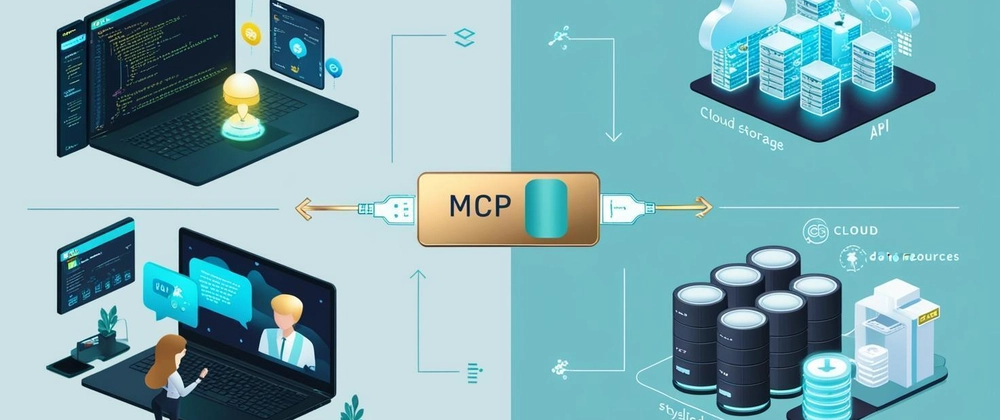

Think of MCP as a bridge between AI models and the real world. It enables AI applications to fetch real-time information, execute actions, and interact with various data sources in a structured way. Instead of manually integrating APIs for each use case, MCP servers provide a standardized way for AI to access live data and external tools.
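"Standardized" here means that every MCP server describes its tools the same way: a name, an optional description, and a JSON Schema for the inputs. A client can discover these through the `tools/list` method instead of relying on custom glue code. The dependency-free sketch below models a simplified version of that descriptor and the JSON-RPC 2.0 response carrying it. The weather tool and its description are hypothetical, and the types are trimmed down from the full specification.

```typescript
// Simplified shape of a tool descriptor, as listed by a server's tools/list method
interface ToolDescriptor {
  name: string;
  description?: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

// Hypothetical tool: one descriptor that any MCP client can read
const tools: ToolDescriptor[] = [
  {
    name: "getWeatherDataByCityName",
    description: "Current weather for a city",
    inputSchema: {
      type: "object",
      properties: { city: { type: "string", description: "City name" } },
      required: ["city"],
    },
  },
];

// The JSON-RPC 2.0 response a server would send to a tools/list request with id 1
const listResponse = { jsonrpc: "2.0", id: 1, result: { tools } };

// Because the schema is machine-readable, a client can check arguments before calling
function missingArgs(tool: ToolDescriptor, args: Record<string, unknown>): string[] {
  return (tool.inputSchema.required ?? []).filter((key) => !(key in args));
}

console.log(JSON.stringify(listResponse));
console.log(missingArgs(tools[0], {}).join(","));
```

The same descriptor format works for any tool, which is what lets one client talk to many servers without per-API integration code.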

For a deeper technical dive, see the official Model Context Protocol documentation.

Why is MCP Important for AI?

AI assistants often face these common issues:

Limited to pre-trained knowledge – They can't fetch real-time data.

Contextual gaps – Without access to your project's data, they may give generic or irrelevant responses.

Lack of automation – Manual API integrations are required for every external data source.

MCP addresses these problems by giving AI models a standard way to connect to real-time data sources and tools:

Fetch up-to-date information – AI can access fresh data dynamically.

Enable real-time interactions – AI can execute tools and interact with external APIs.

Improve contextual understanding – MCP delivers external data to the model in a consistent, structured format, which can lead to more precise responses.

For example, if you use Cursor to analyze a piece of code, it might generate a generic response without deeper insights. However, with MCP:

AI can fetch your latest GitHub commits to provide relevant context.

It can analyze patterns in your code to suggest optimizations.

MCP tools can refine vague prompts into meaningful, structured requests, improving AI responses.

This makes AI-powered developer tools more intelligent and efficient.

How MCP Works: A Simple Breakdown

MCP servers expose three main kinds of capabilities:

🔹 Resources (Fetching Data) – Think of these as GET requests, retrieving data AI needs (e.g., latest commits, API statuses, stock prices).

🔹 Tools (Executing Actions) – These function like POST requests, enabling AI to perform actions such as sending emails, updating databases, or running scripts.

🔹 Prompts (Defining Interactions) – Predefined message templates that help AI interact with users in a structured way.
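On the wire, each of these capabilities is reached through its own JSON-RPC method: `resources/read`, `tools/call`, and `prompts/get`. The sketch below is a toy in-memory dispatcher that returns results in simplified versions of the shapes MCP uses for each. The resource URI, tool, and prompt it registers are hypothetical, and a real server would also handle listing, validation, and errors.

```typescript
type Params = Record<string, unknown>;
type Handler = (params: Params) => unknown;

// Hypothetical data for illustration only
const resourceData: Record<string, string> = {
  "git://repo/latest-commit": "a1b2c3 Fix null check in parser",
};
const toolImpls: Record<string, (args: Params) => string> = {
  runScript: (args) => `ran ${String(args.script)}`,
};
const promptTemplates: Record<string, (args: Params) => string> = {
  reviewCode: (args) => `Review this code and list concrete improvements:\n${String(args.code)}`,
};

const methods: Record<string, Handler> = {
  // Resources: read-only data, similar to a GET
  "resources/read": (p) => ({
    contents: [{ uri: String(p.uri), mimeType: "text/plain", text: resourceData[String(p.uri)] }],
  }),
  // Tools: actions that may have side effects, similar to a POST
  "tools/call": (p) => ({
    content: [{ type: "text", text: toolImpls[String(p.name)](p.arguments as Params) }],
  }),
  // Prompts: reusable message templates filled in with arguments
  "prompts/get": (p) => ({
    messages: [
      {
        role: "user",
        content: { type: "text", text: promptTemplates[String(p.name)](p.arguments as Params) },
      },
    ],
  }),
};

// Route a request to the handler for its method
function dispatch(method: string, params: Params): unknown {
  const handler = methods[method];
  if (!handler) throw new Error(`Method not found: ${method}`);
  return handler(params);
}

console.log(JSON.stringify(dispatch("resources/read", { uri: "git://repo/latest-commit" })));
```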

For example, let’s say an AI tool needs to refactor a code snippet:

It fetches previous versions of the code via a Resource.

It runs an automated refactoring tool via a Tool.

It formats the response using a well-structured Prompt for better clarity.
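From the client's side, the three steps above become three JSON-RPC requests sent in order. This sketch only builds those messages and does not send them. The URI scheme, the `refactorCode` tool, and the `summarizeRefactor` prompt are hypothetical names chosen for illustration.

```typescript
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: Record<string, unknown>;
}

// Each request gets a unique, increasing id so responses can be matched to requests
let nextId = 1;
function request(method: string, params: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method, params };
}

// Hypothetical URI, tool name, and prompt name for the refactoring example
const plan: JsonRpcRequest[] = [
  // 1. Resource: fetch the previous version of the file
  request("resources/read", { uri: "git://project/src/utils.ts?rev=HEAD~1" }),
  // 2. Tool: run an automated refactoring tool on it
  request("tools/call", { name: "refactorCode", arguments: { path: "src/utils.ts" } }),
  // 3. Prompt: fetch a template for presenting the result
  request("prompts/get", { name: "summarizeRefactor", arguments: { style: "bullet-points" } }),
];

for (const msg of plan) console.log(JSON.stringify(msg));
```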

For details on using MCP servers in Cursor, see Cursor's documentation.

Real Example: Improving IDEs with MCP

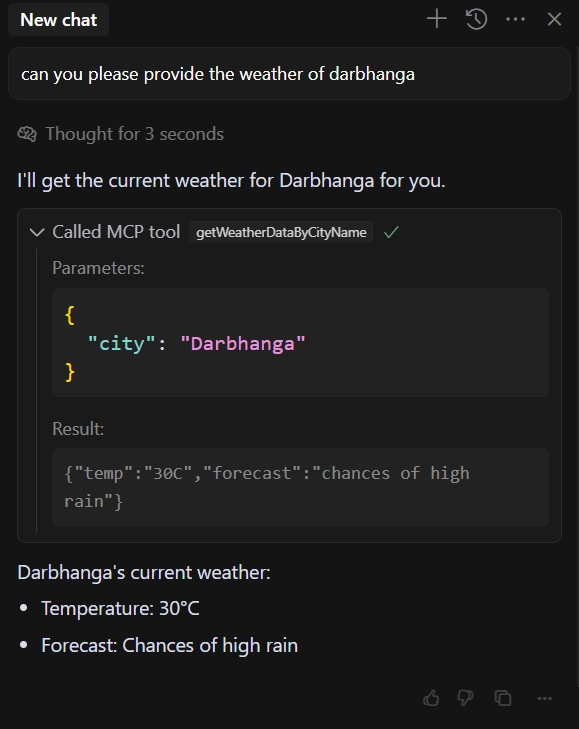

Recently, I asked Cursor Chat to fetch the weather for Darbhanga, and it responded:

"I apologize, but I am not able to provide real-time weather information for Darbhanga or any other location. I am a programming assistant designed to help with code-related questions and tasks."

This highlights AI’s limitation in fetching real-time data. However, we can solve this issue with an MCP-based weather tool.

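By registering an MCP tool on a server like this (assuming the official TypeScript SDK and `zod`; `getWeatherByCity` is a helper you would write yourself):

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { z } from "zod";

// Your own helper that calls a weather API (not part of MCP)
declare function getWeatherByCity(city: string): Promise<unknown>;

const server = new McpServer({ name: "weather", version: "1.0.0" });

// Register a tool the AI can call with a single string argument, `city`
server.tool("getWeatherDataByCityName", { city: z.string() }, async ({ city }) => {
  const weather = await getWeatherByCity(city);
  return { content: [{ type: "text", text: JSON.stringify(weather) }] };
});

// Expose the server to a local client such as Cursor over stdin/stdout
await server.connect(new StdioServerTransport());
```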

Once the server is registered in the IDE's MCP settings, AI-powered IDEs like Cursor can call this tool to fetch real-time weather data, going beyond their built-in capabilities.
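Behind the scenes, the IDE sends the server a JSON-RPC `tools/call` request and gets back the text content the tool produced. This dependency-free sketch models that exchange for the weather tool. `fakeWeather` is a hypothetical stand-in for `getWeatherByCity`, and its temperature is a placeholder value, not real weather data.

```typescript
// Minimal JSON-RPC 2.0 types for the tools/call exchange (simplified)
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}
interface TextContent {
  type: "text";
  text: string;
}
interface ToolCallResponse {
  jsonrpc: "2.0";
  id: number;
  result: { content: TextContent[] };
}

// Request the client sends when the model decides to use the weather tool
const request: ToolCallRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "getWeatherDataByCityName", arguments: { city: "Darbhanga" } },
};

// Hypothetical stand-in for getWeatherByCity; tempC is a placeholder, not real data
function fakeWeather(city: string): { city: string; tempC: number } {
  return { city, tempC: 31 };
}

// Server side: route the request to the tool and wrap its output as text content
function handle(req: ToolCallRequest): ToolCallResponse {
  if (req.params.name !== "getWeatherDataByCityName") {
    throw new Error(`Unknown tool: ${req.params.name}`);
  }
  const weather = fakeWeather(String(req.params.arguments.city));
  return {
    jsonrpc: "2.0",
    id: req.id, // the response echoes the request id
    result: { content: [{ type: "text", text: JSON.stringify(weather) }] },
  };
}

console.log(JSON.stringify(handle(request)));
```

The model never sees the weather API itself, only the JSON text in `content`, which is why the tool serializes its result with `JSON.stringify`.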

Similarly, IDEs could use MCP to:

1. Fetch real-time debugging insights from error logs.

2. Automate code reviews by calling external linting tools.

3. Provide personalized suggestions based on coding patterns.

This is how MCP transforms AI assistants into powerful, context-aware developer tools.

Using SSE and Local Execution for MCP Calls

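MCP clients can connect to servers, and call their tools, over different transports:

SSE (Server-Sent Events): The server runs as an HTTP service and streams its messages to the client as events. This suits remote or shared servers and live updates such as real-time error tracking, debugging logs, or AI-driven suggestions. (Newer revisions of the MCP specification replace this transport with Streamable HTTP, which can still use SSE for streaming.)

Local Execution (stdio): The client launches the server as a local process and exchanges messages over standard input and output. There is no network hop and data stays on your machine, which can mean lower latency and a smaller attack surface.

Both transports carry the same JSON-RPC messages, so the choice depends on your deployment rather than on how the tools are written.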
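The main practical difference between the two is framing. The dependency-free sketch below shows a minimal parser for the SSE wire format (`event:` and `data:` fields, with events separated by a blank line) and helpers for stdio, where each JSON-RPC message is one line of JSON. It ignores SSE fields such as `id:` and `retry:`, assumes complete input rather than a live stream, and is not a full implementation of either transport.

```typescript
interface SseEvent {
  event: string;
  data: string;
}

// Parse a complete SSE text stream into events.
// Events are separated by a blank line; lines starting with ":" are comments and are ignored.
function parseSse(stream: string): SseEvent[] {
  const events: SseEvent[] = [];
  for (const block of stream.split(/\r?\n\r?\n/)) {
    let event = "message"; // default event type when no "event:" field is present
    const data: string[] = [];
    for (const line of block.split(/\r?\n/)) {
      if (line.startsWith("event:")) event = line.slice(6).replace(/^ /, "");
      else if (line.startsWith("data:")) data.push(line.slice(5).replace(/^ /, ""));
    }
    // Multiple data lines in one event are joined with newlines
    if (data.length > 0) events.push({ event, data: data.join("\n") });
  }
  return events;
}

// stdio: one JSON-RPC message per line. JSON.stringify without indentation
// never emits raw newlines (newlines inside strings are escaped as \n).
function encodeStdio(message: object): string {
  return JSON.stringify(message) + "\n";
}

// Split a chunk of complete lines back into messages (no partial-line buffering)
function decodeStdio(chunk: string): unknown[] {
  return chunk
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line));
}

const sample = 'event: message\ndata: {"jsonrpc":"2.0","id":1,"result":{}}\n\n';
console.log(parseSse(sample));
console.log(decodeStdio(encodeStdio({ jsonrpc: "2.0", id: 2, method: "tools/list" })));
```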

The Future of AI-Powered IDEs with MCP

MCP is revolutionizing AI interactions, making them more context-aware, real-time, and powerful. For developer-focused AI tools like Cursor, MCP can bridge the gap between AI’s capabilities and real-world development needs.
