This is a Plain English Papers summary of a research paper called German AI Models Learn Grammar Better from Child-Like Speech than Random Text, Study Shows. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

- Research examines how language model training on child-directed speech affects acquisition of German grammar

- Study focuses on verb-second (V2) word order rules in German

- Compares BabyLM models trained on two types of German datasets

- Models trained on authentic child input showed significantly better grammar learning

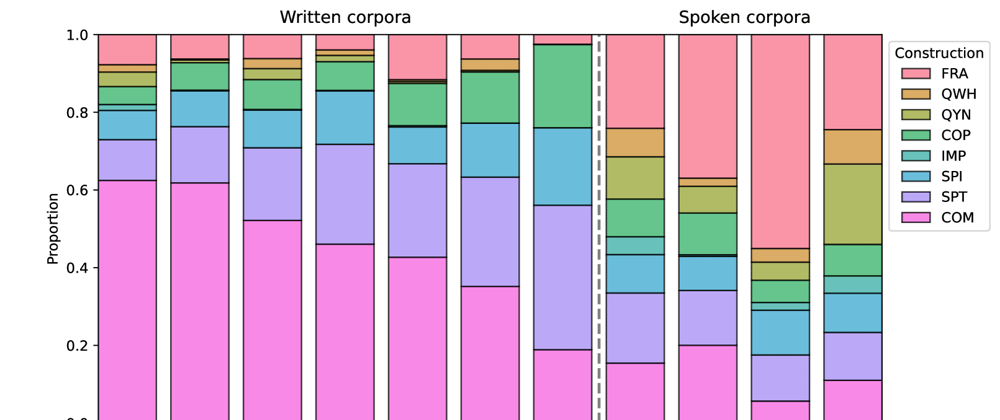

- Input frequency distribution of constructions shapes grammar acquisition

- Findings support usage-based language acquisition theories
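
To make the verb-second (V2) rule mentioned above concrete, here is a minimal Python sketch. It is a toy illustration only, not the evaluation method used in the paper: the example sentences, the role labels, and the `is_verb_second` helper are all assumptions introduced here, and the clauses are annotated by hand rather than parsed.

```python
# Toy illustration of the German verb-second (V2) rule in main clauses.
# Each clause is a list of (constituent, role) pairs. The role labels are
# hand-written assumptions for this example, not the output of any parser.

def is_verb_second(clause):
    """Return True if the clause's single finite verb is its second constituent."""
    roles = [role for _, role in clause]
    return (
        len(roles) >= 2
        and roles[1] == "finite_verb"
        and roles.count("finite_verb") == 1
    )

# "Heute liest das Kind ein Buch." (literally: Today reads the child a book.)
v2_clause = [
    ("Heute", "adverbial"),
    ("liest", "finite_verb"),
    ("das Kind", "subject"),
    ("ein Buch", "object"),
]

# "Heute das Kind liest ein Buch." (ungrammatical as a German main clause)
non_v2_clause = [
    ("Heute", "adverbial"),
    ("das Kind", "subject"),
    ("liest", "finite_verb"),
    ("ein Buch", "object"),
]

print(is_verb_second(v2_clause))
print(is_verb_second(non_v2_clause))
```

The check counts constituents rather than words, because V2 places the finite verb after the first constituent ("das Kind" is one unit), whatever that constituent happens to be.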

Plain English Explanation

Kids learn language by hearing it used around them. The patterns they encounter most often become the building blocks of their grammar knowledge. This paper asks: do AI language models learn grammar the same way?

The researchers focused on German, which has special word order ...
