This is a Plain English Papers summary of a research paper called How AI Models Can Think Faster and Better: New Research Shows 70% More Efficient Reasoning. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

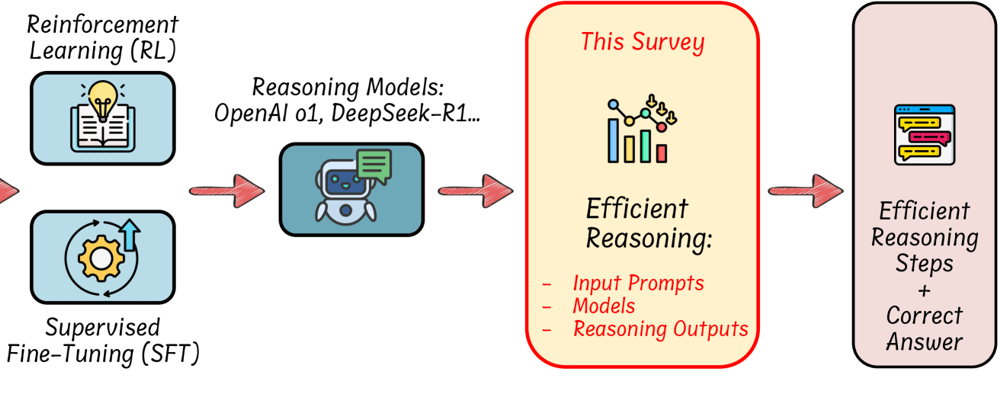

- LLMs often overthink with long reasoning chains

- Efficient reasoning techniques reduce computation while maintaining accuracy

- Survey categorizes approaches into three groups: reasoning length reduction, early exit, and reasoning acceleration

- Challenges include generalizability across tasks and models

- Future work focuses on better stopping mechanisms and adaptive reasoning strategies
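To make the "early exit" idea from the list above concrete, here is a minimal toy sketch in Python. It is not the paper's method: the function names (`reason_with_early_exit`, `toy_step`), the confidence score, and the threshold are all hypothetical, chosen only to illustrate a loop that stops reasoning once the model seems confident enough instead of always using its full step budget.

```python
from typing import Callable, List, Tuple


def reason_with_early_exit(
    step_fn: Callable[[List[str]], Tuple[str, float]],
    max_steps: int = 10,
    confidence_threshold: float = 0.9,
) -> Tuple[List[str], bool]:
    """Run reasoning steps until confidence is high enough or the budget runs out.

    step_fn is a hypothetical stand-in for one model call: it takes the steps
    so far and returns (next_step_text, confidence_in_current_answer).
    """
    steps: List[str] = []
    for _ in range(max_steps):
        thought, confidence = step_fn(steps)
        steps.append(thought)
        if confidence >= confidence_threshold:
            return steps, True  # stopped before exhausting the budget
    return steps, False  # used the whole budget without reaching the threshold


def toy_step(history: List[str]) -> Tuple[str, float]:
    # Assumption for illustration only: confidence rises by 0.25 per step.
    n = len(history) + 1
    return f"step {n}", min(1.0, 0.25 * n)


steps, exited_early = reason_with_early_exit(toy_step)
print(len(steps), exited_early)
```

In this toy setup the loop stops as soon as the made-up confidence score crosses the threshold, so most of the ten-step budget goes unused. A real system would need a trustworthy confidence signal, which is the kind of stopping mechanism the last bullet lists as future work.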

Plain English Explanation

Language models like ChatGPT often take too many steps to solve problems, much like a student who writes pages of work for a simple math problem. The paper calls this "overthinking" and explores ways to make these models more efficient without sacrificing accuracy.

Think of ...
