Making the complex, simple

Modern software engineering offers a bunch of ready-to-use tools that make development faster and easier. That's awesome! But that doesn't mean we can just ignore how things actually work behind the scenes.

In this simple and straightforward text, I'll explain some things about rate limiters and why it's worth knowing how they tick.

Why?

When it comes to APIs, managing requests, sending back responses, and handling intricate business rules all take up resources. When those resources are used up, you usually need more infrastructure, and that means shelling out extra cash. If you're offering a service to clients, that's simply the cost of running an API. However, regardless of your API's functionality, there's always a threshold for the number of requests it can handle, be it thousands or millions. Eventually, there's a breaking point where the volume of requests within a specific time frame becomes overwhelming.

Here are a few examples of APIs and their rate limits:

Twitter API: Applies rate limits to different endpoints and functionalities of the Twitter API. For example, the standard Search API has rate limits such as 180 requests per 15-minute window for user-authenticated requests.

GitHub API: Enforces rate limits on API requests, which depend on whether the request is authenticated or not. Unauthenticated requests get a much lower limit than authenticated ones (for the REST API, 60 requests per hour versus 5,000 requests per hour for an authenticated user).

Stripe API: Implements rate limits on API requests for payment processing and related functionalities. The specific rate limits can vary depending on the specific API endpoint.

Twilio API: Sets rate limits on sending SMS, making calls, and other communication services. The rate limits can be specific to the type of communication and the Twilio service being used.

Keep this in mind: rate limiters operate on the service level, not the network level. So, you're not overseeing data packets and IP addresses; instead, you're working with endpoints and service requests.

How?

Let's start by clarifying what exactly you want to safeguard your system from.

Some examples:

- A user can write no more than 2 posts per second.

- You can create a maximum of 10 accounts per day from the same IP address.

- You can claim rewards no more than 5 times per week from the same device.

For lack of creativity, I took these examples from Alex Xu's book, System Design Interview.
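Requirements like these can be written down as plain data before any algorithm is chosen. Here is a minimal sketch in Python. The `RateLimitRule` class and its field names are my own assumptions for illustration, not something from the book or from any library:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimitRule:
    """One limit: at most `limit` actions per `window_seconds` for each `key`."""
    action: str          # what is being limited (hypothetical label)
    key: str             # what the limit is counted against: user, IP, device
    limit: int           # maximum number of actions allowed in the window
    window_seconds: int  # length of the window, in seconds


DAY = 24 * 60 * 60
WEEK = 7 * DAY

# The three example requirements, expressed as rules.
RULES = [
    RateLimitRule(action="write_post", key="user_id", limit=2, window_seconds=1),
    RateLimitRule(action="create_account", key="ip_address", limit=10, window_seconds=DAY),
    RateLimitRule(action="claim_reward", key="device_id", limit=5, window_seconds=WEEK),
]
```

Notice that every rule answers the same three questions: what is limited, per what, and over how long.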

Now that you have your requirements, there's an important question to answer. Please comment below with your answer before reading further:

Where should you put your rate limiter?

Well, let's dig into the options that we have.

Rate Limiter on the Client

That's a functional choice, no doubt. However, it has a significant drawback. Even though it keeps undesired requests from reaching your application, the limiter lives on the client side, where you don't control it. A user can easily bypass it by removing the module and sending requests without the rate limiter.

Rate Limiter on the API

This approach is effective too. Because the limiter runs on the server, clients can't alter the application to bypass it. However, the trade-off is that the rate limiter processing happens within the API itself, the very thing you're trying to shield from undesired requests. Rejected requests skip the business logic, so the API avoids executing unnecessary instructions, but it still spends resources receiving and rejecting them, which is not ideal.

Rate Limiter as a Proxy

This could be the most refined solution to tackle this issue. By placing a rate limiter in front of your service to manage these requests, you filter out the undesired ones before they ever reach your Service API. Of course, there are downsides, such as an additional software component to manage and the extra network overhead it introduces. Yet, considering the benefits, it's a reasonable trade-off to make.
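To make the proxy idea concrete, here is a minimal sketch in Python. It is not a real network proxy: `make_proxy`, the `Request` and `Response` shapes, and the toy `allow` and `backend` functions are all assumptions made up for this illustration. The point is only that the decision happens before the backend is called.

```python
from typing import Callable, Dict, Tuple

Request = Dict[str, str]    # hypothetical shape: {"client_id": ..., "path": ...}
Response = Tuple[int, str]  # (HTTP status code, body)


def make_proxy(allow: Callable[[str], bool],
               backend: Callable[[Request], Response]) -> Callable[[Request], Response]:
    """Return a handler that rate-limits requests before they reach `backend`."""
    def handle(request: Request) -> Response:
        if not allow(request["client_id"]):
            # Rejected at the proxy: the backend never sees this request.
            return 429, "Too Many Requests"
        return backend(request)
    return handle


# Toy stand-ins to exercise the proxy (not a real limiter or a real service).
calls_seen_by_backend = []

def backend(request: Request) -> Response:
    calls_seen_by_backend.append(request["path"])
    return 200, "OK"

budget = {"alice": 1}  # alice may make exactly one request

def allow(client_id: str) -> bool:
    if budget.get(client_id, 0) > 0:
        budget[client_id] -= 1
        return True
    return False

proxy = make_proxy(allow, backend)
first = proxy({"client_id": "alice", "path": "/posts"})
second = proxy({"client_id": "alice", "path": "/posts"})
```

Since `allow` is just a function, any rate limiting algorithm can be plugged in without touching the service behind the proxy.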

Algorithms

Now that we've grasped the Rate Limiter concept, let's delve into how we can construct one ourselves. It's worth noting that numerous companies provide pre-built solutions for rate limiting, and there are also open-source projects that offer similar functionality. However, the purpose of this document is to explore the inner workings and mechanics behind it.

Token Bucket

Among the various rate limiting algorithms, we'll focus on the token bucket, since it offers a straightforward yet efficient approach. Let's look at its mechanics.

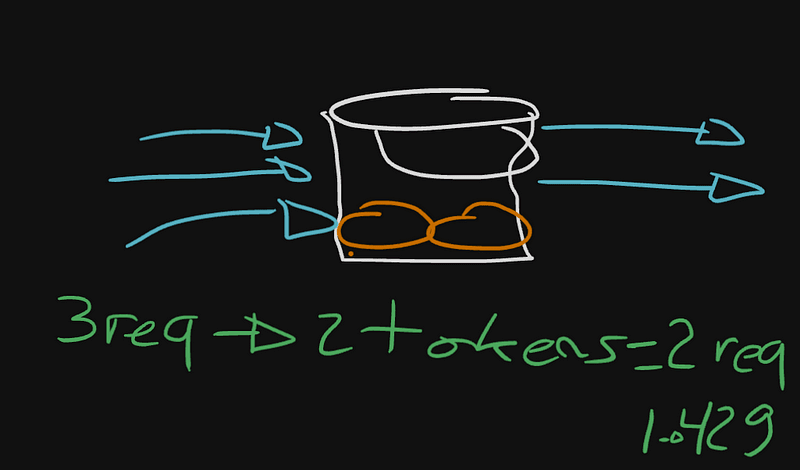

In essence, this algorithm involves a "refiller" that gradually adds tokens at intervals until a predefined maximum is reached. Any additional tokens beyond this maximum are essentially lost as they overflow.
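The refill step boils down to one line of arithmetic. Here is a small sketch in Python. The function name `refill` and its parameters are assumptions I chose for illustration, and the numbers are arbitrary:

```python
def refill(tokens: float, capacity: float, refill_rate: float, elapsed_seconds: float) -> float:
    """Return the token count after `elapsed_seconds`, capped at `capacity`."""
    # Tokens beyond `capacity` overflow and are lost.
    return min(capacity, tokens + refill_rate * elapsed_seconds)


# A bucket that holds at most 4 tokens and gains 2 tokens per second,
# starting with 1 token:
after_one_second = refill(tokens=1, capacity=4, refill_rate=2, elapsed_seconds=1)    # 3
after_five_seconds = refill(tokens=1, capacity=4, refill_rate=2, elapsed_seconds=5)  # 4, the rest overflows
```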

The refilling occurs within the lifecycle of the Rate Limiter Application, distinct from the Request Lifecycle. When a request is received, a token is consumed. If no token is available, the rate limiter rejects the request and responds to the user with a "429 Too Many Requests" status.

Suppose there are only 2 tokens available and 3 incoming requests. The first 2 requests are allowed through, each consuming one of the available tokens. The third request finds the bucket empty, so it is rejected with a "429 Too Many Requests" HTTP response.
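Here is that scenario as a minimal sketch in Python. The `TokenBucket` class and `handle_request` function are illustrative names of my own, and the refiller is simulated by calling `refill()` by hand instead of running on a timer. It is also not thread-safe, so treat it as a way to see the mechanics and not as production code.

```python
class TokenBucket:
    """Minimal token bucket: a refiller adds tokens, each request consumes one."""

    def __init__(self, capacity: int, tokens: int) -> None:
        self.capacity = capacity
        self.tokens = min(tokens, capacity)

    def refill(self, amount: int = 1) -> None:
        # Called by the refiller on its own schedule, outside the request path.
        self.tokens = min(self.capacity, self.tokens + amount)

    def try_consume(self) -> bool:
        # Called once per incoming request.
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


def handle_request(bucket: TokenBucket) -> int:
    """Return the HTTP status code the rate limiter would answer with."""
    return 200 if bucket.try_consume() else 429


bucket = TokenBucket(capacity=2, tokens=2)
statuses = [handle_request(bucket) for _ in range(3)]
print(statuses)  # [200, 200, 429]

bucket.refill()  # the refiller ticks once
status_after_refill = handle_request(bucket)
print(status_after_refill)  # 200
```

Keeping `refill()` separate from `try_consume()` mirrors the split between the rate limiter's own lifecycle and the request lifecycle.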

The Code

Feel free to explore the token bucket code on my technical blog and repository 😄

I'm eager to hear your thoughts in the comments section.

Hope I made the complex, simple.
