In this blog post, I’ll introduce you to a powerful technique called model routing and guide you through creating a model router using SetFit, a framework optimized for few-shot learning. We'll cover the concept, its benefits, and a step-by-step tutorial, including training and inference code. Finally, we'll deploy the model to Hugging Face for easy access.

Table of Contents

- Introduction

- What is Model Routing?

- Why We Need Model Routing

- Creating a Model Router with SetFit

- Conclusion

Introduction

In the rapidly evolving field of artificial intelligence, efficiency and cost-effectiveness are paramount. Model routing is an innovative technique that directs user queries to the most appropriate model based on their complexity or nature. For instance, simple questions can be handled by lightweight models like GPT-4o mini, while more intricate queries are routed to robust models like GPT-4. This approach optimizes resource usage, reduces costs, and speeds up response times.

In this post, we’ll explore how to implement model routing using SetFit, a framework that excels at few-shot fine-tuning of sentence transformers. With minimal labeled data, SetFit enables us to build an effective model router—perfect for scenarios where extensive datasets are unavailable.

What is Model Routing?

Model routing is the process of classifying and directing user queries to the most suitable model based on their characteristics. As explained in Huyen Chip’s blog:

"One use case of predictive human preference is model routing. For example, if we know in advance that for a prompt, users will prefer Claude Instant’s response over GPT-4, and Claude Instant is cheaper/faster than GPT-4, we can route this prompt to Claude Instant. Model routing has the potential to increase response quality while reducing costs and latency."

Here’s a practical example:

- Simple Query: "What is the capital of France?" → Routed to GPT-4o mini.

- Complex Query: "Analyze the time complexity of the merge sort algorithm." → Routed to GPT-4.

This technique leverages predictive human preference to anticipate which model will deliver the best response, balancing quality, cost, and speed.

Why We Need Model Routing

Model routing addresses several critical needs in AI systems:

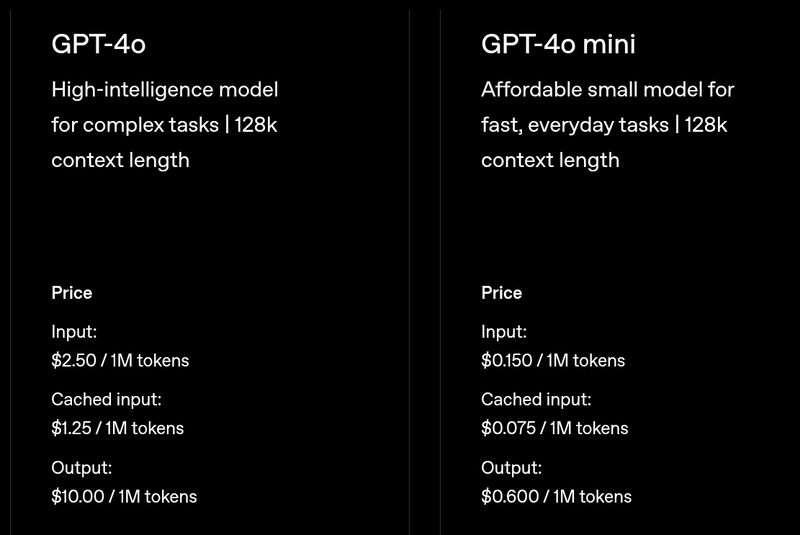

- Cost Efficiency: Consider the pricing: GPT-4 costs approximately $10 per million tokens, while GPT-4o mini costs just $0.6 per million tokens. If a business routes 60% of its queries to GPT-4o mini, it could save up to $5.64 per million tokens (calculated as 60% × ($10 - $0.6)).

- Speed: Lightweight models like GPT-4o mini have lower latency, providing faster responses for simpler queries and enhancing user experience.

- Resource Optimization: By reserving powerful models for complex tasks, we avoid overburdening resources unnecessarily.

For this task, classification models outperform complex NLP models (e.g., LLMs) because they are:

- Predictable: Outputs are straightforward and easy to monitor.

- Efficient: Training and inference are quick.

- Accurate: They perform well even with small datasets, especially with few-shot learning frameworks like SetFit.

Creating a Model Router with SetFit

Let’s build a model router using SetFit, an efficient framework for few-shot fine-tuning of sentence transformers. According to the SetFit GitHub page:

"SetFit achieves high accuracy with little labeled data—for instance, with only 8 labeled examples per class on the Customer Reviews sentiment dataset."

You can explore my trained model here: mrzaizai2k/model_routing_few_shot.

Dataset

Our goal is to route simple queries (e.g., "What is microservices?") to a lightweight model like GPT-4o mini and complex queries (e.g., "Tell me the difference between microservice and service-based architecture.") to a more powerful model like GPT-4. The dataset is small, designed for few-shot learning, and includes prompts in Vietnamese and English. It’s hosted on Hugging Face: mrzaizai2k/gpt_routing.

Here’s a sample of the labeled data:

| Label | Examples |

|---|---|

| 0 | "What is microservices?" "What is the capital of France?" "Write a Python function that calculates the factorial of a number." |

| 1 | "Tell me the difference between microservice and service-based architecture." "What is White-box testing? [with multiple-choice options]" "Analyze the time complexity of the merge sort algorithm." |

- Label 0: Simple queries.

- Label 1: Complex queries.

Training

We’ll use the embedding model sentence-transformers/all-MiniLM-L6-v2, which supports both Vietnamese and English. You can swap this for another embedding model depending on your language requirements.

Here’s the complete training code, sourced from my repository: mrzaizai2k/LLM-with-RAG.

import sys

sys.path.append("")

from datasets import load_dataset, ClassLabel

from setfit import SetFitModel, Trainer, TrainingArguments

from Utils.utils import config_parser

# Load configuration from YAML file

config = config_parser(data_config_path='config/gpt_routing_train_config.yaml')

dataset_name = config['dataset_name']

sentence_model = config['sentence_model']

text_col_name = config['text_col_name']

label_col_name = config['label_col_name']

# Load dataset from Hugging Face

datasets = load_dataset(dataset_name, split="train", cache_dir=True)

# Cast labels to ClassLabel type

new_features = datasets.features.copy()

new_features[label_col_name] = ClassLabel(num_classes=len(datasets.unique(label_col_name)), names=datasets.unique(label_col_name))

datasets = datasets.cast(new_features)

# Split dataset into train, validation, and test sets

ddict = datasets.train_test_split(test_size=config['test_size'], stratify_by_column=label_col_name, shuffle=True)

train_ds, val_ds = ddict["train"], ddict["test"]

ddict_val_test = val_ds.train_test_split(test_size=0.5, stratify_by_column=label_col_name, shuffle=True)

val_ds, test_ds = ddict_val_test["train"], ddict_val_test["test"]

# Load SetFit model

model = SetFitModel.from_pretrained(

sentence_model,

labels=datasets.unique(label_col_name),

)

# Define training arguments

args = TrainingArguments(

batch_size=config['batch_size'],

num_epochs=config['num_epochs'],

evaluation_strategy="epoch",

save_strategy="epoch",

load_best_model_at_end=True,

)

args.eval_strategy = args.evaluation_strategy # Workaround for SetFit compatibility

# Initialize trainer

trainer = Trainer(

model=model,

args=args,

train_dataset=train_ds,

eval_dataset=val_ds,

metric="accuracy",

column_mapping={"text": text_col_name, "label": label_col_name}

)

# Train the model

trainer.train()

# Evaluate on test set

metrics = trainer.evaluate(test_ds)

print("Metrics:", metrics)

# Push to Hugging Face Hub if enabled

if config['push_to_hub']:

trainer.push_to_hub(config['huggingface_out_dir'])

# Example predictions

preds = model.predict(["who has the pen", "The birthday of Kerger", "explain in detail the karger min cut and its complexity"])

print("Prediction:", preds)

Notes:

- The

config_parserfunction reads settings (e.g., dataset name, batch size) from a YAML file (gpt_routing_train_config.yaml). You’ll need to create this file with appropriate configurations. - The model splits the dataset into training, validation, and test sets to ensure robust evaluation.

Inference

Once trained, the model can classify new queries and route them accordingly. Here’s how to perform inference using the trained model:

from setfit import SetFitModel

# Load the trained model from Hugging Face Hub

model = SetFitModel.from_pretrained("mrzaizai2k/model_routing_few_shot")

# Run inference on a new query

preds = model("Thủ đô của nước Pháp là ở đâu?") # "What is the capital of France?" in Vietnamese

print("Prediction:", preds)

The output will be 0 (simple query) or 1 (complex query), guiding the routing decision.

Conclusion

In this post, we’ve introduced model routing, a technique that optimizes AI systems by directing queries to the most suitable model based on complexity. Using SetFit, we built a model router with a small dataset, leveraging few-shot learning for high accuracy. We covered the dataset preparation, training process, inference, and deployment to Hugging Face.

Model routing offers tangible benefits—cost savings, faster responses, and efficient resource use—making it a valuable tool for businesses and developers. With the resources provided (dataset, code, and model), you can adapt this approach to your own use case. For further exploration, check out the SetFit documentation or experiment with different embedding models to suit your language needs.

Happy routing!

Top comments (1)

🤯 I never realized how much money could be saved with smart routing! Thanks for this guide!