The "Chat" interface is killing our creativity.

We have Cursor for coding. It knows our repo, understands our dependencies, and writes code in context.

But for writing novels? We are still stuck copy-pasting text into a "Chat" box that forgets the main character's name every 4,000 words.

I couldn't afford a $30/month subscription to enterprise tools. So, I spent my last $7 building my own Agentic IDE on the Groq LPU.

Here is the tech stack, the architecture, and how I solved the "Context Amnesia" problem on a high school student's budget.

🏗️ The Problem: "Context Amnesia"

Standard LLM chats have a "Sliding Window" memory: once the conversation outgrows the context window, the oldest text falls out. If you are writing Chapter 20, the model has likely forgotten the foreshadowing you planted in Chapter 1.
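To make the failure mode concrete, here is a minimal sketch of how a sliding window drops early material. The `Message` shape, the token counts, and the 7,000-token budget are all made up for illustration; a real app would get counts from a tokenizer.

```typescript
// Hypothetical message shape; token counts are invented for the example.
interface Message {
  id: string;
  tokens: number;
}

// Keep the newest messages that fit the budget; anything older is dropped.
function slidingWindow(history: Message[], maxTokens: number): Message[] {
  const kept: Message[] = [];
  let used = 0;
  // Walk from newest to oldest and stop at the first message that does not fit.
  for (const msg of [...history].reverse()) {
    if (used + msg.tokens > maxTokens) break;
    kept.unshift(msg);
    used += msg.tokens;
  }
  return kept;
}

const history: Message[] = [
  { id: "chapter-1-foreshadowing", tokens: 3000 },
  { id: "chapter-10", tokens: 3000 },
  { id: "chapter-20-draft", tokens: 3000 },
];

// With a 7,000-token budget, only the two newest entries survive.
const visible = slidingWindow(history, 7000).map((m) => m.id);
console.log(visible);
```

The Chapter 1 entry is the first thing to go, which is exactly the "amnesia" a novelist notices.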

Most "AI Writers" are just wrappers around GPT-4. They treat your novel like a conversation, not a project.

I needed an Agent, not a chatbot.

🛠️ The "Broke" Tech Stack ($7/mo)

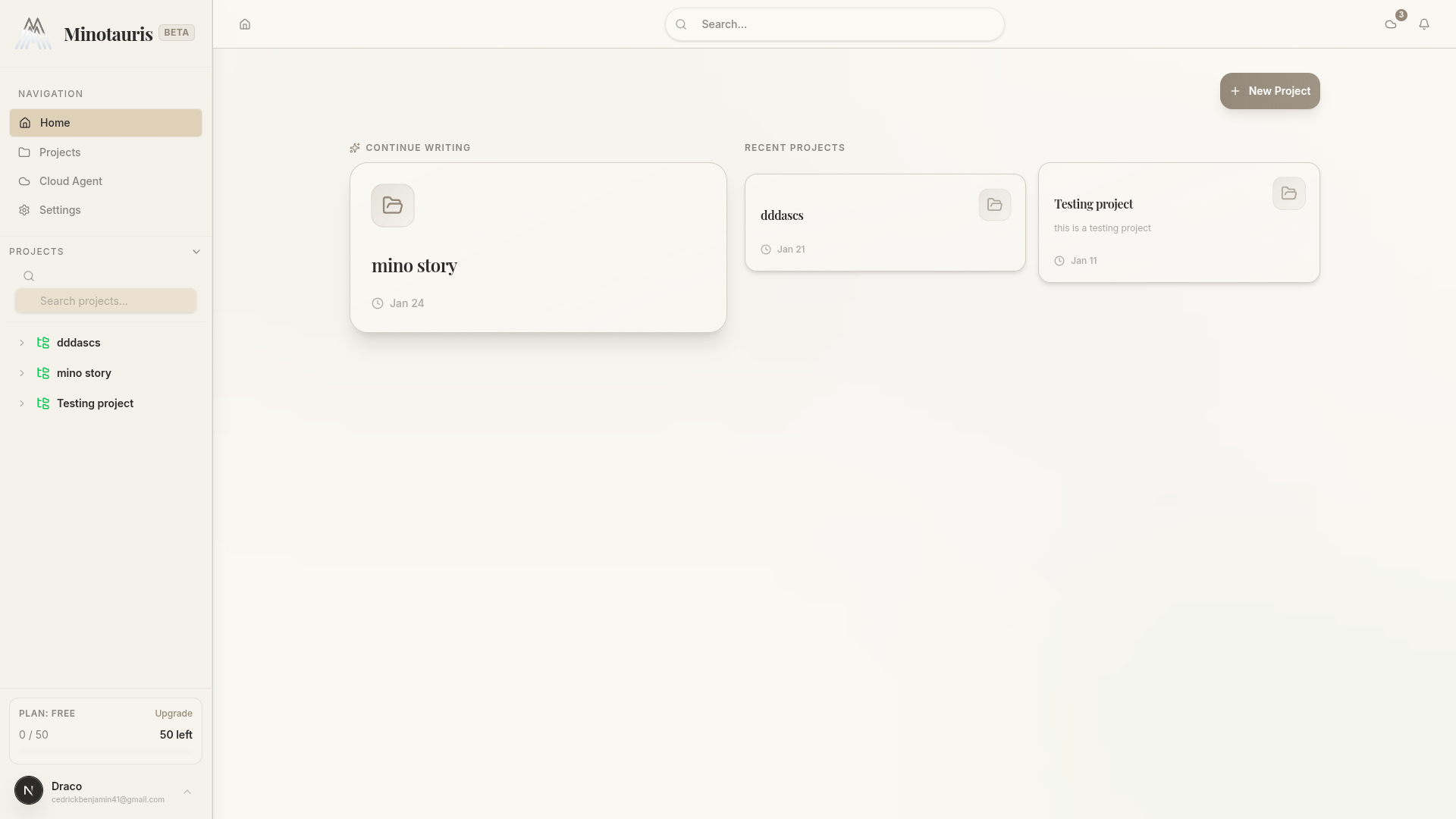

I call it Minotauris. It’s built to run lean but act smart.

Frontend: Next.js 15 (App Router) + Tailwind CSS.

Backend: Supabase (Auth + Database).

The Brain (Smart): deepseek-r1-distill-llama-70b (via Groq) for reasoning/plotting.

The Muscle (Fast): llama-3.1-8b-instant (via Groq) for prose/drafting.

The Orchestrator: A custom TypeScript router that decides which model to use.

🧠 The "Smart Router" (How I save money)

Since I'm 16 and not VC-backed, I can't let users burn $100 in API credits. I built a Traffic Router in Supabase Edge Functions:

TypeScript

// The logic is simple:
// 1. If the task is "Planning" -> Use Expensive Model (70B)
// 2. If the task is "Drafting" -> Use Cheap Model (8B)
// 3. If User > Monthly Limit -> Enforce "Slow Pool" (Artificial Delay)

This lets me offer "Unlimited" basic drafting because Llama 8B on Groq is practically free (~$0.05 per million tokens).
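A minimal sketch of that routing logic, not the actual Edge Function. The model IDs come from the stack above; the `routeRequest` and `estimateCostUsd` names, the usage units, and the 5-second delay are assumptions made for illustration.

```typescript
type Task = "planning" | "drafting";

interface RouteDecision {
  model: string;
  delayMs: number; // artificial delay applied to "Slow Pool" users
}

// Model IDs as listed in the stack section.
const PLANNING_MODEL = "deepseek-r1-distill-llama-70b";
const DRAFTING_MODEL = "llama-3.1-8b-instant";

// Assumed value; the real Slow Pool delay is not stated in the post.
const SLOW_POOL_DELAY_MS = 5000;

// Pick a model by task type, then add a delay if the user is over their limit.
function routeRequest(task: Task, usageThisMonth: number, monthlyLimit: number): RouteDecision {
  const model = task === "planning" ? PLANNING_MODEL : DRAFTING_MODEL;
  const delayMs = usageThisMonth > monthlyLimit ? SLOW_POOL_DELAY_MS : 0;
  return { model, delayMs };
}

// Back-of-the-envelope cost: tokens / 1M * price per million tokens.
function estimateCostUsd(tokens: number, usdPerMillionTokens: number): number {
  return (tokens / 1e6) * usdPerMillionTokens;
}

const draft = routeRequest("drafting", 120, 100);
console.log(draft.model, draft.delayMs);
console.log(estimateCostUsd(2_000_000, 0.05).toFixed(2));
```

At ~$0.05 per million tokens, two million drafted tokens come to about ten cents, which is why the cheap model can absorb most of the traffic.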

⚡ The Agentic Workflow

Minotauris isn't a chat. It’s an Asynchronous Command Center.

The Lore Index: I vector-embed the user's "Story Bible" (Characters, Locations, Rules).

The Command: User types: "Refactor Chapter 4 to be more suspenseful."

The Sub-Agent: The AI pulls the specific lore needed for Chapter 4, checks the tone of Chapter 1, and rewrites the text in the background.
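The lore lookup step can be sketched as a nearest-neighbor search over embeddings. This is an illustration under assumptions, not the production code: the 3-dimensional vectors are toy values standing in for real embeddings, and `LoreEntry` and `topLore` are hypothetical names.

```typescript
// Hypothetical Story Bible entry with a precomputed embedding.
interface LoreEntry {
  title: string;
  embedding: number[];
}

// Cosine similarity between two vectors; returns 0 if either is all zeros.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < Math.min(a.length, b.length); i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k entries most similar to the query, best match first.
function topLore(query: number[], index: LoreEntry[], k: number): LoreEntry[] {
  return [...index]
    .sort((p, q) => cosineSimilarity(query, q.embedding) - cosineSimilarity(query, p.embedding))
    .slice(0, k);
}

// Toy index: real embeddings would have hundreds of dimensions.
const loreIndex: LoreEntry[] = [
  { title: "Main character", embedding: [0.9, 0.1, 0.0] },
  { title: "Labyrinth (location)", embedding: [0.1, 0.9, 0.1] },
  { title: "Magic rules", embedding: [0.0, 0.1, 0.9] },
];

// Pretend this vector is the embedding of Chapter 4.
const chapter4Query = [0.2, 0.8, 0.1];
const picked = topLore(chapter4Query, loreIndex, 2).map((e) => e.title);
console.log(picked);
```

Only the top matches get injected into the prompt, so the model sees the lore relevant to the chapter instead of the whole Story Bible.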

I also built a Mobile Command Mode. You can send commands from your phone while walking the dog, and the cloud agent executes the heavy lifting on the server.
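One way to picture the "send a command, let the server do the work" flow is a small job queue. This is an in-memory sketch under assumptions: `CommandQueue`, its methods, and the fake handler are hypothetical, and a real deployment would persist jobs in a database rather than in a `Map`.

```typescript
type JobStatus = "queued" | "running" | "done" | "failed";

interface Job {
  id: number;
  command: string;
  status: JobStatus;
  result?: string;
}

// In-memory stand-in for a persistent job table.
class CommandQueue {
  private jobs = new Map<number, Job>();
  private nextId = 1;
  private handler: (command: string) => Promise<string>;

  constructor(handler: (command: string) => Promise<string>) {
    this.handler = handler;
  }

  // Called by the client (e.g. a phone): returns immediately with a job id.
  submit(command: string): number {
    const job: Job = { id: this.nextId++, command, status: "queued" };
    this.jobs.set(job.id, job);
    return job.id;
  }

  // Lets the client poll for status later.
  get(id: number): Job | undefined {
    return this.jobs.get(id);
  }

  // Called by the server-side worker: runs the oldest queued job, if any.
  async runNext(): Promise<Job | undefined> {
    const job = Array.from(this.jobs.values()).find((j) => j.status === "queued");
    if (!job) return undefined;
    job.status = "running";
    try {
      job.result = await this.handler(job.command);
      job.status = "done";
    } catch {
      job.status = "failed";
    }
    return job;
  }
}

// Fake handler standing in for the model call.
const queue = new CommandQueue(async (command) => `rewrote text for: ${command}`);
const jobId = queue.submit("Refactor Chapter 4 to be more suspenseful.");
console.log(queue.get(jobId)?.status);
```

The phone only needs `submit` and `get`; the expensive work happens whenever the worker calls `runNext`.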

"Demon Mode" Development

I built the MVP in 2 weeks using v0 for the UI and Cursor for the backend logic.

I’m currently running a closed Alpha because my wallet can't handle the whole internet yet. But if you are a developer who writes (or a writer who devs), I’d love your feedback on the architecture.

The Waitlist is here: 👉 minotauris.app

Let me know in the comments: How are you handling long context in your own AI apps? RAG? Long-context caching? I need optimization tips before I go broke lol.
