Here's my weekly accountability report for my self-study approach to learning data science.

Week 2 Fast.ai

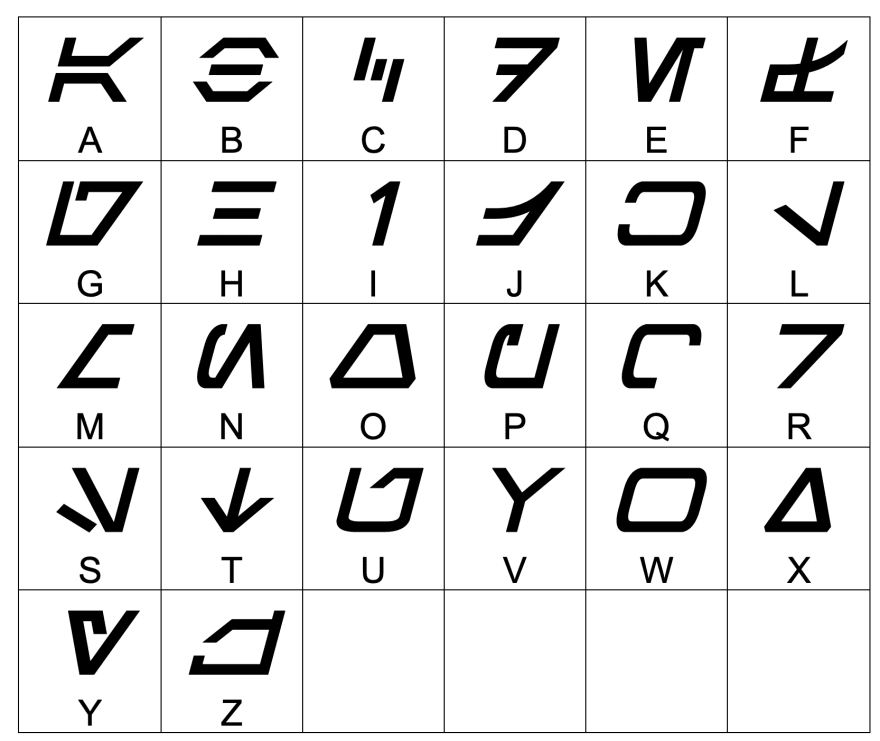

I finished week 2 by building a model that predicts which character of the Aurebesh (Star Wars alphabet) a handwritten letter is.

Using a ResNet-34 architecture, the model achieved 97% accuracy on a small dataset, a subset of the Omniglot dataset (Lake, B. M., Salakhutdinov, R., and Tenenbaum, J. B. (2015). Human-level concept learning through probabilistic program induction. Science, 350(6266), 1332-1338). But when I deployed it, the real-world accuracy was much lower. You can see it in action on Render, and my GitHub repo has both my model and the fast.ai Docker container for the frontend.

Some thoughts (I should just call them #$@#ing issues, but thoughts gives the impression that I have some professional distance).

- The sample image of the alphabet is broken. I double-checked index.html: it worked locally and the image is present in my GitHub repo. So maybe it's my Docker container or my Render service? Both are new to me and I don't have a deep grasp of what is doing what. And there is so much to learn that with frontend and DevOps ... well, I try a bit and then I need to move on. But hey, my model works... kinda... sorta...

- It turns out my model's real-world accuracy is much, much lower. How much lower? I don't know, I didn't bother calculating. I expected it wouldn't be great, so it's not really a surprise. But hey! I made an attempt. I suspect having more than 20 black and white images of each character would help, given that real-world test cases are using color pictures taken in real-world situations.
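Getting an actual number for that real-world accuracy wouldn't take much. Here is a minimal sketch, assuming I hand-label a batch of real photos and write down what the model predicts for each one. The function name `accuracy_report` and the sample data are made up for illustration; they are not from my repo or from fast.ai.

```python
from collections import Counter


def accuracy_report(pairs):
    """Compute overall and per-letter accuracy.

    pairs: iterable of (true_label, predicted_label) tuples.
    Returns (overall_accuracy, {label: accuracy_for_that_label}).
    """
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one labeled prediction")

    # How many examples of each true letter, and how many were predicted correctly.
    total = Counter(true for true, _ in pairs)
    correct = Counter(true for true, pred in pairs if true == pred)

    overall = sum(correct.values()) / len(pairs)
    per_class = {label: correct[label] / count for label, count in total.items()}
    return overall, per_class


# Hypothetical hand-labeled results from real-world photos (made-up values).
sample = [
    ("esk", "esk"),
    ("esk", "aurek"),
    ("aurek", "aurek"),
    ("besh", "besh"),
]

overall, per_class = accuracy_report(sample)
print(f"overall: {overall:.2f}")
for label, acc in sorted(per_class.items()):
    print(f"{label}: {acc:.2f}")
```

The per-letter breakdown is the part I'd care about most, since it would show whether a few letters are dragging everything down or the whole model struggles on real photos.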

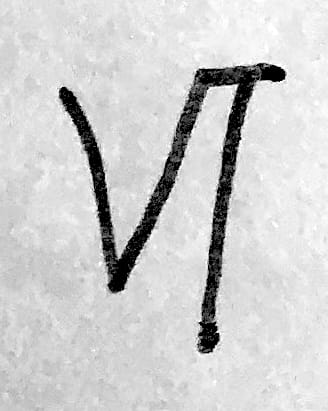

Say hello to my friend the letter Esk (aka 'E')

- I would like to gather more samples of handwritten letters, and I figure I know enough fans to help me crowdsource scores of samples per letter (vs. 20). Some questions I have: how important is it to get clean letters vs. noisy ones? I suspect the more the data represents what you would find in the wild, the better. Alternatively, I could augment what I've got. And I would like to train it on more dirty printed characters as well.

- A nice goal would be to get it to translate complete lines of text, which requires character/line detection. That might help with my accuracy, since the characters in the training set take up the bulk of each image, while the ones in the photos I've been throwing at it take up much less. That seems like a reasonable constraint to have on the training/test sets and a possible next step for gathering data.

"Warning. Rendering Fat" At least that's what I think it says. Sure would be nice to have a model that would do the translation for me. Source: Mercury News

- Finally, Render is pooping out after an image or two and I think it's related to running out of memory, but again, I haven't had a chance to investigate and understand why.

I would love to get it to the point where it detects complete written lines of Aurebesh and deciphers them, making it easy to snap pics from the movies, shows, Galaxy's Edge, books, etc. without having to actually know Aurebesh. But all of this is in service to me learning the skills I need to get a job. So we'll see how it fits in with the rest of my studying. For now, Fast.ai encourages a "just keep swimming" attitude toward uncertainty, so that's my plan.
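As a first small step toward the character detection mentioned above, here is a rough sketch of how I could measure how much of an image a character actually fills, and crop down to it. It assumes a grayscale image stored as a list of rows, with 0 for black and 255 for white. The function names, the threshold of 128, and the toy image are all hypothetical, and real photos would almost certainly need something more robust than a fixed threshold.

```python
def ink_bounding_box(pixels, threshold=128):
    """Return (top, left, bottom, right), inclusive, of all pixels darker
    than `threshold`, or None if the image has no dark pixels."""
    width = len(pixels[0])
    rows = [r for r, row in enumerate(pixels) if any(v < threshold for v in row)]
    cols = [c for c in range(width) if any(row[c] < threshold for row in pixels)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]


def coverage(pixels, box):
    """Fraction of the whole image area taken up by the bounding box."""
    top, left, bottom, right = box
    box_area = (bottom - top + 1) * (right - left + 1)
    return box_area / (len(pixels) * len(pixels[0]))


def crop(pixels, box):
    """Return only the part of the image inside the bounding box."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in pixels[top:bottom + 1]]


# Toy 6x6 image: a small dark mark in a mostly white frame.
W, B = 255, 0
image = [
    [W, W, W, W, W, W],
    [W, W, W, W, W, W],
    [W, W, B, B, W, W],
    [W, W, B, W, W, W],
    [W, W, W, W, W, W],
    [W, W, W, W, W, W],
]

box = ink_bounding_box(image)
fraction = coverage(image, box)
cropped = crop(image, box)
print(box, round(fraction, 3))
```

Comparing that coverage fraction between the training images and my real-world photos would at least tell me how big the mismatch is before I commit to building proper character detection.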

And if anyone knows exactly why Render is choking or my image isn't loading or how to design a dataset better, I welcome feedback.

Top comments (2)

Lots of interesting technical issues in here that I'm going to think about.

I wonder if an intermediate project might be to classify whether the image has the language in it. Then you're dealing with yes/no output rather than the full range of possible sentences.

Which fast.ai course is this related to? And does it point you to the Aurebesh character recognition, or was that your choice?

The question I think you are asking is: does it make sense to have an "aurebesh or not" classifier before doing an aurebesh letter classifier? From a deep learning perspective, I don't think it matters. The two are both straightforward to implement (and harder to generate enough data for), and one doesn't build on the work of the other. A bit like how making an "animal or not" classifier doesn't really help with making a "cat or dog" classifier.

It's from week 2 of Practical Deep Learning for Coders (course.fast.ai/videos/?lesson=2). The assignment was basically, here's a bunch of ideas about how to throw stuff together, now go forth and make an image classifier. That level of hands-off is very typical for fast.ai, which is in part why I love it so much. The character recognition was my choice. I'm a big Star Wars fan! But also I was looking for images to classify and the data set was already pre-generated. The sample problem that was worked through was "teddy bear, grizzly bear or black bear"; other students have done "cricket or baseball". It's a fun project.