That DuckDB is gaining more and more attention is no accident. As a rising star in desktop analytics, it masters SQL with ease, effortlessly handling CTE recursive queries, multi-layered window functions, and complex JOINs. It can even handle aggregations on datasets with hundreds of millions of rows with ease—just run a SELECT AVG(revenue) FROM terabyte_table GROUP BY region, and it’s done. Such capability is truly commendable. But even the strongest warriors have their Achilles’ heel, and DuckDB’s shortcomings lie in those areas where SQL falls short but business demands persist.

Encountering “unconventional requirements”

For example, the boss wants to calculate “the moving average of each customer’s last 3 order amounts, limited to weekend orders.” Want to do this in SQL? Be ready for a three-layer nested query: first, filter weekend orders; then, group and sort by customer; finally, calculate the moving average using a window function.

Of course, you can write it with some effort, but this isn’t a one-time job. Similar requirements are common, and putting in so much effort every time isn’t a practical in the long run.

And there’s an even more frustrating issue: flow control. Want to create a loop in SQL or dynamically adjust calculation logic based on conditions? For example, “If today’s sales increase exceeds 10%, then run promotional calculations; otherwise, skip.” Unfortunately, SQL’s IF and LOOP statements are too limited to be practical. The convoluted code you manage to write becomes incomprehensible even to you after just three days.

Resorting to Python?

Of course, DuckDB has a backup plan: “For tasks that SQL struggles with, hand them off to Python! There’s nothing wrong with this plan. DuckDB’s Python API is indeed easy to use; a simple conn.sql().to_df() lets you seamlessly switch to pandas.

For example, to calculate “double reward points when a client’s consecutive purchase days exceed 5,” SQL’s window functions can calculate consecutive dates, but handling dynamic conditions is cumbersome. In comparison, Python is more straightforward:

df = duckdb.sql("SELECT client, order_date FROM orders ORDER BY client, order_date").df()

df['order_date'] = pd.to_datetime(df['order_date'])

results = []

for client, group in df.groupby("client"):

streak = 1

prev_date = None

for date in group["order_date"]:

if prev_date and (date - prev_date).days == 1:

streak += 1

else:

streak = 1

if streak >= 5:

results.append({"client": client, "bonus": "Doubling"})

prev_date = date

print(results)

The code works, but the data must be exported from DuckDB to a DataFrame. A logically coherent business requirement has to be split into SQL preprocessing and Python post-processing, forcing you to keep switching between the two. This approach is not only awkward to develop and debug but also wastes significant time on data transfer, driving you crazy.

Moreover, Python doesn’t excel at everything. It lacks big data processing capabilities and native parallel computing support for large datasets, making it inferior to DuckDB in this regard.

SPL is a good solution

With esProc SPL, these problems vanish. It’s like a combined-evolved version of ‘DuckDB + Python’, handling everything in one system, making it simpler and more efficient.

Like DuckDB, esProc SPL offers SQL support. SQL can be run directly on common files such as CSV and Excel. For example, query sales data:

$SELECT region, SUM(amount) FROM sales.csv GROUP BY region

Such lightweight operations are a breeze for esProc. Moreover, it offers a binary file format with good compression ratio and fast data I/O – capabilities on par with DuckDB.

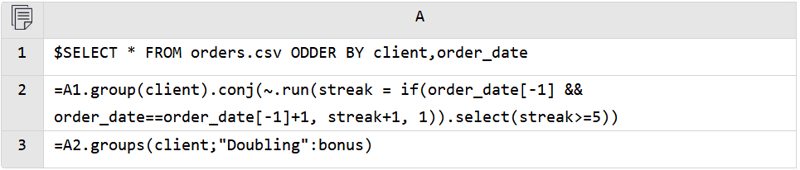

Furthermore, for complex requirements, esProc provides native SPL syntax as a backup. For example, for the consecutive purchase reward task mentioned earlier, written in SPL:

This code not only identifies eligible clients for double rewards but also retrieves their purchase details (A3). Doesn’t it feel like you’re getting ahead of the business needs?

SPL also provides far better JSON support compared to DuckDB, allowing you to directly navigate through nested data with simple dot notation: example.contact(1).metadata.verified, which is much cleaner than DuckDB’s json_extract(contact[1], '$.metadata.verified').

SPL offers robust support to address Python’s weakness in handling big data. For large datasets, SPL’s cursor mechanism will show you how easy it is:

=file("huge.log").cursor@t()

=A1.groups(;sum(amount):total, count(~):rows)

Data is read in a stream, with only the current batch held in memory. This allows files of hundreds of gigabytes to run smoothly. Additionally, it supports parallel and segmented processing:

=file("huge.log").cursor@tm(;4) //4-thread parallel processing

DuckDB + Python is like using chopsticks for a steak dinner – each works well individually but the combination feels awkward. What about esProc SPL? Think of it as a full-stack kitchen suite: it combines SQL’s rigor with Python’s flexibility, enhanced with a universal toolkit for multi-source data mixed computation + big data processing—all seamlessly integrated into one system. Isn’t the ultimate dream of data engineers to write less code and slack off? SPL might just be the closest shortcut to achieving that.

Top comments (0)