I'm currently configuring a Redis server as our backend caching service, and along the way I wrote this post about some things to pay attention to in the Redis config file.

It's short, but with links to other posts and documentation.

Topics:

- Redis server-level config
  - timeout
  - tcp-keepalive
  - RDB Persistence
  - AOF persistence
  - maxmemory
  - maxmemory-policy
  - unixsocket
  - loglevel
- OS-level config
  - Transparent Huge Page
  - maxclients and fs.file-max
  - tcp-backlog and net.core.somaxconn
    - What is the TCP backlog and net.core.somaxconn
  - vm.overcommit_memory
  - vm.swappiness
- Useful links

Let’s begin with the redis-benchmark utility.

It is installed together with the Redis server, so it can be used right after installation:

root@bttrm-dev-app-1:/home/admin# redis-benchmark -p 6389 -n 1000 -c 10 -k 1

====== 1 ======

1000 requests completed in 0.03 seconds

10 parallel clients

3 bytes payload

keep alive: 1

98.30% <= 1 milliseconds

99.30% <= 2 milliseconds

100.00% <= 2 milliseconds

30303.03 requests per second
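If you want to compare such runs from a script, the latency distribution above is easy to parse. A minimal Python sketch, assuming the older redis-benchmark output format shown here (newer Redis versions print a different latency summary); parse_benchmark and latency_at are hypothetical helper names:

```python
import re

# Output of the redis-benchmark run above (older output format).
SAMPLE = """\
====== 1 ======
1000 requests completed in 0.03 seconds
10 parallel clients
3 bytes payload
keep alive: 1

98.30% <= 1 milliseconds
99.30% <= 2 milliseconds
100.00% <= 2 milliseconds

30303.03 requests per second
"""

PCT_RE = re.compile(r"^\s*([\d.]+)% <= ([\d.]+) milliseconds")
RPS_RE = re.compile(r"^\s*([\d.]+) requests per second")


def parse_benchmark(text):
    """Return ([(percent, max_ms), ...], requests_per_second)."""
    dist, rps = [], None
    for line in text.splitlines():
        if m := PCT_RE.match(line):
            dist.append((float(m.group(1)), float(m.group(2))))
        elif m := RPS_RE.match(line):
            rps = float(m.group(1))
    return dist, rps


def latency_at(dist, percentile):
    """Smallest reported latency bound (ms) covering >= `percentile` % of requests."""
    for pct, max_ms in dist:
        if pct >= percentile:
            return max_ms
    return None


dist, rps = parse_benchmark(SAMPLE)
print(f"p99 <= {latency_at(dist, 99.0)} ms at {rps} requests/s")
```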

You can also use redis-cli with the --latency or --latency-dist options, e.g. redis-cli -p 6389 --latency.

Redis server-level config

timeout

Close the connection if a client has been idle for N seconds.

Set it to 0 to disable (idle clients will stay connected until the server is restarted):

...

timeout 0

...

In general, I can't see any reason to change the default value of 0.
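The rule itself is simple. Here is a minimal Python model of it, assuming the behaviour as I understand it from the Redis source: a client is dropped once it has been idle for more than timeout seconds, 0 disables the check, and Pub/Sub subscribers are skipped (replicas and blocked clients are skipped too, but left out here for brevity). Client and idle_clients_to_close are hypothetical names, not Redis APIs:

```python
from dataclasses import dataclass


@dataclass
class Client:
    name: str
    last_interaction: float  # time of the client's last command, in seconds
    pubsub: bool = False     # subscribed Pub/Sub clients are never timed out


def idle_clients_to_close(clients, timeout, now):
    """Clients a server configured with `timeout` would disconnect at time `now`."""
    if timeout == 0:  # timeout 0 disables the idle check entirely
        return []
    return [c for c in clients
            if not c.pubsub and now - c.last_interaction > timeout]


clients = [Client("web-1", 0.0), Client("web-2", 250.0), Client("sub", 0.0, pubsub=True)]
print([c.name for c in idle_clients_to_close(clients, 300, now=400.0)])  # ['web-1']
print(idle_clients_to_close(clients, 0, now=400.0))                      # []
```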

tcp-keepalive

See more at Redis Clients Handling.

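Note that, per the Redis Clients Handling documentation, the idle timeout does not apply to Pub/Sub clients (real-time PUBLISH/SUBSCRIBE connections); see the Redis Pub/Sub: Intro Guide and Redis Pub/Sub… How Does it Work?

With tcp-keepalive enabled, the server sends TCP keepalive probes (ACK packets) to idle clients after the number of seconds set by this parameter, to detect dead peers and keep the connection alive: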

...

tcp-keepalive 300

...

Check the current value with:

127.0.0.1:6389> CONFIG GET tcp-keepalive

1) "tcp-keepalive"

2) "300"

The default value is 300 seconds (it may differ between Redis versions).

If the client doesn't respond to the keepalive probes, the connection is closed.

If both timeout and tcp-keepalive are set to 0 (i.e. disabled) on the server side, dead connections will stay open until the server restarts.

See more at Things that you may want to know about TCP Keepalives.
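On Linux, Redis turns the single tcp-keepalive value into three kernel socket options: the first probe after N seconds of silence, further probes every N/3 seconds, and the connection is considered dead after 3 unanswered probes, so a dead peer is detected after roughly twice N seconds. A minimal Python sketch applying the same timings to a socket; the helper names are hypothetical, and since TCP_KEEPIDLE and friends are Linux-specific, elsewhere the sketch only sets SO_KEEPALIVE:

```python
import socket


def redis_style_keepalive(interval):
    """(idle, intvl, cnt) derived from tcp-keepalive, mirroring Redis on Linux.

    idle:  seconds of silence before the first probe
    intvl: seconds between unanswered probes (interval / 3, at least 1)
    cnt:   unanswered probes before the kernel drops the connection
    """
    return interval, max(interval // 3, 1), 3


def apply_keepalive(sock, interval):
    """Enable TCP keepalive on `sock` with the timings above."""
    idle, intvl, cnt = redis_style_keepalive(interval)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    if hasattr(socket, "TCP_KEEPIDLE"):  # Linux-only socket options
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPIDLE, idle)
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPINTVL, intvl)
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPCNT, cnt)


idle, intvl, cnt = redis_style_keepalive(300)
# Worst-case time to detect a dead peer: idle + intvl * cnt seconds.
print(idle, intvl, cnt, idle + intvl * cnt)  # 300 100 3 600

with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
    apply_keepalive(s, 300)
```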

To see how reusing connections affects performance in general, run the benchmark with -k 1 (keep connections alive; note that this is redis-benchmark's own option, not the server's tcp-keepalive):

root@bttrm-dev-app-1:/home/admin# redis-benchmark -p 6389 -n 1000 -c 10 -k 1 -q | tail -2

MSET (10 keys): 16129.03 requests per second

And with -k 0, reconnecting for every request:

root@bttrm-dev-app-1:/home/admin# redis-benchmark -p 6389 -n 1000 -c 10 -k 0 -q | tail -2

MSET (10 keys): 7042.25 requests per second

Again, I can't see any reason to change the default value here.
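To compare two -q runs without doing the math by hand, here is a small Python sketch that parses result lines and prints the ratio (parse_quiet is a hypothetical helper; it ignores any extra fields newer redis-benchmark versions append after "requests per second"):

```python
import re

QUIET_RE = re.compile(r"^(?P<test>.+?): (?P<rps>[\d.]+) requests per second")


def parse_quiet(line):
    """Split one `redis-benchmark -q` result line into (test name, requests/sec)."""
    m = QUIET_RE.match(line.strip())
    if m is None:
        raise ValueError(f"unexpected line: {line!r}")
    return m.group("test"), float(m.group("rps"))


keep = parse_quiet("MSET (10 keys): 16129.03 requests per second")
reconnect = parse_quiet("MSET (10 keys): 7042.25 requests per second")
print(f"{keep[0]}: {keep[1] / reconnect[1]:.2f}x faster with -k 1")
# MSET (10 keys): 2.29x faster with -k 1
```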

RDB Persistence

RDB persistence writes a point-in-time snapshot of the whole dataset to disk. See the Redis Persistence documentation.

Its behavior is controlled by the save option (see also Redis save, SAVE and BGSAVE).

Check the current value:

127.0.0.1:6379> CONFIG GET save

1) "save"

2) "3600 1 300 100 60 10000"
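The save value is a list of <seconds> <changes> pairs: a snapshot is taken when at least one pair is satisfied, i.e. more than seconds have passed since the last save and at least changes keys were modified. A simplified Python sketch of that check (it ignores details such as the retry delay after a failed BGSAVE; the function names are hypothetical):

```python
def parse_save(value):
    """Turn a save string like "3600 1 300 100" into [(seconds, changes), ...]."""
    parts = value.split()
    if len(parts) % 2:
        raise ValueError("save expects <seconds> <changes> pairs")
    return [(int(parts[i]), int(parts[i + 1])) for i in range(0, len(parts), 2)]


def snapshot_due(rules, seconds_since_save, changes_since_save):
    """True if any rule matches: more than N seconds passed AND >= M keys changed."""
    return any(seconds_since_save > secs and changes_since_save >= changes
               for secs, changes in rules)


rules = parse_save("3600 1 300 100 60 10000")
print(rules)                          # [(3600, 1), (300, 100), (60, 10000)]
print(snapshot_due(rules, 120, 50))   # False: no rule has both conditions met
print(snapshot_due(rules, 400, 150))  # True: more than 300 s and >= 100 changes
print(parse_save(""))                 # []: an empty save value means no snapshots
```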

Remove it:

127.0.0.1:6379> CONFIG SET save ""

OK

127.0.0.1:6379> CONFIG GET save

1) "save"

2) ""

Write the new settings to the config file:

127.0.0.1:6389> CONFIG rewrite

OK

When Redis is used for caching only, persistence can be disabled: set save "" in the config file (in recent Redis versions, removing the save lines entirely makes Redis fall back to its default save rules).

Still, the same mechanism is used for master-slave replication, if you use it (see more in the Redis: replication, part 1 – an overview. Replication vs Sharding. Sentinel vs Cluster. Redis topology. post).

AOF persistence

Append Only File: logs every write operation received by the server to a file.

As with RDB, when Redis is used for caching only, there is no need for this option.

To disable it, set appendonly to no:

...

appendonly no

...

maxmemory

maxmemory sets the maximum amount of the host's memory that Redis may allocate.

See the Using Redis as an LRU cache.

Note that a bare number is interpreted as bytes, not as a percentage, so this sets an 80-byte limit:

127.0.0.1:6389> CONFIG SET maxmemory 80

OK

Or with a megabyte/gigabyte suffix:

127.0.0.1:6389> CONFIG SET maxmemory 1gb

OK

127.0.0.1:6389> CONFIG GET maxmemory

1) "maxmemory"

2) "1073741824"

Or set it to 0 to remove the limit entirely, which is the default on 64-bit systems. 32-bit systems use an implicit 3 GB limit.

When the limit is reached, Redis decides what to do based on the maxmemory-policy option.

Keeping in mind that memcached and PHP-FPM workers also run on the backend hosts, let's set this limit to 50% of RAM.
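Redis memory values use decimal units (k, m, g) and binary ones (kb, mb, gb), and a bare number means bytes. A Python sketch that converts such values and calculates a 50% maxmemory value from the host's RAM; the helpers are hypothetical, and os.sysconf with SC_PHYS_PAGES assumes a Linux host:

```python
import os
import re

# Redis memory units: k/m/g are powers of 1000, kb/mb/gb are powers of 1024.
# A bare number means bytes; there is no percentage form for maxmemory.
UNITS = {"": 1, "b": 1, "k": 1000, "kb": 1024, "m": 1000**2, "mb": 1024**2,
         "g": 1000**3, "gb": 1024**3}


def parse_memory(value):
    """Convert a redis.conf memory value such as '1gb' into bytes."""
    m = re.fullmatch(r"(\d+)([a-z]*)", value.strip().lower())
    if m is None or m.group(2) not in UNITS:
        raise ValueError(f"bad memory value: {value!r}")
    return int(m.group(1)) * UNITS[m.group(2)]


def maxmemory_for_share(total_bytes, share):
    """maxmemory value in whole megabytes for `share` of RAM, e.g. 0.5 for 50%."""
    return f"{int(total_bytes * share) // 1024**2}mb"


def total_ram_bytes():
    """Physical RAM as reported by the OS."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


print(parse_memory("1gb"))  # 1073741824, the same value CONFIG GET returned above
print(f"maxmemory {maxmemory_for_share(total_ram_bytes(), 0.5)}")
```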

maxmemory-policy

Sets the policy Redis applies when it reaches the maxmemory limit.

Note: LRU stands for Least Recently Used.

It can be one of the following:

- volatile-lru: evict the least recently used keys among those with an expire set
- allkeys-lru: evict the least recently used keys, regardless of whether an expire is set
- volatile-random: evict random keys among those with an expire set
- allkeys-random: evict random keys, regardless of whether an expire is set
- volatile-ttl: evict the keys with the shortest remaining TTL
- noeviction: do not evict keys at all; just return an error on write operations

To check a key's expiration, use TTL or PTTL:

127.0.0.1:6379> set test "test"

OK

127.0.0.1:6379> ttl test

(integer) -1

127.0.0.1:6379> pttl test

(integer) -1

127.0.0.1:6379> EXPIRE test 10

(integer) 1

127.0.0.1:6379> ttl test

(integer) 8

127.0.0.1:6379> ttl test

(integer) 7

127.0.0.1:6379> ttl test

(integer) 7
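TTL returns -1 for a key that exists but has no expire, -2 for a key that does not exist (or has already expired), and otherwise the remaining time rounded to seconds. A toy Python model of these semantics with an injectable clock so it can be tested; TinyTTLStore is a hypothetical class, not how Redis stores expires internally:

```python
import time


class TinyTTLStore:
    """Hypothetical in-memory model of SET/EXPIRE/TTL/PTTL semantics."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}
        self._expires = {}  # key -> absolute deadline in seconds

    def set(self, key, value):
        self._data[key] = value
        self._expires.pop(key, None)  # a plain SET clears any previous expire

    def expire(self, key, seconds):
        if key not in self._data:
            return 0
        self._expires[key] = self._clock() + seconds
        return 1

    def _expire_if_needed(self, key):
        deadline = self._expires.get(key)
        if deadline is not None and self._clock() > deadline:
            del self._data[key], self._expires[key]  # lazy expiration on access

    def pttl(self, key):
        self._expire_if_needed(key)
        if key not in self._data:
            return -2  # no such key
        if key not in self._expires:
            return -1  # key exists but has no expire
        return int((self._expires[key] - self._clock()) * 1000)

    def ttl(self, key):
        ms = self.pttl(key)
        return ms if ms < 0 else (ms + 500) // 1000  # round to whole seconds
```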

In our case, the backend developers aren't sure that an expire is set on every key, and Redis will be used for caching only, so we can set maxmemory-policy allkeys-lru: the volatile-* policies only consider keys that have an expire, so keys without one would never be evicted.
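A toy Python model of the difference between the two LRU policies. It is hypothetical: it limits the number of keys (standing in for maxmemory) and uses exact LRU, while Redis counts bytes and approximates LRU by sampling maxmemory-samples keys:

```python
from collections import OrderedDict


class LRUCache:
    """Toy model of allkeys-lru vs volatile-lru eviction (not Redis code)."""

    def __init__(self, max_keys, policy="allkeys-lru"):
        self.max_keys = max_keys
        self.policy = policy
        self.data = OrderedDict()  # key -> (value, has_expire), least recent first

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key][0]

    def set(self, key, value, has_expire=False):
        if key in self.data:
            self.data.move_to_end(key)
        self.data[key] = (value, has_expire)
        while len(self.data) > self.max_keys:
            if not self._evict_one():
                del self.data[key]  # nothing evictable: reject the write
                raise MemoryError("OOM command not allowed when used memory > 'maxmemory'")

    def _evict_one(self):
        # allkeys-lru may evict any key; volatile-lru only keys with an expire.
        victim = next((k for k, (_, has_expire) in self.data.items()
                       if self.policy == "allkeys-lru" or has_expire), None)
        if victim is None:
            return False
        del self.data[victim]
        return True


cache = LRUCache(2, "allkeys-lru")
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")           # "a" is now more recently used than "b"
cache.set("c", 3)        # evicts "b"
print(list(cache.data))  # ['a', 'c']
```

With volatile-lru and no expires set, the same sequence would fail on the third write instead of evicting anything.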

unixsocket

If Redis and the application run on the same host, you can try using UNIX sockets instead of TCP connections.

Configure Redis to use a socket:

...

unixsocket /tmp/redis.sock

unixsocketperm 755

...

This can noticeably improve performance; see more in Tuning Redis for extra Magento performance.

Let's check it with redis-benchmark.

Create a test config:

unixsocket /tmp/redis.sock

unixsocketperm 775

port 0

Start Redis with it:

root@bttrm-dev-console:/etc/redis-cluster# redis-server /etc/redis-cluster/test.conf

Benchmark via the socket:

root@bttrm-dev-console:/home/admin# redis-benchmark -s /tmp/redis.sock -n 1000 -c 100 -k 1

...

90909.09 requests per second

And via TCP port:

root@bttrm-dev-console:/home/admin# redis-benchmark -p 7777 -n 1000 -c 100 -k 1

...

66666.67 requests per second

90909.09 vs 66666.67 requests per second, i.e. about 36% more throughput via the socket.
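Note that the two snippets above use different socket permissions (755 and 775). On Linux, connecting to a Unix stream socket requires write permission on the socket file, so with 755 only the owner (the redis user) can connect, while 775 also lets members of its group in, e.g. in a hypothetical setup where the PHP-FPM user is added to the redis group. A simplified Python check (can_connect is a hypothetical helper; it ignores root, ACLs and the parent directory's permissions):

```python
import stat


def can_connect(perm, is_owner, in_group):
    """Can a non-root user connect() to a Unix socket created with `perm` (e.g. 775)?

    Linux requires write permission on the socket file to connect. As in the
    kernel, only the owner bits apply to the owner and only the group bits to
    group members.
    """
    mode = int(str(perm), 8)
    if is_owner:
        return bool(mode & stat.S_IWUSR)
    if in_group:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)


# A group member of the socket's group, with each of the modes used above:
for perm in (755, 775):
    print(perm, can_connect(perm, is_owner=False, in_group=True))
# 755 False
# 775 True
```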

loglevel

Log verbosity level. debug is the most detailed and therefore the most expensive in terms of host resources (CPU, etc.).

It can be set to debug, verbose, notice, or warning.

The default is notice, and while the application is still being configured it can be left at this value.

OS-level config

Transparent Huge Page

A Linux kernel feature that automatically backs process memory with huge pages (typically 2 MB instead of the regular 4 KB) to reduce the overhead of virtual memory management. See more at the Transparent Hugepages: measuring the performance impact, Disable Transparent Hugepages, and Latency induced by transparent huge pages.

For Redis, judging by the documentation, THP matters mainly when RDB persistence is enabled (huge pages make the copy-on-write after fork() more expensive), but it can also be disabled entirely.

You can check the current value by calling:

root@bttrm-dev-app-1:/home/admin# cat /sys/kernel/mm/transparent_hugepage/enabled

always [madvise] never

The value in [] is the one currently in use: madvise.

madvise tells the kernel to use THP only for memory regions that explicitly request it with the madvise() call.

THP usage can be checked with:

root@bttrm-dev-app-1:/home/admin# grep HugePages /proc/meminfo

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0
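If you want to check these values from a script (e.g. a monitoring check), both files are easy to parse. A minimal Python sketch; the function names are hypothetical and the sample strings are the outputs shown above:

```python
import re


def active_thp_mode(enabled_text):
    """Return the active (bracketed) value from .../transparent_hugepage/enabled."""
    m = re.search(r"\[(\w+)\]", enabled_text)
    return m.group(1) if m else None


def anon_hugepages_kb(meminfo_text):
    """AnonHugePages from /proc/meminfo in kB: anonymous memory backed by THP."""
    for line in meminfo_text.splitlines():
        if line.startswith("AnonHugePages:"):
            return int(line.split()[1])
    return None


# Sample values taken from the outputs above; on a live host read the files instead.
print(active_thp_mode("always [madvise] never"))                       # madvise
print(anon_hugepages_kb("AnonHugePages: 0 kB\nShmemHugePages: 0 kB"))  # 0
```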

maxclients and fs.file-max

Sets the maximum number of simultaneously connected clients.

The default value is 10000 and can be changed with maxclients; see Maximum number of clients.

At the same time, Redis is bound by operating system limits, both the kernel-wide limit, sysctl fs.file-max:

root@bttrm-dev-app-1:/home/admin# sysctl fs.file-max

fs.file-max = 202080

root@bttrm-dev-app-1:/home/admin# cat /proc/sys/fs/file-max

202080

And the per-process limit, set with ulimit:

root@bttrm-dev-app-1:/home/admin# ulimit -Sn

1024

On systemd-based systems, this limit can be set in the Redis unit file with the LimitNOFILE option:

root@bttrm-dev-app-1:/home/admin# cat /etc/systemd/system/redis.service | grep LimitNOFILE

LimitNOFILE=65535
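Redis reserves some file descriptors for its own needs (32, CONFIG_MIN_RESERVED_FDS in the source), so it needs maxclients + 32 descriptors. At startup it tries to raise its own open-files limit, and if it can't, it lowers maxclients instead, e.g. to 992 with the common 1024 ulimit. A simplified Python model of that final calculation, using the standard resource module (Unix only); effective_maxclients is a hypothetical name:

```python
import resource

RESERVED_FDS = 32  # CONFIG_MIN_RESERVED_FDS in the Redis source


def effective_maxclients(maxclients, nofile_limit):
    """Simplified model: how many clients fit into an open-files limit."""
    if nofile_limit == resource.RLIM_INFINITY:
        return maxclients
    return max(0, min(maxclients, nofile_limit - RESERVED_FDS))


soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
print(f"nofile soft={soft} hard={hard}: maxclients 10000 -> "
      f"{effective_maxclients(10000, soft)} with the soft limit")
```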

tcp-backlog and net.core.somaxconn

Redis sets the length of the queue of established connections waiting to be accepted to the tcp-backlog value (511 by default).

However, the operating system has its own limit, net.core.somaxconn, and if it is lower than the Redis value, Redis logs a warning:

The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128

What is the TCP backlog and net.core.somaxconn

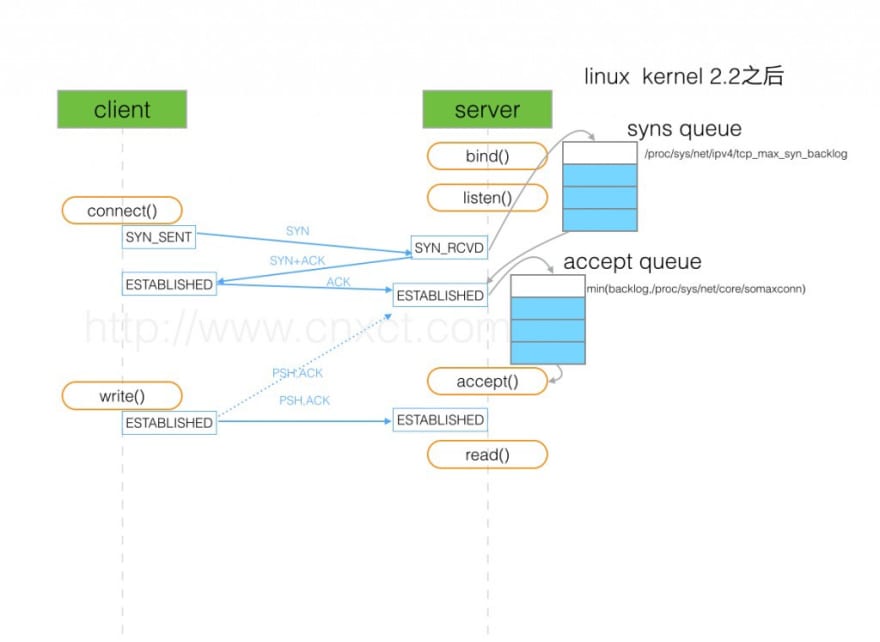

To better understand where the tcp-backlog is applied and what role net.core.somaxconn plays, let's review how a TCP session is established:

- the server: the application calls the listen() syscall, passing it the socket's file descriptor and, as the second argument, the accept backlog size (the tcp-backlog value from redis.conf)
- the client: the application calls connect() and sends a SYN packet to the server; on the client side, the connection changes its state to SYN_SENT
- the server: the new connection is set to the SYN_RCVD state and saved in the SYN backlog (net.ipv4.tcp_max_syn_backlog), the incomplete connection queue
- the server: sends SYN+ACK
- the client: sends ACK and changes the connection's state to ESTABLISHED
- the server: receives the ACK, sets the connection state to ESTABLISHED and moves it to the accept backlog, the complete connection queue
- the server: calls accept(), taking a connection from the accept backlog
- the client: calls write() and starts sending data
- the server: calls the read() syscall and starts receiving data
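These steps can be observed with plain sockets: the kernel completes the handshake and queues the connection in the accept backlog before the server application ever calls accept(). A minimal Python sketch over localhost (511 just mirrors the Redis default tcp-backlog):

```python
import socket

# Server side: socket() + bind() + listen(backlog), but no accept() yet.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0: let the kernel pick a free port
server.listen(511)             # mirrors Redis' default tcp-backlog
port = server.getsockname()[1]

# Client side: connect() returns once the three-way handshake completes.
# The kernel finishes the handshake and parks the connection in the accept
# backlog even though the server application has not called accept() yet.
client = socket.create_connection(("127.0.0.1", port))
client.sendall(b"PING\r\n")    # write(): data is buffered by the server's kernel

# Server side: accept() takes the ESTABLISHED connection off the queue,
# then recv() (read()) returns the bytes the client already sent.
conn, _ = server.accept()
data = conn.recv(64)
print(data)                    # b'PING\r\n'

for s in (conn, client, server):
    s.close()
```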

So, if Redis passes a tcp-backlog value to listen() that is greater than the kernel limit in net.core.somaxconn, the kernel silently caps it, and you'll get the "TCP backlog setting cannot be enforced" message.
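In other words, the effective queue length is the smaller of the two values. A minimal Python sketch of that rule and of the Redis startup check (the function names are hypothetical; the warning text is copied from the log line above):

```python
import os

SOMAXCONN_PATH = "/proc/sys/net/core/somaxconn"


def effective_backlog(tcp_backlog, somaxconn):
    """The kernel silently caps the listen() backlog at net.core.somaxconn."""
    return min(tcp_backlog, somaxconn)


def backlog_warning(tcp_backlog, somaxconn):
    """Mimic the Redis startup check; returns None when no warning is needed."""
    if somaxconn < tcp_backlog:
        return (f"The TCP backlog setting of {tcp_backlog} cannot be enforced because "
                f"{SOMAXCONN_PATH} is set to the lower value of {somaxconn}")
    return None


if os.path.exists(SOMAXCONN_PATH):  # Linux only
    with open(SOMAXCONN_PATH) as f:
        somaxconn = int(f.read())
    print("effective backlog:", effective_backlog(511, somaxconn))
    print(backlog_warning(511, somaxconn) or "no warning")
```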

The default value is 128 (since Linux 5.4, the kernel default is 4096):

root@bttrm-dev-app-1:/home/admin# sysctl net.core.somaxconn

net.core.somaxconn = 128

It can be updated with sysctl -w:

root@bttrm-dev-console:/home/admin# sysctl -w net.core.somaxconn=65535

net.core.somaxconn = 65535

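sysctl -w does not survive a reboot: to make the change persistent, add net.core.somaxconn = 65535 to /etc/sysctl.conf and reload it with:

root@bttrm-dev-console:/home/admin# sysctl -p

See TCP connection backlog – a struggling server and TCP Three-Way Handshake.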

vm.overcommit_memory

This is, for me, the most ambiguous parameter.

I’d highly recommend reading the Redis: fork – Cannot allocate memory, Linux, virtual memory and vm.overcommit_memory.

See also overcommit_memory and Background saving fails with a fork() error under Linux even if I have a lot of free RAM.

overcommit_memory comes into play when Redis snapshots its data from memory to disk, specifically during BGSAVE, when Redis calls fork() to create a child process.

In our current case, Redis is used for caching only and has no RDB or AOF persistence enabled, so there is no need to change overcommit_memory, and it's best to leave it at its default value of 0.

If you really want to set the boundaries yourself, it's best to use overcommit_memory = 2 and limit overcommit with the overcommit_ratio or overcommit_kbytes parameters.
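With overcommit_memory = 2, the kernel refuses allocations (including those made by fork() for BGSAVE) once committed memory would exceed CommitLimit: swap plus overcommit_ratio percent of RAM (minus huge pages), or swap plus overcommit_kbytes when that is set. A Python sketch of the formula as described in the kernel's overcommit-accounting documentation (commit_limit_kb is a hypothetical helper); compare its result with CommitLimit and Committed_AS in /proc/meminfo:

```python
def commit_limit_kb(mem_total_kb, swap_total_kb, overcommit_ratio=50,
                    overcommit_kbytes=0, hugetlb_kb=0):
    """CommitLimit in kB enforced when vm.overcommit_memory = 2.

    overcommit_kbytes, when non-zero, replaces the ratio-based part of the limit.
    hugetlb_kb is the memory reserved for explicit huge pages, excluded from RAM.
    """
    if overcommit_kbytes:
        return overcommit_kbytes + swap_total_kb
    return (mem_total_kb - hugetlb_kb) * overcommit_ratio // 100 + swap_total_kb


# Example: 8 GB of RAM, no swap, default overcommit_ratio of 50.
print(commit_limit_kb(8 * 1024 * 1024, 0))  # 4194304, i.e. only 4 GB can be committed
```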

vm.swappiness

If the operating system has swap configured, it can move some of Redis' data to disk, and when Redis later accesses that data, reading it back into memory can take a long time.

To avoid this, tell the kernel to avoid swapping as much as possible (vm.swappiness=0 minimizes swapping but does not disable swap entirely; for that, use swapoff -a):

root@bttrm-dev-console:/home/admin# sysctl -w vm.swappiness=0
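To check whether Redis has already been partially swapped out, look at the VmSwap field in its /proc/<pid>/status. A minimal Python sketch (Linux only; the helper names are hypothetical, and the demo inspects the current process; for Redis, pass its PID, e.g. from pidof redis-server):

```python
import os


def vm_swap_kb(status_text):
    """Parse VmSwap (kB) from the contents of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("VmSwap:"):
            return int(line.split()[1])
    return None


def process_swap_kb(pid):
    """How many kB of process `pid` are currently swapped out."""
    with open(f"/proc/{pid}/status") as f:
        return vm_swap_kb(f.read())


status_path = f"/proc/{os.getpid()}/status"
if os.path.exists(status_path):
    print("VmSwap of this process, kB:", process_swap_kb(os.getpid()))
```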

Useful links

- redis.conf

- sentinel.conf

- 5 Tips for Running Redis over AWS

- Redis: I like you, but you’re crazy

- Redis Best Practices and Performance Tuning

- Learn Redis the hard way (in production)

- Optimizing Redis Usage For Caching

- Benchmarking the experimental Redis Multi-Threaded I/O

- Redis configuration for production

- Things that you may want to know about TCP Keepalives

- Redis Configuration Controls

- Understanding the Top 5 Redis Performance Metrics

- Collection of our notes to tweak redis

- The impact of Transparent Huge Pages on system performance (in Russian)

- Redis latency due to Transparent Huge Pages

- Transparent Hugepages: measuring the performance impact

- Disable Transparent Hugepages

- Running Redis in production (2014)

- Redis configuration (in Russian)
