What do I need to know?

The concepts that enable this are the event loop, macrotasks and microtasks. There is also a working example at the end.

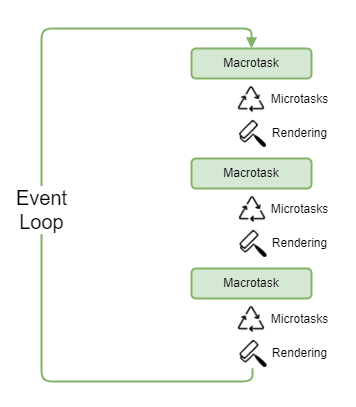

The event loop

The event loop is how a JavaScript runtime asynchronously executes queued tasks. It monitors the call stack and the task queue; when the call stack is empty, it processes the next item on the queue.

A single iteration of the loop executes one macrotask, then all microtasks queued during that iteration, and finally renders if needed. It repeats until there are no more tasks, then sleeps until a new task is added. Because rendering only happens once the current macrotask and its microtasks have finished, a long-running macrotask freezes the UI.
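To make that ordering concrete, here is a minimal sketch that records when synchronous code, microtasks and a macrotask run. The `order` array and its labels are illustrative names only, and the sketch assumes a browser or a recent Node.js.

```javascript
// Records the order in which each kind of work actually runs.
const order = [];

order.push('sync: start');

// Macrotask: runs in a later loop iteration, after the current task finishes.
setTimeout(() => order.push('macrotask: setTimeout'), 0);

// Microtasks: run as soon as the current task (this script) completes.
Promise.resolve().then(() => order.push('microtask: promise'));
queueMicrotask(() => order.push('microtask: queueMicrotask'));

order.push('sync: end');

// A later timer, used here only to print the result once everything has run.
setTimeout(() => console.log(order.join('\n')), 10);
```

Both synchronous entries are recorded first, then the two microtasks, and the setTimeout callback last, even though it was scheduled before either microtask.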

What is a macrotask (or just task)?

A macrotask is any task waiting to be processed by the event loop. The engine executes these tasks oldest first. Examples include:

- An event firing

- An external script finishing loading

- Callbacks scheduled with setTimeout, setInterval, setImmediate (non-standard, mainly Node.js) etc.

And microtasks?

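Microtasks are small functions executed right after the current macrotask completes. They are most commonly created when a promise settles (its then/catch/finally callbacks) or when an async function resumes after an await. All microtasks generated during the current loop iteration, including any queued by other microtasks, run before the next macrotask is executed.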
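The sketch below illustrates that rule, assuming a browser or a recent Node.js: a microtask queued by the first timer callback runs before the second timer callback, even though both timers were scheduled before the microtask existed. The `log` array is an illustrative name.

```javascript
const log = [];

// First macrotask: queues a microtask while it runs.
setTimeout(() => {
  log.push('macrotask 1');
  Promise.resolve().then(() => log.push('microtask queued by macrotask 1'));
}, 0);

// Second macrotask: scheduled before that microtask exists, yet runs after it.
setTimeout(() => {
  log.push('macrotask 2');
  console.log(log.join(' -> '));
}, 0);
```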

You can add microtasks directly with queueMicrotask:

    queueMicrotask(() => {
      // function contents here
    });

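Microtasks can queue other microtasks. Because the queue is drained completely before the next macrotask or render, a long chain of them can also freeze the UI, and a microtask that always queues another creates an infinite loop.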
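Here is a bounded sketch of that starvation, assuming any environment that provides `queueMicrotask`. A timer is scheduled first, but a chain of microtasks that keep re-queuing themselves all run before the timer callback gets a turn. `CHAIN_LENGTH`, `microtasksRun` and `seenByTimer` are illustrative names; removing the length limit would turn the chain into an infinite loop.

```javascript
const CHAIN_LENGTH = 1000; // kept finite so the example terminates
let microtasksRun = 0;
let seenByTimer = null;

// Scheduled first, but it is a macrotask, so it waits for the microtask queue to drain.
setTimeout(() => {
  seenByTimer = microtasksRun;
  console.log(`timer ran after ${seenByTimer} microtasks`);
}, 0);

// Each microtask queues the next one until the limit is reached.
function step() {
  microtasksRun++;
  if (microtasksRun < CHAIN_LENGTH) {
    queueMicrotask(step);
  }
}

queueMicrotask(step);
```

The timer callback only runs once all 1,000 microtasks have completed, which is why re-queuing work as microtasks gives the UI no chance to update.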

Using this to save your UI

If you need to execute an expensive operation that can be batched, such as iterating over a large array, you can run each batch as its own macrotask so that microtasks and rendering complete in between. Take the following example:

    let i = 0;

    function count() {
      do {
        i++;
      } while (i % 1e6 !== 0); // Process the next million.

      if (i === 1e9) { // We're going to 1 billion.
        console.log('Done!');
      } else {
        setTimeout(count); // Schedule the next batch
      }
    }

    count();

The code above splits the operation into 1,000 batches of one million iterations each. Between batches the UI can respond to events and update, rather than being stuck until the whole operation completes.

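Because events are macrotasks and rendering happens only after the microtask queue is empty, the next batch must be scheduled with a macrotask API such as setTimeout rather than queueMicrotask. Batches queued as microtasks would all run before any event handler or render, freezing the UI just like the unbatched loop.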
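The same pattern can be wrapped in a small reusable helper. The following is a minimal sketch, not the API of any particular library: `mapInBatches` and its `batchSize` parameter are hypothetical names chosen for illustration. It maps over an array one batch per macrotask and resolves a promise with the results.

```javascript
// Hypothetical helper: maps `items` with `fn`, yielding to the event loop between batches.
// Assumes batchSize is an integer >= 1.
function mapInBatches(items, fn, batchSize = 1000) {
  return new Promise((resolve, reject) => {
    const results = new Array(items.length);
    let index = 0;

    function runBatch() {
      try {
        const end = Math.min(index + batchSize, items.length);
        for (; index < end; index++) {
          results[index] = fn(items[index], index);
        }
      } catch (err) {
        reject(err); // surface errors from fn through the promise
        return;
      }

      if (index < items.length) {
        setTimeout(runBatch); // macrotask: events and rendering can run before the next batch
      } else {
        resolve(results);
      }
    }

    runBatch(); // the first batch runs immediately
  });
}

// Usage: square 10,000 numbers in batches of 1,000.
const numbers = Array.from({ length: 10000 }, (_, n) => n);
const squaresPromise = mapInBatches(numbers, (n) => n * n, 1000);
squaresPromise.then((squares) => console.log(squares.length, squares[9999]));
```

Returning a promise lets callers await the result while the batches themselves stay on the macrotask queue. A smaller `batchSize` keeps the UI more responsive at the cost of more timer overhead.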

concurrent-each

concurrent-each is a small library I wrote that leverages these concepts to enable expensive array processing while keeping the UI responsive.

It provides async array operations that push tasks onto the macrotask queue, preventing UI lock-up while large volumes of data are processed in batches.

| Normal Map | Concurrent-each Map |
|---|---|
| | |
