Foreword

Anthropic launched the Agent Skills framework in October 2025, which is a mechanism that enables general AI to acquire professional capabilities. This article analyzes the design philosophy of this framework and demonstrates how to build practical Agent Skills using Litho as an example.

Core Mechanisms of Agent Skills

Progressive Disclosure Design

According to the official Anthropic documentation, the core innovation of Agent Skills lies in adopting a "Progressive Disclosure" mechanism. While this concept originates from user experience design, it has new application dimensions in AI systems.

Understanding Progressive Disclosure

In traditional software, Progressive Disclosure refers to an interface design strategy: first display basic information, then show detailed features when users have needs. In Agent Skills, this concept is migrated to the cognitive level:

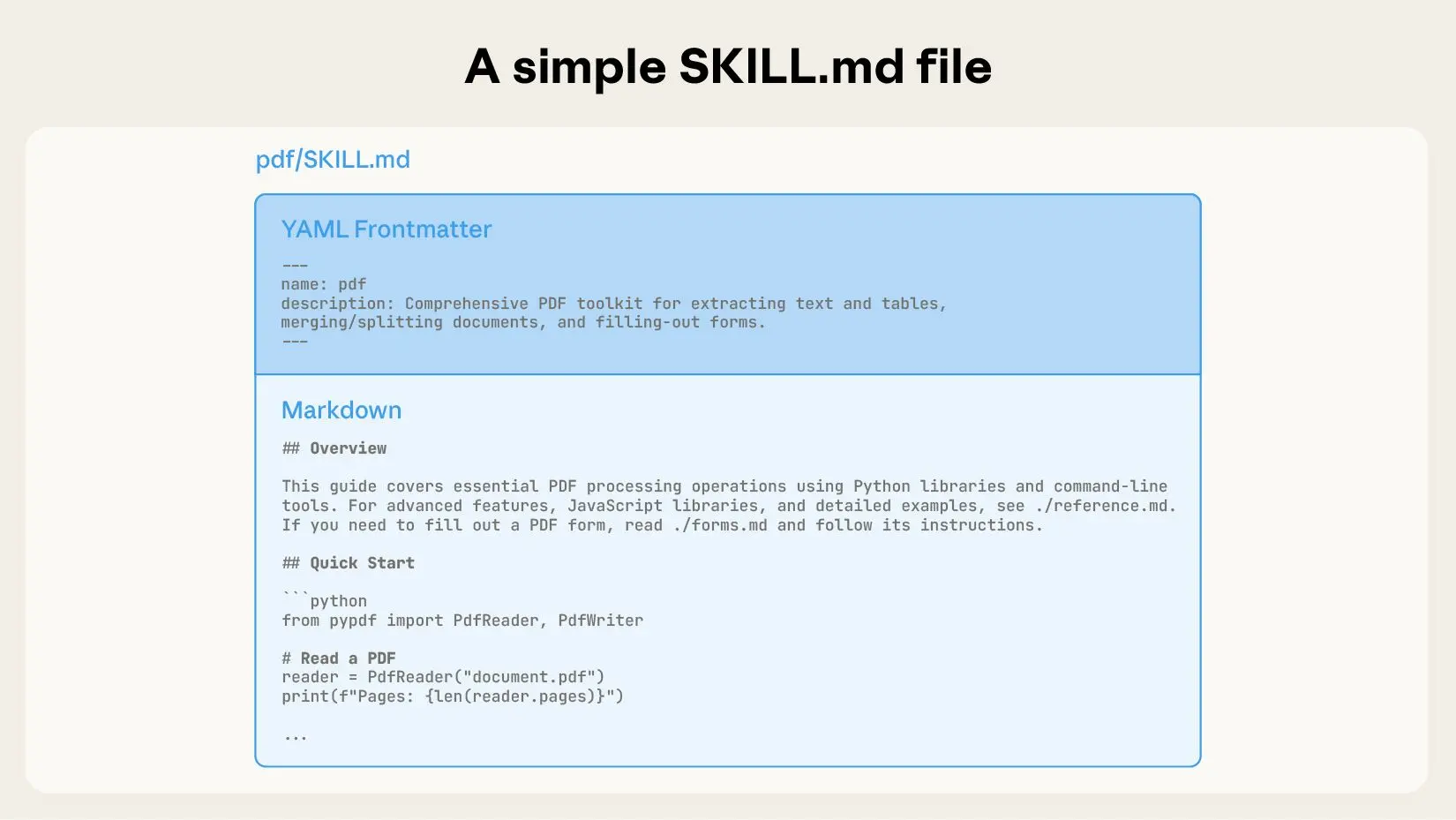

Detailed Three-Layer Information Architecture

The official documentation details the technical implementation of this layered design:

First Layer: Metadata Trigger Layer

---

name: your-skill-name

description: "Clear description of when and how to use this skill"

---

- Technical Details: This metadata is loaded into the system prompt when the agent starts

- Working Principle: Claude checks these metadata when processing each request to determine if a specific skill needs to be activated

- Advantage for Chinese Users: descriptions can be written in Chinese to more accurately express usage scenarios, helping Chinese users trigger the correct skills

Second Layer: Core Instructions Layer

# Main SKILL.md file content

## Workflow

## Key Decision Points

## Basic Usage Methods

## Common Patterns

- Loading Timing: Only when Claude confirms the skill is relevant will it actively read the complete SKILL.md

- Context Management: This step consumes tokens, so content needs to be concise (official recommendation to keep reasonable length)

- Design Focus: Should contain handling logic for 80% of common scenarios

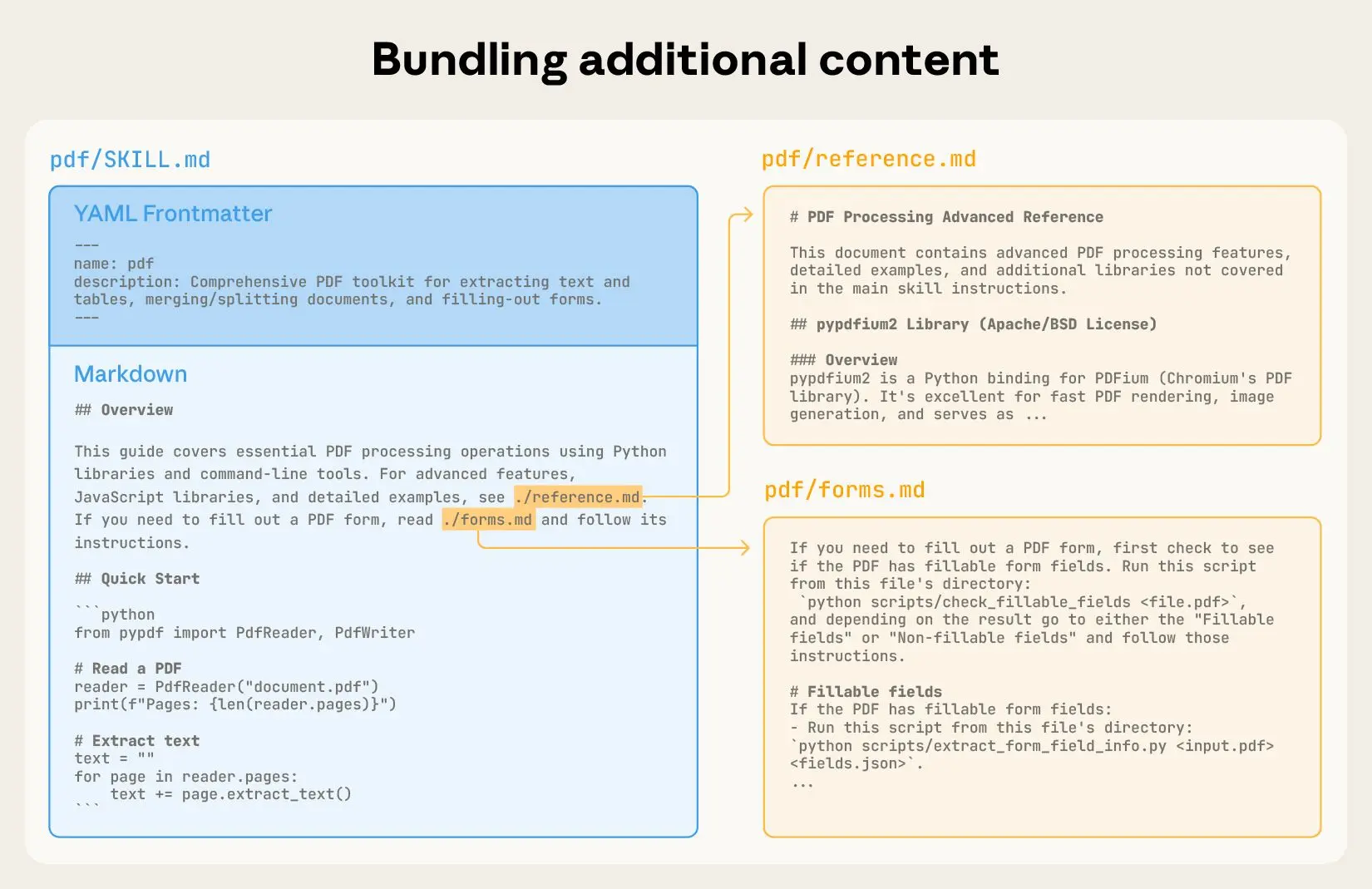

Third Layer: Reference Materials Layer

Taking Litho as an example, complete description see SKILL.md

- On-demand Loading: Claude only reads these files when actually needing specific details

- Unlimited Extension: Theoretically can have unlimited auxiliary files as they don't occupy basic context

- Modular Advantage: Can separate infrequently used content to reduce complexity of main documentation

Actual Effects of Context Window Optimization

The official documentation uses a specific example to illustrate this process:

-

Initial State (assuming context is 4000 tokens):

- System prompt: 1500 tokens

- User message: 200 tokens

- All loaded skill metadata: ~800 tokens (assuming 10 skills)

- Remaining available space: 1500 tokens

-

After Skill Trigger:

- Claude determines a specific skill is needed

- Calls bash tool to read that skill's SKILL.md (adds 300 tokens)

- Remaining available space: 1200 tokens

- If specific details are needed, calls bash tool again to read auxiliary files (adds 100 tokens)

- Remaining available space: 1100 tokens

This mechanism ensures context space is only consumed when truly needed.

Design Philosophy of File System as Knowledge Network

Another important design of Agent Skills is mapping the file system to a knowledge network structure. This is called "composable resources" in the official documentation.

File Organization Design

Reference the agent skill of litho

skill-directory/

├── SKILL.md # Knowledge root node

├── configuration.md # Configuration knowledge subgraph

├── examples/ # Example knowledge cluster

│ ├── basic-usage.md

│ ├── advanced-patterns.md

│ └── troubleshooting-examples.md

├── tools/ # Tools knowledge cluster

│ ├── setup.sh # Automation tools

│ ├── validate.py # Validation tools

│ └── deploy.yml # Deployment tools

└── integration/ # Integration knowledge cluster

├── ci-cd.md # CI/CD integration

└── api-clients.md # Client integration

Behind this structure are several key concepts:

1. Semantic Foldering

Each subfolder represents a specific knowledge domain. This is not just physical grouping but conceptual layering. Anthropic's design allows Claude to understand content classification through path semantics.

2. Filenames as Navigation Signals

Filenames themselves are important navigation clues:

-

basic-setup.mdimplies this is introductory content -

advanced-config.pyimplies this is advanced configuration tools -

emergency-recovery.shimplies this is emergency recovery procedures

3. Information Density Control by Directory Depth

Deeper levels usually contain:

- More specialized details

- Less commonly used features

- Stronger context dependencies

Discoverability and Self-documenting Principles

The official documentation emphasizes the importance of "self-documenting":

- Predictable Structure from Outside In > Reference the agent skill of litho Both users and Claude should be able to infer where to find specific types of information:

skill-name/

├── SKILL.md # First document to read

├── configuration.md # Configuration-related queries point here

├── troubleshooting.md # Troubleshooting points here

└── scripts/ # Execution points here

- Establishing Internal Reference Networks References in SKILL.md are not simple links but edges of knowledge graph construction:

## Litho Agent Skill Analysis

Next, let's take [Litho](https://github.com/sopaco/deepwiki-rs), an open-source project's Agent Skill implementation example

### First, Introduce Litho

Litho is a cross-platform, cross-technology stack project knowledge generation engine based on Rust. Its core function is to automatically generate structured knowledge understandable by agents from source code. In the Agent Skill scenario, its main value is:

1. **Reduce cognitive burden of Coding Agents**

2. **Provide pre-generated code knowledge graphs**

3. **Reduce context consumption during reasoning**

4. **Improve code understanding efficiency**

Specifically, when a Coding Agent needs to operate on complex codebases:

- **Traditional approach**: AI needs to directly analyze a large number of source code files, consuming many tokens and occupying reasoning resources

- **Litho-enhanced approach**: AI first loads architecture documents generated by Litho to quickly establish system understanding, then performs deep analysis on specific modules

### Skill Structure Design

#### Metadata Design

yaml

name: deepwiki-rs

description: "Code analysis tool that pre-generates structured documentation to help AI agents understand complex codebases efficiently, reducing context overhead and improving coding assistance quality."

The description highlights value in Agent usage scenarios: reducing context overhead and improving efficiency.

#### Core Workflow Design

The main SKILL.md maintains a concise structure (87 lines):

Use Cases

- Codebase Pre-analysis: Generate architecture overview before coding tasks

- Context Optimization: Reduce tokens needed for large project understanding

- Module Mapping: Understand relationships and dependencies quickly

Command Patterns

- Quick overview:

deepwiki-rs -p ./src --skip-preprocessing - Full analysis:

deepwiki-rs -p ./project --model-efficient gpt4-mini --model-powerful gpt4-o

#### On-demand Reference of Auxiliary Files

Detailed configuration and technical details are moved to independent files:

#### Actual Workflow Example

The official documentation provides a complete PDF processing skill example to demonstrate this mechanism. We'll use Litho's scenario to show a similar workflow:

**Scenario: AI agent needs to add new functionality to existing project**

1. **Initial Request Analysis** (User: "Add user authentication functionality to project")

Context: 4000 tokens

- System prompt: 1500 tokens

- All skill metadata: 800 tokens

- User request: 50 tokens

- Remaining: 1650 tokens

2. **Skill Activation** (Claude detects "project" and "add functionality" triggered Litho skill)

Claude evaluation: This task requires understanding project architecture, Litho skill relevant

Execute: bash -c "read_file ./skill-litho/SKILL.md"

3. **Loading Core Instructions** (Litho SKILL.md content loaded)

Context increase: 300 tokens

Remaining: 1350 tokens

Information Claude gets:

- Decision tree

- Basic command patterns

- Key considerations

4. **Deep Requirement Analysis** (Claude finds need to understand specific module configuration)

Claude decision: To add authentication functionality, need to understand project configuration structure

Execute: bash -c "read_file ./skill-litho/configuration.md#project-config"

5. **Get Professional Details** (Load configuration guide)

Context increase: 150 tokens

Remaining: 1200 tokens

Claude gets: Specific parameters and best practices for project configuration

6. **Execute Tool Call** (Need to generate project analysis)

Claude decision: Analyze existing architecture first, then design authentication integration

Execute: bash -c "deepwiki-rs -p ./src --skip-preprocessing -o ./temp-analysis"

7. **Design Based on Analysis Results** (Use generated documents for reasoning)

Claude now has:

- Complete Litho usage guidance (from SKILL.md)

- Detailed configuration options (from configuration.md)

- Real-time project architecture analysis (from Litho output)

Based on this information, Claude can design appropriate authentication integration solution

This workflow demonstrates how Agent Skills enable AI to gain deep professional capabilities while maintaining efficiency.

### Key Technical Implementation Details

#### Intelligent Call Mechanism of Bash Tool

The official documentation particularly emphasizes how Agent Skills collaborate with Bash tools. This is not just a simple file read but an intelligent decision-making process:

**Claude's Decision Logic**:

python

Claude's internal simplified decision process

def should_use_skill(user_request, available_skills):

# 1. Analyze request content, identify keywords and intent

intent = analyze_intent(user_request)

keywords = extract_keywords(user_request)

# 2. Match skill metadata

relevant_skills = []

for skill in available_skills:

if matches_keywords(skill.description, keywords):

relevance_score = calculate_relevance(intent, skill.description)

if relevance_score > threshold:

relevant_skills.append((skill, relevance_score))

# 3. Select most relevant skill

if relevant_skills:

return max(relevant_skills, key=lambda x: x[1])[0]

return None

**Intelligent Strategy for File Reading**:

bash

Command patterns Claude actually executes

1. Basic skill activation

read_file ./skill-name/SKILL.md

2. On-demand deep loading

read_file ./skill-name/configuration.md#specific-section

3. Tool execution (if needed)

bash -c "./skill-name/scripts/automation.sh --params"

4. Result validation

read_file ./skill-name/validation-report.md

#### Security and Stability Considerations

The official documentation discusses security issues in detail, which is particularly important for Chinese users:

**Malicious Skill Protection Mechanism**:

1. **Code Execution Restrictions**

- Agent Skills can run scripts but require explicit user authorization

- System limits script permission scope

- Network access requires additional security checks

2. **Content Audit Requirements**

Official recommended audit checklist:

✓ Check content of all executable files

✓ Verify network connection target addresses

✓ Confirm file system access permissions

✓ Test exception handling

**Special Considerations for Chinese Users**:

- File paths may contain Chinese characters, need to ensure scripts handle them correctly

- Error messages may need localization

- Chinese comments in configuration files cannot affect program parsing

#### Error Handling and Fallback Mechanism

The official documentation describes multi-layer error handling:

**First Layer: File Access Errors**

bash

If SKILL.md read fails

read_file ./skill-name/SKILL.md

Error response: File not found

Claude fallback: Use general knowledge + suggest user check skill installation

**Second Layer: Content Parsing Errors**

bash

If SKILL.md format error

Claude detection: Missing required YAML frontmatter

Fallback strategy: Ignore this skill, continue processing other tasks

**Third Layer: Execution Failure Handling**

bash

If script execution fails

bash -c "./skill-name/script.sh"

Error capture: Check exit codes and standard error output

Intelligent analysis: Provide solutions based on error information

### Integration Patterns with Toolchains

#### Coordination with Existing Development Tools

The official documentation emphasizes that Agent Skills need to coexist harmoniously with traditional toolchains:

**1. IDE Integration Mode**

json

// VS Code extension example

{

"anthropic.skills": {

"enabled": true,

"skillsPath": "./skills/",

"autoLoad": ["litho", "testing", "security"],

"workspaceContext": {

"projectType": "rust",

"buildSystem": "cargo"

}

}

}

**2. CI/CD Pipeline Integration**

yaml

Skill usage in GitHub Actions

- name: Code Analysis with Skills uses: anthropic/skills-action@v1 with: skills: "litho,-security-linter" project-path: "./src" output-format: "markdown" env: LITHO_API_KEY: ${{ secrets.LITHO_API_KEY }}

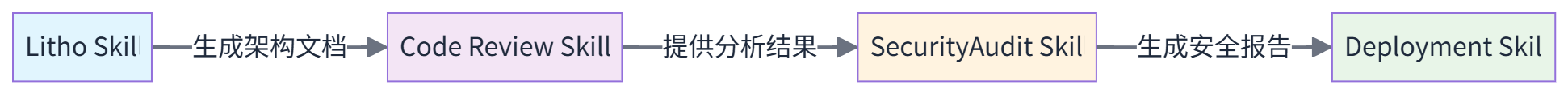

#### Inter-skill Collaboration Patterns

Different skills can form skill chains:

Each skill can:

- Use output as input for next skill

- Share temporary files and cache

- Coordinate execution order and dependencies

### Performance Optimization Considerations

In Agent Skill usage scenarios, Litho's configuration focuses on:

1. **Response Speed**: Use efficient models to quickly generate basic architecture

2. **Information Density**: Generated documents are highly structured for AI to quickly extract key information

3. **Incremental Updates**: Support diff mode, only process changed parts

4. **Modular Output**: Split documents by functional domain for on-demand loading

### Value of Executable Scripts

Litho Skill includes two key scripts:

**quick-start.sh**

bash

!/bin/bash

Quick setup of code analysis environment for AI agents

detect_project_structure() {

# Identify project type and structure

# Select appropriate analysis parameters

}

setup_optimized_config() {

# Optimize configuration according to agent usage scenarios

# Set appropriate model combinations

}

**ci-integration.sh**

bash

!/bin/bash

Automate documentation updates in CI/CD

generate_diff_docs() {

# Only regenerate documentation for changed parts

# Keep documentation synchronized with code

}

These scripts allow other AI Agents to automatically call Litho's capabilities instead of relying on manual operations.

## Design Principles for Building High-Quality Skills

### Officially Recommended Skill Design Patterns

According to the official Anthropic documentation, good Agent Skills should follow several core design principles:

#### 1. Clear Boundaries Principle

The official documentation emphasizes that each skill should have clear application boundaries, avoiding "do-it-all" design:

yaml

❌ Officially not recommended example

name: development-helper

description: "All-purpose development assistance for everything"

✅ Officially recommended example

name: rust-async-patterns

description: "Rust async programming patterns for high-performance backend services. Use when Claude needs to implement async/await, tokio integration, or performance optimization in Rust."

**Key Points**:

- Description should clearly state "when to use"

- Avoid overlapping functional scopes

- Maintain single responsibility for skills

#### 2. Discoverability Design

The official documentation details how to enable Claude to accurately identify and use skills:

**Metadata Optimization Techniques**:

yaml

Before optimization

description: "Documentation generation tool"

After optimization (official recommended pattern)

description: "Code analysis tool that generates architectural documentation. Use when Claude needs to: 1) understand codebase structure, 2) identify module dependencies, 3) prepare for refactoring, 4) create technical specifications."

**Keyword Strategy**:

- Include core keywords in description

- Use numbers or bullet points to clearly state application scenarios

- Provide specific trigger conditions

#### 3. Progressive Complexity

Officially recommended content organization:

markdown

Content layering of SKILL.md

First Layer: Core Concepts (all users need)

- Basic usage methods

- Key decision points

- Common patterns

Second Layer: Deep Practice (experienced users)

- Advanced configuration options

- Performance optimization techniques

- Troubleshooting methods

Third Layer: Expert Content (on-demand reference)

- Internal implementation principles

- Extension development guide

- Contributor documentation

### Official Recommendations for Quality Assurance

#### Test-Driven Skill Development

The official documentation proposes a complete testing framework:

bash

skills-testing/

├── unit-tests/ # Functional unit tests

│ ├── test-basic-workflow.py

│ ├── test-error-handling.py

│ └── test-integration.py

├── integration-tests/ # Integration tests

│ ├── test-context-usage.py

│ └── test-tool-chaining.py

├── performance-tests/ # Performance tests

│ ├── test-response-time.py

│ └── test-context-efficiency.py

└── user-scenario-tests/ # User scenario tests

├── test-new-user.json

└── test-expert-user.json

**Key Testing Metrics**:

- **Response Time**: Time from skill activation to providing guidance < 2 seconds

- **Context Efficiency**: Token density of skill-related content > regular documentation

- **Task Success Rate**: > 90% under standard test scenarios

#### Continuous Monitoring and Iteration Mechanism

Officially recommended monitoring system:

python

Skill performance monitoring

class SkillMonitor:

def track_usage_patterns(self):

return {

'activation_frequency': self.count_activations(),

'context_consumption': self.measure_token_usage(),

'task_success_rate': self.calculate_success_rate(),

'user_satisfaction': self.collect_feedback()

}

def identify_improvement_areas(self):

if self.context_consumption > threshold:

return "Consider optimizing content density"

if self.task_success_rate < 0.8:

return "Review instruction clarity"

### Composable AI Workflows

The official documentation shows the potential of skill combination:

Typical Rust development workflow:

┌─────────────┐ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐

│ Code Req │───▶│ Litho Skill │───▶│ Rust Skill │───▶│ Test Skill │

│ Analysis │ │(Arch Analysis)│ │(Code Implementation)│ │(Test Generation)│

└─────────────┘ └─────────────┘ └─────────────┘ └─────────────┘

│ │ │ │

▼ ▼ ▼ ▼

Requirement Quick Architecture Generate Rust Automated Test

Understanding and Understanding Best Practice Case Generation

Decomposition System View Code

This combination allows each skill to maximize value, forming a complete development loop.

## Building Recommendations

### Design Principles Based on Official Best Practices

According to the official Anthropic documentation, building high-quality skills should follow:

1. **Layered Information Organization**: Follow Progressive Disclosure principle, optimize Context usage efficiency

2. **Clear Boundaries**: Each skill focuses on solving specific types of problems, avoiding functional overlap

3. **AI Agent Priority**: Content design prioritizes AI agent usage scenarios, not direct human reading

4. **Structured Output**: Generated information should be easily parsed and used by other AI agents

5. **Provide Execution Interface**: Include executable scripts for automated calling and toolchain integration

### Recommended File Organization Structure

your-skill/

├── SKILL.md # Core workflow (recommended 50 ~ 150 tokens)

├── configuration.md # Detailed configuration parameters and environment settings

├── troubleshooting.md # Common problems and solutions

├── examples/ # Layered usage examples

│ ├── basic-usage.md

│ ├── advanced-patterns.md

│ └── integration-examples.md

├── scripts/ # Automation tools

│ ├── setup.sh # Environment configuration

│ ├── validate.py # Function validation

│ └── deploy.yml # Deployment automation

└── integration/ # Integration with other systems

├── ci-cd.md # Continuous integration configuration

└── api-clients.md # API client examples

In the future, with deep integration of AI in development workflows, Agent Skills may become the key bridge connecting human professional knowledge and AI execution capabilities. Mastering this technology will help teams maintain technological leadership in the AI era.

---

References:

- [Anthropic Agent Skills Official Documentation](https://www.anthropic.com/engineering/equipping-agents-for-the-real-world-with-agent-skills)

- [Litho Project Repository](https://github.com/sopaco/deepwiki-rs)

Top comments (0)