Have you ever wondered how we can harness the reasoning capabilities of Large Language Models (LLMs) while ensuring reliable, deterministic execution in real-world applications? Today, I'd like to share a practical pattern that bridges natural language understanding and precise system control.

The Problem: LLMs and Deterministic Systems Don't Naturally Mix

LLMs excel at understanding and generating human language, but they struggle with consistent, reliable execution of precise commands. Meanwhile, our software systems demand exactness and determinism.

This creates a fundamental tension:

- We want the flexibility and intuition of natural language

- We need the reliability and precision of deterministic execution

How do we reconcile these seemingly opposing needs?

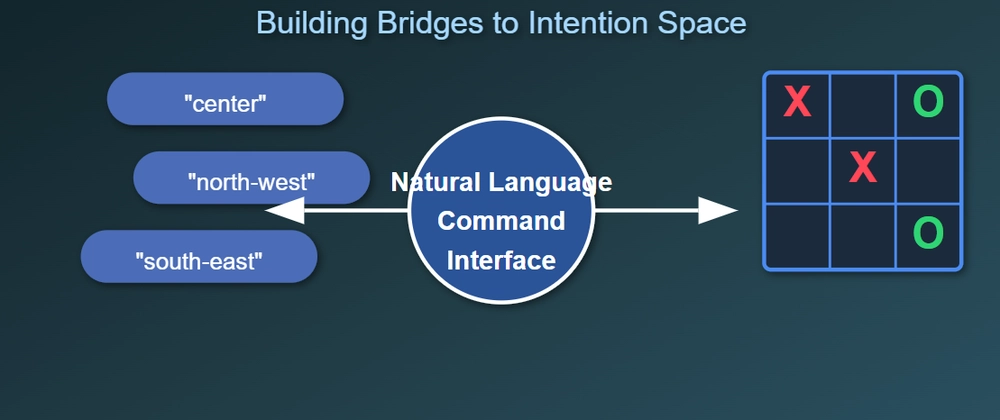

A Solution: Natural Language Command Interfaces

I've been experimenting with a pattern I call Natural Language Command Interfaces (NLCI) that creates a structured intermediary layer between LLMs and deterministic systems.

Let me demonstrate with a simple but illustrative example: a tic-tac-toe game where moves are expressed through natural language commands.

A Practical Example: Word-Based Tic-Tac-Toe

Instead of using numerical positions (1-9) for placing marks on a tic-tac-toe board, let's use directional words:

    north-west | north  | north-east
    -----------+--------+-----------
    west       | center | east
    -----------+--------+-----------
    south-west | south  | south-east

This seemingly simple change shifts how we can interact with the system, particularly when an LLM is involved: every cell now has a name that both people and models can use when reasoning about the board.

The Code Pattern

Here's a simplified representation of this pattern:

    # Command mapping layer
    def get_position(command):
        """Convert word command to board position index"""
        command_map = {
            "northwest": 0, "north": 1, "northeast": 2,
            "west": 3, "center": 4, "east": 5,
            "southwest": 6, "south": 7, "southeast": 8,
            # Allow for variations
            "north-west": 0, "north-east": 2,
            "south-west": 6, "south-east": 8
        }
        # Lowercase and drop spaces so "North West" matches "northwest";
        # unknown commands return -1
        return command_map.get(command.lower().replace(" ", ""), -1)

    # Reverse mapping for state explanation
    def get_position_name(index):
        """Convert board position index to command name"""
        position_names = {
            0: "north-west", 1: "north", 2: "north-east",
            3: "west", 4: "center", 5: "east",
            6: "south-west", 7: "south", 8: "south-east"
        }
        return position_names.get(index, "unknown")

These simple mapping functions are the core of our NLCI pattern. They transform intuitive language commands into precise system operations, and system states back into human-readable descriptions.
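As a minimal sketch of how the mapping can guard a move before it touches the board, consider the snippet below. The `apply_move` helper and the list-of-nine board are assumptions for illustration, not code from the linked repo, and the mapping is rebuilt in compact form so the snippet runs on its own.

```python
# Hypothetical helper: validate a word command, then apply it to the board.
POSITIONS = [
    "north-west", "north", "north-east",
    "west", "center", "east",
    "south-west", "south", "south-east",
]

def get_position(command):
    """Convert a word command to a board index, or -1 if unrecognised."""
    # Treat "north west", "north-west" and "northwest" as the same command.
    key = command.lower().replace(" ", "").replace("-", "")
    lookup = {name.replace("-", ""): i for i, name in enumerate(POSITIONS)}
    return lookup.get(key, -1)

def apply_move(board, command, mark):
    """Place mark on board if command is valid and the cell is empty.

    Returns (ok, message) so the caller can feed the message back to the LLM.
    """
    index = get_position(command)
    if index == -1:
        return False, f"Unknown position: {command!r}"
    if board[index] != " ":
        return False, f"{POSITIONS[index]} is already taken"
    board[index] = mark
    return True, f"{mark} placed at {POSITIONS[index]}"

board = [" "] * 9
print(apply_move(board, "North West", "X"))   # valid move
print(apply_move(board, "northwest", "O"))    # rejected: cell occupied
print(apply_move(board, "upper left", "O"))   # rejected: not a known command
```

The point of returning a message rather than raising is that a rejected command can be handed straight back to the model as context for its next attempt.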

Why This Matters for LLM Integration

When we hook this up to an LLM (like GPT-4), something fascinating happens. The LLM doesn't just send commands - it can reason about the game state in natural language terms.

For example, we can provide the LLM with game context like this:

    current_state = f"""
    Current board state:
     X |   | O
    ---+---+---
       | X |
    ---+---+---
       |   |
    Available moves: north, west, east, south-west, south, south-east
    As the O player, your goal is to get three O's in a row.
    Choose one of the available positions.
    """

The LLM can now reason: "I see X is attempting a diagonal win from north-west through center toward south-east. I should block at south-east."

This natural language representation allows the LLM to apply its reasoning capabilities while ensuring its output maps precisely to valid system operations.
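Rather than writing this context by hand, the prompt can be generated from the board so that the list of available moves never drifts from the actual state. The following is a minimal sketch; `render_board`, `describe_state` and the list-of-nine board representation are assumptions for illustration.

```python
POSITION_NAMES = [
    "north-west", "north", "north-east",
    "west", "center", "east",
    "south-west", "south", "south-east",
]

def render_board(board):
    """Draw the 3x3 board as text, one row per line."""
    rows = [" " + " | ".join(board[r:r + 3]) for r in (0, 3, 6)]
    return "\n---+---+---\n".join(rows)

def describe_state(board, player):
    """Build the prompt text: board drawing, open positions, and the goal."""
    available = [name for name, cell in zip(POSITION_NAMES, board) if cell == " "]
    return (
        "Current board state:\n"
        f"{render_board(board)}\n"
        f"Available moves: {', '.join(available)}\n"
        f"As the {player} player, your goal is to get three {player}'s in a row.\n"
        "Choose one of the available positions."
    )

board = ["X", " ", "O",
         " ", "X", " ",
         " ", " ", " "]
print(describe_state(board, "O"))
```

Deriving the available moves from the same list that holds the marks keeps the natural language view and the system state in step.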

The Three Key Components

This pattern consists of three essential elements:

- Natural Language Commands: Intuitive, human-readable terms for system operations

- Bidirectional Mapping: Precise translation between commands and system actions

- State Representation in Natural Language: Expressing system state in terms the LLM can reason about

Beyond Games: Real-World Applications

The full tic-tac-toe code is available to try in the GitHub repo: https://github.com/spicecoder/Tic_Tac_LLM/blob/main/tictacLLM.py

While tic-tac-toe demonstrates the concept clearly, this pattern extends to countless practical applications:

- Database Operations: Using natural language to describe CRUD operations

- IoT Control: Expressing device operations in intuitive terms

- UI Automation: Directing interface interactions through natural commands

- Programming Assistance: Enabling LLMs to suggest and implement code changes
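To make the IoT case concrete, here is a minimal sketch of the same three components applied to a made-up lamp. The `Lamp` class, its methods, and the command phrases are all hypothetical; a real device would sit behind its own driver or API.

```python
# Hypothetical IoT example: phrases map to handlers, state maps back to words.
class Lamp:
    def __init__(self):
        self.on = False
        self.brightness = 50  # percent

    def turn_on(self):
        self.on = True

    def turn_off(self):
        self.on = False

    def dim(self):
        self.brightness = max(0, self.brightness - 25)

    def brighten(self):
        self.brightness = min(100, self.brightness + 25)

# Command mapping layer: only these phrases can trigger an action.
COMMANDS = {
    "turn on": Lamp.turn_on,
    "turn off": Lamp.turn_off,
    "dim": Lamp.dim,
    "brighten": Lamp.brighten,
}

def execute(lamp, phrase):
    """Run the handler for phrase; return False if the phrase is unknown."""
    handler = COMMANDS.get(phrase.strip().lower())
    if handler is None:
        return False
    handler(lamp)
    return True

def describe(lamp):
    """State representation in natural language, for the LLM's context."""
    status = "on" if lamp.on else "off"
    return (f"The lamp is {status} at {lamp.brightness}% brightness. "
            f"Available commands: {', '.join(COMMANDS)}")

lamp = Lamp()
execute(lamp, "Turn On")
execute(lamp, "dim")
print(describe(lamp))
```

Because the model can only select from `COMMANDS`, anything it says outside that vocabulary is rejected instead of being interpreted.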

The Benefits

This approach offers several advantages:

- Verifiability: LLMs can explain their reasoning in human terms

- Precision: Commands map to exact system operations

- Learnability: Intuitive for humans, accessible for LLMs

- Flexibility: Accepts variations in command phrasing
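Flexibility and precision can pull against each other when the model replies with a full sentence instead of a bare command. One way to handle this, sketched below under my own assumptions, is to scan the reply for known position names and accept it only when exactly one available position is mentioned. The `extract_command` function and its "ask again when ambiguous" policy are illustrative choices, not part of the linked repo.

```python
import re

POSITION_NAMES = [
    "north-west", "north", "north-east",
    "west", "center", "east",
    "south-west", "south", "south-east",
]

# Longest names first so "south-east" is not read as "south" followed by "east".
# The separator is optional: "south-east", "south east", "south_east", "southeast".
_ALTERNATIVES = [
    name.replace("-", r"[-_\s]?")
    for name in sorted(POSITION_NAMES, key=len, reverse=True)
]
_PATTERN = re.compile(r"\b(?:" + "|".join(_ALTERNATIVES) + r")\b", re.IGNORECASE)
_CANONICAL = {name.replace("-", ""): name for name in POSITION_NAMES}

def extract_command(reply, available):
    """Return the one available position named in reply, or None.

    None covers both "nothing recognised" and "several candidates", so the
    caller can ask again instead of guessing.
    """
    mentioned = set()
    for match in _PATTERN.finditer(reply):
        key = re.sub(r"[-_\s]", "", match.group(0).lower())
        mentioned.add(_CANONICAL[key])
    candidates = mentioned & set(available)
    return candidates.pop() if len(candidates) == 1 else None

available = ["north", "west", "east", "south-west", "south", "south-east"]
reply = ("I see X is attempting a diagonal win from north-west through center "
         "toward south-east. I should block at south-east.")
print(extract_command(reply, available))
```

Matching against all nine names and filtering afterwards matters: if only the open positions were in the pattern, an occupied "north-west" in the reasoning would be misread as the open cells "north" and "west".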

Introducing Intention Space: The Next Evolution

This natural language command interface pattern is actually the foundational principle behind a more comprehensive framework we're building called Intention Space.

Intention Space extends these concepts into a complete system where software components communicate through natural language phrases representing Intentions, Objects, and Design Nodes. By expressing software architecture in human-readable terms, Intention Space creates an unprecedented bridge between human understanding and machine execution.

With Intention Space Intelligence, your LLM becomes a true development assistant, helping you with:

- Requirements gathering: Capturing user needs in natural language that maps directly to system components

- Prototyping: Quickly assembling working systems through intention-driven design

- Testing: Verifying behavior through natural language paths called CPUXs (Common Paths for Understanding and Execution)

- Algebraic verification: Creating mathematically verifiable software systems through structured natural language interfaces

Getting Started

Want to implement this pattern in your own projects? Here's a simple framework:

- Identify the core operations in your system

- Create natural language equivalents for these operations

- Implement bidirectional mapping between commands and operations

- Represent system state in natural language for context

- Connect your LLM through a simple API integration
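The steps above can be sketched as a small loop that asks for a move, checks it against the open positions, and asks again on failure. This is a sketch under stated assumptions: `choose_move` is a hypothetical helper, and `ask_llm` stands for any callable that takes a prompt string and returns the model's reply, so no particular vendor API is implied. A canned stand-in replaces the real model here.

```python
POSITION_NAMES = [
    "north-west", "north", "north-east",
    "west", "center", "east",
    "south-west", "south", "south-east",
]

def choose_move(ask_llm, board, player, max_attempts=3):
    """Ask the model for a move until it names an open position.

    ask_llm is any callable taking a prompt string and returning reply text;
    in a real integration it would wrap an API call.
    """
    available = [n for n, cell in zip(POSITION_NAMES, board) if cell == " "]
    prompt = (f"You are the {player} player. Available moves: "
              f"{', '.join(available)}. Reply with one position only.")
    for _ in range(max_attempts):
        reply = ask_llm(prompt).strip().lower().replace(" ", "-")
        if reply in available:
            return POSITION_NAMES.index(reply)
        # Feed the rejection back so the next attempt can correct itself.
        prompt += f"\n'{reply}' is not available. Choose from the list."
    raise ValueError("No valid move after retries")

# Stand-in for a real model: the first answer is invalid, the second is valid.
replies = iter(["north-west", "South East"])

def fake_llm(prompt):
    return next(replies)

board = ["X", " ", "O",
         " ", "X", " ",
         " ", " ", " "]
index = choose_move(fake_llm, board, "O")
board[index] = "O"
print(index, POSITION_NAMES[index])
```

The deterministic side never trusts the reply: only a position that is both recognised and open reaches the board, and the retry limit keeps a confused model from looping forever.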

Conclusion

Natural Language Command Interfaces represent a powerful pattern for bridging the gap between LLM capabilities and deterministic system requirements. By creating this intermediary layer, we can harness the reasoning power of language models while maintaining precise control over system behavior.

This is just the beginning of how we can transform software development through natural language interfaces. Intention Space takes these concepts further, creating a comprehensive framework for intent-driven, human-centered software development.

Have you experimented with similar approaches? I'd love to hear your thoughts and experiences in the comments!

Coming soon: A deeper exploration of Intention Space from Keybyte Systems, Australia and how it transforms software development through natural language interfaces. Stay tuned for more practical examples and implementation details!
