If you're enjoying this article and have a Medium subscription, I'd greatly appreciate if you'd open and read it there — your support helps me continue creating quality content like this!

Update (June 2025): Added reference to the Angular team's two official LLMs.txt files that help LLMs create better modern Angular code.

Have you ever felt that moment of frustration when a Large Language Model completely misunderstands your coding question? We've all been there. The truth is, LLMs are a lot like our developer colleagues - the quality of their help depends heavily on the context we provide.

Think of it this way: just as you wouldn't ask a teammate for help with a complex bug without sharing the relevant code, LLMs need proper context to give you their best advice. That's where AI-friendly documentation comes in, and I'd like to share what I've learned while creating some for Angular.

The Quest for Better Context

That's what inspired me to start collecting AI-friendly documentation. Here are some notable examples:

- Model Context Protocol: https://modelcontextprotocol.io/llms-full.txt offers complete documentation in a single file

- Daisy UI v5: https://daisyui.com/llms.txt provides both comprehensive docs and component-specific versions

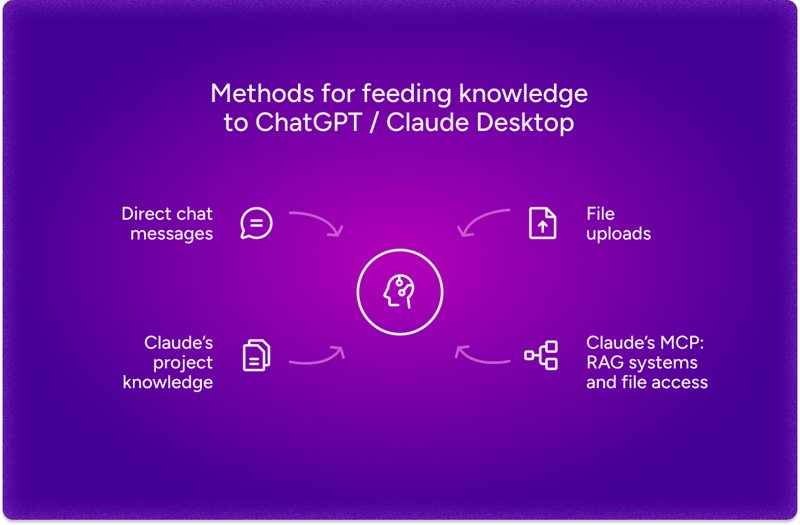

There are several approaches to feeding this knowledge to your AI assistant:

- Sending documentation directly as a chat message to the LLM

- Uploading relevant files to your conversation

- Using a Retrieval-Augmented Generation (RAG) system to intelligently fetch information

As part of this exploration, I've created AI-friendly documentation for Angular, which you can find here: https://github.com/gergelyszerovay/ai-friendly-docs/

Update (June 2025):

The Angular team has developed two official LLMs.txt files to help LLMs and tools that use LLMs for code generation create better modern Angular code:

- llms.txt - an index file providing links to key files and resources.

- llms-full.txt - a more robust compiled set of resources describing how Angular works and how to build Angular applications.

Beyond Basic File Uploads

While most chat interfaces let you upload files, Claude Desktop takes this further with its Project Knowledge feature. It allows you to provide documents, code, and other materials that Claude can reference during conversations. With a massive context window of 200,000 tokens (roughly 500 pages of text), Claude can maintain a deeper understanding of complex projects, making its responses significantly more accurate.

A context window, by the way, is essentially how much information an LLM can "keep in mind" during a single conversation - think of it as the AI's working memory. It's measured in tokens, which are the basic units that LLMs process (roughly corresponding to word fragments). For example, the sentence "I love programming in TypeScript" might be broken down into tokens like ["I", "love", "program", "ming", "in", "Type", "Script"]. The larger the context window, the more information the LLM can consider when generating responses.

The Magic Behind RAG Systems

RAG (Retrieval-Augmented Generation) is what powers these context-aware AI interactions. Rather than simply dumping all information into the model, RAG systems intelligently retrieve the most relevant pieces for your specific question.

It's like having a helpful librarian instead of being handed a pile of books - the librarian knows exactly where to find the information you need. When your documentation has clear headers and organized sections, the retrieval system can pinpoint relevant information much more precisely.

Under the hood, RAG systems work by converting your documentation into vector embeddings—numerical representations that capture semantic meaning. When you ask a question, the system converts it to the same format and finds the most similar sections in your documentation. Pretty neat, right?

I'll be diving deeper into RAG systems in an upcoming article, so stay tuned if you're curious about setting up your own.

Building Better Angular Documentation

Here are the principles I've found most helpful when creating AI-friendly documentation:

Create a comprehensive single file containing the entire framework or library documentation for when you need broad knowledge

Develop separate, focused files for specific features that can be included individually when you need targeted information

Maintain clear header structures to help AI systems understand relationships between different documentation sections

I've applied these ideas to my Angular documentation project. Starting with the official Angular docs, I've created both a comprehensive angular-full.md file and individual feature-specific files in the sections directory. This approach gives you flexibility in how you provide context to your AI assistants.

The Importance of Clear Header Structures

That third principle (maintaining clear header structures) deserves special attention because it dramatically affects how well AI systems can navigate your documentation.

Let me show you what I mean with an example from the Angular documentation. The original file guide/components/inputs.md begins with:

# Accepting data with input properties

Tip: This guide assumes you've already read the [Essentials Guide](essentials).

Read that first if you're new to Angular.

Tip: If you're familiar with other web frameworks, input properties

are similar to _props_.

When you use a component, you commonly want to pass some data to it.

A component specifies the data that it accepts by declaring **inputs**:

// ...

From the file's name and content alone, it's not immediately obvious that this document explains component inputs within Angular's component architecture.

In my AI-friendly version in guide-components.md, I've added an additional header that provides this crucial context:

# Guide Components

// ...

## Accepting data with input properties

Tip: This guide assumes you've already read the [Essentials Guide](essentials).

Read that first if you're new to Angular.

Tip: If you're familiar with other web frameworks, input properties

are similar to _props_.

When you use a component, you commonly want to pass some data to it.

A component specifies the data that it accepts by declaring **inputs**:

// ...

This hierarchical structure helps AI systems understand that component inputs are a subtopic within Angular components. This context is especially valuable for retrieval systems that might pull just a section of documentation, as it helps the AI properly frame the information within Angular's component architecture.

Being Strategic About Context Size

One valuable lesson I've learned is to be thoughtful about what you include in your AI's context window.

Sending the entire angular-full.md file isn't always the best move. For instance, if you're working on Angular components, you'll get much better results by including just sections/guide-components.md and perhaps a few related files like guide-templates.md or guide-di.md.

This targeted approach helps the AI focus on what matters most without getting distracted by unrelated documentation. Sometimes less really is more when it comes to context.

Seeing It in Action: A Real Example

Let's look at a straightforward question: "Are standalone components default in Angular?"

This is an interesting case because before Angular 19.0.0, the standalone option defaulted to false, but in version 19 it changed to true. I tested this question with different LLMs, providing varying levels of documentation context.

ChatGPT (GPT-4o)

Test 1: No documentation provided

🧑: Are standalone components default in Angular?

🤖: No, standalone components are not the default in Angular. By default,

Angular components are part of NgModules, meaning they must be declared

inside an @NgModule.

However, Angular introduced standalone components in Angular 14 to allow

components to work without being declared inside an @NgModule. Instead,

they use the standalone: true flag in their decorator.

This response is outdated and lacks awareness of Angular 19's changes: a perfect example of why context matters.

Test 2: Full Angular documentation added

🧑: Are standalone components default in Angular?

🤖: No, standalone components are not the default in Angular, but they

are encouraged as a simplified way to build applications. In Angular

versions before 19.0.0, the standalone option defaults to false, meaning

components are part of an NgModule by default. However, newer Angular

versions allow developers to migrate existing applications to standalone

mode incrementally without breaking changes.

Much better! The model correctly identifies the version cutoff, though

it's still a bit hesitant to clearly state they're now the default in v19+.

Test 3: Only guide-components.md added

🧑: Are standalone components default in Angular?

🤖: Yes, in Angular, components are standalone by default. This means

you can directly add them to the imports array of other components without

needing an NgModule. However, components created with older versions of

Angular may have standalone: false in their @Component decorator,

requiring them to be part of an NgModule.

Important Note: In Angular versions before 19.0.0, the standalone option

defaulted to false.

Perfect! With the targeted documentation, we get the most accurate and confident answer.

These examples highlight how crucial proper documentation context is for accurate AI responses. When asking different LLMs this question without providing documentation context, you'll often get responses that sound plausible but might not reflect the latest updates. The LLM is working from its training data, which might be outdated or incomplete.

However, when you provide the relevant section of Angular documentation, the response changes dramatically. It's like the difference between asking a developer who last used Angular a year ago versus one who's working with the latest version daily.

Adding Code Context to the Mix

Documentation is just one piece of the puzzle. For truly effective AI assistance with development tasks, you often need to provide code alongside documentation.

If you're looking to optimize source code for AI consumption, check out Repomix (https://repomix.com/). It's a great tool that helps consolidate entire codebases into a single, AI-friendly file.

Repomix handles token count management (crucial for staying within context limits), excludes sensitive information, and produces output in various formats. It's the perfect companion to AI-friendly documentation when you need to provide both docs and code context.

AI-Friendly Documentation vs. Web Retrieval

Why create AI-friendly documentation when many LLMs can now access the web?

While web access is undoubtedly valuable, it presents several limitations compared to carefully curated documentation:

Version Specificity: Web searches may return information about different versions of a framework or library than the one you're using. AI-friendly documentation can be created specifically for your exact version, eliminating confusion and inconsistencies.

Accuracy Control: Web content varies widely in quality and accuracy. Curated documentation ensures the LLM works with verified, reliable information rather than potentially outdated or incorrect sources.

Contextual Coherence: When an LLM retrieves information from multiple web sources, it must reconcile potentially conflicting information. Curated documentation provides a unified, coherent context that leads to more consistent responses.

Reduced Hallucinations: With clear, comprehensive documentation, LLMs are less likely to "fill in the gaps" with incorrect assumptions, reducing hallucinations and increasing accuracy.

For example, in our earlier test case about Angular standalone components, a web search might return outdated information from various sources. Our curated documentation, however, clearly indicated the version-specific change in Angular 19, resulting in a more accurate response.

Looking Forward

We've explored how AI-friendly documentation can significantly improve the quality of responses from LLMs. The key takeaway from my work with Angular documentation and tools like Repomix is that structuring information specifically for AI consumption yields dramatically better results.

Based on my experience, there are two key approaches for enhancing AI capabilities:

Context Enhancement: This is what we've discussed throughout this article - providing additional context through well-structured documentation and code. This approach works with existing models and gives them better information to work with.

Tool Integration: The next evolution in AI assistance comes through standardized integration methods, particularly the Model Context Protocol (MCP) developed by Anthropic. MCP is a specification that defines how AI models can communicate with external tools in a standardized way. Unlike earlier approaches where each AI service had its own custom integration methods, MCP provides a common language for tool definitions, invocations, and responses.

These approaches complement each other: better documentation helps LLMs understand what tools to use, while integrated tools expand their capabilities. I am currently developing RAG tools that leverage MCP and will share these developments in my upcoming articles:

- Building Your Own RAG System: Enhancing Claude with Your Documentation

- How to Get Better Technical Answers from Claude Using Your Own Documentation

About the Author

My name is Gergely Szerovay. I've worked as a data scientist and full-stack developer for many years, and I've been a frontend tech lead focusing on Angular development for the past three years. To closely follow the evolution of AI-assisted software development, I've decided to start building AI tools in public and publish my progress on AIBoosted.dev.

Follow me on Substack (Angular Addicts), Substack (AIBoosted.dev), Medium, Dev.to, X or LinkedIn to learn more about Angular and how to build AI apps with AI, TypeScript, React, and Angular!

Top comments (4)

Thanks for preparing those docs, so far I've been sending it some of the relevant underlying code (say the signals graph file and a few others) from the Angular repo + asking the model a few questions to make sure it "gets it"...I'll definitely give this a shot :)

By the way, I've also found that models tend to "lose the plot" relatively quickly after a medium sized conversation, after which it tends to be easier to just start a new thread. Have you found any good ways to keep them focused, such as resending the initial parameters? I realize its a losing game due to context windows...but sometimes "just one more message" would be helpful :)

I've experienced the same issue. For the "lost in the middle" phenomenon (where LLMs tend to forget or pay less attention to information in the middle of the context window), I break tasks into smaller chunks in new chats.

For coding tasks, my workflow is:

After the commit, I can reuse my chat from step 2, so I don't have to repeatedly ask the LLM to read the standards.

This approach works well when making code changes that result in a commit. I find handling each smaller task in a new chat with a separate context window is more effective than trying to keep everything in one conversation. This also helps to keep the token usage low.

I typically use Claude Desktop with the filesystem MCP server for most projects.

Good advice, I'll give it a shot :) I tend to mostly use LLM's for brainstorming new ideas/features, & as a sanity check on the api of some generic component/primitive I'm working on.

Funny part is how much all of them want to "write code for you", even when suffixing every message with something like "I don't want you to write code for me, we're just brainstorming", they still output code on every 3rd message on average...still very useful tools :)

This is genius! Working with LLMs is really frustrating when you want to use modern Angular techniques, because the framework has changed a lot in the last two years. The assistant not understanding the new template syntax and signals has always been my biggest annoyance. I can't wait to try this AI-friendly documentation! Thanks for sharing! :-)