Intro

A general rule of machine learning is that the more accurate your labeled data is, the higher quality your trained models will be. Currently, it is practically impossible to avoid manual labeling. Everyone from small companies to giants like Tesla, OpenAI, and others engage in this task.

In this article, I will explain how to create an in-house labeling team, how to do it cost-effectively, and what to focus on based on my own experience. Having gone through this process myself, I can share insights on the challenges you may encounter, which tools to use in 2023, the optimal code to employ, and so on.

Tools to use

I'll start by saying that I used CVAT - an open-source tool for dataset labeling. There are several ways to use it:

- Website version - you can label with CVAT directly on a website (Link), however, there is a restriction on the volume of data you can upload, and it's also inadvisable to do so with sensitive client information.

- You can download the CVAT docker from a github (Link) and install it yourself, keeping all data local. And here are two options - locally on your personal computer (or company server) or in your own cloud (there are instructions on how to do this with AWS).

Here, I will explain how to do it locally, but all of this is applicable to a server in your AWS account. The installation of CVAT itself is quite simple - there is a detailed guide (link). After installation, CVAT will be available in the browser at localhost:8080, but you can change IP address and port.

Uploading data

Different dataset formats and loading methods are supported - both through the browser and other ways. I used the integration path between CVAT and fiftyone - as this is the exact tool I use for working with datasets, their storage, and so on. The combination of CVAT and fiftyone (link) provides the convenience of downloading and uploading datasets, and further working with them.

CVAT has a built-in auto-labeling function. For many, this will be a big plus - but in my case, I preferred to have more control and did the auto-labeling myself before uploading the dataset into CVAT (link).

After I obtained the dataset in fiftyone, I used the following code to upload it into CVAT:

def CVAT_upload():

dataset = Import_base_dataset()

dataset_len = len(dataset)

dataset_parts = int(dataset_len / SUB_LEN)

if dataset_len % SUB_LEN != 0:

#more parts

dataset_parts = dataset_parts + 1

print("Dataset len:", dataset_len, "parts:", dataset_parts)

for i in range(dataset_parts):

if (i*SUB_LEN)+SUB_LEN > dataset_len:

sub_max = dataset_len

else:

sub_max = (i * SUB_LEN) + SUB_LEN

dataset_new = dataset[i*SUB_LEN:sub_max].select_fields("ground_truth").clone()

dataset_new.name = NAME+SUB_PART+'_'+str(i)

dataset_new.persistent = True

dataset_new.annotate(anno_key+'_'+str(i), label_field="ground_truth", url="http://176.36.189.106", project_name=NAME+TRAIN_VAL+SUB_PART)

print("Uploading dataset:", NAME+SUB_PART+'_'+str(i))

time.sleep(2)

print("list of datasets:", fo.list_datasets())

This loading method has a bug (or a feature?) - CVAT takes chunks of 300–500–1000 photos, no more - this code breaks the dataset into parts and uploads them to the server. This is also convenient if you have multiple people - you can assign each person equal parts.

Labeling rules

When labeling, it is important to take the time to create rules. People should understand exactly what needs to be labeled, what rules to follow, what to delete, and so on. These rules will be highly individual, but here are the issues that I encountered the most when labeling my dataset:

- What is the minimum size of objects worth labeling?

- In which cases should labeling be corrected, and in which cases the photo should be removed from the dataset?

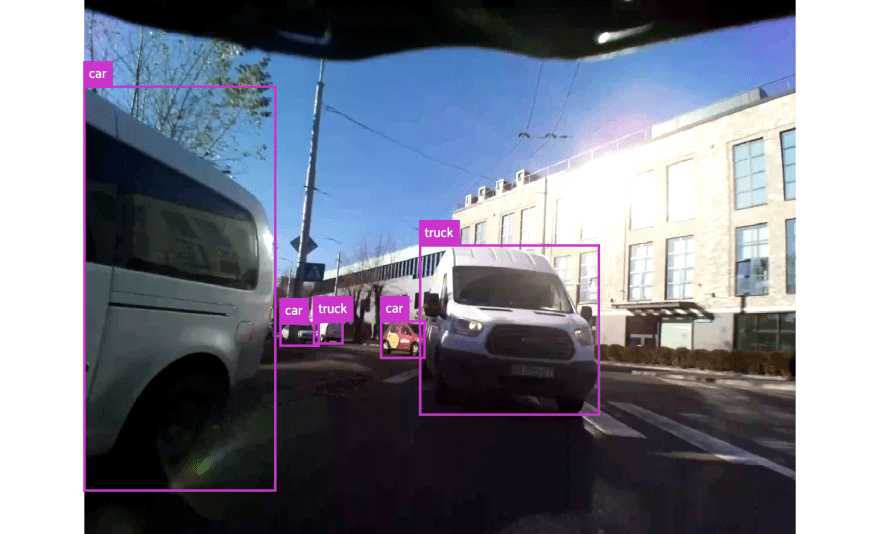

- Which cars belong to which class - there is often a fine line between car and truck, as there are trucks of different sizes, and examples need to be shown of which class they belong to.

- Should people and bicycles be labeled separately, or are they the same class? In my case, we labeled separately bicycle and the person on it.

Also, with night photos, there were questions like:

- Deleting blurry photos

- Labeling objects only with clear boundaries - if only the headlights are visible, don't label it (as it may just be a street light or headlight)

It will also take time for people to learn how to do fast and high-quality labeling (ironically, to train neural networks, you need to fine-tune the annotator's (brain) neural network first).

Downloading data

After the dataset has been labeled and verified, it needs to be downloaded and turned into a fiftyone dataset.

def CVAT_download():

print("list of datasets:", fo.list_datasets())

dataset = Import_base_dataset()

dataset_len = len(dataset)

dataset_parts = int(dataset_len / SUB_LEN)

if dataset_len % SUB_LEN != 0:

#more parts

dataset_parts = dataset_parts + 1

print("Dataset len:", dataset_len, "parts:", dataset_parts)

for i in range(dataset_parts):

old_dataset = fo.load_dataset(NAME+SUB_PART+'_'+str(i))

print("Downloading dataset:", NAME+SUB_PART+'_'+str(i))

dataset.get_annotation_info(anno_key+'_'+str(i))

old_dataset.load_annotations(anno_key+'_'+str(i), url="http://176.36.189.106", project_name=NAME+TRAIN_VAL+SUB_PART)

dataset.merge_samples(

old_dataset,

key_fcn=lambda sample: os.path.basename(sample.filepath),

insert_new=False)

time.sleep(1)

This code also breaks the dataset into parts and downloads them separately. I recommend keeping versions before and after manual labeling.

Annotation team

The convenience of having a server with CVAT is that it can be accessible to annotators from anywhere, and it is perfectly normal to hire a team of remote annotators. Each need to have a PC with a sufficiently large screen, as it may be necessary to annotate small objects.

A team of annotators and the infrastructure described in this article I needed to label my dataset, which was collected from cameras on the road (30k+ photos). This dataset was necessary to train an object detection model on six classes: [person, car, bus, bicycle, motorcycle, truck]. I released the dataset, created in this manner, as open source, and it can be downloaded here (link) together with trained YOLOv5s and YOLOv5x models from a popular repository (link) using this dataset. The license is simple: "Use it well"!

How I created a new dataset in 1 week

During the project, I needed to recognize license plates. This task consists of two parts: detecting the plate itself (bounding box) and recognizing the license number. Attempting to find an open-source solution did not yield results - either none were available or they were in an outdated format (I needed to run the neural network on the Coral EdgeTPU) or performed poorly on my images. I decided to create this dataset on my own.

Now, the established infrastructure and prepared team played a crucial role in the speed of the work. Using photos from labelled dataset, I selected the most suitable ones (where license plates were clearly visible). I was unable to use auto-labeling because I did not have a suitable neural network. I established new labeling rules (which changed significantly for this task) and uploaded the photos to CVAT. Labeling for a single class is quick, so 10k photos with license plates were ready in approximately one week with the efforts of two people.

Next, I trained YOLOv5s and YOLOv5m. Afterwards, I released both the models and the dataset as open source (link) with the same license: "Use it well".

Conclusion

In general, this is all you need to know about in-house dataset labeling. Currently, there are many cloud-based solutions for this, so my guide is suitable for those who work with sensitive clients' data.

References:

- https://github.com/opencv/cvat

- https://docs.voxel51.com/

- https://betterprogramming.pub/how-i-made-my-own-computer-vision-dataset-for-bicycle-safety-ai-f39153bfe9ca

- https://github.com/Valdiolus/Rear_view_camera_dataset

- https://github.com/Valdiolus/License_plate_detection_dataset

- https://github.com/ultralytics/yolov5

Top comments (0)