As both a programming nerd and a literature nerd 🤓 I've recently been trying to find more ways to unite the two. I'm currently spending the summer at the Recurse Center doing my own self-directed learning, so it seemed like a great time to start examining the overlap in this Venn diagram.

Going down this rabbit hole, I got obsessed with this adjacency matrix of characters in Les Misérables and started wondering what else I might be able to learn about my favorite books with code.

I've been working through the book Natural Language Processing with Python, and I also love Lewis Carroll's use of language, including his tendency to just invent words and rely on context and sound symbolism to make them comprehensible.

With that in mind, I was thinking about how to identify uncommon or invented words in a text.

So, armed with the text of Alice in Wonderland, I started on my quest (pedantic note: "Jabberwocky" in fact appears in Through the Looking Glass, so going into this I wasn't sure what, if any, invented words I should expect to find in Alice).

The book is kind enough to supply a function that should do this very thing:

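```python
import nltk

def unusual_words(text):
    # Lowercased alphabetic tokens from the text
    text_vocab = set(w.lower() for w in text if w.isalpha())
    # Lowercased entries from NLTK's English word list
    english_vocab = set(w.lower() for w in nltk.corpus.words.words())
    unusual = text_vocab.difference(english_vocab)
    return sorted(unusual)
```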

So I plugged that in and gave it a try. I got a list of 582 words back. That seemed like an awful lot, so I scanned through some of them. Plenty of them seemed to be pretty common: eggs, grins, happens, presented.

Ok, so maybe this was going to be harder than I thought. I got a little frustrated and tried some other corpora. But this corpus had a quarter-million words in it; surely it should include such incredibly common words? Then I noticed something about the flagged words: most of the nouns were plural (e.g. eggs) and the verbs were conjugated (e.g. presented).

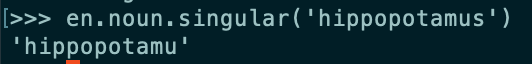
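To make the pattern concrete, here is a minimal sketch that swaps the NLTK word list for a tiny hand-made vocabulary. The names `toy_vocab` and `toy_text` are made up for illustration, and the assumption that the vocabulary holds only base forms is a simplification of what the real list appears to do.

```python
# Toy stand-in for nltk.corpus.words.words(): base forms only.
# This is an assumption for illustration, not the real word list.
toy_vocab = {"egg", "grin", "happen", "present", "the", "cat"}

# Tokens as they might appear in running text.
toy_text = ["The", "cat", "grins", "eggs", "presented", "happens"]

def unusual_words(text, vocab):
    """Return the sorted lowercase alphabetic tokens of `text` missing from `vocab`."""
    text_vocab = set(w.lower() for w in text if w.isalpha())
    return sorted(text_vocab.difference(vocab))

flagged = unusual_words(toy_text, toy_vocab)
print(flagged)  # ['eggs', 'grins', 'happens', 'presented']
```

Every flagged word is an ordinary word in an inflected form, which is the same thing that seemed to be happening with the real list.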

I wanted to get the words into their most "basic" forms: singular for nouns and infinitive for verbs. To this end, I tried the NodeBox English Linguistics Library. There were a couple of hiccups here. For example, is_verb did not return true for all conjugated verbs, and singular had some unexpected results. So I ended up running the infinitive and singular methods on every word and keeping the result whenever it was non-empty and different from the starting word.

Since this method is much slower than the simple set difference in the original method, I implemented it as a second pass, only to be used on words that were not found in the first diff. I rewrote unusual_words as follows:

```python
def unusual_words(text):
    text_vocab = set(w.lower() for w in text if w.isalpha())
    english_vocab = set(w.lower() for w in nltk.corpus.words.words())
    # First pass: cheap set difference
    unusual = text_vocab.difference(english_vocab)
    # Second pass: normalize only the leftovers, then diff again
    basic_forms = set(basic_word_form(w) for w in unusual)
    return sorted(basic_forms.difference(english_vocab))
```

And wrote a new method basic_word_form, which used the NodeBox methods:

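```python
import en  # NodeBox English Linguistics

def basic_word_form(word):
    word = word.lower()
    # Try the noun route first: keep the singular if it changed the word
    singular = en.noun.singular(word)
    if singular != word:
        return singular
    # Otherwise fall back to the verb infinitive, if one was found
    infinitive = en.verb.infinitive(word)
    return infinitive if len(infinitive) > 0 else word
```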

This got me back a list of 92 words. Not too bad. Some were Roman numerals, some were proper nouns (France, London, Shakespeare), others legitimately unusual or invented (seaography, gryphon). There were a few that seemed out of place still though, like smallest, larger, loveliest--comparatives and superlatives. I didn't see anything in NodeBox that might help with reducing these to their base adjectives or adverbs. There were also a few verbs, like dreamed, which had not been correctly converted to their infinitive forms.

I noticed that there was a tool in the Natural Language Toolkit (NLTK) that purported to do just this--get the base (or "stem") form of a word.

I tried two different "Stemmers," the Porter Stemmer and the Snowball Stemmer:

```python
def unusual_words_porter(text):
    text_vocab = set(w.lower() for w in text if w.isalpha())
    english_vocab = set(w.lower() for w in nltk.corpus.words.words())
    unusual = text_vocab.difference(english_vocab)
    basic_forms = set(basic_word_form_porter(w) for w in unusual)
    return sorted(basic_forms.difference(english_vocab))

def basic_word_form_porter(word):
    word = word.lower()
    stemmer = nltk.PorterStemmer()
    return stemmer.stem(word)

def unusual_words_snowball(text):
    text_vocab = set(w.lower() for w in text if w.isalpha())
    english_vocab = set(w.lower() for w in nltk.corpus.words.words())
    unusual = text_vocab.difference(english_vocab)
    basic_forms = set(basic_word_form_snowball(w) for w in unusual)
    return sorted(basic_forms.difference(english_vocab))

def basic_word_form_snowball(word):
    word = word.lower()
    stemmer = nltk.SnowballStemmer('english')
    return stemmer.stem(word)
```

These returned lists of 187 and 188 words, respectively. The stemmers didn't seem to do exactly what I needed--they often lopped off verb endings or plural suffixes such that what remained was not an English word. Some of the outputs: trembl, turtl, difficulti.
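To show why suffix stripping can leave non-words behind, here is a deliberately crude sketch. It is not the Porter or Snowball algorithm; `TOY_RULES` and `toy_stem` are made-up names with a handful of invented rules, chosen only because they happen to reproduce the three outputs above.

```python
# A deliberately crude suffix stripper. This is NOT the Porter or Snowball
# algorithm, just a toy with invented rules for illustration.
TOY_RULES = [("ies", "i"), ("ing", ""), ("es", ""), ("s", "")]

def toy_stem(word):
    """Apply the first matching suffix rule; return the word unchanged otherwise."""
    word = word.lower()
    for suffix, replacement in TOY_RULES:
        if word.endswith(suffix):
            return word[: len(word) - len(suffix)] + replacement
    return word

# Tiny hypothetical vocabulary holding the dictionary forms.
toy_vocab = {"tremble", "turtle", "difficulty"}

for w in ["trembling", "turtles", "difficulties"]:
    stem = toy_stem(w)
    status = "in vocab" if stem in toy_vocab else "not in vocab"
    print(w, "->", stem, "(" + status + ")")
```

A stem only needs to be a consistent key for grouping related words, not a dictionary entry, so checking stems against a word list works against the grain of what stemmers are built for.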

I found one more tool: the lemmatizer. It is meant to reduce words to their "lemma," "the canonical, dictionary or citation form of a word." Sounds promising.

```python
>>> from nltk.stem import WordNetLemmatizer
>>> lemmatizer = WordNetLemmatizer()
>>> lemmatizer.lemmatize('hedgehogs')
u'hedgehog'
>>> lemmatizer.lemmatize('says')
u'say'
```

I noticed that it worked much better if you specified the part of speech of the word through an optional second argument.

```python
>>> lemmatizer.lemmatize('prettier')
'prettier'
>>> lemmatizer.lemmatize('smallest')
'smallest'
>>> lemmatizer.lemmatize('smallest', pos='a')
u'small'
>>> lemmatizer.lemmatize('prettier', pos='a')
u'pretty'
```

Problem is, I didn't know what part of speech the words were, and some might be different ones depending on context, which I didn't have. So I tried each one without a part of speech, then as an adjective, a verb, and a noun, and returned the first one that didn't match the starting word. 🤷

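```python
# Assumes `lemmatizer = WordNetLemmatizer()` from the session above.
def basic_word_form(word):
    word = word.lower()
    # lemmatize() defaults to pos='n', so the last entry repeats the first.
    lemmatized_forms = [
        lemmatizer.lemmatize(word),
        lemmatizer.lemmatize(word, pos='a'),
        lemmatizer.lemmatize(word, pos='v'),
        lemmatizer.lemmatize(word, pos='n'),
    ]
    for form in lemmatized_forms:
        if form != word:
            return form
    return word
```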
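One possible variation, offered as a sketch rather than something run against the full text: prefer the first candidate that actually appears in the vocabulary, and only fall back to the "first form that differs" rule when none does. To keep the example runnable on its own, a hypothetical lookup table (`TOY_LEMMAS`) stands in for WordNet; in practice you could pass `lemmatizer.lemmatize` as the `lemmatize` argument.

```python
# Hypothetical lookup table standing in for WordNetLemmatizer.lemmatize.
TOY_LEMMAS = {
    ("smallest", "a"): "small",
    ("dreamed", "v"): "dream",
    ("eggs", "n"): "egg",
}

def toy_lemmatize(word, pos="n"):
    """Return the toy lemma for (word, pos), or the word itself if unknown."""
    return TOY_LEMMAS.get((word, pos), word)

def basic_word_form_checked(word, vocab, lemmatize=toy_lemmatize):
    """Return the first lemma candidate found in `vocab`, else fall back."""
    word = word.lower()
    candidates = [lemmatize(word, pos=p) for p in ("n", "a", "v")]
    for form in candidates:
        if form in vocab:
            return form
    # Nothing matched the vocabulary: keep the first-difference rule.
    for form in candidates:
        if form != word:
            return form
    return word

toy_vocab = {"small", "dream", "egg"}
print(basic_word_form_checked("Smallest", toy_vocab))  # small
print(basic_word_form_checked("brillig", toy_vocab))   # brillig
```

The trade-off is one extra membership check per candidate, in exchange for not committing to a lemma that is itself missing from the word list.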

This produced the shortest list yet, at 71 words. The main false positives appeared to be British spellings (skurried, neighbour, curtsey), Roman numerals, and real words inexplicably missing from the original corpus (kid, proud). But a lot of the words did seem to be truly unusual.
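The Roman numerals, at least, could be filtered out mechanically. Here is a minimal sketch using a common regular expression for numerals from 1 to 3999; the helper names `is_roman_numeral` and `drop_roman_numerals` and the sample list are made up for illustration.

```python
import re

# Common pattern for Roman numerals from 1 to 3999, matched case-insensitively.
ROMAN_RE = re.compile(
    r"^M{0,3}(CM|CD|D?C{0,3})(XC|XL|L?X{0,3})(IX|IV|V?I{0,3})$",
    re.IGNORECASE,
)

def is_roman_numeral(word):
    """True if `word` is a non-empty, well-formed Roman numeral."""
    return bool(word) and ROMAN_RE.match(word) is not None

def drop_roman_numerals(words):
    """Filter Roman numerals out of a list of candidate unusual words."""
    return [w for w in words if not is_roman_numeral(w)]

candidates = ["gryphon", "iv", "seaography", "xii", "skurried", "vii"]
print(drop_roman_numerals(candidates))  # ['gryphon', 'seaography', 'skurried']
```

A few real words, such as mix, are also well-formed numerals. Since the filter would only run on words already missing from the word list, that overlap should rarely matter.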

I decided to do one last experiment, which was to run the text of Through the Looking Glass through the same process. I expected I'd find many more invented words, including the famous vorpal, uffish, brillig, and slithy from "Jabberwocky."

This text was not included in the NLTK by default, so I grabbed it from Project Gutenberg.
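A downloaded text arrives as one raw string rather than a list of tokens, so it has to be split into words before it can go through `unusual_words`. The sketch below uses a plain regular expression as a crude stand-in for a real tokenizer; `tokens_from_raw` and `tokens_from_file` are hypothetical helpers, and the path you pass would be wherever you saved the file.

```python
import re

def tokens_from_raw(raw):
    """Split raw text into alphabetic tokens (a crude stand-in for a tokenizer)."""
    return re.findall(r"[A-Za-z]+", raw)

def tokens_from_file(path):
    """Read a plain-text file saved locally and tokenize it."""
    with open(path, encoding="utf-8") as f:
        return tokens_from_raw(f.read())

sample = "'Twas brillig, and the slithy toves\nDid gyre and gimble in the wabe"
print(tokens_from_raw(sample)[:4])  # ['Twas', 'brillig', 'and', 'the']
```

Two caveats: this regular expression splits contractions at the apostrophe, and Project Gutenberg files carry a license header and footer that get tokenized along with the book unless you trim them first.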

When I ran it through, I got 87 unusual words, including brillig, callay, callooh, frumious, etc. Funnily enough, vorpal and slithy seem to have made their way into the NLTK corpus of "real" words, so they were not returned. 😂

This was my first foray into natural language processing tools. This is clearly not the most efficient way to do this, so I'm curious to find other possible approaches. Let me know what you think and if you have questions or ideas!
