AI-powered applications are evolving beyond just autonomous agents performing tasks. A new approach involving Human-in-the-Loop allows users to provide feedback, review results, and decide the next steps for the AI. These run-time agents are known as CoAgents.

TL;DR

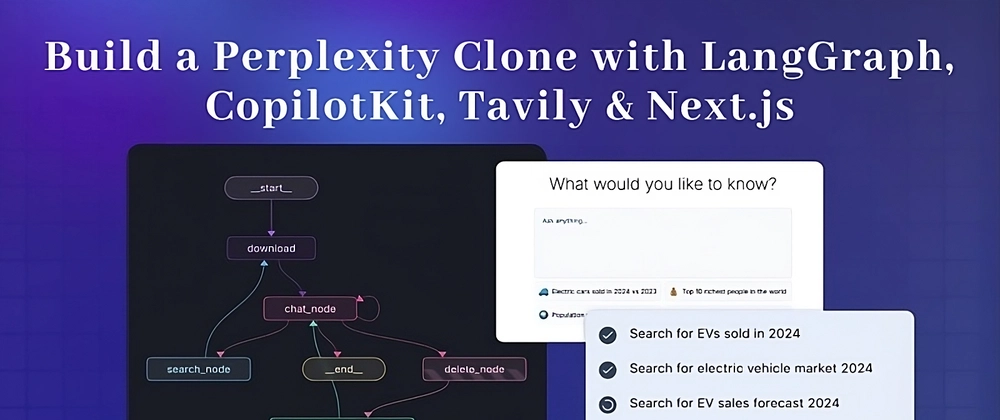

In this tutorial, you'll learn how to build a Perplexity clone using LangGraph, CopilotKit, and Tavily.

Time to start building!

What is an Agentic Copilot?

Agentic copilots are how CopilotKit brings LangGraph agents into your application.

CoAgents are CopilotKit's approach to building agentic experiences!

In short, the CoAgent built in this tutorial handles user requests by running multiple search queries and streaming their status and results back to the client in real time.

Prerequisites

To fully understand this tutorial, you need to have a basic understanding of React or Next.js.

We'll also make use of the following:

- Python - a popular programming language for building AI agents with LangGraph; make sure it is installed on your computer.

- LangGraph - a framework for creating and deploying AI agents. It also helps to define the control flows and actions to be performed by the agent.

- OpenAI API Key - to enable us to perform various tasks using the GPT models; for this tutorial, ensure you have access to the GPT-4 model.

- Tavily AI - a search engine that enables AI agents to conduct research and access real-time knowledge within the application.

- CopilotKit - an open-source copilot framework for building custom AI chatbots, in-app AI agents, and text areas.

- shadcn/ui - provides a collection of reusable UI components for the application.

How to Create AI Agents with LangGraph and CopilotKit

In this section, you'll learn how to create an AI agent using LangGraph and CopilotKit.

First, clone the CopilotKit CoAgents starter repository. The ui directory contains the frontend for the Next.js application, and the agent directory holds the CoAgent for the application.

Inside the agent directory, install the project dependencies using Poetry.

cd agent

poetry install

Create a .env file within the agent folder and copy your OpenAI and Tavily AI API keys into the file:

OPENAI_API_KEY=

TAVILY_API_KEY=
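Before starting the agent, it can help to confirm that both keys are actually set. The sketch below is a minimal check that uses only the standard library. The helper name `missing_keys` is hypothetical and is not part of the starter repository.

```python
import os

# The two variables the .env file above is expected to define.
REQUIRED_KEYS = ("OPENAI_API_KEY", "TAVILY_API_KEY")


def missing_keys(env=None):
    """Return the required keys that are unset or empty in `env`.

    `missing_keys` is a hypothetical helper for this tutorial, not a
    CopilotKit or LangGraph function.
    """
    env = os.environ if env is None else env
    return [key for key in REQUIRED_KEYS if not env.get(key, "").strip()]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing API keys:", ", ".join(missing))
    else:
        print("All API keys are set.")
```

Note that `os.environ` only sees the values from the .env file after something has loaded them, so a check like this belongs after a call such as `load_dotenv()`.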

Copy the code snippet below into the agent.py file:

"""
This is the main entry point for the AI.
It defines the workflow graph and the entry point for the agent.
"""
# pylint: disable=line-too-long, unused-import

from langgraph.graph import StateGraph, END
from langgraph.checkpoint.memory import MemorySaver

from ai_researcher.state import AgentState
from ai_researcher.steps import steps_node
from ai_researcher.search import search_node
from ai_researcher.summarize import summarize_node
from ai_researcher.extract import extract_node


def route(state):
    """Route to research nodes."""
    if not state.get("steps", None):
        return END

    current_step = next((step for step in state["steps"] if step["status"] == "pending"), None)

    if not current_step:
        return "summarize_node"

    if current_step["type"] == "search":
        return "search_node"

    raise ValueError(f"Unknown step type: {current_step['type']}")


# Define a new graph
workflow = StateGraph(AgentState)
workflow.add_node("steps_node", steps_node)
workflow.add_node("search_node", search_node)
workflow.add_node("summarize_node", summarize_node)
workflow.add_node("extract_node", extract_node)

# Chatbot
workflow.set_entry_point("steps_node")

workflow.add_conditional_edges(
    "steps_node",
    route,
    ["summarize_node", "search_node", END]
)

workflow.add_edge("search_node", "extract_node")

workflow.add_conditional_edges(
    "extract_node",
    route,
    ["summarize_node", "search_node"]
)

workflow.add_edge("summarize_node", END)

memory = MemorySaver()
graph = workflow.compile(checkpointer=memory)

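The code snippet above defines the LangGraph agent workflow. It starts at steps_node; the route function then sends each pending search step to search_node and on to extract_node, and once no pending steps remain it hands off to summarize_node, after which the graph ends. If the state contains no steps at all, the graph ends immediately.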
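To see the routing in isolation, the sketch below copies the decision logic of route and walks it by hand over a sample state. It is dependency-free: `END` is a plain string standing in for langgraph's END constant, `trace` is a hypothetical helper, and the "complete" status value is an assumption (any value other than "pending" has the same effect on routing).

```python
END = "__end__"  # stand-in for langgraph.graph.END so the sketch has no dependencies


def route(state):
    """Same decision logic as route() in agent.py."""
    if not state.get("steps"):
        return END
    current_step = next((s for s in state["steps"] if s["status"] == "pending"), None)
    if not current_step:
        return "summarize_node"
    if current_step["type"] == "search":
        return "search_node"
    raise ValueError(f"Unknown step type: {current_step['type']}")


def trace(state):
    """Walk the graph by hand, marking one pending step as done per loop.

    Hypothetical helper: the real search_node and extract_node do the
    actual work; here we only flip the status so the loop can advance.
    """
    visited = ["steps_node"]
    while True:
        target = route(state)
        visited.append(target)
        if target != "search_node":
            break
        visited.append("extract_node")
        step = next(s for s in state["steps"] if s["status"] == "pending")
        step["status"] = "complete"  # assumed status value
    if visited[-1] == "summarize_node":
        visited.append(END)
    return visited


if __name__ == "__main__":
    sample = {
        "steps": [
            {"type": "search", "status": "pending"},
            {"type": "search", "status": "pending"},
        ]
    }
    print(" -> ".join(trace(sample)))
```

With two pending search steps, the walk visits search_node and extract_node twice before reaching summarize_node, which matches the two conditional edges in the graph.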

Next, create a demo.py file with the code snippet below:

"""Demo"""

import os
from dotenv import load_dotenv

load_dotenv()  # load the variables from the .env file into the environment

from fastapi import FastAPI
import uvicorn
from copilotkit.integrations.fastapi import add_fastapi_endpoint
from copilotkit import CopilotKitSDK, LangGraphAgent
from ai_researcher.agent import graph

app = FastAPI()

sdk = CopilotKitSDK(
    agents=[
        LangGraphAgent(
            name="ai_researcher",
            description="Search agent.",
            graph=graph,
        )
    ],
)

add_fastapi_endpoint(app, sdk, "/copilotkit")


# add new route for health check
@app.get("/health")
def health():
    """Health check."""
    return {"status": "ok"}


def main():
    """Run the uvicorn server."""
    port = int(os.getenv("PORT", "8000"))
    uvicorn.run("ai_researcher.demo:app", host="0.0.0.0", port=port, reload=True)

The code above creates a FastAPI endpoint that hosts the LangGraph agent and connects it to the CopilotKit SDK.
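Once the server is running, the /health route offers a quick way to confirm it is reachable before wiring up the frontend. The sketch below is a minimal probe using only the standard library. The helper names `is_healthy` and `check_health` are hypothetical, and the default URL assumes the server runs locally on the default port 8000 from demo.py.

```python
import json
import urllib.error
import urllib.request


def is_healthy(body):
    """Return True if `body` is the JSON payload the /health route returns."""
    try:
        return json.loads(body).get("status") == "ok"
    except (ValueError, AttributeError):
        # Not valid JSON, or valid JSON that is not an object.
        return False


def check_health(base_url="http://localhost:8000", timeout=2.0):
    """Call GET /health and report whether the agent server answered as expected.

    The base URL is an assumption: adjust it if PORT is set to another value.
    """
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as response:
            return is_healthy(response.read())
    except (urllib.error.URLError, OSError):
        # Server not running, connection refused, or request timed out.
        return False


if __name__ == "__main__":
    print("agent server healthy:", check_health())
```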

You can copy the remaining code for creating the CoAgent from the GitHub repository. In the following sections, you'll learn how to build the user interface for the Perplexity clone and handle search requests using CopilotKit.

Building the application interface with Next.js

In this section, I'll walk you through the process of building the user interface for the application.

First, create a Next.js TypeScript project by running the code snippet below:

# 👉🏻 Navigate into the ui folder

npx create-next-app ./

Install the shadcn/ui library in the newly created project by running the code snippet below:

npx shadcn@latest init

Next, create a components folder at the root of the Next.js project, then copy the ui folder from this GitHub repository into that folder. Shadcn allows you to easily add various components to your application by installing them via the command line.

In addition to the Shadcn components, you'll need to create a few components representing different parts of the application interface. Run the following code snippet inside the components folder to add these components to the Next.js project:

touch ResearchWrapper.tsx ResultsView.tsx HomeView.tsx

touch AnswerMarkdown.tsx Progress.tsx SkeletonLoader.tsx

Copy the code snippet below into the app/page.tsx file:

"use client";

import { ResearchWrapper } from "@/components/ResearchWrapper";
import { ResearchProvider } from "@/lib/research-provider";

export default function Home() {
  return (
    <ResearchProvider>
      <ResearchWrapper />
    </ResearchProvider>
  );
}

In the code snippet above, ResearchProvider is a custom React context provider that shares the user's search query and results, making them accessible to all components within the application. The ResearchWrapper component contains the core application elements and manages the UI.

Create a lib folder containing a research-provider.tsx file at the root of the Next.js project and copy the code below into the file:

import { createContext, useContext, useState, ReactNode, useEffect } from "react";

type ResearchContextType = {
  researchQuery: string;
  setResearchQuery: (query: string) => void;
  researchInput: string;
  setResearchInput: (input: string) => void;
  isLoading: boolean;
  setIsLoading: (loading: boolean) => void;
  researchResult: ResearchResult | null;
  setResearchResult: (result: ResearchResult) => void;
};

type ResearchResult = {
  answer: string;
  sources: string[];
};

const ResearchContext = createContext<ResearchContextType | undefined>(undefined);

export const ResearchProvider = ({ children }: { children: ReactNode }) => {
  const [researchQuery, setResearchQuery] = useState<string>("");
  const [researchInput, setResearchInput] = useState<string>("");
  const [researchResult, setResearchResult] = useState<ResearchResult | null>(null);
  const [isLoading, setIsLoading] = useState<boolean>(false);

  useEffect(() => {
    if (!researchQuery) {
      setResearchResult(null);
      setResearchInput("");
    }
  }, [researchQuery, researchResult]);

  return (
    <ResearchContext.Provider
      value={{
        researchQuery,
        setResearchQuery,
        researchInput,
        setResearchInput,
        isLoading,
        setIsLoading,
        researchResult,
        setResearchResult,
      }}
    >
      {children}
    </ResearchContext.Provider>
  );
};

export const useResearchContext = () => {
  const context = useContext(ResearchContext);
  if (context === undefined) {
    throw new Error("useResearchContext must be used within a ResearchProvider");
  }
  return context;
};

The states are declared and saved to the ResearchContext to ensure they are properly managed across multiple components within the application.

Create a ResearchWrapper component as shown below:

import { HomeView } from "./HomeView";
import { ResultsView } from "./ResultsView";
import { AnimatePresence } from "framer-motion";
import { useResearchContext } from "@/lib/research-provider";

export function ResearchWrapper() {
  const { researchQuery, setResearchInput } = useResearchContext();

  return (
    <>
      <div className="flex flex-col items-center justify-center relative z-10">
        <div className="flex-1">
          {researchQuery ? (
            <AnimatePresence
              key="results"
              onExitComplete={() => {
                setResearchInput("");
              }}
              mode="wait"
            >
              <ResultsView key="results" />
            </AnimatePresence>
          ) : (
            <AnimatePresence key="home" mode="wait">
              <HomeView key="home" />
            </AnimatePresence>
          )}
        </div>
        <footer className="text-xs p-2">
          <a
            href="https://copilotkit.ai"
            target="_blank"
            rel="noopener noreferrer"
            className="text-slate-600 font-medium hover:underline"
          >
            Powered by CopilotKit 🪁
          </a>
        </footer>
      </div>
    </>
  );
}

The ResearchWrapper component renders the HomeView component as the default view and displays the ResultsView component when a search query is provided. The useResearchContext hook gives us access to the researchQuery state so the view can be updated accordingly.

Finally, create the HomeView component to render the application home page interface.

"use client";

import { useEffect, useState } from "react";
import { Textarea } from "./ui/textarea";
import { cn } from "@/lib/utils";
import { Button } from "./ui/button";
import { CornerDownLeftIcon } from "lucide-react";
import { useResearchContext } from "@/lib/research-provider";
import { motion } from "framer-motion";
import { useCoAgent } from "@copilotkit/react-core";
import { TextMessage, MessageRole } from "@copilotkit/runtime-client-gql";
import type { AgentState } from "../lib/types";
import { useModelSelectorContext } from "@/lib/model-selector-provider";

const MAX_INPUT_LENGTH = 250;

export function HomeView() {
  const { setResearchQuery, researchInput, setResearchInput } =
    useResearchContext();
  const { model } = useModelSelectorContext();
  const [isInputFocused, setIsInputFocused] = useState(false);

  const {
    run: runResearchAgent,
  } = useCoAgent<AgentState>({
    name: "ai_researcher",
    initialState: {
      model,
    },
  });

  const handleResearch = (query: string) => {
    setResearchQuery(query);
    runResearchAgent(() => {
      return new TextMessage({
        role: MessageRole.User,
        content: query,
      });
    });
  };

  const suggestions = [
    { label: "Electric cars sold in 2024 vs 2023", icon: "🚙" },
    { label: "Top 10 richest people in the world", icon: "💰" },
    { label: "Population of the World", icon: "🌍 " },
    { label: "Weather in Seattle VS New York", icon: "⛅️" },
  ];

  return (
    <motion.div
      initial={{ opacity: 0, y: -50 }}
      animate={{ opacity: 1, y: 0 }}
      exit={{ opacity: 0 }}
      transition={{ duration: 0.4 }}
      className="h-screen w-full flex flex-col gap-y-2 justify-center items-center p-4 lg:p-0"
    >
      <h1 className="text-4xl font-extralight mb-6">
        What would you like to know?
      </h1>

      <div
        className={cn(
          "w-full bg-slate-100/50 border shadow-sm rounded-md transition-all",
          {
            "ring-1 ring-slate-300": isInputFocused,
          }
        )}
      >
        <Textarea
          placeholder="Ask anything..."
          className="bg-transparent p-4 resize-none focus-visible:ring-0 focus-visible:ring-offset-0 border-0 w-full"
          onFocus={() => setIsInputFocused(true)}
          onBlur={() => setIsInputFocused(false)}
          value={researchInput}
          onChange={(e) => setResearchInput(e.target.value)}
          onKeyDown={(e) => {
            if (e.key === "Enter" && !e.shiftKey) {
              e.preventDefault();
              handleResearch(researchInput);
            }
          }}
          maxLength={MAX_INPUT_LENGTH}
        />
        <div className="text-xs p-4 flex items-center justify-between">
          <div
            className={cn("transition-all duration-300 mt-4 text-slate-500", {
              "opacity-0": !researchInput,
              "opacity-100": researchInput,
            })}
          >
            {researchInput.length} / {MAX_INPUT_LENGTH}
          </div>
          <Button
            size="sm"
            className={cn("rounded-full transition-all duration-300", {
              "opacity-0 pointer-events-none": !researchInput,
              "opacity-100": researchInput,
            })}
            onClick={() => handleResearch(researchInput)}
          >
            Research
            <CornerDownLeftIcon className="w-4 h-4 ml-2" />
          </Button>
        </div>
      </div>

      <div className="grid grid-cols-2 w-full gap-2 text-sm">
        {suggestions.map((suggestion) => (
          <div
            key={suggestion.label}
            onClick={() => handleResearch(suggestion.label)}
            className="p-2 bg-slate-100/50 rounded-md border col-span-2 lg:col-span-1 flex cursor-pointer items-center space-x-2 hover:bg-slate-100 transition-all duration-300"
          >
            <span className="text-base">{suggestion.icon}</span>
            <span className="flex-1">{suggestion.label}</span>
          </div>
        ))}
      </div>
    </motion.div>
  );
}

How to Connect your CoAgent to a Next.js Application

In this section, you'll learn how to connect the CopilotKit CoAgent to your Next.js application to enable users to perform search operations within the application.

Install the following CopilotKit packages and the OpenAI Node.js SDK. The CopilotKit packages allow the co-agent to interact with the React state values and make decisions within the application.

npm install @copilotkit/react-core @copilotkit/react-ui @copilotkit/runtime @copilotkit/runtime-client-gql openai

Create an api folder within the Next.js app folder. Inside the api folder, create a copilotkit directory containing a route.ts file. This will create an API endpoint (/api/copilotkit) that connects the frontend application to the CopilotKit CoAgent.

cd app

mkdir api && cd api

mkdir copilotkit && cd copilotkit

touch route.ts

Copy the code snippet below into the api/copilotkit/route.ts file:

import { NextRequest } from "next/server";
import {
  CopilotRuntime,
  OpenAIAdapter,
  copilotRuntimeNextJSAppRouterEndpoint,
} from "@copilotkit/runtime";
import OpenAI from "openai";

//👇🏻 initializes OpenAI as the adapter
const openai = new OpenAI();
const serviceAdapter = new OpenAIAdapter({ openai } as any);

//👇🏻 connects the CopilotKit runtime to the CoAgent
const runtime = new CopilotRuntime({
  remoteEndpoints: [
    {
      url: process.env.REMOTE_ACTION_URL || "http://localhost:8000/copilotkit",
    },
  ],
});

export const POST = async (req: NextRequest) => {
  const { handleRequest } = copilotRuntimeNextJSAppRouterEndpoint({
    runtime,
    serviceAdapter,
    endpoint: "/api/copilotkit",
  });

  return handleRequest(req);
};

The code snippet above sets up the CopilotKit runtime at the /api/copilotkit API endpoint, allowing CopilotKit to process user requests through the AI co-agent.

Finally, update the app/page.tsx file by wrapping the entire application with the CopilotKit component, which provides the copilot context to all application components.

"use client";

import { ModelSelector } from "@/components/ModelSelector";
import { ResearchWrapper } from "@/components/ResearchWrapper";
import { ModelSelectorProvider, useModelSelectorContext } from "@/lib/model-selector-provider";
import { ResearchProvider } from "@/lib/research-provider";
import { CopilotKit } from "@copilotkit/react-core";
import "@copilotkit/react-ui/styles.css";

export default function ModelSelectorWrapper() {
  return (
    <main className="flex flex-col items-center justify-between">
      <ModelSelectorProvider>
        <Home />
        <ModelSelector />
      </ModelSelectorProvider>
    </main>
  );
}

function Home() {
  const { useLgc } = useModelSelectorContext();

  return (
    <CopilotKit runtimeUrl={useLgc ? "/api/copilotkit-lgc" : "/api/copilotkit"} agent="ai_researcher">
      <ResearchProvider>
        <ResearchWrapper />
      </ResearchProvider>
    </CopilotKit>
  );
}

The CopilotKit component wraps the entire application and accepts two props - runtimeUrl and agent. The runtimeUrl is the backend API route that hosts the AI agent and agent is the name of the agent performing the action.

Accepting Requests and Streaming Responses to the Frontend

To access and process user input, CopilotKit provides the useCoAgent hook, which exposes the agent's state from anywhere within the application.

For example, the code snippet below demonstrates how to use the useCoAgent hook. The state variable allows access to the agent's current state, setState is used to modify the state, and the run function executes instructions using the agent. The start and stop functions initiate and halt the agent's execution.

const { state, setState, run, start, stop } = useCoAgent({
  name: "ai_researcher", // must match the agent name registered in demo.py
});

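Update the HomeView component to execute the agent when a search query is provided.

//👇🏻 import the useCoAgent hook and the message types from CopilotKit
import { useCoAgent } from "@copilotkit/react-core";
import { TextMessage, MessageRole } from "@copilotkit/runtime-client-gql";

const { run: runResearchAgent } = useCoAgent({
  name: "ai_researcher",
});

const handleResearch = (query: string) => {
  setResearchQuery(query);
  //👉🏻 starts the agent execution with the query as a user message
  runResearchAgent(() => {
    return new TextMessage({
      role: MessageRole.User,
      content: query,
    });
  });
};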

Next, you can stream the search results to the ResultsView by accessing the state variable within the useCoAgent hook. Copy the code snippet below into the ResultsView component.

"use client";

import { useResearchContext } from "@/lib/research-provider";
import { motion } from "framer-motion";
import { BookOpenIcon, LoaderCircleIcon, SparkleIcon } from "lucide-react";
import { SkeletonLoader } from "./SkeletonLoader";
import { useCoAgent } from "@copilotkit/react-core";
import { Progress } from "./Progress";
import { AnswerMarkdown } from "./AnswerMarkdown";

export function ResultsView() {
  const { researchQuery } = useResearchContext();

  //👇🏻 agent state
  const { state: agentState } = useCoAgent({
    name: "ai_researcher",
  });

  console.log("AGENT_STATE", agentState);

  //👇🏻 keeps track of the current agent processing state
  const steps =
    agentState?.steps?.map((step: any) => {
      return {
        description: step.description || "",
        status: step.status || "pending",
        updates: step.updates || [],
      };
    }) || [];

  const isLoading = !agentState?.answer?.markdown;

  return (
    <motion.div
      initial={{ opacity: 0, y: -50 }}
      animate={{ opacity: 1, y: 0 }}
      exit={{ opacity: 0, y: -50 }}
      transition={{ duration: 0.5, ease: "easeOut" }}
    >
      <div className='max-w-[1000px] p-8 lg:p-4 flex flex-col gap-y-8 mt-4 lg:mt-6 text-sm lg:text-base'>
        <div className='space-y-4'>
          <h1 className='text-3xl lg:text-4xl font-extralight'>
            {researchQuery}
          </h1>
        </div>

        <Progress steps={steps} />

        <div className='grid grid-cols-12 gap-8'>
          <div className='col-span-12 lg:col-span-8 flex flex-col'>
            <h2 className='flex items-center gap-x-2'>
              {isLoading ? (
                <LoaderCircleIcon className='animate-spin w-4 h-4 text-slate-500' />
              ) : (
                <SparkleIcon className='w-4 h-4 text-slate-500' />
              )}
              Answer
            </h2>
            <div className='text-slate-500 font-light'>
              {isLoading ? (
                <SkeletonLoader />
              ) : (
                <AnswerMarkdown markdown={agentState?.answer?.markdown} /> //👈🏼 displays search results
              )}
            </div>
          </div>

          {/* coerce the length to a boolean so an empty list does not render a stray 0 */}
          {!!agentState?.answer?.references?.length && (
            <div className='flex col-span-12 lg:col-span-4 flex-col gap-y-4 w-[200px]'>
              <h2 className='flex items-center gap-x-2'>
                <BookOpenIcon className='w-4 h-4 text-slate-500' />
                References
              </h2>
              <ul className='text-slate-900 font-light text-sm flex flex-col gap-y-2'>
                {agentState?.answer?.references?.map(
                  (ref: any, idx: number) => (
                    <li key={idx}>
                      <a
                        href={ref.url}
                        target='_blank'
                        rel='noopener noreferrer'
                      >
                        {idx + 1}. {ref.title}
                      </a>
                    </li>
                  )
                )}
              </ul>
            </div>
          )}
        </div>
      </div>
    </motion.div>
  );
}

The code snippet above retrieves the search results from the agent's state and streams them to the frontend using the useCoAgent hook. The search results are returned in markdown format and passed into the AnswerMarkdown component, which renders the content on the page.
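Judging from the fields ResultsView reads, the agent state on the Python side has roughly the shape sketched below. This is an assumption reconstructed from the component and from route in agent.py, not the contents of ai_researcher/state.py, which may differ. The type names, the `List[str]` type of updates, and the helpers `normalize_steps` and `is_loading` are hypothetical; the helpers mirror the defaults and the loading check the component applies.

```python
from typing import List, TypedDict


class Reference(TypedDict, total=False):
    url: str
    title: str


class Answer(TypedDict, total=False):
    markdown: str
    references: List[Reference]


class Step(TypedDict, total=False):
    type: str          # route() only recognizes "search"
    description: str
    status: str        # "pending" until the step has been processed
    updates: List[str]  # assumed element type


class ResearchState(TypedDict, total=False):
    steps: List[Step]
    answer: Answer


def normalize_steps(state):
    """Mirror of the defaults ResultsView applies to each step."""
    return [
        {
            "description": step.get("description") or "",
            "status": step.get("status") or "pending",
            "updates": step.get("updates") or [],
        }
        for step in (state.get("steps") or [])
    ]


def is_loading(state):
    """ResultsView shows the skeleton loader until answer.markdown is non-empty."""
    return not (state.get("answer") or {}).get("markdown")


if __name__ == "__main__":
    state: ResearchState = {"steps": [{"type": "search", "description": "Find sales data"}]}
    print(normalize_steps(state))
    print("loading:", is_loading(state))
```

Because the state streams in piece by piece, every field is treated as optional here, which is the same reason the component guards each access with optional chaining.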

Finally, copy the code snippet below into the AnswerMarkdown component. This will render the markdown content as formatted text using the React Markdown library.

import Markdown from "react-markdown";

export function AnswerMarkdown({ markdown }: { markdown: string }) {
  return (
    <div className='markdown-wrapper'>
      <Markdown>{markdown}</Markdown>
    </div>
  );
}

Congratulations! You've completed the project for this tutorial.

Wrapping it up

LLM intelligence is most effective when it works alongside human intelligence, and CopilotKit CoAgents allow you to integrate AI agents, copilots, and various types of assistants into your software applications in just a few minutes.

If you need to build an AI product or integrate AI agents into your app, you should consider CopilotKit.

The source code for this tutorial is available on GitHub:

https://github.com/CopilotKit/CopilotKit/tree/main/examples/coagents-ai-researcher

Thank you for reading!
