TL;DR

This guide shows you how to build a web app in Go that uses ElevenLabs generative voice AI to create lifelike speech from text. You can then deploy it to the cloud using Encore's free development cloud.

🚀 What we're doing:

- Install Encore & create an empty app

- Download the ElevenLabs Encore package

- Run the backend locally

- Create a simple frontend

- Deploy to the cloud

💽 Install Encore

Install the Encore CLI to run your local environment:

- macOS: `brew install encoredev/tap/encore`

- Linux: `curl -L https://encore.dev/install.sh | bash`

- Windows: `iwr https://encore.dev/install.ps1 | iex`

🛠 Create your app

Create a new Encore application with this command and select the Empty app starter:

encore app create

💾 Download the ElevenLabs package

- Download the `elevenlabs` package from https://github.com/encoredev/examples/tree/main/bits/elevenlabs and add it to the app directory you just created.

- Sync your project dependencies by running `go mod tidy`. (Note: This requires Go 1.21 or later.)

Get your ElevenLabs API Key

You'll need an API key from ElevenLabs to use this package. You can get one by signing up for a free account at https://elevenlabs.io.

Once you have the API key, save it as a secret named ElevenLabsAPIKey using Encore's secret manager by running:

encore secret set --type dev,prod,local,pr ElevenLabsAPIKey

🏁 Run your app locally

Start your application locally by running:

encore run

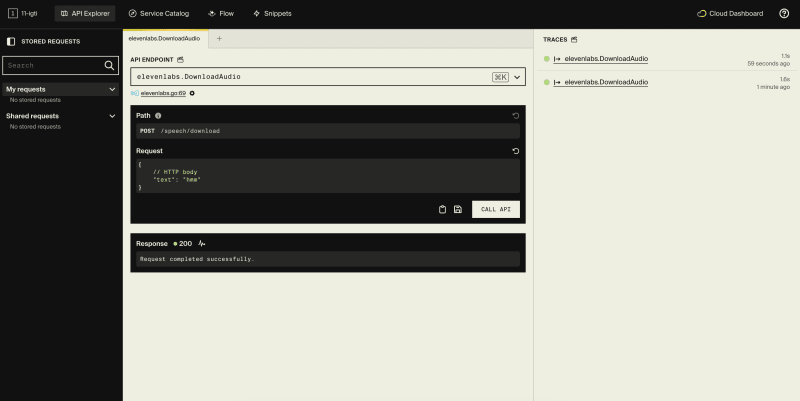

You can now open Encore's local development dashboard at http://localhost:9400 to browse your app's API documentation, call endpoints from the API Explorer, view traces, and more.

🧐 Try out the API

Now let's play around a bit with our shiny new API!

From the API Explorer in the local development dashboard, try calling the elevenlabs.DownloadAudio endpoint with the text input of your choice in the request body.

This generates an MP3 audio file and saves it as speech.mp3 in your app's root folder.

API Endpoints

Now that we know it works, let's review the API endpoints in the elevenlabs package.

- elevenlabs.ServeAudio: generates audio from text and serves it to the client as MPEG audio.

- elevenlabs.StreamAudio: generates audio from text and streams it to the client as MPEG audio.

- elevenlabs.DownloadAudio: generates audio from text and saves it to disk as an MP3 file.

🖼 Create a simple frontend

Now let's make our app more user-friendly by adding a simple frontend.

- Create a subfolder in your app root called `frontend`.

- Inside `/frontend`, create `frontend.go` and paste the following code into it:

```go
package frontend

import (
	"embed"
	"net/http"
)

var (
	//go:embed index.html
	dist embed.FS

	handler = http.StripPrefix("/frontend/", http.FileServer(http.FS(dist)))
)

// Serve serves the frontend for development.
// For production use we recommend deploying the frontend
// using Vercel, Netlify, or similar.
//
//encore:api public raw path=/frontend/*path
func Serve(w http.ResponseWriter, req *http.Request) {
	handler.ServeHTTP(w, req)
}
```

- Inside `/frontend`, create `index.html` and paste the following code into it:

```html
<!doctype html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport"
        content="width=device-width, user-scalable=no, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0">
  <meta http-equiv="X-UA-Compatible" content="ie=edge">
  <title>AI Speech Generator</title>
  <style>
    textarea {
      font-size: 18px;
    }

    button {
      display: block;
      border: 1px solid black;
      background: transparent;
      font-size: 20px;
      border-radius: 3px;
      margin-top: 10px;
      padding: 5px 10px;
    }
  </style>
</head>
<body>
<h1>Encore + ElevenLabs AI Speech Generator</h1>
<textarea id="text-to-speech" cols="40" rows="5">Hello dear, this is your computer speaking.</textarea>
<button id="speak-button">Say it!</button>
<script>
  const onSpeak = () => {
    const text = document.getElementById('text-to-speech').value;
    fetch('/speech/serve', {
      method: 'POST',
      headers: {'Content-Type': 'application/json'},
      body: JSON.stringify({text})
    })
      .then(res => {
        // Don't try to play an error response as audio.
        if (!res.ok) throw new Error(`Speech request failed: ${res.status}`);
        return res.blob();
      })
      .then(data => {
        const url = window.URL.createObjectURL(data);
        const audio = new Audio(url);
        // Free the object URL once playback has finished.
        audio.addEventListener('ended', () => window.URL.revokeObjectURL(url));
        audio.play();
      })
      .catch(err => console.error(err));
  };

  const button = document.getElementById('speak-button');
  button.addEventListener('click', onSpeak);
</script>
</body>
</html>
```

- Now restart your app by running `encore run` and open your new frontend at http://127.0.0.1:4000/frontend.

You should see a text box with a "Say it!" button below it. Enter some text and click the button to hear it spoken.

Congratulations, you now have a working AI voice generator! 🎉

🚀 Deploy to the cloud

Deploy your app to a free cloud environment in Encore's development cloud by running:

```shell
git add -A .
git commit -m 'Initial commit'
git push encore
```

👉 Then head over to the Cloud Dashboard to monitor your deployment. You'll find your app's URL on the overview page for the environment you just created. It will look something like https://staging-[APP-ID].encr.app.

Once the deploy is complete, open your app's frontend in your browser: https://staging-[APP-ID].encr.app/frontend.

🎉 Great job - you're done!

You now have an AI-powered app running in the cloud.

Keep building with Encore using these Open Source App Templates. 👈

If you have questions or want to share your work, join the developer hangout in Encore's community Slack. 👈
