Explore some examples of Granite 3.2 in action

By Bill Higgins, Anthony Colucci

This blog was originally published on IBM Developer.

Our latest release of IBM Granite models included two new models with reasoning capabilities, as well as our first vision model.

The new Granite 3.2 language models can “think” through tasks by breaking down problems into multiple steps before answering, while Granite-Vision-3.2-2B processes information from multiple types of data, such as text and images. These capabilities broaden the possibilities of what developers can build with our family of fit-for-purpose, efficient and trusted AI models.

In this blog, we'll explore some examples of Granite 3.2 in action. And, if you want to get your hands on the keyboard, you can download the models from Hugging Face and use the following code tutorials to get started.

1. Turn a PDF into an FAQ document

Retrieval-augmented generation (RAG) enhances a large language model by connecting it to a knowledge base of information outside its training data, with no fine-tuning required. Traditionally, this technique has been limited to text-based use cases, like summarization and chatbots. But with multimodal models like Granite-Vision-3.2-2B, images and other forms of data can feed the knowledge base.

This tutorial from IBM explains how to create an AI system that answers user queries based on unstructured data in a PDF.

Learn how in this tutorial, "Build an AI-powered multimodal RAG system with Docling and Granite."
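To make the retrieval step in RAG concrete, here is a minimal sketch in plain Python. It is not the tutorial's code: the function names (`retrieve`, `build_prompt`) and the sample chunks are hypothetical, a simple word-overlap score stands in for the embedding search a real system would use, and the image description is assumed to have been produced beforehand by a vision model. The resulting prompt would then be sent to a language model, which is left out here.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, k=2):
    """Return up to k chunks that share words with the query, best match first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:k] if score > 0]


def build_prompt(query, chunks, k=2):
    """Assemble a prompt that grounds the model in the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base: text chunks plus a description of an image.
# In a multimodal pipeline, a vision model is assumed to have written the
# image description before indexing.
CHUNKS = [
    "Refunds are issued within 14 days of a return request.",
    "Figure 3 (image description): a bar chart of quarterly revenue by region.",
    "Support is available by email on weekdays.",
]

if __name__ == "__main__":
    print(build_prompt("What does the revenue chart show?", CHUNKS, k=1))
```

Because the image is indexed as text alongside the document's paragraphs, a question about the chart retrieves the image description the same way a question about refunds retrieves the refund policy.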

2. Debug code with just an image

After building a web app with Granite’s code generation capabilities, one influencer showed off the new reasoning and vision capabilities by adding a bug and uploading a screenshot of the modified code. Granite was able to read the screenshot to detect the bug and suggest a fix for it.

Learn how in this demo video, "IBM Granite 3.2: Writing / Debugging code with AI in seconds."

3. Get tips on how to dress to impress

In a tutorial on building a personal stylist with Granite 3.2, learn what you can do by pairing reasoning and vision capabilities. Whether you’re heading to the park for a casual summer afternoon or off to a formal winter gala, the stylist suggests the most appropriate outfit for the occasion, based on pictures of what’s in your closet, and explains the reasoning behind its choice.

See how in this tutorial, "Build an AI stylist with IBM Granite using watsonx.ai."

4. Turn a picture into 1,000 words

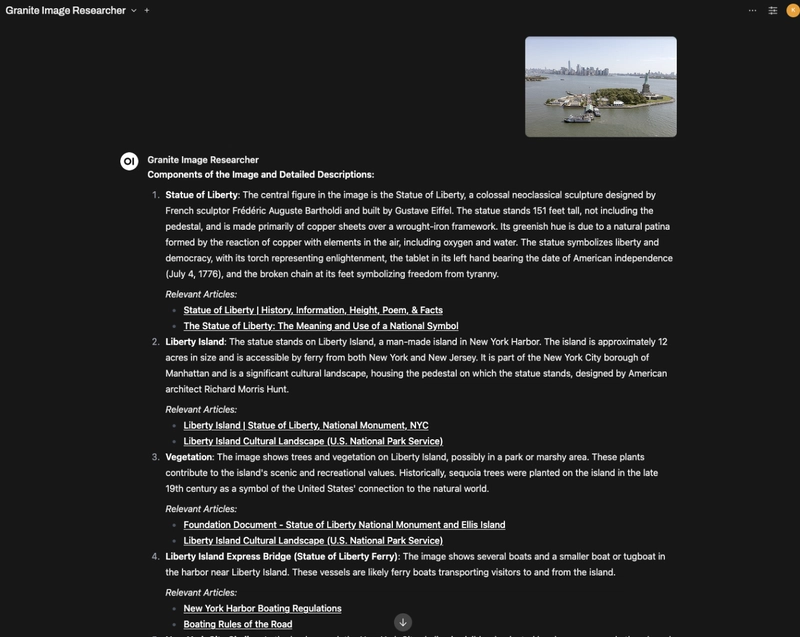

This tutorial shows how you can develop an AI research agent that can analyze images and provide additional context for everything from complex technical diagrams to historical photographs. By pairing our first vision model with agentic workflows, the tool can provide actionable insights and reference relevant information from the Internet and other uploaded documents.

Learn how in this tutorial, "Build an AI research agent for image analysis with Granite 3.2 Reasoning and Vision models."

Here's what the agent shared based on a picture of the Statue of Liberty:

5. Manage a budget

Another influencer created an AI financial assistant that provides personalized recommendations on how to manage her budget. Instead of uploading an actual spreadsheet, she needed only an image of her spending history to generate these insights. This capability highlights our vision model’s strong performance in document understanding: it excels at processing images, charts, diagrams and other media common in enterprise use cases.

See how in this reel, "The secret to optimizing your finances."

Want more?

For more ideas on how to get started with Granite 3.2, explore the Granite Cookbook.

Explore other tutorials that take advantage of the powerful Granite models.
