Originally published on my blog

Puppeteer is an awesome Node.js library that provides a lot of commands to control a headless (or not) Chromium instance and automate navigation with a few lines of code. In this post we are going to use Puppeteer's superpowers to build a scraper that collects car information from a second-hand car catalog and helps choose the best option.

A few days ago, my teammate and great friend @mafesernaarbole and I were reading about web scraping and the different online tools she needed for a personal project. Looking through articles and repositories, we found Puppeteer, a high-level API to control headless Chrome over the DevTools Protocol. That great tool piqued our interest and, although in the end it wasn't useful for her, we both said "Hell yeah! We have to do something with this!!". A couple of days later, I told her that Puppeteer would be a great topic for my blog's first article... and here I am. I hope you enjoy it.

Our Case Study

The idea is pretty simple: there is a second-hand car catalog in our country, Colombia, called tucarro.com.co. Basically, given the make and model of a vehicle, tucarro.com.co offers a list of matching second-hand cars for sale across the country. The thing is, a potential customer has to go through those results one by one and analyze which is the best choice (or choices).

So, our focus is to create a small Node.js app that navigates the catalog website and searches as a human would. Then we take the first page of results and scrape its information (specifically the car year, kilometers traveled and price... and of course the ad URL). Finally, with that information and a simple optimization heuristic, we offer the customer the best choice (or choices) based on price and kilometers traveled.

Disclaimer: This exercise has academic purposes only, with no commercial interest. We don't store any of the extracted data, which belongs to tucarro.com.co. The app's source code is distributed under the MIT license, exempting us from any responsibility for derivative work. We highly recommend using it responsibly.

Initial Setup

We are about to create a Node.js application, so the first step, of course, is to create a new npm project in a new directory. With the -y flag, package.json is created with default values:

$ npm init -y

Then add the puppeteer dependency to your project:

$ npm install --save puppeteer

# or, if you prefer Yarn:

$ yarn add puppeteer

Finally, add the following script to our package.json file:

"scripts": {
  "start": "node index.js"
}

This script simplifies running our app: now we can start it with just the npm start command.

Important: As you'll see soon, our Puppeteer code relies on the async and await keywords, so we need a Node.js version that supports them (this article uses v9.2.0, but both have been supported since v7.6). Current Puppeteer releases require a considerably newer Node.js version, so check the requirements of the release you install.

Let's Rock

With our npm project configured, the next step is, yes, coding! Let's create our index.js file. Here is the skeleton of our Puppeteer app:

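'use strict'
const puppeteer = require('puppeteer')

async function run() {
  // Launch a Chromium instance (headless by default) and open a new tab
  const browser = await puppeteer.launch()
  const page = await browser.newPage()

  // Navigation and scraping steps go here

  // close() returns a promise, so wait for the browser process to exit
  await browser.close()
}

run().catch(console.error)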

Basically, we import the puppeteer dependency and declare an async function that wraps all browser/Puppeteer interactions. Inside it, we get a Chromium browser instance and open a new tab (page). At the end we close the browser (and its process), and finally we run the async function.
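One caveat with this skeleton: if anything between launch() and close() throws, the browser process is left running. A common remedy is a try/finally block. The sketch below wraps that pattern in a hypothetical withBrowser helper (not part of Puppeteer); it takes the launch function as a parameter, so it can be tried with a fake browser object, and in the app you would pass () => puppeteer.launch().

```javascript
'use strict'

// Hypothetical helper: launches a browser, runs `task(page)` and always closes
// the browser, even when `task` throws. `launch` is any async function that
// resolves to an object with newPage() and close(), e.g. () => puppeteer.launch().
async function withBrowser(launch, task) {
  const browser = await launch()
  try {
    const page = await browser.newPage()
    // Whatever the task resolves to becomes the helper's result
    return await task(page)
  } finally {
    // Runs on success and on failure, so no Chromium process is left behind
    await browser.close()
  }
}
```

In index.js it could be called as withBrowser(() => puppeteer.launch(), async page => { await page.goto('https://www.tucarro.com.co/') }). Passing the launcher in, instead of requiring puppeteer inside the helper, is only a design choice that keeps the cleanup logic testable on its own.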

Navigating to our target site

Going to a specific website is a simple task using our tab instance (page). We just need to use the goto method:

await page.goto('https://www.tucarro.com.co/')

Here is how the site looks in the browser

Searching

Our goal is to scrape the first page of results without any kind of filter, ergo all makes. To do that, we just need to interact with the website and click the Buscar (Search) button, which we can do with the click method of our page instance.

await page.waitForSelector('.nav-search-submit')
await page.click('button[type=submit]');

Note that the first line makes our script wait for a specific element to load: we use it to make sure the Buscar button is rendered before clicking it. The second line clicks the button, which brings up the following screen.

The surprise here is that motorcycles were loaded too, so we need to use the category link for cars and trucks, Carros y Camionetas, with the same click method, again first making sure the link has been rendered.

await page.waitForSelector('#id_category > dd:nth-child(2) > h3 > a')
await page.click('#id_category > dd:nth-child(2) > h3 > a');

And there we go, now we have our car results page... let's scrape it!

Note: the selectors used in this section were found by exploring the site's HTML in the browser and/or using the DevTools Copy selector option.

Scrape it!

With the results page loaded, we just need to iterate over the DOM nodes and extract the information. Fortunately, Puppeteer can help us with that too.

await page.waitForSelector('.ch-pagination')

const cars = await page.evaluate(() => {
  // This callback runs inside the page, so it has access to `document`
  const results = Array.from(document.querySelectorAll('li.results-item'));
  // Turn each result node into a plain, serializable object
  return results.map(result => {
    return {
      link: result.querySelector('a').href,
      price: result.querySelector('.ch-price').textContent,
      name: result.querySelector('a').textContent,
      year: result.querySelector('.destaque > strong:nth-child(1)').textContent,
      kms: result.querySelector('.destaque > strong:nth-child(3)').textContent
    }
  });
});

console.log(cars)

In the script above, we use the evaluate method to inspect the results: its callback runs inside the page, where a few query selectors let us iterate over the result list and extract the information from each node. Only the returned plain objects come back to our Node.js script, producing an output like this for each item (car):

{ link: 'https://articulo.tucarro.com.co/MCO-460314674-ford-fusion-2007-_JM',
  price: '$ 23.800.000 ',
  name: ' Ford Fusion V6 Sel At 3000cc',
  year: '2007',
  kms: '102.000 Km' }
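One fragile spot in that callback: querySelector returns null when a result item lacks one of the expected elements (for example, an item without a price), and reading textContent from null throws, which aborts the whole evaluate call. A minimal null-safe lookup is sketched below; textOf is a hypothetical helper, and because evaluate serializes the callback and runs it in the page, the helper would have to be defined inside that callback rather than at the top of index.js. The fake item object is only an assumption so the helper can be tried in plain Node.js.

```javascript
'use strict'

// Hypothetical helper: returns the trimmed text of the first element under
// `root` matching `selector`, or null when there is no such element.
function textOf(root, selector) {
  const el = root.querySelector(selector)
  return el ? el.textContent.trim() : null
}

// Stand-in for an `li.results-item` node, so the helper runs outside the browser
const fakeItem = {
  querySelector: sel => (sel === '.ch-price' ? { textContent: '$ 23.800.000 ' } : null)
}

console.log(textOf(fakeItem, '.ch-price'))                        // prints: $ 23.800.000
console.log(textOf(fakeItem, '.destaque > strong:nth-child(1)'))  // prints: null
```

Returning null instead of throwing lets one incomplete ad be skipped later, instead of losing the whole page of results.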

Oh yeah! We got the information in a JSON-like structure. However, if we want to optimize over it, we need to normalize the data; after all, calculations are a bit complicated with those Km and $ symbols, aren't they? So we are going to change the results map fragment like this:

return results.map(result => {
  return {
    link: result.querySelector('a').href,
    // Keep only digits (and hyphens): '$ 23.800.000 ' becomes 23800000
    price: Number(result.querySelector('.ch-price').textContent.replace(/[^0-9-]+/g, '')),
    // Drop the surrounding whitespace from the title
    name: result.querySelector('a').textContent.trim(),
    year: Number(result.querySelector('.destaque > strong:nth-child(1)').textContent),
    // '102.000 Km' becomes 102000
    kms: Number(result.querySelector('.destaque > strong:nth-child(3)').textContent.replace(/[^0-9-]+/g, ''))
  }
});

Sure enough, regular expressions save the day: we have numbers where we want numbers.
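The expression /[^0-9-]+/g removes every character that is not a digit or a hyphen, so the thousands separators (dots), the $ sign, spaces and the Km suffix all disappear before Number() converts what is left. One subtlety: Number('') is 0, so text with no digits at all would silently become a price or mileage of 0. The hypothetical toNumber helper below reuses the same expression but returns null in that case; as with any helper used inside evaluate, it would need to live inside the callback.

```javascript
'use strict'

// Hypothetical helper using the same expression as the scraper:
// strip everything except digits and hyphens, then convert.
function toNumber(text) {
  if (typeof text !== 'string') return null
  const digits = text.replace(/[^0-9-]+/g, '')
  // Number('') is 0, so treat an empty result as "no number" explicitly
  if (digits === '') return null
  const value = Number(digits)
  // Leftovers such as '-' or '1-2' convert to NaN; report those as null too
  return Number.isFinite(value) ? value : null
}

console.log(toNumber('$ 23.800.000 ')) // 23800000
console.log(toNumber('102.000 Km'))    // 102000
console.log(toNumber('2007'))          // 2007
console.log(toNumber('N/A'))           // null
```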

Optimization time!!

At this point we have already had a taste of Puppeteer's flavors, which was the main goal of this article. In this last section we use a simple heuristic to pick the best car from the scraped data: a function that calculates a score for each vehicle, so we can rate them and choose the best option. For that purpose we consider the following points:

- Each variable gets a weight based on its importance to the potential customer: price has 4, and year and kms have 3 each.

- Since kms and price should be minimized, we use their values as the denominator of a fraction, so lower values yield higher scores.

- For ease of calculation we scale the variables: each price is divided by 1 million, and year and kms by 1 thousand.

This is the final formula (disclaimer: it is a hypothetical formula made up to complete this exercise, so it lacks any mathematical or scientific value in real life):

score = 4 × (1 / price) + 3 × year + 3 × (1 / kms), with price in millions and year and kms in thousands
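To see how the scaling plays out, here is the formula applied by hand to the Ford Fusion from the sample output above ($ 23.800.000, 2007, 102.000 Km), so price = 23.8, year = 2.007 and kms = 102 after scaling:

```latex
\begin{aligned}
\text{score} &= 4\cdot\frac{1}{23.8} + 3\cdot 2.007 + 3\cdot\frac{1}{102} \\
             &\approx 0.1681 + 6.0210 + 0.0294 \\
             &\approx 6.2185
\end{aligned}
```

The numbers also show that the year term adds roughly 6 to every car built between 2000 and 2019 (3 × 2.000 = 6.000 up to 3 × 2.019 = 6.057), so in practice the ranking is driven mostly by the price and kms terms.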

And here is the code snippet with that formula:

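// Keep track of the highest-scoring car seen so far
let car = {score: 0}

for (let i = 0; i < cars.length; i++) {
  // Price scaled to millions, year and kms scaled to thousands
  cars[i].score = (4 * (1 / (cars[i].price / 1000000))) +
                  (3 * (cars[i].year / 1000)) +
                  (3 * (1 / (cars[i].kms / 1000)))

  if (cars[i].score > car.score) {
    car = cars[i]
  }
}

console.log(car)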

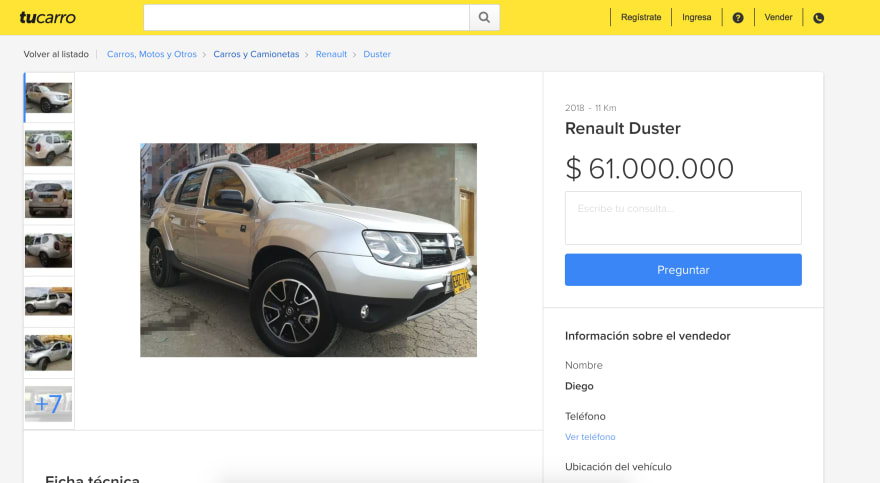
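Since the goal was to offer the best choice (or choices), it can be handy to rank every car instead of keeping only the winner. The sketch below applies the same formula in a hypothetical score function and returns the top n cars with a sort. It also skips cars with missing or zero price, year or kms: in JavaScript 1 / 0 is Infinity, so a listing with 0 Km would otherwise win automatically, while a NaN score never compares greater than anything. The sample array is illustrative input, not real listings.

```javascript
'use strict'

// Same hypothetical formula: price scaled to millions, year and kms to thousands
function score(car) {
  return 4 * (1 / (car.price / 1000000)) +
         3 * (car.year / 1000) +
         3 * (1 / (car.kms / 1000))
}

// Hypothetical helper: returns the `n` highest-scoring cars, best first,
// ignoring entries whose numbers are missing or would divide by zero.
function topCars(cars, n) {
  return cars
    .filter(car => car.price > 0 && car.kms > 0 && car.year > 0)
    // Copy each car instead of mutating the scraped objects
    .map(car => Object.assign({}, car, { score: score(car) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, n)
}

// Illustrative input only (not real listings)
const sample = [
  { name: 'A', price: 23800000, year: 2007, kms: 102000 },
  { name: 'B', price: 20000000, year: 2012, kms: 60000 },
  { name: 'C', price: 0, year: 2015, kms: 0 }
]

console.log(topCars(sample, 2).map(car => car.name)) // [ 'B', 'A' ]
```

Here B scores about 6.286 and A about 6.218, while C is filtered out before scoring.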

Finally, with Puppeteer we visit the best car's link and take a screenshot:

await page.goto(car.link)
await page.waitForSelector('.gallery__thumbnail')
await page.screenshot({path: 'result.png', fullPage: true});

And that's it!

- The Puppeteer API documentation can be found right here!

- The complete source code for this exercise can be found in this GitHub repo.

- Yeah! The optimization section could certainly be improved with a machine learning technique or a proper optimization algorithm, but that is a topic for another post.

- Thanks for reading! Comments, suggestions and DMs are welcome!
