The opinion that React is poorly suited for search engine optimization is widespread. It's assumed that a React app sends crawlers almost no meaningful HTML, so it's useless for crawling and indexing.

If it's such a lousy fit for SEO, why do more than 11 million websites use React? Well, popular search engines like Google have executed JavaScript since the late 2000s. But that doesn't solve all of React's SEO issues.

Luckily, a framework called Next.js solves most of these problems.

Here you will find a concise description of the traits that make Next.js a great fit for SEO. But first, let's make sure you understand how search engines evaluate pages and how rendering affects SEO.

This post covers:

- What’s important for a search engine?

- Client-Side: HTML or JavaScript

- Longer Initial Load-Time in CSR

- Poor Link Discovery in CSR

- Why Next.js is Amazing for SEO

- Even Shorter Load Time

- Closing thoughts

What’s important for a search engine?

Search engines care about user experience. They aim to show, on the first results pages, websites that are not only relevant but also pleasant to navigate.

To do so, Google stepped into the users’ shoes and formally defined the metrics influencing user experience. These are called Core Web Vitals.

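They measure loading speed (Largest Contentful Paint), responsiveness (Interaction to Next Paint, which replaced First Input Delay in 2024), and visual stability (Cumulative Layout Shift). Google combines them with other page experience signals, such as HTTPS. Other search engines may calculate their metrics differently, but they measure similar things.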

Core Web Vitals are measured as the page loads and renders in real users' browsers. Separately, the crawler goes through the page, reads its metadata, and adds the page to its index accordingly. Then it finds links on the page and crawls them.

To rank well, a website needs relevant metadata that a crawler can read, and high scores on these metrics.
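Google publishes "good" and "poor" thresholds for each Core Web Vital. It recommends assessing them at the 75th percentile of page loads. The sketch below classifies a measured value against those thresholds, which shows what a "high score" means in practice. The function and type names are hypothetical. In a real project, you would collect the values with something like Google's `web-vitals` library or Next.js's `useReportWebVitals` hook.

```typescript
// Google's published thresholds for the three Core Web Vitals.
// LCP and INP are in milliseconds; CLS is a unitless layout-shift score.
type VitalName = "LCP" | "INP" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

const VITAL_THRESHOLDS: Record<VitalName, { good: number; poor: number }> = {
  LCP: { good: 2500, poor: 4000 }, // Largest Contentful Paint
  INP: { good: 200, poor: 500 },   // Interaction to Next Paint
  CLS: { good: 0.1, poor: 0.25 },  // Cumulative Layout Shift
};

// Hypothetical helper: rate one measured value (e.g. a 75th-percentile value).
function rateVital(metric: VitalName, value: number): Rating {
  const { good, poor } = VITAL_THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs-improvement";
  return "poor";
}

console.log(rateVital("LCP", 1800)); // → good
console.log(rateVital("INP", 350));  // → needs-improvement
console.log(rateVital("CLS", 0.3));  // → poor
```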

But how do you achieve that? It depends on the rendering strategy you choose.

Client-Side: HTML or JavaScript

The days of hand-crafted HTML websites are long gone. Most pages are now generated by running JS scripts, either on the server or on the client. Which of the two strategies is better for SEO, and how does each affect the metrics?

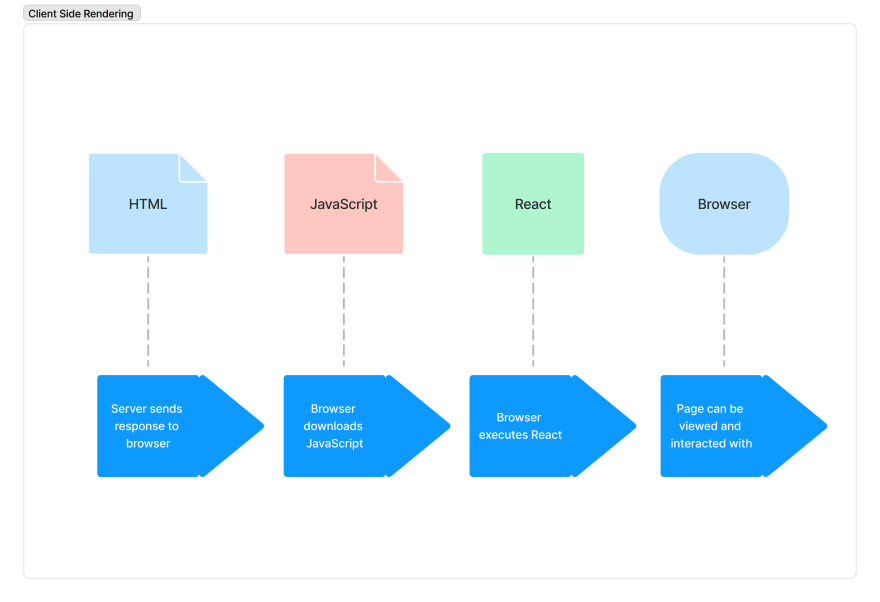

Client-side rendering (CSR) happens when your browser receives JavaScript from the server, executes it, and displays the resulting HTML.

In server-side rendering (SSR), on the other hand, the rendering scripts don't run in the browser. They are executed on the server each time a user requests a page, and the browser receives ready-made HTML.

In the past, search engines could not execute JavaScript. Bots could only parse HTML, so a client-rendered page gave them neither page descriptions nor URLs for further crawling.
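The difference becomes clear when you compare the HTML each approach sends. In this sketch, the function names and the `/bundle.js` path are hypothetical. The server-rendered response already contains the heading, description, and links. The client-rendered response is an empty shell that only fills in after its script runs, so a bot that parses HTML without executing JavaScript finds nothing useful in it.

```typescript
interface PageContent {
  title: string;
  summary: string;
  related: string[]; // paths of related pages
}

function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;")
    .replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// SSR: the server runs the rendering code and sends finished HTML.
function serverRender(page: PageContent): string {
  const links = page.related
    .map((p) => `<a href="${escapeHtml(p)}">${escapeHtml(p)}</a>`)
    .join("");
  const title = escapeHtml(page.title);
  return `<html><head><title>${title}</title></head><body>` +
    `<h1>${title}</h1><p>${escapeHtml(page.summary)}</p>${links}</body></html>`;
}

// CSR: the server sends an empty root element plus a script that fills it in later.
function clientShell(): string {
  return `<html><head><title></title></head><body>` +
    `<div id="root"></div><script src="/bundle.js"></script></body></html>`;
}

// Rough approximation of what an HTML-only bot can extract: visible text and links.
function htmlOnlyView(html: string): { text: string; links: string[] } {
  const links: string[] = [];
  const hrefPattern = /href="([^"]+)"/g;
  let match: RegExpExecArray | null;
  while ((match = hrefPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  const text = html
    .replace(/<script[\s\S]*?<\/script>/g, "") // scripts are not executed
    .replace(/<[^>]+>/g, " ")                  // drop tags, keep their text
    .replace(/\s+/g, " ")
    .trim();
  return { text, links };
}
```

Take a page titled "Blue Shoes" with one related link. `htmlOnlyView(serverRender(page))` finds the title, the summary, and the link. `htmlOnlyView(clientShell())` returns empty text and no links.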

As early as 2008, Google introduced JavaScript execution, making CSR pages indexable. The rendering was imperfect at first but improved over time. Some search engines still can't execute JavaScript today, but their market share is small.

You may ask: why does it still matter where your website is rendered if the browser ends up with the same HTML anyway? Read on to find the answer.

Longer Initial Load-Time in CSR

With SSR, your website's initial load time is shorter, so you score better on Core Web Vitals. Browsing often happens on devices with limited computing power, such as smartphones, where executing JS takes longer than it does on a server.

For a single-page application (SPA), the load time gets even longer because the browser often loads and executes the code for the whole website at once.

Moreover, the rendering request may time out in Google's Web Rendering Service queue, or the code may not execute at all because of errors.

Additionally, Google processes client-rendered websites in two waves. In the first wave, it indexes whatever HTML the server returns, adds the links it finds to the crawl queue, and records the HTTP status codes. In the second wave, it executes the JS scripts and indexes the fully rendered page.

Rendering needs more processing capacity than fetching HTML, so pages can wait in a queue until Googlebot has resources available. Only then does the crawler come back to finish its job, and that may happen hours or even a week later!

Poor Link Discovery in CSR

If your SPA is client-rendered, Googlebot may not be able to tell that your website has separate paths, because the whole SPA looks like a single page to the crawler.

What seems to be switching between pages for a user is, in fact, swapping fragments of the document object model (DOM). Unfortunately, search engines don't treat URL fragments (the part after `#`) as individual URLs, so you'll need a workaround. And there is one: you can use the History API to give each view a real URL. But it costs extra development time.
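Here is why fragment-based routes collapse. The part of a URL after `#` is never sent to the server, and search engines identify pages by URL without it. So `/#/about` and `/#/pricing` look like the same page. The hypothetical `crawlerKey` helper below mimics that behavior with the standard `URL` class by dropping the fragment. With History API routing, each view has a real path and therefore a distinct key.

```typescript
// Hypothetical helper: identify a page the way a crawler would,
// i.e. without the fragment, which never reaches the server.
function crawlerKey(raw: string): string {
  const url = new URL(raw);
  url.hash = ""; // drop "#/about", "#/pricing", ...
  return url.toString();
}

// Hash-based SPA routes: every view collapses into the same URL.
console.log(crawlerKey("https://example.com/#/about"));   // → https://example.com/
console.log(crawlerKey("https://example.com/#/pricing")); // → https://example.com/

// History API routes: each view has its own path and therefore its own URL.
console.log(crawlerKey("https://example.com/about"));     // → https://example.com/about
console.log(crawlerKey("https://example.com/pricing"));   // → https://example.com/pricing
```

In the browser, `history.pushState({}, "", "/about")` changes the address bar without a reload. The server must also answer a direct request for `/about`, or the crawler will get an error for that path.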

Okay, let’s say you got your paths crawled. Will the crawler obtain the relevant titles and descriptions to index the pages correctly?

In a plain React app, this is a problem because all the content lives on one page. How do you give each view its own head section with specific meta tags? There's a workaround for this too, but the extra JS code takes time to execute, so the load time increases.

Client-rendered SPAs are still a good choice for internal business projects with no SEO concerns, like back offices or dashboards. They switch between pages faster because there's no need to send more requests to the server and wait for the answers: all the content is already preloaded.

Why Next.js is Amazing for SEO

Next.js comes with certain SEO-enhancing features out of the box. Additionally, many libraries extend its SEO capabilities. Let's explore how built-in and third-party tools help solve the page discoverability and initial load time problems.

Discoverable Links and Meaningful Descriptions

For crawlers to find your website's pages, the paths should be clear, and the URL structure should be simple and follow a consistent pattern.

The good news is that Next.js derives the URL structure from your project's file structure and does so consistently. It also supports dynamic routes, so you don't have to rely on URL query parameters (Google doesn't like them). Search engines also handle duplicate URLs that lead to the same page poorly: they may split ranking signals between the URLs or index the wrong one. To fix that, you can declare a canonical URL for each page with a `<link rel="canonical">` tag in its head.
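With file-based routing, a dynamic route such as `pages/products/[slug].tsx` produces paths like `/products/blue-shoes` rather than `/products?id=42`. The sketch below builds the canonical tag for such a path. It applies one hypothetical normalization policy: lowercase host, no query string or fragment, no trailing slash. Your own policy may differ, for example if some query parameters really do change the page content.

```typescript
// Hypothetical normalization policy for canonical URLs.
function canonicalUrl(siteOrigin: string, path: string): string {
  const url = new URL(path, siteOrigin); // resolve the path; URL lowercases the host
  url.search = ""; // drop tracking and sorting parameters
  url.hash = "";
  // Remove trailing slashes, but keep the bare "/" for the home page.
  url.pathname = url.pathname.replace(/\/+$/, "") || "/";
  return url.toString();
}

function canonicalLinkTag(siteOrigin: string, path: string): string {
  return `<link rel="canonical" href="${canonicalUrl(siteOrigin, path)}">`;
}

// Three variants of the same product page share one canonical URL.
const variants = [
  "/products/blue-shoes",
  "/products/blue-shoes/",
  "/products/blue-shoes?utm_source=newsletter",
];
for (const variant of variants) {
  console.log(canonicalLinkTag("https://Example.com", variant));
  // → <link rel="canonical" href="https://example.com/products/blue-shoes">
}
```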

Remember that a client-rendered single-page application may have trouble providing a unique title and description for each page?

Well, server-side rendering solves this problem. Because pages are rendered and returned on request, unique head and meta tags can be inserted each time. Additionally, the `next/head` component allows for dynamic head rendering, so your meta tags stay relevant even for dynamically generated pages, such as product pages in an online shop.
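Here is roughly what per-request head rendering boils down to: each request for a product path produces its own `<title>` and `<meta name="description">`. The `ProductRecord` shape, the in-memory `CATALOG`, and the "Example Shop" suffix are hypothetical stand-ins for your data source. In a real Next.js page, you would put the same tags inside the `Head` component from `next/head` (Pages Router) or return them from `generateMetadata` (App Router), and React would handle the escaping.

```typescript
interface ProductRecord { slug: string; title: string; description: string; }

// Hypothetical in-memory catalog standing in for a database or CMS.
const CATALOG: ProductRecord[] = [
  { slug: "blue-shoes", title: "Blue Shoes", description: "Light running shoes for road & trail." },
  { slug: "red-shoes", title: "Red Shoes", description: "Cushioned shoes for long runs." },
];

function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;")
    .replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Runs on the server for each request, so every product gets its own head tags.
function renderProductHead(slug: string): string {
  const product = CATALOG.find((p) => p.slug === slug);
  if (!product) {
    // Unknown slug: keep the error page out of the index.
    return `<title>Not found</title><meta name="robots" content="noindex">`;
  }
  return `<title>${escapeHtml(product.title)} | Example Shop</title>` +
    `<meta name="description" content="${escapeHtml(product.description)}">`;
}

console.log(renderProductHead("blue-shoes"));
// → <title>Blue Shoes | Example Shop</title><meta name="description" content="Light running shoes for road &amp; trail.">
```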

Besides, many Next.js libraries provide support for SEO-improving protocols. Here are some examples.

Sitemap Protocol

On a big website, many pages may not be linked from anywhere. To get them crawled, you should submit a sitemap to Google. A sitemap is an XML file listing the paths on your website. Its purpose is to tell a crawler about the pages it wouldn't find otherwise.

When you add pages to your site, there's no need to update your sitemap manually. Next.js allows for dynamically generated sitemaps, so route changes are reflected in the XML file without manual edits. You can build your own sitemap generator or use the next-sitemap package.
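A sitemap follows the format defined by the sitemaps.org protocol: a `<urlset>` of `<url>` entries, each with a `<loc>` and, optionally, a `<lastmod>`. The sketch below builds one from a list of routes. The `SitemapRoute` type and route data are hypothetical. In the App Router, a `sitemap.ts` file in the `app` directory can return similar data, and Next.js serves it at `/sitemap.xml`. next-sitemap, by contrast, generates the file after the build.

```typescript
interface SitemapRoute {
  path: string;     // e.g. "/products/blue-shoes"
  lastMod?: string; // W3C date, e.g. "2024-05-01"
}

// <loc> values must be XML-escaped (for example "&" becomes "&amp;").
function escapeXml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;").replace(/'/g, "&apos;");
}

function buildSitemap(origin: string, routes: SitemapRoute[]): string {
  const entries = routes.map((r) => {
    const loc = `<loc>${escapeXml(new URL(r.path, origin).toString())}</loc>`;
    const lastmod = r.lastMod ? `<lastmod>${r.lastMod}</lastmod>` : "";
    return `  <url>${loc}${lastmod}</url>`;
  });
  return [
    `<?xml version="1.0" encoding="UTF-8"?>`,
    `<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">`,
    ...entries,
    `</urlset>`,
  ].join("\n");
}

// Regenerating from the current route list keeps the sitemap in sync.
console.log(buildSitemap("https://example.com", [
  { path: "/" },
  { path: "/products/blue-shoes", lastMod: "2024-05-01" },
]));
```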

Structured Metadata

There's another way to help Google understand the content of your web page better. You can embed a `<script type="application/ld+json">` block in your HTML that describes the page content in the JSON-LD format, giving search engines machine-readable information about it.

In return, your pages can become eligible for rich results in Google Search, such as product listings with prices. In Next.js, you can easily add JSON-LD structured data to any page.
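JSON-LD data usually uses the schema.org vocabulary. The sketch below builds a `Product` description. The input type and values are hypothetical, and Google's structured data documentation lists which properties it actually uses for rich results. Escaping `<` as `\u003c` stops a product name containing `</script>` from closing the tag early. In Next.js, you would render the JSON inside a `<script>` element with `dangerouslySetInnerHTML`.

```typescript
interface ProductInfo {
  name: string;
  description: string;
  price: number;
  currency: string; // ISO 4217 code, e.g. "EUR"
}

// Builds a schema.org Product object (hypothetical input shape).
function productJsonLd(p: ProductInfo): Record<string, unknown> {
  return {
    "@context": "https://schema.org",
    "@type": "Product",
    name: p.name,
    description: p.description,
    offers: {
      "@type": "Offer",
      price: p.price.toFixed(2),
      priceCurrency: p.currency,
    },
  };
}

// Serializes the data for a <script type="application/ld+json"> tag.
// "\u003c" is a valid JSON escape for "<", so parsers still read the original text.
function jsonLdScriptTag(data: Record<string, unknown>): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

console.log(jsonLdScriptTag(productJsonLd({
  name: "Blue Shoes", description: "Light running shoes.", price: 59.9, currency: "EUR",
})));
```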

Open Graph Protocol

You can also add Open Graph protocol support. Open Graph tags have a lot in common with SEO meta tags, but they don't improve your position in search results directly.

Instead, by providing a title, description, and preview image, they make your pages more visible on social networks, which may indirectly influence your place in the ranking. In Next.js, you can generate these tags, and even the OG images themselves, dynamically for each page.
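Open Graph tags are `<meta>` elements that use a `property` attribute instead of `name`. The sketch below renders the four basic properties (`og:title`, `og:type`, `og:image`, `og:url`) plus `og:description` for one page. The `OgData` type and URLs are hypothetical. In the App Router, the `openGraph` field of a page's metadata produces the same tags. In recent versions, `ImageResponse` from `next/og` can generate the preview image on demand.

```typescript
interface OgData {
  title: string;
  description: string;
  url: string;
  image: string; // absolute URL of the preview image
  type?: string; // "website" by default; e.g. "article" for blog posts
}

function escapeAttr(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/"/g, "&quot;")
    .replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Renders Open Graph <meta property="og:..."> tags for one page.
function openGraphTags(og: OgData): string[] {
  const props: [string, string][] = [
    ["og:title", og.title],
    ["og:description", og.description],
    ["og:type", og.type ?? "website"],
    ["og:url", og.url],
    ["og:image", og.image],
  ];
  return props.map(([property, content]) =>
    `<meta property="${property}" content="${escapeAttr(content)}">`);
}

console.log(openGraphTags({
  title: "Blue Shoes",
  description: "Fast & light running shoes",
  url: "https://example.com/products/blue-shoes",
  image: "https://example.com/og/blue-shoes.png",
}).join("\n"));
```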

Even Shorter Load Time

As mentioned above, rendering HTML on the server is faster.

However, SSR still ships some JavaScript to the client so the website can behave like a SPA after the first load, but that's far fewer scripts with a shorter execution time. Also, because the HTML already contains the content and links, the crawler doesn't have to wait for the second rendering wave to index your pages. This allows search engines to crawl your website more often.

What's more, the Next.js `Link` component prefetches pages automatically: in production, the browser downloads a linked page's resources when the link appears in the viewport, which makes switching between pages faster.
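The idea behind viewport prefetching fits in a few lines. In this hypothetical sketch, an `IntersectionObserver` callback would call `onLinkVisible` when a link scrolls into view. Each target is fetched at most once, so a later click finds it already cached. Next.js's `Link` component does this for you in production builds, so the sketch only illustrates the mechanism.

```typescript
type Fetcher = (href: string) => Promise<string>;

// Hypothetical prefetcher: fetch each visible link target once, reuse it on click.
function createPrefetcher(fetchPage: Fetcher) {
  const cache = new Map<string, Promise<string>>();

  function load(href: string): Promise<string> {
    let pending = cache.get(href);
    if (!pending) {
      pending = fetchPage(href).catch((err) => {
        cache.delete(href); // forget failures so a later click can retry
        throw err;
      });
      cache.set(href, pending);
    }
    return pending;
  }

  return {
    // Called when a link enters the viewport; prefetch errors are ignored.
    onLinkVisible(href: string): void {
      load(href).catch(() => undefined);
    },
    // Called on click: resolves immediately if the page was already prefetched.
    navigate(href: string): Promise<string> {
      return load(href);
    },
  };
}
```

For example, if a link to `/about` becomes visible twice and is then clicked, the fetcher runs only once.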

Probably the best thing about Next.js is its ability to combine rendering strategies page by page. If you need dynamically generated content, you can use SSR. That way, your HTML will be rendered on each request.

If a page's content doesn't change between requests, you can use Static Site Generation (SSG). It renders pages to HTML once at build time and then serves the same files to every visitor. If you have lots of individual pages that change occasionally, you can use Incremental Static Regeneration (ISR). It regenerates static pages after deployment at an interval you set, without rebuilding the whole site.
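ISR behaves like a stale-while-revalidate cache. In the Pages Router, you opt in by returning `revalidate` (in seconds) from `getStaticProps`. In the App Router, you export a `revalidate` constant from a page or layout. The hypothetical model below shows the resulting behavior: a page is served from cache. The first request after the interval still gets the cached copy, while a fresh copy is generated for later visitors. Real ISR regenerates in the background. Here, regeneration happens synchronously with an injected clock to keep the example deterministic.

```typescript
type RenderFn = (path: string) => string;

interface CachedPage { html: string; generatedAt: number; }

// Hypothetical model of Incremental Static Regeneration.
function createIsrCache(render: RenderFn, revalidateMs: number, now: () => number) {
  const pages = new Map<string, CachedPage>();

  return function serve(path: string): string {
    const cached = pages.get(path);
    if (!cached) {
      // First request for a path that was not pre-built: render and cache it.
      const html = render(path);
      pages.set(path, { html, generatedAt: now() });
      return html;
    }
    if (now() - cached.generatedAt > revalidateMs) {
      // Stale: store a regenerated copy for the next visitor...
      pages.set(path, { html: render(path), generatedAt: now() });
    }
    return cached.html; // ...but answer this request with the cached HTML.
  };
}

let isrClock = 0;
let isrVersion = 0;
const serveIsr = createIsrCache((p) => `${p} v${++isrVersion}`, 60000, () => isrClock);
console.log(serveIsr("/products")); // → /products v1
isrClock = 61000;
console.log(serveIsr("/products")); // → /products v1 (stale copy, triggers regeneration)
console.log(serveIsr("/products")); // → /products v2
```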

Choosing the right strategy for each page lets you optimize the metrics for that page's use case!

Additionally, Next.js comes with HTTP/2 support. HTTP/2 is a major revision of the HTTP protocol that aims to speed up internet exchanges between clients and servers. Using it positively affects the load speed.

Loading Things When You Need Them

Next.js lets you load resources only when you need them. If you have heavy images on your site, you can use lazy loading, which loads images only when they are about to appear in the viewport.

That's what Pinterest does to save you time, and in Next.js the `next/image` component lazy-loads images by default. Likewise, you can import specific components only when they're needed. This technique is called dynamic importing: your code is split into small chunks that are loaded on demand.
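At its core, on-demand loading is a loader that runs only the first time something is needed and then reuses the result. In Next.js, you get this from `next/dynamic` for components, or from a plain `import()` call, which the bundler turns into a separate chunk. The sketch below models the pattern with a hypothetical `lazy` helper and a fake loader standing in for `import("./HeavyChart")`, so it runs on its own.

```typescript
// Hypothetical helper: defer an expensive load until first use, then reuse it.
function lazy<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      // In a bundled app the loader would be () => import("./HeavyChart").
      pending = loader().catch((err) => {
        pending = undefined; // allow a retry after a failed load
        throw err;
      });
    }
    return pending;
  };
}

let chartLoads = 0;
// Stand-in for a heavy module.
const loadChart = lazy(async () => {
  chartLoads++;
  return { render: (points: number[]) => `chart with ${points.length} points` };
});

console.log(chartLoads); // → 0 (nothing loaded until the chart is needed)
loadChart().then((chart) => console.log(chart.render([1, 2, 3])));
loadChart(); // second request reuses the same pending load
console.log(chartLoads); // → 1
```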

Chances are you'll use third-party scripts on your website for marketing purposes, but they can be slow. In Next.js, the `next/script` component optimizes script loading: you can decide whether a script loads before the page becomes interactive, right after it does, or only once the browser is idle.
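`next/script` expresses this choice through its `strategy` prop. `beforeInteractive` loads a script before the page is hydrated, `afterInteractive` (the default) loads it right after hydration, and `lazyOnload` waits for browser idle time. The hypothetical sketch below only models the relative load order these strategies produce for a few typical scripts. It doesn't load anything, and the script names are made up.

```typescript
type ScriptStrategy = "beforeInteractive" | "afterInteractive" | "lazyOnload";

interface ThirdPartyScript { src: string; strategy?: ScriptStrategy; }

// Earlier phases start loading first; "afterInteractive" is the default, as in next/script.
const PHASE_ORDER: Record<ScriptStrategy, number> = {
  beforeInteractive: 0, // before hydration: only scripts the page needs immediately
  afterInteractive: 1,  // right after hydration: e.g. tag managers, analytics
  lazyOnload: 2,        // during idle time: chat widgets, social embeds
};

// Hypothetical helper: script sources in the order they would start loading.
function loadOrder(scripts: ThirdPartyScript[]): string[] {
  return scripts
    .map((s, i) => ({ s, i, phase: PHASE_ORDER[s.strategy ?? "afterInteractive"] }))
    .sort((a, b) => a.phase - b.phase || a.i - b.i) // keep source order within a phase
    .map(({ s }) => s.src);
}

console.log(loadOrder([
  { src: "/chat-widget.js", strategy: "lazyOnload" },
  { src: "/analytics.js" },
  { src: "/consent-manager.js", strategy: "beforeInteractive" },
]).join(", "));
// → /consent-manager.js, /analytics.js, /chat-widget.js
```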

Closing thoughts

It's evident now that client-side rendering can still hurt SEO. While JavaScript executes, errors can arise that prevent the crawler from reaching your meta tags and internal URLs. Additionally, heavy client-side JS can lower your ranking because it slows down page loading.

Next.js offers various combinations of server-side and static rendering that fix most of the issues with SPAs. With it, your website will have a clear URL structure and load large components on demand. Additionally, you can find libraries that help you enhance your SEO strategy.

All in all, if you are planning to build an SEO-oriented website, consider using Next.js.
