This is a Plain English Papers summary of a research paper called AI Image Generation Breakthrough: New Expert Race System Cuts Computing Costs While Boosting Quality. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

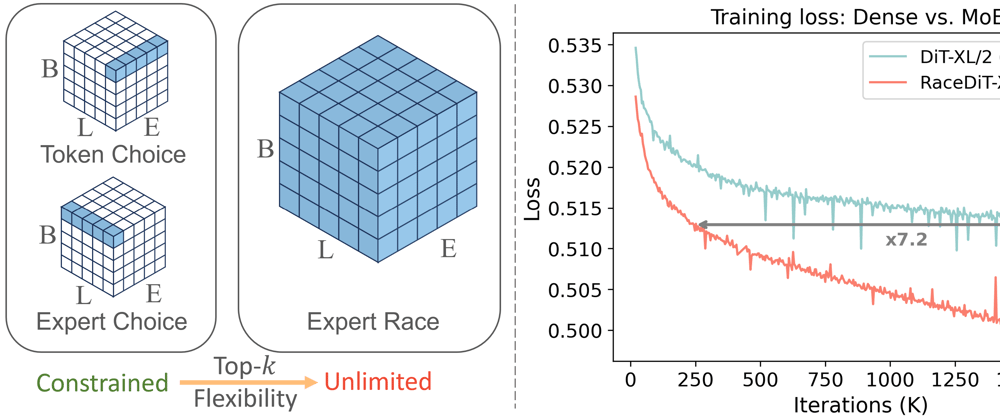

- Expert Race is a new routing strategy for Mixture of Experts (MoE) in diffusion models

- Improves on traditional top-k routing by letting tokens and experts "race" together for routing slots, so more experts can go to the tokens that need them most

- Significantly reduces computation while maintaining performance

- Introduces a DiT-MoE architecture that scales to 3.8B parameters with superior performance

- Achieves state-of-the-art results on ImageNet with fewer FLOPs than baseline models

- The framework allows flexible training across different devices without requiring specialized hardware
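To make the contrast between top-k routing and "racing" concrete, here is a minimal sketch in plain Python. It assumes that racing means ranking every token-expert score in one shared pool and keeping the global top N·k pairs, instead of keeping the top k experts separately for each token. The function names (`top_k_per_token`, `expert_race`, `experts_per_token`) and the toy score matrix are hypothetical, and the paper's actual gating, normalization, and load-balancing details are not covered by this summary.

```python
from typing import List, Tuple

# scores[token][expert] = router affinity of a token for an expert (toy values)
Scores = List[List[float]]
Pair = Tuple[int, int]  # (token index, expert index)


def top_k_per_token(scores: Scores, k: int) -> List[Pair]:
    """Traditional token-choice routing: every token keeps exactly its own k best experts."""
    pairs: List[Pair] = []
    for t, row in enumerate(scores):
        best = sorted(range(len(row)), key=lambda e: row[e], reverse=True)[:k]
        pairs.extend((t, e) for e in best)
    return pairs


def expert_race(scores: Scores, k: int) -> List[Pair]:
    """Hypothetical 'race' routing (an assumption, not the paper's code):
    all token-expert pairs compete in a single pool and the global top
    num_tokens * k pairs win, so the total compute budget matches top-k routing."""
    budget = len(scores) * k
    pool = [(scores[t][e], t, e) for t in range(len(scores)) for e in range(len(scores[t]))]
    pool.sort(key=lambda item: item[0], reverse=True)
    return [(t, e) for _, t, e in pool[:budget]]


def experts_per_token(pairs: List[Pair], num_tokens: int) -> List[int]:
    """Count how many experts each token was assigned."""
    counts = [0] * num_tokens
    for t, _ in pairs:
        counts[t] += 1
    return counts


if __name__ == "__main__":
    scores = [
        [0.9, 0.8, 0.7, 0.6],   # a "hard" token with high affinity for every expert
        [0.1, 0.2, 0.05, 0.0],  # an "easy" token
        [0.5, 0.3, 0.4, 0.1],
    ]
    print(experts_per_token(top_k_per_token(scores, k=2), 3))  # [2, 2, 2]
    print(experts_per_token(expert_race(scores, k=2), 3))      # [4, 0, 2]
```

Both strategies select the same number of token-expert pairs (6 here), so the compute budget is unchanged; only the allocation differs. Under racing, the high-affinity token receives four experts and the easy token receives none (in a transformer MoE layer, such a token would typically still pass through the residual connection).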

Plain English Explanation

When AI researchers build large image generation models like diffusion transformers, they face a challenge: bigger models mean better images, but also require more computing power. This...