Hello, my name is Dmitriy Karlovskiy and I have... post-traumatic stress disorder after generating sourcemaps. And today, with your help, we will treat this by immersing ourselves as deeply as possible in traumatic events.

This is a text transcript of my talk at HolyJS'21. You can watch the video recording, read it as an article, or open it in the presentation interface.

How did I get to this point?

First the medical history:

I once developed a simple Tree format to represent abstract syntax trees in the most visual form. Based on this format, I have already implemented several languages. One of them - the view.tree language - is intended for declarative description of components and their composition with each other. And it is in this language that all the standard visual components of the $mol framework are described. This allows you to write short and descriptive code that does a lot of useful things.

Why DSL? Boilerplate!

Now you see the completed application on $mol:

$my_app $my_page

title @ \Are you ready for SPAM?

body /

<= Agree $my_checkbox

checked?val <=> agree?val true

It consists of a panel with a checkbox inside. And together they are connected by two-way communication according to the given properties. These 5 lines of code even have localization support. The equivalent JavaScript code takes up 5 times more space:

class $my_app extends $my_page {

title() {

return this.$.$my_text( '$my_app_title' )

}

body() {

return [this.Agree()]

}

Agree() {

const obj = new this.$.$my_checkbox()

obj.checked = val => this.agree( val )

return obj

}

agree( val = true ) {

return val

}

}

$my_mem( $my_app.prototype, "agree" )

$my_mem( $my_app.prototype, "Agree" )

This code, although in a more familiar language, is much more difficult to understand. In addition, it completely lost the hierarchy in order to achieve the same level of flexibility. The good thing about a flat class is that you can inherit from it and override any aspect of the component's behavior.

Thus, one of the main reasons for using DSL is the ability to write simple and concise code that is easy to learn, hard to mess up, and easy to maintain.

Why DSL? Custom Scripts!

Another reason for implementing DSLs is the need to let users extend your application logic themselves using scripts. For example, let's take a simple task list automation script written by an ordinary user:

@assignee = $me

@component = \frontend

@estimate ?= 1D

@deadline = $prev.@deadline + @estimate

Here he says: put me in charge of all tasks; indicate that they are all related to the frontend; if the estimate is not set, then write 1 day; and build their deadlines one by one, taking into account the resulting estimate.

JS in a sandbox? Is it legal?!7

And here you may ask: why not just give the user JS in their hands? And then I suddenly agree with you. I even have a sandbox for safely executing custom JS. And the online sandbox for the sandbox:

You can try to get out of it. My favorite example: Function is not a function - in the very spirit of JS.

JS in a sandbox? No, it's not for average minds..

However, for the average user, JS is too complicated.

It would be much easier for him to learn some simple language focused on his business area, rather than a general-purpose language like JS.

Why DSL? Different Targets!

Another reason to create your own DSL is the ability to write code once and execute it in a variety of runtimes:

- JS

- WASM

- GPU

- JVM

- CIL

Why different targets? One model to rule them all!

As an illustration, I will give an example from one startup that I developed. For half a year of development, we have done quite a lot. And all thanks to the fact that we had a universal isomorphic API, which was configured by a simple DSL, which described what entities we have, what attributes they have, what types they have, how they are related to other entities, what indexes they have, and all that. Just a few dozen entities and under a hundred connections. A simple example is the task model..

task

title String

estimate Duration

From this declarative description, which occupies several kilobytes, code is already generated that works both on the server and on the client, and, of course, the database schema is also automatically updated.

class Task extends Model {

title() {

return this.json().title

}

estimate() {

return new Duration( this.json().estimate )

}

}

$my_mem( Task.prototype, "estimate" )

CREATE CLASS Task extends Model;

CREATE PROPERTY title string;

CREATE PROPERTY estimate string;

Thus, development (and especially refactoring) is significantly accelerated. It is enough to change the line in the config, and after a few seconds we can already pull the new entity on the client.

Why DSL? Fatal flaw!

And, of course, what programmer doesn't love reinventing the wheel?

Why all this? Transpilation and checks!

So we have a lot of different useful tools:

- Babel and other transpilers.

- Uglify and other minifiers.

- TypeScript, AssemblyScript and other programming languages.

- TypeScript, FlowJS, Hegel and other typecheckers.

- SCSS, Less, Stylus, PostCSS and other CSS generators.

- SVGO, CSSO and other optimizers.

- JSX, Pug, Handlebars and other templaters.

- MD, TeX and other markup languages.

- ESLint and other linters.

- Prettier and other formatters.

Developing them is not an easy task. Even writing a plugin for any of them takes serious sweat. So let's think about how all this could be simplified. But first, let's look at the problems that lie in wait for us on the way..

So what's the problem? This is not what I wrote!

Let's say a user has written such a simple markdown template ..

Hello, **World**!

And we generated sprawling code that builds the DOM through JS..

function make_dom( parent ) {

{

const child = document.createTextNode( "Hello, " )

parent.appendChild( child )

}

{

const child = document.createElement( "strong" )

void ( parent => {

const child = document.createTextNode( "World" )

parent.appendChild( child )

} )( child )

parent.appendChild( child )

}

{

const child = document.createTextNode( "!" )

parent.appendChild( child )

}

}

If the user encounters it, for example, when debugging, it will take him a long time to understand what this spaghetti code is and what it does at all.

So what's the problem? Yes, the devil will break his leg!

It is quite sad when the code is not just bloated, but also minified with single-letter variable and function names..

Hello, **World**!

function make_dom(e){{const t=document.createTextNode("Hello, ");

e.appendChild(t)}{const t=document.createElement("strong");

(e=>{const t=document.createTextNode("World");e.appendChild(t)})(t),

e.appendChild(t)}{const t=document.createTextNode("!");e.appendChild(t)}}

How can sourcemaps help? Sources and Debugging!

But this is where sourcemaps come to the rescue. They let the programmer see the code that he wrote instead of the generated code.

Moreover, debugging tools will work with sourcemaps: it will be possible to execute it step by step, set breakpoints inside the line, and so on. Almost native.

How can sourcemaps help? Stack traces!

In addition, sourcemaps are used to display stack traces.

The browser first shows links to the generated code, downloads the sourcemaps in the background, and then replaces them with links to the source code on the fly.

How can sourcemaps help? Variable values!

The third role of sourcemaps is displaying the values of variables.

In the source example, the name next is used, but there is no such variable in runtime, because in the generated code the variable is called pipe. However, when hovering over next, the browser does a reverse mapping and displays the value of the pipe variable.

Specification? No, have not heard..

It is intuitively expected that sourcemaps should have a detailed specification that you can just implement, and then you're all set. This thing is already 10 years old. However, things are not so rosy..

- V1 - Internal Closure Inspector format

- Proposal V2 2010 +JSON -20%

- Proposal V3 2013 - 50%

The spec has 3 versions. I did not find the first one, and the rest are just notes in Google Docs.

The whole history of sourcemaps is the story of how a programmer making developer tools heroically fought to reduce their size. In total, they decreased as a result by about 60%. This is not only a rather ridiculous figure in itself, but also the struggle for the size of sourcemaps is a rather pointless exercise, because they are downloaded only on the developer's machine, and then only when he is debugging.

That is, we get the classic misfortune of many programmers: optimizing not what is important, but what is interesting or easier to optimize. Never do that!

How to sort out the sourcemaps?

If you decide to contact the sourcemaps, then the following articles may be useful to you:

Next, I will tell you about the hidden rakes that are abundantly scattered here and there in the name of reducing the size..

How are sourcemaps connected?

Sourcemaps can be connected in two ways. It can be done via HTTP header..

SourceMap: <url>

But this is a rather stupid option, as it requires special configuration of the web server. Not every static hosting allows this at all.

It is preferable to use another way - placing a link at the end of the generated code..

//# sourceMappingURL=<url.js.map>

/*# sourceMappingURL=<url.css.map> */

As you can see, we have a separate syntax for JS and a separate syntax for CSS. At the same time, the second option is syntactically correct for JS, but no, it won’t work that way. Because of this, we cannot get by with one universal function for generating code with sourcemaps. We definitely need a separate function for generating JS code and a separate one for CSS. Here is such a complication out of the blue.
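
As a rough illustration of that split, here is a minimal sketch with two separate helpers. The function names and the sample URLs are hypothetical and not taken from any library.

```typescript
// Hypothetical helpers: names are illustrative only.

// JS accepts a line comment at the end of the generated file.
function withJsSourceMapLink(code: string, mapUrl: string): string {
  return code + "\n//# sourceMappingURL=" + mapUrl + "\n";
}

// CSS has no line comments, so the link has to be a block comment.
function withCssSourceMapLink(code: string, mapUrl: string): string {
  return code + "\n/*# sourceMappingURL=" + mapUrl + " */\n";
}

const jsWithLink = withJsSourceMapLink("console.log( 1 )", "app.js.map");
const cssWithLink = withCssSourceMapLink("body { margin: 0 }", "app.css.map");
```

The only difference is the comment syntax, but the generator still has to know which kind of file it is writing.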

How do sourcemaps work?

Let's see what they have inside..

{

"version": 3,

"sources": [ "url1", "url2", ... ],

"sourcesContent": [ "src1", "src2", ... ],

"names": [ "var1", "var2", ... ],

"mappings": "AAAA,ACCO;AAAA,ADJH,WFCIG;ADJI;..."

}

The sources field contains links to sources. These can be any strings, but usually they are relative links that the browser uses to download the sources. But I recommend that you always put the sources themselves in sourcesContent - this will save you from the situation where at some point the mappings are from one version and the sources from another, or the sources do not download at all. And then - happy debugging. Yes, the sourcemaps bloat in size, but this is a much more reliable solution, which is important when debugging already buggy code. So all that struggle for the size of sourcemaps was meaningless, since a good half of the sourcemap is source code.

The names field stores the runtime variable names. This crutch is no longer needed, since now browsers can do both forward and reverse mapping. That is, they themselves pull out the names of the variables from the generated code.

Well, in the mappings field, there are already, in fact, mappings for the generated code.
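
To make the recommendation about sourcesContent concrete, here is a minimal sketch of assembling such an object. The interface and function names are made up for illustration, and the mappings string is just a placeholder.

```typescript
// Hypothetical types and names, only for illustration.
interface SketchSourceMap {
  version: 3;
  sources: string[];
  sourcesContent: string[];
  names: string[];
  mappings: string;
}

interface SketchSourceFile {
  url: string;
  text: string;
}

function buildSourceMap(files: SketchSourceFile[], mappings: string): SketchSourceMap {
  return {
    version: 3,
    sources: files.map(file => file.url),
    // Embed the source texts so the debugger never has to download them separately.
    sourcesContent: files.map(file => file.text),
    // Left empty: variable names are not listed here.
    names: [],
    mappings: mappings,
  };
}

const sketchMap = buildSourceMap(
  [{ url: "hello.md", text: "Hello, **World**!" }],
  "AAAA", // placeholder mappings
);
const sketchMapJson = JSON.stringify(sketchMap);
```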

How to decode mappings?

Let's imagine mappings for clarity in several lines in order to understand their structure..

AAAA,ACCO;

AAAA,ADJH,WFCIG;

ADJI;

...

For each line of the generated file, several spans are specified, separated by commas. And at the end - a semicolon to separate lines. Here we have 3 semicolons, so there are at least 3 lines in the generated file.

It is important to emphasize that although a semicolon can be trailing, commas cannot be trailing. Well, more precisely, FF eats them and will not choke, but Chrome will simply ignore such sourcemaps without any error message.
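
The structure described above can be sketched in a few lines. The helper names are hypothetical; the check simply looks for empty spans, which is what a trailing comma produces.

```typescript
// Hypothetical helpers: split the mappings string into generated lines and spans.
function splitMappings(mappings: string): string[][] {
  return mappings
    .split(";")
    .map(line => (line === "" ? [] : line.split(",")));
}

// A trailing (or doubled) comma shows up as an empty span.
function hasEmptySpan(mappings: string): boolean {
  return splitMappings(mappings).some(line => line.some(span => span === ""));
}

const sketchLines = splitMappings("AAAA,ACCO;AAAA,ADJH,WFCIG;ADJI;");
const spansPerLine = sketchLines.map(line => line.length);
```

Here the trailing semicolon yields a fourth, empty line, which is harmless, while `hasEmptySpan( "AAAA,;" )` reports the kind of input that should be rejected before it reaches the browser.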

What kind of spans are these?

A span is a set of 1, 4 or 5 numbers. A span points to a specific place in a specific source.

The fifth number is the number of the variable name in the names list, which (as we have already found out) is not needed, so we simply do not specify this number.

So what's in these numbers?

The remaining 4 numbers are the column number in the corresponding line of the generated file, the source number, the source line number and the column number in this line.

Keep in mind that numbers start from 0. The last three numbers can be omitted, then we will only have a pointer to a column in the generated file, which is not mapped anywhere in the source. A little later I will tell you why this is necessary. In the meantime, let's figure out how numbers are encoded ..

And it's all in 5 bytes? Differential coding!

It would be naive to serialize spans like this (each row is one span)..

| Target column (TC) | Source index (SI) | Source row (SR) | Source column (SC) |
|---|---|---|---|
| 0 | 1 | 40 | 30 |
| 3 | 3 | 900 | 10 |
| 6 | 3 | 910 | 20 |

But in sourcemaps, differential encoding is used. That is, the field values are presented as is only for the first span. For the rest, it is not the absolute value that is stored, but the relative value - the difference between the current and previous span.

| Target column (TC) | Source index (SI) | Source row (SR) | Source column (SC) |
|---|---|---|---|
| 0 | 1 | 40 | 30 |
| +3 | +2 | +860 | -20 |
| +3 | 0 | +10 | +10 |

Please note that if you add 860 to 40 from the first span, you get 900 for the second span, and if you add 10 more, then 910 for the third span.

The same amount of information is stored in this representation, but the dimension of the numbers is somewhat reduced - they become closer to 0.
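
The differential step can be sketched like this, using the same three spans as in the tables. The function names are illustrative, and the sketch assumes all spans belong to a single generated line.

```typescript
// Hypothetical helpers: each span is [ target column, source index, source row, source column ].
function toDeltas(spans: number[][]): number[][] {
  let prev: number[] = [0, 0, 0, 0];
  return spans.map(span => {
    // Store the difference from the previous span instead of the absolute value.
    const delta = span.map((value, i) => value - (prev[i] ?? 0));
    prev = span;
    return delta;
  });
}

function fromDeltas(deltas: number[][]): number[][] {
  let prev: number[] = [0, 0, 0, 0];
  return deltas.map(delta => {
    // Accumulate the differences back into absolute values.
    prev = delta.map((value, i) => value + (prev[i] ?? 0));
    return prev;
  });
}

const absoluteSpans = [
  [0, 1, 40, 30],
  [3, 3, 900, 10],
  [6, 3, 910, 20],
];
const deltaSpans = toDeltas(absoluteSpans);
const restoredSpans = fromDeltas(deltaSpans);
```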

And it's all in 5 bytes? VLQ encoding!

Next, VLQ (variable-length quantity) encoding is applied. The closer a number is to 0, the fewer bytes it needs to represent..

| Values | Bit Count | Bytes Count |
|---|---|---|
| -15 .. +15 | 5 | 1 |
| -511 .. +511 | 10 | 2 |
| -16383 .. +16383 | 15 | 3 |

As you can see, every 5 significant bits of information require 1 additional byte. This is not the most efficient way to encode. For example, WebAssembly uses LEB128, where a byte is already spent for every 7 significant bits. But this is a binary format. And here we have mappings for some reason made in JSON format, which is text.
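
For reference, here is a minimal sketch of Base64 VLQ as it is used in the mappings field: each Base64 digit carries 5 data bits plus a continuation bit, and the sign lives in the lowest data bit. The function names are hypothetical, and the sketch only handles values that fit into 32-bit integers.

```typescript
const BASE64_DIGITS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

function vlqEncode(value: number): string {
  // The sign goes into the lowest bit, the magnitude is shifted left by one.
  let rest = value < 0 ? ((-value) << 1) | 1 : value << 1;
  let out = "";
  do {
    let digit = rest & 31; // take 5 data bits
    rest >>>= 5;
    if (rest > 0) digit |= 32; // continuation bit: more digits follow
    out += BASE64_DIGITS.charAt(digit);
  } while (rest > 0);
  return out;
}

function vlqDecode(text: string): number[] {
  const values: number[] = [];
  let acc = 0;
  let shift = 0;
  for (let i = 0; i < text.length; i++) {
    const digit = BASE64_DIGITS.indexOf(text.charAt(i));
    if (digit < 0) throw new Error("Not a Base64 digit: " + text.charAt(i));
    acc |= (digit & 31) << shift;
    if (digit & 32) {
      shift += 5; // continuation bit set: keep accumulating
    } else {
      values.push(acc & 1 ? -(acc >>> 1) : acc >>> 1);
      acc = 0;
      shift = 0;
    }
  }
  return values;
}

const decodedSpan = vlqDecode("ACCO"); // one span from the sample mappings
```

With this sketch, `vlqEncode( 15 )` takes one character and `vlqEncode( 16 )` already takes two, which matches the first two rows of the table.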

In general, the format was overcomplicated, but the size was not really won. Well, okay, and that's only the beginning..

How good are the sourcemaps! If there was a source..

Sourcemaps do not map a range of bytes in one file to a range in another, as a naive programmer might think. They only map points. And everything between a mapped point and the next one in one file is treated as mapped to everything between the corresponding point and the next one in the other file.

And this, of course, leads to various problems. For example, if we add some content that is not in the source code, and, accordingly, we don’t map it anywhere, then it will simply stick to the previous pointer..

In the example, we have added Bar. And if we do not prescribe any mapping for it (and there is nowhere to map it), then it will stick to Foo. It turns out that Foo is mapped to FooBar, and, for example, displaying the values of variables on hover stops working.

To prevent this from happening, you need to map Bar to nowhere. This is exactly what the span variant with a single number is for. In this case, it will be the number 3, since Bar starts at column 3 (counting from 0). Thus, we say that from this pointer until the next one (or the end of the line) the content is not mapped anywhere, and Foo is mapped only to Foo.
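
A minimal sketch of this rule for one generated line: every piece of output either carries a source position or gets a single-number span. The types and names are hypothetical, and the spans are kept as absolute numbers, before any differential or VLQ encoding.

```typescript
// Hypothetical types: a piece of generated text, optionally tied to a place in a source.
interface SourcePoint {
  index: number; // source number
  row: number;
  col: number;
}

interface GeneratedPiece {
  text: string;
  source?: SourcePoint;
}

function spansForLine(pieces: GeneratedPiece[]): number[][] {
  const spans: number[][] = [];
  let column = 0;
  for (const piece of pieces) {
    spans.push(
      piece.source
        ? [column, piece.source.index, piece.source.row, piece.source.col]
        : [column], // a single number: "from here on, mapped to nowhere"
    );
    column += piece.text.length;
  }
  return spans;
}

const fooBarSpans = spansForLine([
  { text: "Foo", source: { index: 0, row: 0, col: 0 } },
  { text: "Bar" }, // added by the generator, absent from the source
]);
```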

How good are the sourcemaps! There would be a result..

There is also the opposite situation, when there is content in the source, but it does not make it into the result. And here too there can be a problem with sticking..

It turns out that you need to map the cut content somewhere. But where? The only place is somewhere at the end of the resulting file. This is quite a working solution. And everything would be fine, but if our pipeline does not end there, and processing continues, then there may be problems.

For example, if we next glue several generated files together, then we need to merge their mappings. They are arranged in such a way that they can be simply concatenated. However, the end of one file becomes the beginning of the next. And everything will fall apart.

And if you need to glue the sourcemaps?

It would be possible to do tricky remapping when concatenating, but here another sourcemap format comes to our aid. What a twist! There are actually two of them. Composite sourcemaps look like this..

{

version: 3,

sections: [

{

offset: {

line: 0,

column: 0

},

url: "url_for_part1.map"

},

{

offset: {

line: 100,

column: 10

},

map: { ... }

}

],

}

Here the generated file is divided into sections. For each section, the initial position is set and either a link to a regular sourcemap, or the content of the sourcemap itself for this section.

And pay attention to the fact that the beginning of the section is set in the "line-column" format, which is extremely inconvenient. Indeed, to find where a section starts, you have to count all the newlines in the previous sections. Such jokes would look especially fun when generating binary files. Fortunately, sourcemaps by design do not support them.
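
That newline counting can be sketched as follows, assuming the generated file is simply the concatenation of text chunks. The names are hypothetical.

```typescript
// Hypothetical helper: computes the "line-column" offset of every chunk
// in the concatenation of all chunks.
interface SectionOffset {
  line: number;
  column: number;
}

function sectionOffsets(chunks: string[]): SectionOffset[] {
  const offsets: SectionOffset[] = [];
  let line = 0;
  let column = 0;
  for (const chunk of chunks) {
    offsets.push({ line, column });
    const parts = chunk.split("\n");
    const last = parts[parts.length - 1] ?? "";
    line += parts.length - 1;
    // After a newline the column restarts, otherwise it keeps growing.
    column = parts.length > 1 ? last.length : column + last.length;
  }
  return offsets;
}

const sketchOffsets = sectionOffsets(["a\nbc", "d\n", "e"]);
```

Every chunk has to be scanned in full just to learn where the next one starts, which is exactly the inconvenience described above.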

What about macros? Map on their insides..

Another extreme case is macros in one form or another. That is, code generation at the application level. Take for example the log macro, which takes some expression and wraps it in conditional logging...

template log( value ) {

if( logLevel > Info ) { // A

console.log( value ) // B

}

}

log!stat1()

log!stat2()

log!stat3()

Thus, we do not evaluate a potentially heavy expression if logging is turned off, but at the same time we do not write a bunch of the same type of code.

Attention, the question is: where to map the code generated by the macro?

if( logLevel > Info ) { // A

console.log( stat1() ) // B

}

if( logLevel > Info ) { // A

console.log( stat2() ) // B

}

if( logLevel > Info ) { // A

console.log( stat3() ) // B

}

If we map it to the contents of the macro, then it turns out that, when executing the code step by step, we will walk inside the macro: ABABAB. And we will not stop at the point of its application. That is, the developer will not be able to see where he got into the macro from and what was passed to him.

What about macros? Let's look at their use..

Then maybe it's better to map all the generated code to the place where the macro is applied?

template log( value ) {

if( logLevel > Info ) {

console.log( value )

}

}

log!stat1() // 1

log!stat2() // 2

log!stat3() // 3

if( logLevel > Info ) { // 1

console.log( stat1() ) // 1

}

if( logLevel > Info ) { // 2

console.log( stat2() ) // 2

}

if( logLevel > Info ) { // 3

console.log( stat3() ) // 3

}

But here we get a different problem: we stop at line 1, then again at line 1, then again.. This can go on for a tediously long time, depending on how many instructions there are inside the macro. In short, now the debugger will stop several times at the same place without entering the macro code. This is already inconvenient, plus debugging the macros themselves in this way is simply not realistic.

What about macros? Map both to the application and to the insides!

With macros, it is better to combine both approaches. First, add an instruction that does nothing useful, but maps to the place where the macro is applied, and the code generated by the macro is already mapped to the macro code..

template log( value ) {

if( logLevel > Info ) { // A

console.log( value ) // B

}

}

log!stat1() // 1

log!stat2() // 2

log!stat3() // 3

void 0 // 1

if( logLevel > Info ) { // A

console.log( stat1() ) // B

}

void 0 // 2

if( logLevel > Info ) { // A

console.log( stat2() ) // B

}

void 0 // 3

if( logLevel > Info ) { // A

console.log( stat3() ) // B

}

Thus, when debugging step by step, we will first stop at the place where the macro is applied, then we will go into it and go through its code, then we will exit and move on. Almost like with native functions, only without the ability to jump over them, because the runtime knows nothing about our macros.

It would be nice to add support for macros in version 4 of sourcemaps. Oh, dreams, dreams..

How good are the sourcemaps! If it wasn't for the variable names..

Well, regarding variables, everything is also pretty dull here. If you think you can select an arbitrary expression in the source and expect the browser to look at what it maps to and try to evaluate it, then no such luck!

- Only variable names, no expressions.

- Only an exact match.

How good are the sourcemaps! If not for evil..

And one more devil in the implementation details. If you are generating code not on the server, but on the client, then in order to execute it, you will need some form of invocation of the interpreter. If you use eval for this, the mappings will be fine, but it will be slow. It is much faster to create a function once and then execute it many times..

new Function( '', 'debugger' )

But the browser under the hood does something like:

eval(`

(function anonymous(

) {

debugger

})

`)

That is, it adds two lines above your code, which is why all the mappings point to the wrong place. To overcome this, you need to shift the sourcemap down, for example, by adding a couple of semicolons to the beginning of the mappings. Then new Function will map well. But now eval will be off.

That is, when you generate mappings, you must clearly understand how you will run this code, otherwise the mappings will point to the wrong place.
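
The shift itself is trivial, here is a sketch. The helper name and the constant are hypothetical, and the two header lines are the ones shown in the wrapper above.

```typescript
// Hypothetical helper: each ";" closes one generated line, so N leading
// semicolons say "the first N generated lines have no spans at all".
function shiftMappingsDown(mappings: string, extraLines: number): string {
  return new Array(extraLines + 1).join(";") + mappings;
}

// "function anonymous(" and ") {" are placed above the body by new Function.
const NEW_FUNCTION_HEADER_LINES = 2;

const mappingsForEval = "AAAA;AACA"; // placeholder mappings for the bare code
const mappingsForNewFunction = shiftMappingsDown(mappingsForEval, NEW_FUNCTION_HEADER_LINES);
```

The same generated code therefore needs two different mappings strings, depending on whether it goes through eval or through new Function.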

How good are the sourcemaps! But something went wrong..

Well, the main trouble with sourcemaps: if you mess up somewhere, then in most cases the browser will not tell you anything, but simply ignore it. And then you just have to guess.

- Tarot cards

- Natal charts

- Google Maps

And even Google is of little help here, because there are mostly answers to questions in the spirit of "how to set up WebPack?". And there is only one reasonable setting option. Why users were given so many grenades is not clear.

Let's fantasize? Sourcemaps of a healthy person!

Okay, with sourcemaps everything is rather sad right now. Let's try to design them from scratch. I would make a binary format for this, where not pointers but specific ranges of bytes would be mapped. We will allocate a constant 8 bytes for a span, that is, a machine word. Working with it is simple, fast and, most importantly, it is enough for our needs. The span will consist of 3 numbers: the offset of the range in the cumulative source (the concatenation of all sources), the length of this range, and the length of the corresponding range in the result.

| Field | Bytes Count |
|---|---|
| source_offset | 3 |
| source_length | 3 |
| target_length | 2 |

This information is necessary and sufficient to uniquely map the source to the result. Even if the result is a binary, not text. And even if we need to remap something somewhere, this is done by a simple and efficient function.
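
Purely as a thought experiment, such a format could be sketched like this. Everything here is an assumption: the names, the little-endian byte order, and the idea that spans cover the result back to back.

```typescript
// Hypothetical 8-byte span: 3 bytes source offset, 3 bytes source length, 2 bytes target length.
interface ByteSpan {
  sourceOffset: number;
  sourceLength: number;
  targetLength: number;
}

const SPAN_SIZE = 8;

function writeUint(bytes: Uint8Array, at: number, size: number, value: number): void {
  if (value < 0 || value >= Math.pow(256, size)) {
    throw new RangeError("Value " + value + " does not fit into " + size + " bytes");
  }
  // Little-endian byte order (an assumption of this sketch).
  for (let i = 0; i < size; i++) bytes[at + i] = Math.floor(value / Math.pow(256, i)) % 256;
}

function readUint(bytes: Uint8Array, at: number, size: number): number {
  let value = 0;
  for (let i = size - 1; i >= 0; i--) value = value * 256 + (bytes[at + i] ?? 0);
  return value;
}

function packSpans(spans: ByteSpan[]): Uint8Array {
  const bytes = new Uint8Array(spans.length * SPAN_SIZE);
  spans.forEach((span, i) => {
    writeUint(bytes, i * SPAN_SIZE, 3, span.sourceOffset);
    writeUint(bytes, i * SPAN_SIZE + 3, 3, span.sourceLength);
    writeUint(bytes, i * SPAN_SIZE + 6, 2, span.targetLength);
  });
  return bytes;
}

function unpackSpans(bytes: Uint8Array): ByteSpan[] {
  const spans: ByteSpan[] = [];
  for (let at = 0; at + SPAN_SIZE <= bytes.length; at += SPAN_SIZE) {
    spans.push({
      sourceOffset: readUint(bytes, at, 3),
      sourceLength: readUint(bytes, at + 3, 3),
      targetLength: readUint(bytes, at + 6, 2),
    });
  }
  return spans;
}

// Finds the span that covers a given byte offset in the result.
function findSpanAt(spans: ByteSpan[], targetOffset: number): ByteSpan | undefined {
  let targetStart = 0;
  for (const span of spans) {
    if (targetOffset < targetStart + span.targetLength) return span;
    targetStart += span.targetLength;
  }
  return undefined;
}

const sampleSpans: ByteSpan[] = [
  { sourceOffset: 0, sourceLength: 5, targetLength: 7 },
  { sourceOffset: 5, sourceLength: 70000, targetLength: 300 },
];
const packedSpans = packSpans(sampleSpans);
```

With these field sizes the cumulative source is limited to 16 MiB and a single target range to 64 KiB, so a real format would have to decide what to do with larger inputs.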

But, unfortunately, we have to work with what we have now.

Is it worth messing with sourcemaps?

I hope I managed to show that sourcemaps are yet another swamp that is better not to get into. In the process of transformation, they must be carefully monitored so that they do not get lost or shifted. Error messages must point to the source, and in the case of macros, you need to display a trace according to the source. Total:

- Difficult in itself.

- Carry through transformations.

- Carry in error messages.

- Plus trace on templates.

I wouldn't want to mess with them, but I had to. But let's think about how to avoid them.

Difficult? Let's take Babel!

Take a popular tool like Babel. Surely all the problems there have already been resolved and you can sit down and go!

Let's take the first available plugin ..

import { declare } from "@babel/helper-plugin-utils";

import type NodePath from "@babel/traverse";

export default declare((api, options) => {

const { spec } = options;

return {

name: "transform-arrow-functions",

visitor: {

ArrowFunctionExpression(

path: NodePath<BabelNodeArrowFunctionExpression>,

) {

if (!path.isArrowFunctionExpression()) return

path.arrowFunctionToExpression({ // Babel Helper

allowInsertArrow: false,

specCompliant: !!spec,

})

},

},

}

})

It transforms an arrow function into a regular one. The task seems to be simple, and there is not so much code! However, if you look closely, all this wall of code does is call the standard Babel helper and that's it. A bit too much code for such a simple task!

Babel, why so many boilerplates?

Okay, let's take a look at this helper..

import "@babel/types";

import nameFunction from "@babel/helper-function-name";

// ...

this.replaceWith(

callExpression( // mapped to this

memberExpression( // mapped to this

nameFunction(this, true) || this.node, // mapped to this

identifier("bind"), // mapped to this

),

[checkBinding ? identifier(checkBinding.name) : thisExpression()],

),

);

Yup, new AST nodes are generated here using global factory functions. But the trouble is that you have no control over where they are mapped. And a little earlier, I showed how important it is to precisely control what maps where. This information is not immediately available, so Babel has no choice but to map new nodes to the only node to which the plugin has matched (this), which does not always give an adequate result.

Shall we debug? AST of a smoker..

The next problem is debugging transformations. Here it is important for us to be able to see which AST was before the transformation, and which was after. Let's take a simple JS code:

Just look at what a typical abstract syntax tree (AST) looks like for him..

{

"type": "Program",

"sourceType": "script",

"body": [

{

"type": "VariableDeclaration",

"kind": "const",

"declarations": [

{

"type": "VariableDeclarator",

"id": {

"type": "Identifier",

"name": "foo"

},

"init": {

"type": "ObjectExpression",

"properties": [

...

And this is only half of it. And this is not even the Babel AST, but some no-name one - I just took the most compact of those available on ASTExplorer. Actually, that's why this tool appeared in the first place, because without it, looking at these JSON dumps is pain and suffering.

Shall we debug? AST of a healthy person!

And here the Tree format comes to our aid, which I once developed specifically for the purpose of visual representation of AST ..

{;}

const

foo

{,}

:

\bar

123

As you can see, the js.tree representation is already much cleaner. And it does not require any ASTExplorer. Although I did make a tree support patch for it, which has been ignored by the maintainer for the second year. It's open source, baby!

And how to work with it? Everything you need and nothing you don't!

In my Tree API implementation ($mol_tree2) each node has only 4 properties: type name, raw value, list of children nodes and span (pointer to the range in the source).

interface $mol_tree2 {

readonly type: string

readonly value: string

readonly kids: $mol_tree2[]

readonly span: $mol_span

}

Each span contains a link to the source, the contents of the source itself, the row and column numbers of the beginning of the range, and the length of this range..

interface $mol_span {

readonly uri: string

readonly source: string

readonly row: number

readonly col: number

readonly length: number

}

As you can see, there is everything you need to represent and process any language, and nothing unnecessary.

And how to work with it? Local factories!

New nodes are generated not by global factory functions, but, on the contrary, by local factory methods.

interface $mol_tree2 {

struct( type, kids ): $mol_tree2

data( value, kids ): $mol_tree2

list( kids ): $mol_tree2

clone( kids ): $mol_tree2

}

Each such factory creates a new node, but inherits the span from the existing node.
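
The idea can be sketched with a tiny stand-in class. This is not the real $mol_tree2 implementation: the class name, the simplified span and the sample values are assumptions made for illustration.

```typescript
// Simplified stand-in for a span (the real one also keeps the source text).
interface SketchSpan {
  uri: string;
  row: number;
  col: number;
  length: number;
}

// Hypothetical minimal node with local factories.
class SketchTree {
  readonly type: string;
  readonly value: string;
  readonly kids: SketchTree[];
  readonly span: SketchSpan;

  constructor(type: string, value: string, kids: SketchTree[], span: SketchSpan) {
    this.type = type;
    this.value = value;
    this.kids = kids;
    this.span = span;
  }

  // Every factory builds a new node, but reuses the span of the node it is called on.
  struct(type: string, kids: SketchTree[] = []): SketchTree {
    return new SketchTree(type, "", kids, this.span);
  }

  data(value: string, kids: SketchTree[] = []): SketchTree {
    return new SketchTree("", value, kids, this.span);
  }

  list(kids: SketchTree[]): SketchTree {
    return new SketchTree("", "", kids, this.span);
  }

  clone(kids: SketchTree[]): SketchTree {
    return new SketchTree(this.type, this.value, kids, this.span);
  }
}

const clickSpan: SketchSpan = { uri: "script.tree", row: 1, col: 1, length: 5 };
const clickNode = new SketchTree("click", "", [], clickSpan);

// Both generated nodes still point at the place where "click" was written.
const nameNode = clickNode.data("click");
const callNode = clickNode.struct("()", [nameNode]);
```

Because the span comes from the receiver, the author of a transformation chooses the mapping simply by choosing which node to call the factory on.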

Why does this work?

In this way, we can precisely control which part of the source each node will map to, even after applying many AST transformations..

In the diagram, you can see how we generated 1 from 2 files through 3 transformations, which cut something, added something, and mixed something. But the binding to the source codes has not been lost anywhere.

And how to work with it? Generalized transformations!

There are 4 generic methods for writing transformations.

interface $mol_tree2 {

select( ... path ): $mol_tree2

filter( ... path, value ): $mol_tree2

insert( ... path, value ): $mol_tree2

hack( belt, context ): $mol_tree2[]

}

Each of them creates a new AST without changing the existing one, which is very convenient for debugging. They allow deep fetches, deep fetch filtering, deep inserts and hacks.

What kind of hacks are these? template example..

Hacks are the most powerful thing: they allow you to walk through the tree, replacing nodes of different types with the result of executing different handlers. The easiest way to demonstrate their work is to implement a trivial templating engine for AST as an example. Let's say we have a config template for our server..

rest-api

login @username

password @password

db-root

user @username

secret @password

After parsing it into AST, we can hack our config in just a few lines of code ..

config.list(

config.hack({

'@username': n => [ n.data( 'jin' ) ],

'@password': p => [ p.data( 'password' ) ],

})

)

As a result, it turns out that all placeholders are replaced with the values we need.

rest-api

login \jin

password \password

db-root

user \jin

secret \password
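
To show roughly what happens under the hood, here is a simplified sketch of such a walk. It is not the real $mol_tree2 code: plain objects stand in for nodes, the span is reduced to a string, and all names are hypothetical.

```typescript
// Hypothetical simplified node: the span is just a "file#row:col" string here.
interface HackNode {
  type: string;
  value: string;
  kids: HackNode[];
  span: string;
}

type HackHandler = (node: HackNode, belt: HackBelt) => HackNode[];

interface HackBelt {
  [type: string]: HackHandler | undefined;
}

function hackNode(node: HackNode, belt: HackBelt): HackNode[] {
  const handler = belt[node.type];
  if (handler) return handler(node, belt);
  // No handler for this type: copy the node and go down into its kids.
  return [{ type: node.type, value: node.value, kids: hackKids(node.kids, belt), span: node.span }];
}

function hackKids(kids: HackNode[], belt: HackBelt): HackNode[] {
  return kids.reduce<HackNode[]>((out, kid) => out.concat(hackNode(kid, belt)), []);
}

function structNode(type: string, span: string, kids: HackNode[] = []): HackNode {
  return { type, value: "", kids, span };
}

// A data node that inherits the span of the node it replaces.
function dataFrom(replaced: HackNode, value: string): HackNode {
  return { type: "", value, kids: [], span: replaced.span };
}

const configTree = structNode("rest-api", "config.tree#1:1", [
  structNode("login", "config.tree#2:2", [structNode("@username", "config.tree#2:8")]),
  structNode("password", "config.tree#3:2", [structNode("@password", "config.tree#3:11")]),
]);

const hackedConfig = hackNode(configTree, {
  "@username": node => [dataFrom(node, "jin")],
  "@password": node => [dataFrom(node, "password")],
});
```

The original tree stays untouched, and each substituted value keeps the span of the placeholder it replaced.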

What if something more complicated? Automation script..

Let's consider a more complicated example - an automation script.

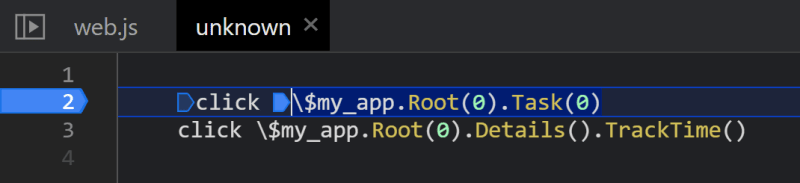

click \$my_app.Root(0).Task(0)

click \$my_app.Root(0).Details().TrackTime()

Here we have the click command. It is passed the ID of the element to be clicked on.

Well, let's hack this script so that the output is a JavaScript AST..

script.hack({

click: ( click, belt )=> {

const id = click.kids[0]

return [

click.struct( '()', [

id.struct( 'document' ),

id.struct( '[]', [

id.data( 'getElementById' ),

] ),

id.struct( '(,)', [ id ] ),

click.struct( '[]', [

click.data( 'click' ),

] ),

click.struct( '(,)' ),

] ),

]

},

})

Note that some of the nodes are created from the command name (click), and some of the nodes are created from the element identifier (id). That is, the debugger will stop here and there. And the error stack traces will point to the correct places in the source code.

Is it even easier? jack.tree - macro language for transformations!

But you can dive even deeper and make a DSL to handle the DSL. For example, the transformation of an automation script can be described as follows in jack.tree language..

hack script {;} from

hack click()

document

[]\getElementById

(,) data from

[]\click

(,)

script jack

click \$my_app.Root(0).Task(0)

click \$my_app.Root(0).Details().TrackTime()

Each hack is a macro that matches a given node type and replaces it with something else. It's still a prototype, but it already does a lot of things.

And if different targets? Transform to JS, cutting out the localization..

Hacks allow you to do more than just literally translate one language into another. With their help, it is possible to extract information of interest to us from the code. For example, we have a script in some simple DSL that outputs something in English..

+js

print @begin \Hello, World!

when onunload print @ end \Bye, World!

And we can convert it to JS so that instead of the English texts, the localize function is called with the desired key, simply by wrapping it in the +js macro..

{

console.log(localize("begin") )

function onunload() {

console.log(localize("end") )

}

}

And if different targets? Isolate translations, ignoring logic..

But we can apply another macro to it: +loc..

+loc

print @begin \Hello, World!

when onunload print @ end \Bye, World!

And then, on the contrary, all logic will be ignored, and we will get JSON with all the keys and their corresponding texts..

{

"begin": "Hello, World!",

"end": "Bye, World!"

}
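The same "one source, two targets" idea can be sketched in plain JavaScript. The node shapes and the `toJS` and `toLoc` functions below are hypothetical and are not produced by the real tree parser; the sketch only illustrates that one transformation keeps the logic and swaps texts for keys, while the other ignores the logic and keeps only the texts.

```javascript
// Hypothetical mini-AST for the script above.
const script = [
	{ type: 'print', key: 'begin', text: 'Hello, World!' },
	{ type: 'when', event: 'onunload', kids: [
		{ type: 'print', key: 'end', text: 'Bye, World!' },
	] },
]

// Target 1: emit JS, replacing texts with localize(key) calls.
function toJS(nodes, indent = '\t') {
	return nodes.map(n => {
		if (n.type === 'print') {
			return `${indent}console.log(localize(${JSON.stringify(n.key)}))\n`
		}
		// "when" becomes a function declaration named after the event
		return `${indent}function ${n.event}() {\n`
			+ toJS(n.kids, indent + '\t')
			+ `${indent}}\n`
	}).join('')
}

// Target 2: ignore the logic, collect key -> text pairs.
function toLoc(nodes, out = {}) {
	for (const n of nodes) {
		if (n.type === 'print') out[n.key] = n.text
		else toLoc(n.kids, out)
	}
	return out
}

const js = `{\n${toJS(script)}}\n`
const loc = toLoc(script)
console.log(js)
console.log(JSON.stringify(loc, null, '\t'))
```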

What if there are different targets? We swap transformations like gloves

In jack.tree, these macros are described by relatively simple code:

hack+js

hack print()

console

[]\log

(,) from

hack@()

localize

(,) type from

hack when function

struct type from

(,)

{;} kids from

{;} from

hack+loc

hack print from

hack when kids from

hack@:

type from

kids from

{,} from

As you can see, other macros can be declared inside a macro. That is, the language can be easily extended by means of the language itself. Thus, it is possible to generate different code. You can take into account the context in which the nodes are located, and match only in this context. In short, the technique is very simple, but powerful and at the same time nimble, since we do not have to walk up and down the tree - we only go down it.

Something went wrong? Trace of transformations!

With great power comes great responsibility. If something goes wrong and an exception occurs while macros are stacked on macros on macros, it is extremely important to output a trace that helps you figure out who matched what, and where, on the way to the place of the error.

Here we see that an exception occurred at point (1), but a mistake was made by a person at point (2), to which we came from point (3).
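One possible way to collect such a trace is sketched below in plain JavaScript. This is an assumption about how it could be done, not a description of how jack.tree does it: the `transform` function, the string `span` field and the `trace` property on the error are all hypothetical names.

```javascript
// Each node carries a span (where it came from); the transformer keeps
// a path of "who matched what where" on the way down the tree.
function transform(input, belt, trace = []) {
	const handler = belt[input.type]
	const step = `${handler ? 'hack' : 'copy'} ${input.type} at ${input.span}`
	const path = [...trace, step]
	try {
		if (handler) return handler(input, kid => transform(kid, belt, path))
		return { ...input, kids: input.kids.map(kid => transform(kid, belt, path)) }
	} catch (error) {
		// Attach the trace only once, at the deepest failing point
		if (!error.trace) error.trace = path
		throw error
	}
}

const belt = {
	print: (input, next) => {
		if (input.kids.length === 0) throw new Error('print requires an argument')
		return { ...input, type: 'console.log', kids: input.kids.map(next) }
	},
}

const tree = {
	type: 'script', span: 'script.tree#1:1', kids: [
		{ type: 'when', span: 'script.tree#2:2', kids: [
			{ type: 'print', span: 'script.tree#2:16', kids: [] },
		] },
	],
}

let failure
try {
	transform(tree, belt)
} catch (error) {
	failure = error
}
console.log(failure.message)
console.log(failure.trace.join('\n'))
```

The last entry of the trace is the place where the exception was thrown, and the entries before it show the route by which the transformation got there.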

Well, why reinvent the wheel again?

And here you are most likely wondering: "Dima, why reinvent the wheel yet again? Enough already, we have plenty of wheels!" I would be happy to stop, but let's briefly compare it with the alternatives:

| | Babel | TypeScript | Tree |
|---|---|---|---|
| API complexity | ~300 | ∞ | ~10 |
| Abstraction from language | ❌ | ❌ | ✅ |
| API immutability | ❌ | ❌ | ✅ |
| Convenient serialization | ❌ | ❌ | ✅ |
| Self-sufficiency | ❌ | ✅ | ✅ |

Babel has about 300 functions, methods and properties. The TS API is prohibitively complex and has almost no documentation.

Both are tied to JS, which makes them hard to use for custom languages. Both have a mutable API with no concise AST serialization, which greatly complicates debugging.

Finally, Babel's AST is not self-sufficient: we cannot generate both the resulting script and the sourcemaps directly from it, and have to pass the source code along in a roundabout way. Error messages have the same problem. TS does better here, but goes to the other extreme: along with the banana, it hands you the monkey, the jungle, and even its own solar system in several guises.

Typical pipeline.. something is wrong here..

Let's take a look at what a typical front-end pipeline looks like..

- TS: parsed, transpiled, serialized.

- Webpack: parsed, tree-shaken, bundled, serialized.

- Terser: parsed, minified, serialized.

- ESLint: parsed, checked everything, serialized.

Something is wrong here. All these tools lack a common language for communication, that is, some representation of the AST that would be, on the one hand, as simple and abstract as possible and, on the other hand, expressive enough for everything each tool needs, without being tied to any one of them.

And, in my opinion, the Tree format is the best fit for this. It would be great to persuade these tools to switch to it in the future, but unfortunately I'm not enough of an influencer for that. So let's not get our hopes up too much, but let's dream a little.

What would a healthy person's pipeline look like?

- Parsed in AST.

- Everything was transformed and checked.

- Serialized to scripts/styles and sourcemaps.

Thus, the main work takes place at the AST level, without intermediate serializations. And even if we need to serialize the AST temporarily, for example to pass it to another process, a compact Tree can be serialized and parsed much faster than sprawling JSON.

How to keep the result and the sourcemap from drifting apart? text.tree!

OK, we have transformed the AST, and all that remains is to serialize it. Doing this separately for each language would be too difficult, because a language can have dozens or even hundreds of node types. Each of them needs not only to be serialized correctly, but also to get a correct span in the mapping.

To make this easier, Stefan and I developed text.tree, which has only 3 types of nodes: lines, indents, and raw text. A simple example:

line \{

indent

line

\foo

\:

\123

line \}

{

foo:123

}

//# sourceMappingURL=data:application/json,

%7B%22version%22%3A3%2C%22sources%22%3A%5B%22

unknown%22%5D%2C%22sourcesContent%22%3A%5B%22

line%20%5C%5C%7B%5Cnindent%5Cn%5Ctline%5Cn%5C

t%5Ct%5C%5Cfoo%5Cn%5Ct%5Ct%5C%5C%3A%20%5Cn%5C

t%5Ct%5C%5C123%5Cnline%20%5C%5C%7D%5Cn%22%5D

%2C%22mappings%22%3A%22%3B%3BAAAA%2CAAAK%3BAACL

%2CAACC%2CCACC%2CGACA%2CEACA%3BAACF%2CAAAK%3B%22%7D

Any other language can be transformed into text.tree relatively easily, without any dancing around spans. Further serialization, together with generating the sourcemaps, is then just a matter of calling standard, already written functions.
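To illustrate why three node types are enough, here is a rough JavaScript sketch of such a serializer. It is not the real text.tree code: the node shapes, the `serialize` function and the decoded form of the mappings are assumptions, and the sketch assumes that raw chunks contain no line breaks. Each raw chunk remembers its position in the source, so the serializer can record a mapping at the moment it writes the chunk.

```javascript
// Hypothetical node shapes:
//   { type: 'line', kids: [...] }    kids are raw chunks, the line ends with "\n"
//   { type: 'indent', kids: [...] }  kids are lines or nested indents
//   { type: 'raw', text, span }      text plus its position in the source
function serialize(nodes, prefix = '', out = { text: '', line: 1, col: 1, mappings: [] }) {
	for (const node of nodes) {
		if (node.type === 'indent') {
			serialize(node.kids, prefix + '\t', out)
			continue
		}
		// A line: emit the indentation, then each raw chunk with its mapping
		out.text += prefix
		out.col += prefix.length
		for (const raw of node.kids) {
			out.mappings.push({
				generated: { line: out.line, col: out.col },
				source: raw.span,
			})
			out.text += raw.text
			out.col += raw.text.length
		}
		out.text += '\n'
		out.line += 1
		out.col = 1
	}
	return out
}

const raw = (text, line, col) => ({ type: 'raw', text, span: { line, col } })
const tree = [
	{ type: 'line', kids: [raw('{', 1, 6)] },
	{ type: 'indent', kids: [
		{ type: 'line', kids: [raw('foo', 4, 3), raw(':', 5, 3), raw('123', 6, 3)] },
	] },
	{ type: 'line', kids: [raw('}', 7, 6)] },
]

const { text, mappings } = serialize(tree)
console.log(text)
console.log(mappings)
```

A real implementation would additionally encode these mappings into the VLQ string of the sourcemap, but that step no longer depends on the language being serialized.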

What if you need WebAssembly? wasm.tree -> bin.tree

Well, in addition to text serialization, we also have binary serialization. Everything is the same here: we transform any language into bin.tree, and then get a binary from it with a standard function. For example, let's take a simple piece of wasm.tree code:

custom xxx

type xxx

=> i32

=> i64

=> f32

<= f64

import foo.bar func xxx

\00

\61

\73

\6D

\01

\00

\00

\00

You can write code either directly in wasm.tree or in any DSL of your own that is then transformed into wasm.tree. Thus, you can easily write for WebAssembly without diving into the wilds of its bytecode. Well, once I finish this compiler, of course. If someone is ready to help, join in.
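The last step, from bin.tree to actual bytes, is the simplest one. In the sketch below, bin.tree is modeled as a flat list of two-digit hex strings, and `toBinary` is a hypothetical helper rather than the real serializer. The eight bytes from the listing above are the standard WebAssembly preamble: the `\0asm` magic number followed by version 1.

```javascript
// Assumption: bin.tree modeled as a flat list of two-digit hex strings.
const hexes = ['00', '61', '73', '6D', '01', '00', '00', '00']

function toBinary(hexes) {
	return Uint8Array.from(hexes, hex => {
		if (!/^[0-9a-fA-F]{2}$/.test(hex)) throw new Error(`Bad byte: ${hex}`)
		return Number.parseInt(hex, 16)
	})
}

const bytes = toBinary(hexes)

// Bytes 1..3 of the magic number spell "asm"
const magic = String.fromCharCode(...bytes.slice(1, 4))
console.log(magic)

// A module consisting of just the preamble is a valid empty module
const valid = WebAssembly.validate(bytes)
console.log(valid)
```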

Even WASM with sourcemapping?!

And, of course, we automatically get sourcemaps from bin.tree as well. It's just that they won't work. For WASM, you need to generate an older mapping format that is used for compiled programming languages.

But I'm still afraid to climb into these jungles ..

Forgotten something?

So far, we've only talked about generating code from our DSL. But for comfortable work with it, many more things are required ..

- Syntax highlighting

- Hints

- Checks

- Refactorings

One extension to rule them all.. Come on?!

I have a wild idea - for each IDE, make one universal plugin that can read the declarative description of the language syntax and use it to provide basic integration with the IDE: highlighting, hints, validation. I have so far implemented highlighting.

There is a three-minute video of the process of describing a new language for your project on the $mol-channel..

You do not need to restart anything, install developer builds of the development environment or special extensions for them. You just write code and it repaints your DSL in real time.

On the right you see the code in the language view.tree, and on the left a description of this language. The plugin does not know anything about this language, but thanks to the description it knows how to colorize it.

What do you need for automatic highlighting?

It works simply: upon encountering an unfamiliar language (determined by the file extension), the plugin scans the workspace for a schema for this language. If it finds several schemas, it combines them.

There is also a requirement for the language itself - the semantics of its nodes must be specified syntactically. For example, "starts with a dollar" or "has the name null". That is, there should not be syntactically indistinguishable nodes that have different semantics. This, however, is useful not only for highlighting, but also for simplifying the understanding of the language by the user himself.
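A small JavaScript sketch shows what "semantics specified syntactically" means in practice. The rule list, the scope names and the `highlightLine` function are invented for illustration and have nothing to do with the actual plugin or its schema format. The point is that each token can be classified by looking at the token alone, with a default heuristic for everything else.

```javascript
// Hypothetical rules: each ties a semantic class to a purely syntactic test.
const rules = [
	{ scope: 'string', test: /^\\/ },                    // raw data starts with a backslash
	{ scope: 'class', test: /^\$/ },                     // "starts with a dollar"
	{ scope: 'constant', test: /^(null|true|false)$/ },  // "has the name null"
	{ scope: 'operator', test: /^(<=>|<=|=>|\/|\*|@)$/ },
]

function classify(token) {
	const rule = rules.find(rule => rule.test.test(token))
	return rule ? rule.scope : 'name' // default heuristic
}

function highlightLine(line) {
	const text = line.trim()
	const rawAt = text.indexOf('\\')
	// Everything after a backslash is raw data and is not split into tokens
	const head = rawAt < 0 ? text : text.slice(0, rawAt)
	const tokens = head.split(' ').filter(Boolean)
	if (rawAt >= 0) tokens.push(text.slice(rawAt))
	return tokens.map(token => [token, classify(token)])
}

console.log(highlightLine('<= Agree $my_checkbox'))
console.log(highlightLine('title @ \\Are you ready for SPAM?'))
```

If two kinds of nodes looked the same but meant different things, no rule of this form could tell them apart, which is exactly why the requirement exists.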

In total, what you need:

- Declarative description of the language.

- Syntactic binding to semantics.

- No installation for each language.

- Default heuristics.

Yes, the description of the language is not strictly necessary, because sometimes the default heuristics are enough to colorize any tree-based language.

Where to go?

This is where my story ends. I hope I managed to interest you in my research. And if so, you might find the following links helpful..

- nin-jin.github.io/slides/sourcemap - these slides

- tree.hyoo.ru - sandbox for tree transformations

- @_jin_nin_ - JS tweets

Thank you for listening. I felt better.

Witness's testimonies

- ❌ At the beginning it was a bit difficult to focus on the problem.

- ❌ It's complicated and it's not clear where to apply it.

- ❌ I still don’t understand why this report is needed at this conference, the topic seems to have been revealed, but the design of DSL is somewhat strange, practical applicability = 0.

- ❌ The name does not match the declared (even minimally), information about the sourcemap goes from 5 minutes to the 35th, the rest of the time the author broadcasts about his framework, which has nothing to do with the topic. I wasted my time, it would be better to look at another author.

- ✅ Cool topic, and Dima even almost got rid of his professional deformation with $mol.

- ✅ Interesting report. Dmitry spoke very well about the subject area, highlighted possible problems and thought about ease of use for the user. Very cool!
