This article was originally published on ITNEXT.

Introduction to Docker

Most of us in IT have heard of, or run into, the problem where code works well in the development environment but fails in testing or production. The operations team is then left with the headache of keeping systems healthy without downtime or impact on end users. This turns into a game of snakes and ladders between Dev and Ops, creating chaos that results in unproductive releases, downtime, and trust issues. When code works on one machine but not on another, a lot of developer time goes into finding the exact cause.

Docker is a tool that solves this puzzle. With Docker we can package the code together with its configuration and dependencies so that it runs the same way in any environment, whether development, testing, or operations.

Why Docker?

Docker changed the way applications are built and shipped, and it brought containerization into the mainstream. With Docker, deploying your software becomes much easier: you don’t have to worry about a missing system configuration, the underlying infrastructure, or a forgotten prerequisite. Docker lets us create, deploy, and manage lightweight, stand-alone packages that contain everything needed to run an application: code, libraries, runtime, system settings, and dependencies. Each such package, once running, is called a container. Each container gets its own isolated share of CPU, memory, and network resources, and it shares the host’s kernel instead of carrying a full operating system of its own.

Imagine you have thousands of test cases that connect to a database and run sequentially. How long do you think that will take? With Docker, the test cases can be spread across containers that run in parallel on the same host, so the whole suite finishes much sooner.

Benefits of using Docker

- Enables a consistent environment

- Easy to use and maintain

- Efficient use of the system resources

- Increase in the rate of software delivery

- Increases operational efficiency

- Increases developer productivity

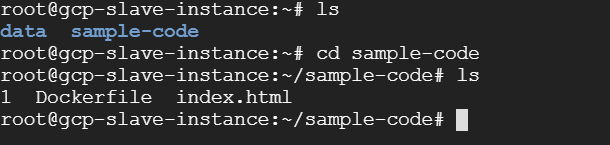

Docker Architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers. The client and daemon can run on the same system, or a client can connect to a remote daemon. They communicate using a REST API, over UNIX sockets or a network interface.
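To make the client-daemon relationship concrete, the sketch below talks to the daemon's REST API directly instead of going through the docker CLI. It assumes a Linux host where the daemon listens on the default socket path /var/run/docker.sock, that curl is installed, and that your user is allowed to read the socket. These are assumptions about your setup, not something this article configures.

```shell
# Assumed default location of the Docker daemon's UNIX socket on Linux.
DOCKER_SOCK=/var/run/docker.sock

if [ -S "$DOCKER_SOCK" ]; then
  # Ask the daemon for its version details as JSON.
  curl --silent --unix-socket "$DOCKER_SOCK" http://localhost/version
  echo

  # List running containers as JSON: the API call behind `docker ps`.
  curl --silent --unix-socket "$DOCKER_SOCK" http://localhost/containers/json
  echo
else
  echo "No Docker socket at $DOCKER_SOCK; is the daemon running?"
fi
```

The docker CLI sends requests of this kind on your behalf, which is why the same client can just as easily be pointed at a remote daemon.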

Main components in the Docker system

Docker Image

A Docker image is made up of multiple read-only filesystem layers. The layers are produced from a Dockerfile, which is just a text file containing a set of instructions.

Broadly, each instruction in the Dockerfile creates a layer, and each layer is placed on top of the previous one. Together the layers form a Docker image, which is later used to create Docker containers.

Docker runs hub.docker.com (Docker Hub), a storage area where people publish the images they have created. Images can also be stored in a local registry.

Docker Container

A container is an isolated, running instance of an image. It acts as a complete package that includes all the libraries and dependencies the application needs to run. Containers do not carry a full operating system of their own; they share the host OS kernel, which makes them more portable, efficient, and lightweight, and gives good assurance that the software will behave the same in any environment.

Docker Engine

Docker Engine is the nucleus of the Docker system: an application installed on the host machine that follows a client-server architecture.

Docker Engine has three main components:

- Server: the Docker daemon, named dockerd, which creates and manages Docker images, containers, networks, etc.

- REST API: the interface through which programs tell the Docker daemon what to do.

- Command Line Interface (CLI): the client used to enter docker commands.

Docker client

The Docker client is the main way users interact with Docker; it provides a command-line interface (CLI). When we run docker commands, the client sends them to the daemon, dockerd, which carries out the build, run, and stop operations.

Docker Registry

This is the place where Docker images are stored. Docker Hub is the default public registry, open to everyone; the other option is to run your own private registry. By default, Docker is configured to look for images on Docker Hub. You can also use an Artifactory Docker Registry for more security and to optimize your builds.

Let us get into the practicalities of Docker

Steps to install Docker:

Docker provides clear documentation for installation at the link below.

https://docs.docker.com/engine/install/ubuntu/

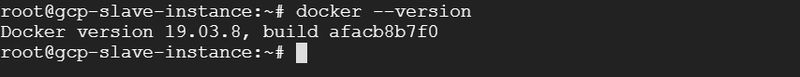

Once Docker is installed, check its version.

A few basic Docker commands
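A minimal way to do this from a terminal is sketched below. The variable name DOCKER_VERSION is only for illustration, and the fallback message is an assumption about how you might want to handle a missing installation.

```shell
# Print the client version, or a hint if the docker CLI is missing.
DOCKER_VERSION=$(docker --version 2>/dev/null || echo "docker not installed")
echo "$DOCKER_VERSION"

# If the CLI exists, also show client and daemon (server) details.
if command -v docker >/dev/null 2>&1; then
  docker version
fi
```

If `docker version` prints the client section but reports that it cannot connect to the daemon, the CLI is installed but dockerd is not running or not reachable by your user.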

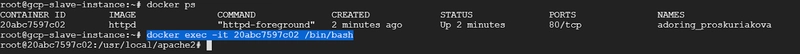

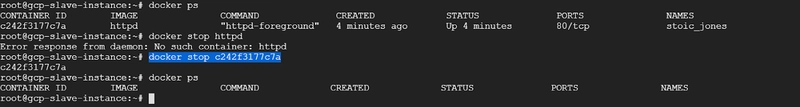

- docker ps: Lists the active (running) containers on your machine

The output shows a few details about each container:

CONTAINER ID: The unique ID assigned to each container

IMAGE: The image (and tag) the container was created from

COMMAND: The command that runs when the container starts

CREATED: When the container was created

STATUS: Whether the container is running or has exited

PORTS: The ports exposed or published by the container

NAMES: The container’s name; Docker assigns a random one if you do not provide a name

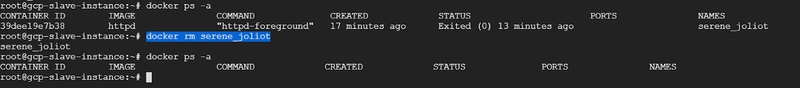

docker ps -a

Gives you the full list of containers, including the ones that are stopped or have crashed

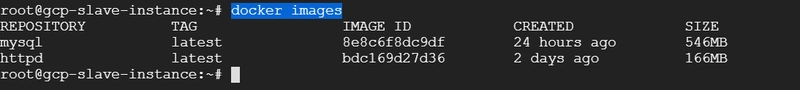

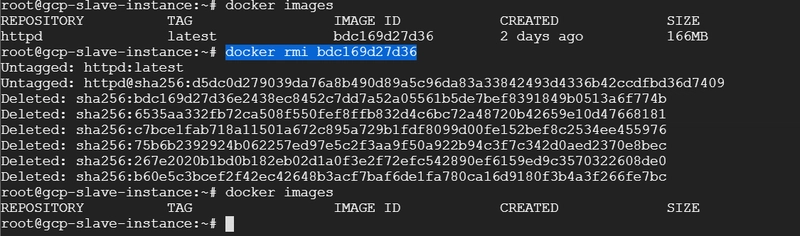

docker images

Gives you the list of images present in the system

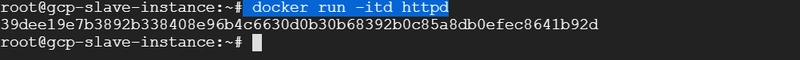

docker run ARGUMENTS IMAGE-NAME

Creates and starts a container from the named image

Here the arguments -itd mean:

-i: interactive (keeps STDIN open)

-t: allocates a pseudo-terminal (TTY)

-d: detached mode (runs in the background)

We can run the container in detached mode (-itd) or in the foreground with an interactive terminal (-it), as required.

docker exec -it CONTAINER-NAME /bin/bash

Gives you a shell inside a running container

From this shell we can run whatever commands we need within the container.
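Putting these commands together, here is a small sketch of a container's life cycle: create, inspect, stop, and delete. The image name ubuntu and the container name demo-container are assumptions chosen for illustration, and the script skips the Docker steps if the daemon is not reachable.

```shell
# Hypothetical names for this walkthrough.
IMAGE=ubuntu
NAME=demo-container

if docker info >/dev/null 2>&1; then
  # Create and start a container in the background.
  docker run -itd --name "$NAME" "$IMAGE"

  # Run a single command inside it (no interactive shell needed).
  docker exec "$NAME" cat /etc/os-release

  # Stop the container, then delete it.
  docker stop "$NAME"
  docker rm "$NAME"
else
  echo "Docker daemon not reachable; skipping"
fi
```

After `docker stop`, the container still appears in `docker ps -a`; it disappears from that list only once `docker rm` has run.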

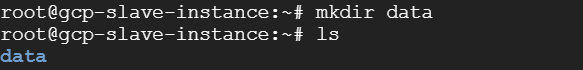

Volumes in container

Containers are dynamic by nature and move around a lot: today a container might be on server A, tomorrow on server B, as they are rescheduled and relocated as required. Any data written inside the container is lost when the container is removed.

A volume acts as data storage attached externally to the container, so the data outlives any single container.

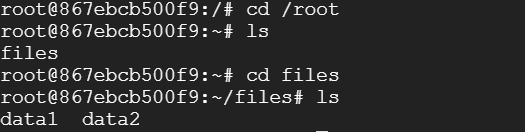

For example, say the data directory (data) contains two files, data1 and data2, that our application needs to work with.

We do not want this data to be stored inside the container itself. Instead we mount the directory, so reads and writes go to the real data1 and data2 even though they look like part of the container’s filesystem. Data mounted this way is called a volume.
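The data1/data2 example can be sketched as follows. This uses a bind mount of a host directory through the -v flag, which is one of the ways Docker attaches external storage (Docker also offers named volumes managed with `docker volume`). The ubuntu image, the /data mount point, and the file contents are assumptions for illustration.

```shell
# Create the host directory with the two files from the example.
mkdir -p data
echo "first file"  > data/data1
echo "second file" > data/data2

if docker info >/dev/null 2>&1; then
  # Mount ./data into the container at /data and list it from inside.
  # --rm removes the container as soon as the command exits.
  docker run --rm -v "$(pwd)/data:/data" ubuntu ls /data
else
  echo "Docker daemon not reachable; skipping the container step"
fi
```

The container is removed after `ls` finishes, yet data1 and data2 remain on the host, which is the point of keeping data outside the container.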

Now that we have a basic idea of creating, deleting, and starting a container, we will see how to create our own image.

On our server machine, we install Apache2 by running the commands below:

sudo apt-get update

sudo apt-get install apache2

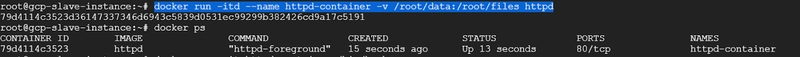

Creating a Dockerfile

Create a directory named sample-code, and within it create a Dockerfile and an index.html file.

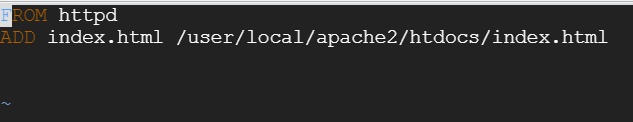

A Dockerfile normally starts with a FROM instruction, which names the base image; here we use httpd. We then add the file index.html: the source is index.html and the destination is /usr/local/apache2/htdocs/index.html. When we build from this file, Docker executes the instructions and produces an image, and once the image exists we can create containers from it.
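As a sketch, the two files described here could be created from the shell like this. The HTML content is a placeholder of my own, and the Dockerfile is reconstructed from the description (httpd base image, index.html added to the Apache document root), so treat it as an approximation of the original rather than a copy.

```shell
# Create the project directory.
mkdir -p sample-code

# index.html: placeholder page content (hypothetical).
cat > sample-code/index.html <<'EOF'
<html>
  <body>
    <h1>Hello from my first Docker image</h1>
  </body>
</html>
EOF

# Dockerfile: start from the httpd image and add the page to
# Apache's document root. COPY would work equally well for a local file.
cat > sample-code/Dockerfile <<'EOF'
FROM httpd
ADD index.html /usr/local/apache2/htdocs/index.html
EOF

# Show the result.
cat sample-code/Dockerfile
```

Keeping index.html next to the Dockerfile matters because the source path in ADD is resolved relative to the build context, which is the directory passed to `docker build`.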

docker build . -t first-image

This command builds an image named first-image from the Dockerfile in the current directory.

docker run -itd --name first-container -p 8090:80 first-image

This command creates and starts a container named first-container, mapping port 8090 on the host to port 80 in the container.

We can then see the output at http://server_IP:8090
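To check the result from the server itself and clean up afterwards, something like the following should work. It assumes the container named first-container is still running with host port 8090 published, that curl is installed, and that you are on the same machine (hence localhost instead of the server IP).

```shell
CONTAINER=first-container
HOST_PORT=8090

if docker info >/dev/null 2>&1; then
  # Confirm the container is running and see its port mapping.
  docker ps --filter "name=$CONTAINER"

  # Fetch the page through the published host port.
  curl --silent "http://localhost:$HOST_PORT/"

  # When finished, stop and remove the container.
  docker stop "$CONTAINER"
  docker rm "$CONTAINER"
else
  echo "Docker daemon not reachable; skipping"
fi
```

If curl returns the contents of index.html, the image, the port mapping, and the container are all working as intended.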

Conclusion

Docker containers are lighter than virtual machines, and because the application ships together with its dependencies, it is far more likely to run the same way everywhere. Containerization paves the way to digital transformation in software-powered organizations. Docker containers can be spun up with any framework and language of your choice, as long as an image for them is available in the community. Many CI/CD tools, such as Jenkins, CircleCI, and TravisCI, now fully support and integrate with Docker, which makes promoting your changes from environment to environment a breeze. Docker makes application deployment very easy, and because containers are lightweight, it helps with scaling and automation.

There are several free resources available for learning Docker.
