Understanding Kubernetes Services: A Comprehensive Guide

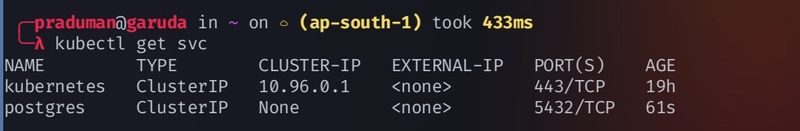

A Kubernetes Service is an object that helps you expose an application running in one or more Pods in your cluster. Because pod IP addresses change when pods are created or destroyed, a Service provides a stable virtual IP address (and DNS name) that does not change. This ensures that both internal and external clients can always reach the right application, even if the pods behind it are constantly changing. By default, a Service is of type ClusterIP, which is reachable only from within the cluster and is useful for in-cluster communication.

To create a service (default type: ClusterIP):

```bash
kubectl expose deployment <deployment-name> --port=80
# or, for a single pod
kubectl expose pod <pod-name> --port=80
```

To see the services:

```bash
kubectl get svc -o wide
```

What Are Endpoints in Kubernetes?

Endpoints are objects that list the IP addresses and ports of the pods associated with a specific Service. When you create a Service with a selector, Kubernetes uses that selector to determine which pods belong to it. The Endpoints object updates automatically as pods are added or removed, so the Service always knows where to send traffic. Endpoints play a crucial role in connecting Services to the pods behind them.

Viewing Endpoints

```bash
kubectl get endpoints <service-name>
```
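Conceptually, the Endpoints object is built by selecting every pod whose labels contain all of the Service's selector key/value pairs and recording each ready pod's IP with the target port. The sketch below models that with plain Python data; the `Pod` type, pod names, and IPs are hypothetical, and real Kubernetes also tracks not-ready addresses, named ports, and (in newer versions) EndpointSlices.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    """Hypothetical, simplified view of a pod: name, labels, IP, readiness."""
    name: str
    labels: dict[str, str]
    ip: str
    ready: bool = True


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every selector key/value must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def build_endpoints(selector: dict[str, str], target_port: int, pods: list[Pod]) -> list[str]:
    """Return 'IP:port' entries for ready pods that match the selector."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if pod.ready and matches(selector, pod.labels)
    ]


pods = [
    Pod("web-1", {"app": "web", "tier": "frontend"}, "10.244.1.5"),
    Pod("web-2", {"app": "web"}, "10.244.2.7"),
    Pod("web-3", {"app": "web"}, "10.244.2.8", ready=False),  # not ready yet
    Pod("db-1", {"app": "db"}, "10.244.1.9"),
]

print(build_endpoints({"app": "web"}, 80, pods))  # ['10.244.1.5:80', '10.244.2.7:80']
```

Removing a pod from the list, or marking it not ready, and calling `build_endpoints` again mirrors how the Endpoints object changes as pods come and go.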

Exploring Different Types of Kubernetes Services

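- ClusterIP
- NodePort
- LoadBalancer
- ExternalName
- Headless (a ClusterIP Service with `clusterIP: None`)
- ExternalDNS (strictly an add-on that manages DNS records for Services and Ingresses, not a Service type)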

Networking Fundamentals in Kubernetes: A Deep Dive

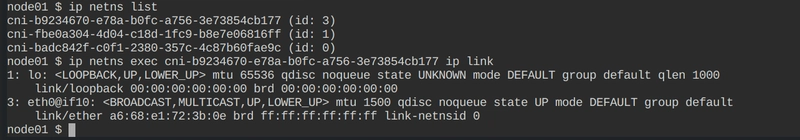

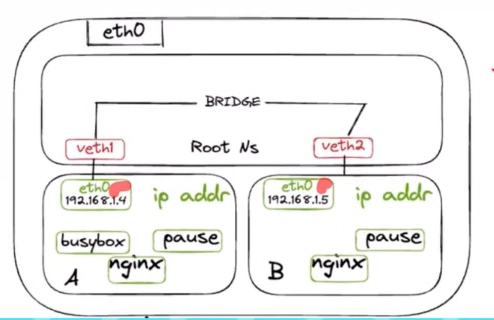

On each node, every pod is typically connected to the node's network by a veth (virtual Ethernet) pair: one end is the pod's eth0 interface, and the other end lives in the node's root network namespace. When a pod runs, a special container called the pause container is also created; it holds the pod's network namespace, which the other containers in the pod share. For example, if you create a pod with two containers, such as busybox and nginx, there will actually be three containers: the two you defined and the pause container. The CNI plugin assigns the pod its own IP address on the eth0 (Ethernet) interface inside the pod.

Create a multi-container pod (mcp.yml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-namespace
spec:
  containers:
    - name: p1
      image: busybox
      command: ['/bin/sh', '-c', 'sleep 10000']
    - name: p2
      image: nginx
```

```bash

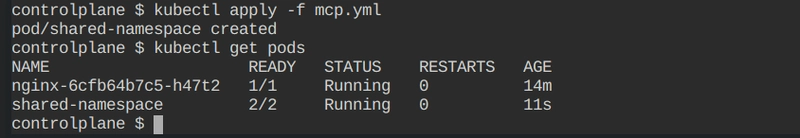

kubectl apply -f mcp.yml

```

<p align="center">

-

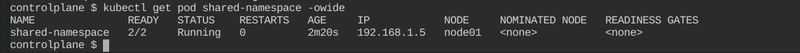

To see the pod's IP:

```bash
kubectl get pod shared-namespace -o wide
```

Check the node where the pod is running and SSH into it:

```bash
ssh node01
```

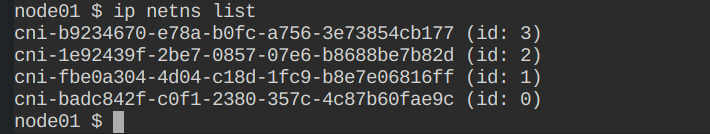

To view the network namespaces created:

```bash
ip netns list
```

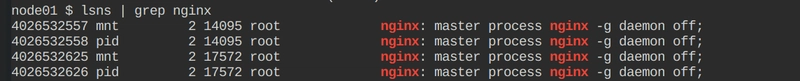

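To find the pause container, start from the PID of the nginx process:

```bash
lsns | grep nginx
```

Get details of that process's namespaces (net, ipc, uts). These namespaces are held by the pause container (the COMMAND column typically shows `/pause`) and shared with the pod's containers:

```bash
lsns -p <PID-from-the-previous-command>
```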

To check the list of all network namespaces

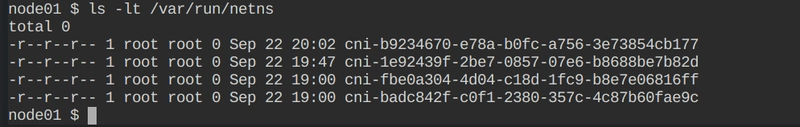

ls -lt /var/run/netns -

Exec into the namespace, or into the pod, to see the network interfaces:

```bash
ip netns exec <namespace> ip link
kubectl exec -it <pod-name> -- ip addr
```

Find the veth Pair

Once you run the above command, you may see an interface with a name like `eth0@if9`. The number after `@if` is the index of the peer interface of the virtual Ethernet (veth) pair, which lives on the node. To find the corresponding link on the Kubernetes node, search for that index. For example, if the number is 9, run the following on the node:

```bash
ip link | grep -A1 "^9:"
```

This shows the details of the node-side end of the veth pair.
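The matching step can be sketched in Python: parse the peer index from a pod interface name like `eth0@if9`, then find the node interface whose `ip link` line starts with that index followed by a colon. The sample `ip link` outputs and interface names below are made up for illustration, and the parsing assumes the usual `index: name@ifpeer:` layout.

```python
import re
from typing import Optional

# Hypothetical, abbreviated `ip link` output from inside the pod.
POD_IP_LINK = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
2: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
"""

# Hypothetical, abbreviated `ip link` output from the node.
NODE_IP_LINK = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
2: ens4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1460 qdisc mq state UP
9: veth3a1b2c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
90: veth7d8e9f@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
"""


def peer_index(pod_output: str, iface: str = "eth0") -> Optional[int]:
    """Return N from a line like '2: eth0@ifN: ...', or None if absent."""
    match = re.search(rf"^\d+: {re.escape(iface)}@if(\d+):", pod_output, re.MULTILINE)
    return int(match.group(1)) if match else None


def node_interface(node_output: str, index: int) -> Optional[str]:
    """Return the interface name on the node line that starts with 'index:'."""
    match = re.search(rf"^{index}: ([^:@]+)", node_output, re.MULTILINE)
    return match.group(1) if match else None


idx = peer_index(POD_IP_LINK)
print(idx, node_interface(NODE_IP_LINK, idx))  # 9 veth3a1b2c
```

Anchoring the pattern on `9:` rather than a bare `9` is what keeps interface 90 (or 91, and so on) from being picked up by mistake.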

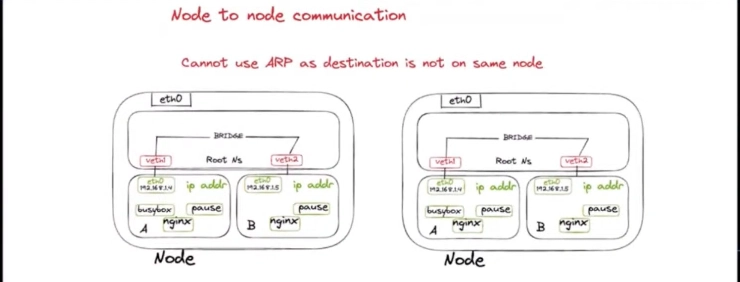

Intra-Node Communication

When pod A sends a packet to pod B, the packet leaves pod A through its eth0 interface and travels over pod A's veth pair into the node's root network namespace. There, the bridge resolves the destination address using the ARP table and forwards the packet over pod B's veth pair to pod B. This path applies only when both pods run on the same node.
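The same-node path can be modeled as two lookups on the bridge: ARP maps the destination pod IP to a MAC address, and the bridge's forwarding table maps that MAC to the veth port leading to the pod. This is a simplified sketch; the IPs, MACs, and port names (`veth-a`, `veth-b`) are hypothetical, and a real bridge would also learn addresses and flood unknown destinations.

```python
from typing import Optional

# Hypothetical ARP table in the node's root namespace: pod IP -> pod eth0 MAC.
ARP_TABLE = {
    "10.244.1.2": "aa:bb:cc:00:00:02",  # pod A
    "10.244.1.3": "aa:bb:cc:00:00:03",  # pod B
}

# Hypothetical bridge forwarding table: MAC -> node-side veth port.
FDB = {
    "aa:bb:cc:00:00:02": "veth-a",
    "aa:bb:cc:00:00:03": "veth-b",
}


def forward(src_port: str, dst_ip: str) -> Optional[str]:
    """Return the bridge port a packet to dst_ip leaves on, or None when it
    cannot be delivered locally (unknown IP, or it would go back out src_port)."""
    mac = ARP_TABLE.get(dst_ip)
    if mac is None:
        return None  # not a pod on this node
    out_port = FDB.get(mac)
    if out_port is None or out_port == src_port:
        return None
    return out_port


# Pod A (behind veth-a) sends a packet to pod B.
print(forward("veth-a", "10.244.1.3"))  # veth-b
```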

StatefulSet: Managing Stateful Applications in Kubernetes

A StatefulSet manages stateful applications in which each pod needs a unique identity and keeps its own data. By default, it starts, updates, and stops pods one at a time, in a defined order. Each pod has a fixed name and keeps its data (through its own PersistentVolumeClaim) even if it is restarted or rescheduled onto another node. This is useful for applications such as databases, where data and ordering matter.
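Under the default `OrderedReady` pod management policy, a StatefulSet named `postgres` with N replicas owns pods `postgres-0` through `postgres-(N-1)`: it creates them in ascending ordinal order and removes them in descending order when scaling down. The helper below only computes those names and their order; it is an illustration, not controller code.

```python
def scale_plan(name: str, current: int, desired: int) -> list[tuple[str, str]]:
    """Return (action, pod_name) steps to move a StatefulSet from `current`
    to `desired` replicas. The real controller (OrderedReady policy) also
    waits for each pod to be Running and Ready before taking the next step."""
    if desired >= current:
        # Scale up: create the missing ordinals, lowest first.
        return [("create", f"{name}-{i}") for i in range(current, desired)]
    # Scale down: delete the highest ordinals first.
    return [("delete", f"{name}-{i}") for i in range(current - 1, desired - 1, -1)]


print(scale_plan("postgres", 3, 6))
# [('create', 'postgres-3'), ('create', 'postgres-4'), ('create', 'postgres-5')]
print(scale_plan("postgres", 6, 4))
# [('delete', 'postgres-5'), ('delete', 'postgres-4')]
```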

Key Differences Between Deployments and StatefulSets

| Feature | Deployment | StatefulSet |
|---|---|---|
| Use Case | Stateless applications | Stateful applications |
| Pod Identity | Pods are interchangeable and do not have stable identities | Pods have unique, stable identities (e.g., pod-0, pod-1) |
| Storage | Typically uses ephemeral storage; data is lost when a pod is deleted | Each pod can have its own persistent volume attached |
| Scaling Behavior | Pods are created and removed in parallel, in no particular order | Pods are scaled one at a time (e.g., pod-0 before pod-1) |
| Pod Updates | Rolling updates can replace several pods at once (controlled by maxSurge and maxUnavailable) | Pods are updated one at a time, in reverse ordinal order, and each must be ready before the next is updated |
| Order of Pod Creation/Deletion | No specific order | Pods are created in order (pod-0, pod-1, ...) and deleted in reverse order |
| Network Identity | Usually exposed through a ClusterIP Service; pods have no stable network identity | Uses a headless Service, giving each pod a stable network identity |
| Examples | Microservices, stateless web apps | Databases (MySQL, Cassandra), distributed systems requiring unique identity or stable storage |
| Use of Persistent Volumes | If a PersistentVolumeClaim is used, all pods share the same claim | Each pod gets a dedicated persistent volume |

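A StatefulSet does not create its Service for you: you create a headless Service (`clusterIP: None`) yourself and reference it in the StatefulSet's `serviceName` field. The headless Service gives each pod a stable DNS name.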
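With a headless Service, each StatefulSet pod gets a DNS name of the form `<pod-name>.<service-name>.<namespace>.svc.<cluster-domain>`. The sketch below just builds those names; it assumes the common default cluster domain `cluster.local` (your cluster may use another) and the `default` namespace for the MySQL example.

```python
def pod_dns_names(statefulset: str, service: str, namespace: str,
                  replicas: int, cluster_domain: str = "cluster.local") -> list[str]:
    """Stable per-pod DNS names provided through a headless Service."""
    return [
        f"{statefulset}-{i}.{service}.{namespace}.svc.{cluster_domain}"
        for i in range(replicas)
    ]


for fqdn in pod_dns_names("mysql", "mysql-service", "default", 3):
    print(fqdn)
# mysql-0.mysql-service.default.svc.cluster.local
# mysql-1.mysql-service.default.svc.cluster.local
# mysql-2.mysql-service.default.svc.cluster.local
```

Because the names depend only on the ordinal, a client such as a database replica can always reach `mysql-0` at the same address, even after that pod is recreated with a new IP.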

StatefulSet example for deploying a MySQL database in Kubernetes:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: "mysql-service"   # headless Service that must exist
  replicas: 3
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          env:
            # The mysql image will not start without a root password setting;
            # use a Secret instead of a literal value in real deployments.
            - name: MYSQL_ROOT_PASSWORD
              value: "example"
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-storage
              mountPath: /var/lib/mysql
  volumeClaimTemplates:
    - metadata:
        name: mysql-storage
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
```

- Each pod will have its own persistent storage (`mysql-storage`).
- Pods will have stable names (`mysql-0`, `mysql-1`, etc.), and their data will persist even if they restart.

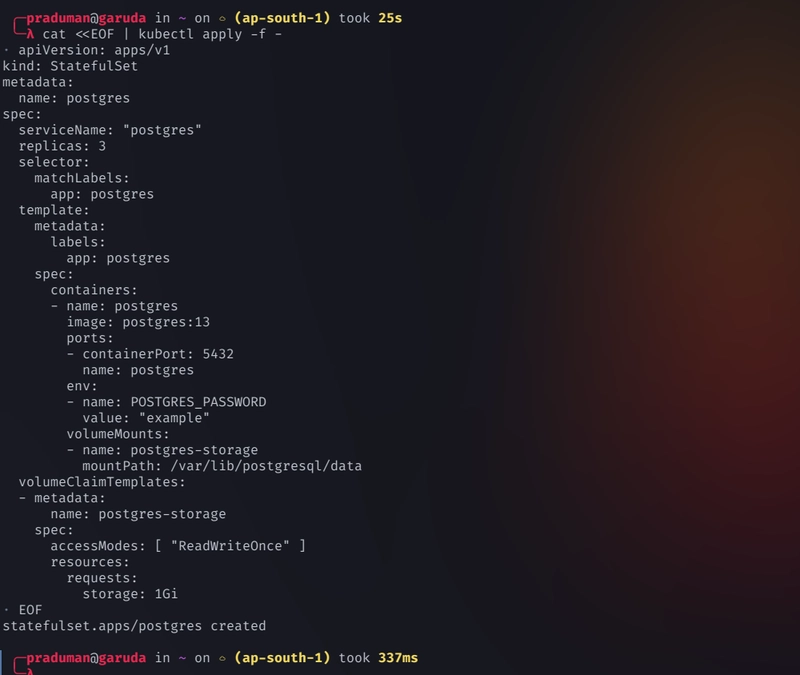

Create a StatefulSet:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
spec:
  serviceName: "postgres"
  replicas: 3
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:13
          ports:
            - containerPort: 5432
              name: postgres
          env:
            - name: POSTGRES_PASSWORD
              value: "example"
          volumeMounts:
            - name: postgres-storage
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:
    - metadata:
        name: postgres-storage
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
EOF
```

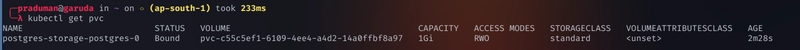

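A PersistentVolumeClaim is created for each pod from `volumeClaimTemplates`, and a PersistentVolume is bound to each claim (provisioned dynamically if your cluster has a default StorageClass):

```bash
kubectl get pv
kubectl get pvc
```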

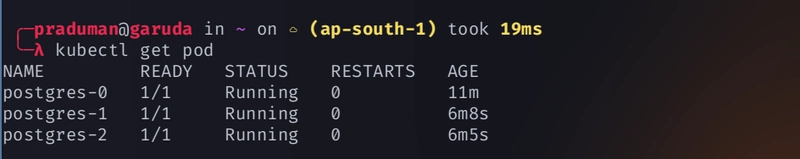

Check the pods that were created (for example, with `kubectl get pods`).

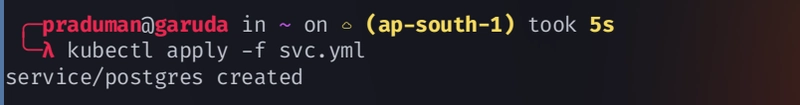

Create a service (svc.yml):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: postgres
  labels:
    app: postgres
spec:
  ports:
    - port: 5432
      name: postgres
  clusterIP: None
  selector:
    app: postgres
```

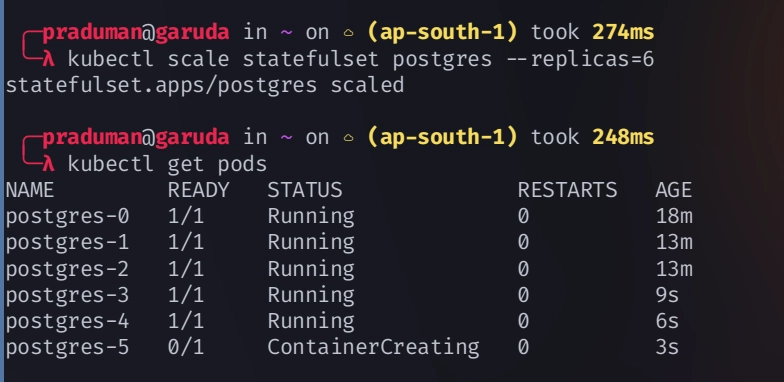

Increase the replicas, and you will see that each pod has an ordered, fixed name:

```bash
kubectl scale statefulset postgres --replicas=6
kubectl get pod
```

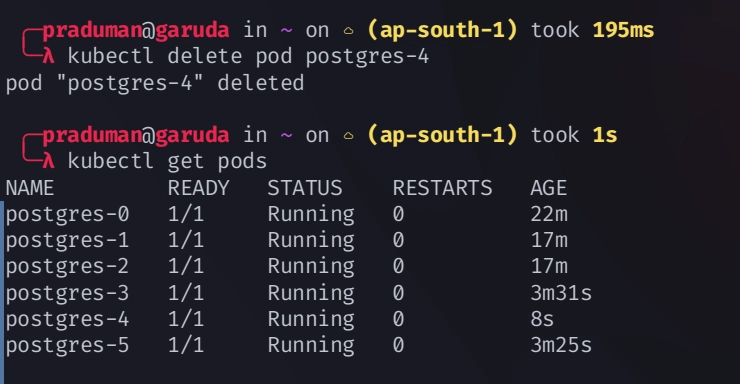

If you delete a pod, a new pod with the same name is created again:

```bash
kubectl delete pod postgres-3
```
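The recreated pod keeps its name because the StatefulSet controller keeps comparing the ordinals it should own against the pods that actually exist, and recreates any missing ordinal. A simplified version of that comparison follows; the real controller loop also handles readiness, updates, and volume claims.

```python
def missing_pods(name: str, replicas: int, existing: set[str]) -> list[str]:
    """Pod names the controller would recreate, lowest ordinal first."""
    desired = [f"{name}-{i}" for i in range(replicas)]
    return [pod for pod in desired if pod not in existing]


# After `kubectl delete pod postgres-3` with 6 replicas:
existing = {"postgres-0", "postgres-1", "postgres-2", "postgres-4", "postgres-5"}
print(missing_pods("postgres", 6, existing))  # ['postgres-3']
```

Since the name is reused, the new pod also binds to the same claim (named `postgres-storage-postgres-3`, following the `<template-name>-<pod-name>` pattern), which is why its data survives the deletion.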

Check whether the service is working:

```bash
kubectl exec -it postgres-0 -- psql -U postgres
```

NodePort: Exposing Your Kubernetes Application

A NodePort Service lets clients outside the cluster access your app. It opens the same port on every node in the cluster, so you can reach the app using any node's IP address and that port. By default, this port is a number in the range 30000–32767. You can access your application at <NodeIP>:<NodePort>, where NodeIP is the IP address of any node in the cluster and NodePort is the port assigned by Kubernetes (or the one you specify).
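Because the same port is open on every node, the set of URLs that reach the app is just every node IP paired with the NodePort. The helper below builds that list and rejects ports outside the default range; `192.168.1.5` is a hypothetical second node, and clusters started with a custom `--service-node-port-range` would need a different `port_range`.

```python
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)  # 30000-32767 inclusive


def node_port_urls(node_ips: list[str], node_port: int, scheme: str = "http",
                   port_range: range = DEFAULT_NODE_PORT_RANGE) -> list[str]:
    """Every <NodeIP>:<NodePort> URL that should reach the Service."""
    if node_port not in port_range:
        raise ValueError(
            f"nodePort {node_port} is outside {port_range.start}-{port_range.stop - 1}"
        )
    return [f"{scheme}://{ip}:{node_port}" for ip in node_ips]


print(node_port_urls(["192.168.1.4", "192.168.1.5"], 30008))
# ['http://192.168.1.4:30008', 'http://192.168.1.5:30008']
```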

Where is it useful?

- For testing and development: you can quickly share access to apps without any extra networking setup.
- For small or internal projects: if a company doesn't use cloud-based load balancers or advanced setups, NodePort is a simple way to expose an app.

YAML configuration for a NodePort service that exposes an nginx deployment or pod:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport
  labels:
    app: nginx
spec:
  type: NodePort        # Specify the service type as NodePort
  selector:
    app: nginx          # This must match the labels of the nginx pods
  ports:
    - protocol: TCP
      port: 80          # The port the service will listen on
      targetPort: 80    # The port the nginx container is listening on
      nodePort: 30008   # NodePort exposed (optional; Kubernetes assigns one if omitted)
```

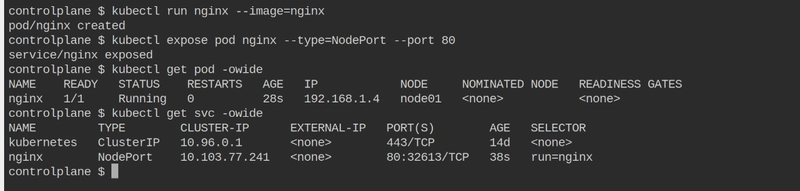

Create a Pod and expose it using a NodePort service:

```bash
kubectl run nginx --image=nginx
kubectl expose pod nginx --type=NodePort --port 80
```

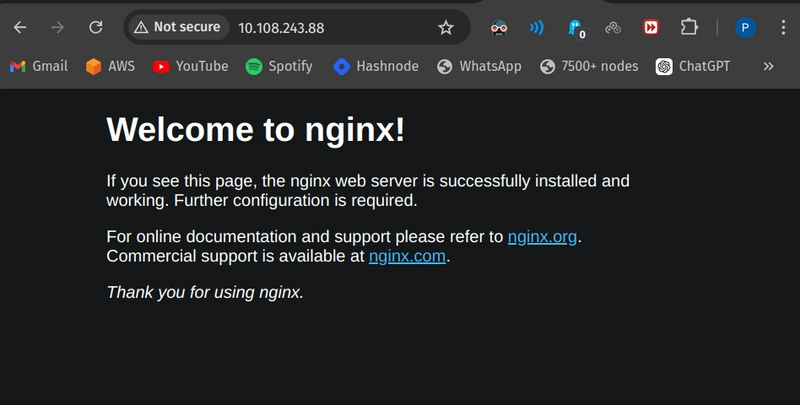

Access the server at http://192.168.1.4:32613 (use one of your own node IPs and the NodePort assigned in your cluster).

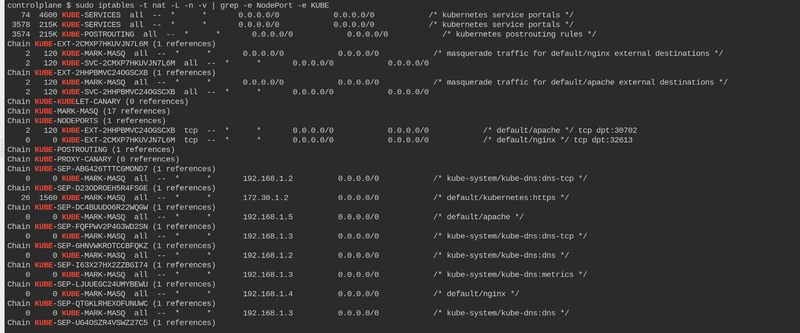

Check NodePort and KUBE Rules

To check the rules in the iptables NAT table related to Kubernetes NodePort services (this applies when kube-proxy runs in iptables mode):

```bash
sudo iptables -t nat -L -n -v | grep -e NodePort -e KUBE
```

Check Specific NodePort Rules

```bash
sudo iptables -t nat -L -n -v | grep 32613
```

This shows whether traffic arriving on port 32613 (your NodePort) is being redirected to the appropriate service.
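In iptables mode, kube-proxy picks one of the Service's n endpoints with a cascade of `statistic --mode random` rules: the first rule matches with probability 1/n, the next with 1/(n-1) of the remaining traffic, and so on, with the last rule matching whatever is left. The Python sketch below (exact fractions, no iptables involved) checks that this cascade gives every endpoint the same 1/n share.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Per-rule match probability for n endpoints: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> list[Fraction]:
    """Fraction of all traffic that ends up at each endpoint."""
    shares = []
    remaining = Fraction(1)  # traffic not yet matched by an earlier rule
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


print(rule_probabilities(3))  # [Fraction(1, 3), Fraction(1, 2), Fraction(1, 1)]
print(effective_shares(3))    # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```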

LoadBalancer: Making Your Application Public

A LoadBalancer Service makes your application accessible from outside the cluster, typically over the internet. When you create a Service of this type, Kubernetes asks your cloud provider (such as AWS, GCP, or Azure) to set up a load balancer automatically.

This service type is ideal when you want to expose an application, such as a web server, to users outside your cluster. The cloud provider assigns an external IP address, which users can use to access your application.

The load balancer distributes incoming traffic into the cluster, and the Service forwards it to the pods behind it. This spreads the traffic out and avoids overloading any single pod.

LoadBalancer YAML file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-loadbalancer
spec:
  type: LoadBalancer    # This makes it a LoadBalancer service
  selector:
    app: nginx          # This selects the nginx pods
  ports:
    - protocol: TCP
      port: 80          # Exposes port 80 (HTTP) to the public
      targetPort: 80    # The port on the nginx container
```

How It Works:

1. You create the LoadBalancer service.
2. Kubernetes communicates with the cloud provider, and a load balancer is created.
3. The load balancer is assigned an external IP address.
4. Traffic to the external IP is sent to your service, which distributes it to the nginx pods.


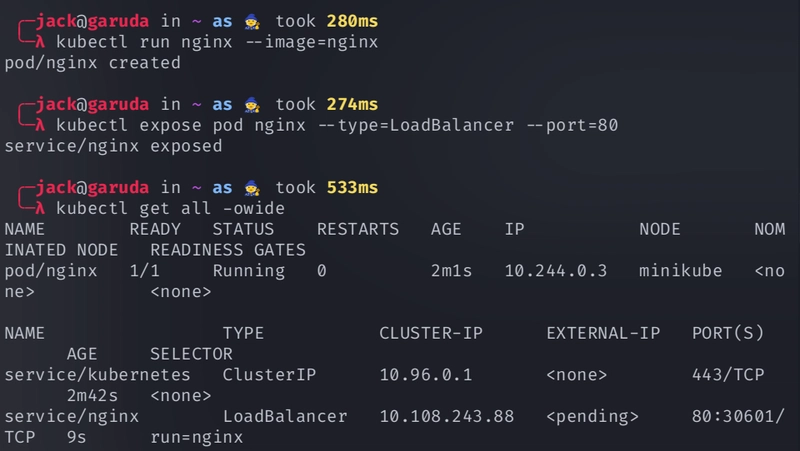

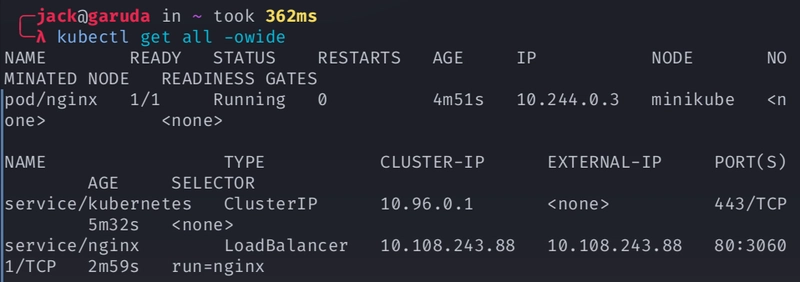

Creating a LoadBalancer service in minikube:

```bash
kubectl run nginx --image=nginx
kubectl expose pod nginx --type=LoadBalancer --port=80
```

Create a network tunnel that makes your LoadBalancer service accessible (keep this command running in a separate terminal):

```bash
minikube tunnel
```

Then access the service at the EXTERNAL-IP shown by `kubectl get svc`.

Why Avoid LoadBalancer in Production?

- Cost: LoadBalancers can be expensive, as cloud providers charge for each one.
- Scalability Problems: they might struggle with sudden increases in traffic.
- Single Point of Failure: if the LoadBalancer fails, all services using it can go down.
- Limited Flexibility: they have fixed rules, making it hard to manage complex traffic needs.
- Performance Delays: they can add extra latency to how quickly users reach your services.
- Dependence on Cloud Services: if the cloud provider has issues, your services might become unavailable.
- Complex Setup: managing LoadBalancers can complicate your system, especially with multiple clusters.

Alternatives to LoadBalancer for Kubernetes

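- Ingress Controllers: a single controller (often exposed through one LoadBalancer) can route traffic to many services, which is cheaper and allows more flexible traffic management.
- Service Mesh: helps manage service-to-service traffic and improves observability without needing a LoadBalancer for internal traffic.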

ExternalName: Mapping Services to External DNS Names

The ExternalName Service type is a special kind of Service that maps a Service to an external DNS name. Instead of a cluster IP, the cluster DNS returns a CNAME record with the specified external name. Use it when your application needs to reach an external service (such as an external API or database) without changing the application code. You can also use it to reach a Service in another namespace by setting `externalName` to that Service's DNS name (for example, `<service>.<namespace>.svc.cluster.local`).
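When a pod looks up an ExternalName Service, cluster DNS answers with a CNAME, and the resolver then follows that alias until it reaches an address. The toy resolver below follows CNAME records through a plain dictionary; every name and IP in it is hypothetical, and it ignores TTLs, search domains, and real DNS transport.

```python
from typing import Optional

# Hypothetical records: an ExternalName Service in application-ns aliasing a
# Service in database-ns, which has an A record pointing at its ClusterIP.
RECORDS = {
    "db.application-ns.svc.cluster.local": ("CNAME", "mysql.database-ns.svc.cluster.local"),
    "mysql.database-ns.svc.cluster.local": ("A", "10.96.45.12"),
}


def resolve(name: str, records: dict, max_hops: int = 8) -> Optional[str]:
    """Follow CNAME records until an A record is found (None if unresolvable)."""
    for _ in range(max_hops):
        record = records.get(name)
        if record is None:
            return None
        rtype, value = record
        if rtype == "A":
            return value
        name = value  # CNAME: continue the lookup at the alias target
    return None  # too many hops, for example a CNAME loop


print(resolve("db.application-ns.svc.cluster.local", RECORDS))  # 10.96.45.12
```

The application only ever uses `db.application-ns...`; repointing the alias at a different database changes the CNAME target, not the application's configuration.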

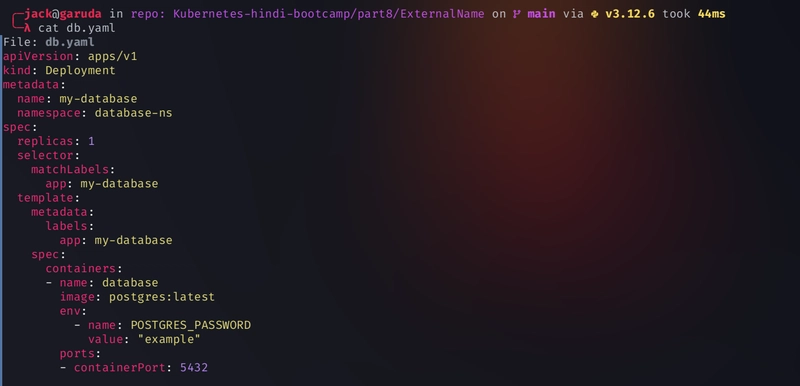

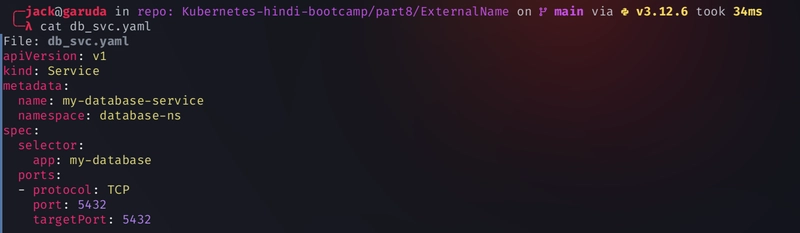

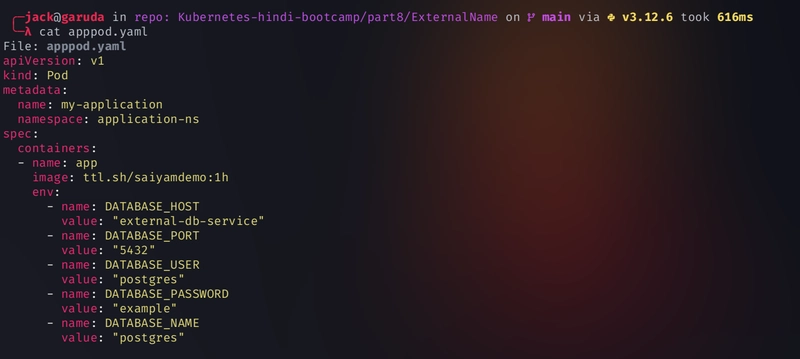

Example: two services in different namespaces communicating with each other

Use this file to get the source code.

Create two namespaces:

```bash
kubectl create ns database-ns
kubectl create ns application-ns
```

Create the database pod and service:

```bash
kubectl apply -f db.yaml
kubectl apply -f db_svc.yaml
```

Create the ExternalName service:

```bash
kubectl apply -f externam-db_svc.yaml
```

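Build the application that accesses the service, and push the image to the ttl.sh registry so the cluster can pull it:

```bash
docker build --no-cache --platform=linux/amd64 -t ttl.sh/saiyamdemo:1h .
docker push ttl.sh/saiyamdemo:1h
```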

Create the pod:

```bash
kubectl apply -f apppod.yaml
```

Check the pod logs to see whether the connection was successful:

```bash
kubectl logs my-application -n application-ns
```

Ingress: Controlling User Access to Kubernetes Applications

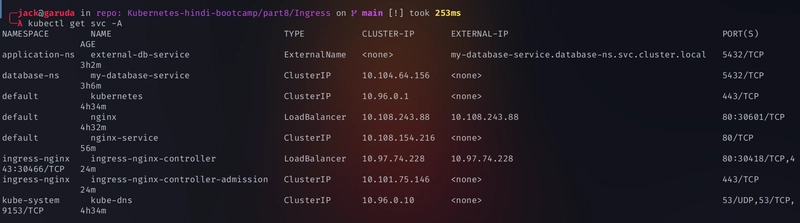

Ingress is a way to control how users reach your applications running in Kubernetes. Think of it as a gatekeeper that directs HTTP and HTTPS traffic to the right place. An Ingress resource does nothing on its own: you first need to deploy an Ingress controller in the cluster, which reads Ingress resources and implements their rules. Many Ingress controllers are available, including open-source projects such as ingress-nginx, Traefik, and Istio's ingress gateway.

In this setup, we create a ClusterIP service for the application and an Ingress resource that points to it. A user request first reaches the Ingress controller, which matches it against the rules in the Ingress resource and forwards it to the ClusterIP service, which in turn sends it to a pod. Here, the Ingress controller itself is exposed as a LoadBalancer service.

Why Use Ingress?

- Single Address: instead of having a different address for each app, you can use one address for everything.
- Routing by URL: you can send users to different apps based on the URL they visit. For example, `/app1` goes to one app and `/app2` goes to another.
- Secure Connections: Ingress can terminate TLS, so your apps can be served over HTTPS.
- Balancing Traffic: it spreads incoming traffic across the pods behind each service, so no single pod gets overloaded.

Example

Imagine you have two apps:

- A website
- An API

You can set up Ingress to route traffic like this:

- If someone goes to `/`, they get the website.
- If they go to `/api`, they get the API.

Here's how you might set it up:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
    - host: "my-app.com"
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: website-service
                port:
                  number: 80
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 80
```
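With `pathType: Prefix`, Kubernetes matches paths element by element (split on `/`), and when several rules match, the longest matching path wins. The sketch below applies those two rules to the example's paths; it ignores the `host` field and `Exact` paths, and individual controllers may add their own matching details.

```python
from typing import Optional


def elements(path: str) -> list[str]:
    """Split a URL path into non-empty elements: '/api/v1/' -> ['api', 'v1']."""
    return [part for part in path.split("/") if part]


def prefix_match(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix test: '/api' matches '/api/users' but not '/apix'."""
    rule = elements(rule_path)
    return elements(request_path)[:len(rule)] == rule


def route(rules: list[tuple[str, str]], request_path: str) -> Optional[str]:
    """Return the backend of the longest matching Prefix rule, or None."""
    best, best_len = None, -1
    for path, backend in rules:
        length = len(elements(path))
        if prefix_match(path, request_path) and length > best_len:
            best, best_len = backend, length
    return best


RULES = [("/", "website-service"), ("/api", "api-service")]
print(route(RULES, "/api/users"))  # api-service
print(route(RULES, "/apix"))       # website-service
```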

Create a deployment (deploy.yaml):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: config-volume
              mountPath: /etc/nginx/nginx.conf
              subPath: nginx.conf   # Ensure this matches the filename in the ConfigMap
      volumes:
        - name: config-volume
          configMap:
            name: nginx-config
```

```bash
kubectl apply -f deploy.yaml
```

The pod stays in ContainerCreating until the `nginx-config` ConfigMap (created below) exists.

Create a ClusterIP service (svc.yaml):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

```bash
kubectl apply -f svc.yaml
```

-

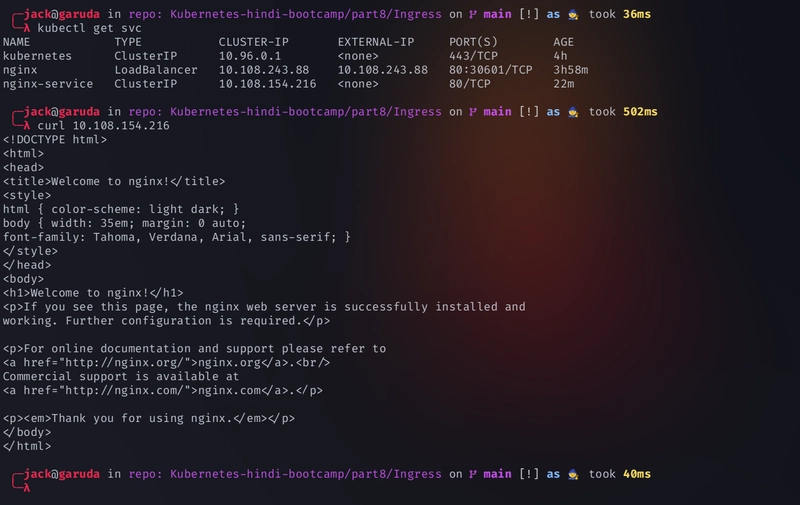

verify the service is created

kubectl get svc -

Create an nginx.conf file:

```nginx
user  nginx;
worker_processes  auto;

error_log  /var/log/nginx/error.log notice;
pid        /var/run/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       /etc/nginx/mime.types;
    default_type  application/octet-stream;

    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    keepalive_timeout  65;

    #gzip  on;

    server {
        listen       80;
        server_name  localhost;

        location / {
            root   /usr/share/nginx/html;
            index  index.html index.htm;
        }

        location /public {
            return 200 'Access to public granted!';
        }

        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   /usr/share/nginx/html;
        }
    }
}
```

Create a ConfigMap:

```bash
kubectl create configmap nginx-config --from-file=nginx.conf
```

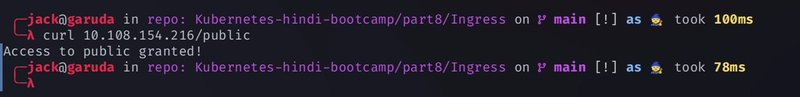

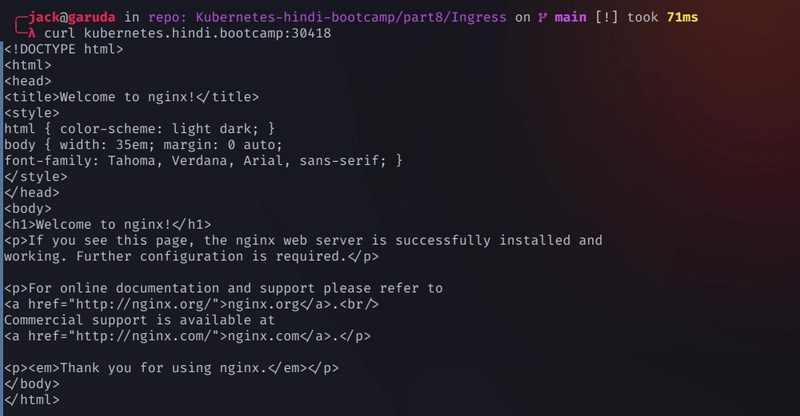

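Access the server from inside the cluster (for example, from a node), since the ClusterIP is only reachable there:

```bash
curl <cluster-IP>
```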

Now we need to access this application from outside the cluster without creating a NodePort or LoadBalancer service for it.

Install the Ingress controller first:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.9.4/deploy/static/provider/cloud/deploy.yaml
```

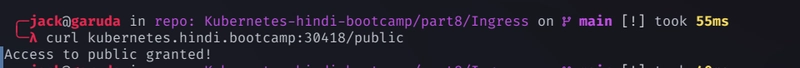

Create an Ingress object (ingress.yaml):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: bootcamp
spec:
  ingressClassName: nginx
  rules:
    - host: "kubernetes.hindi.bootcamp"
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
          - path: /public
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

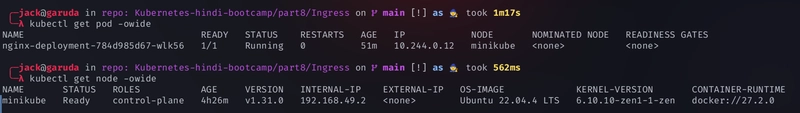

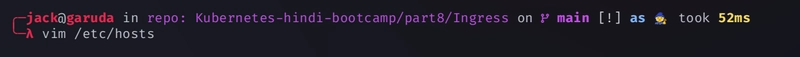

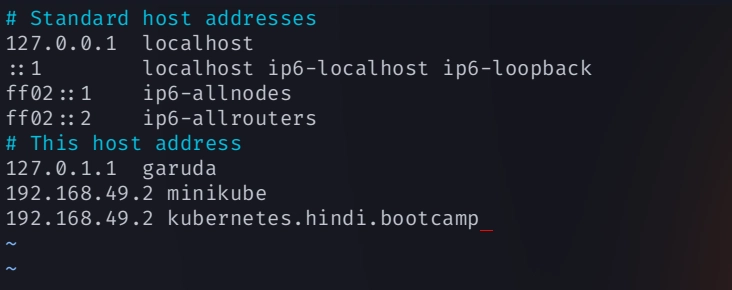

SSH onto the node where the pod is deployed and edit the /etc/hosts file (the hostname must match the `host` in the Ingress):

```
# add these lines to /etc/hosts
<node-internal-IP> <nodeName>
<node-internal-IP> kubernetes.hindi.bootcamp
```

Now apply the create

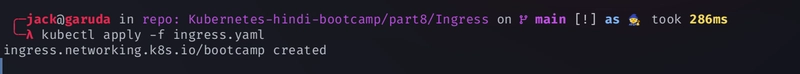

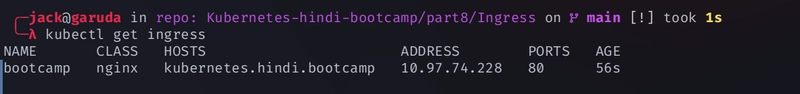

ingress.yamlfile

kubectl apply -f ingress.yaml -

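Finally, send a request through the Ingress controller. In this cluster, 30418 is the NodePort of the ingress-nginx controller Service; check yours with `kubectl get svc -n ingress-nginx`:

```bash
curl kubernetes.hindi.bootcamp:30418
```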

ExternalDNS: Connecting Kubernetes to DNS Providers

ExternalDNS connects your Kubernetes cluster to a DNS provider (such as AWS Route53, Google Cloud DNS, or Cloudflare) and updates DNS records when you deploy new services or ingresses. This makes your apps available under a specific domain name.

How it works:

1. Watch Kubernetes Resources: ExternalDNS continuously monitors Kubernetes resources (such as `Service`, `Ingress`, or `Endpoint` resources) for changes.
2. Determine Desired DNS Records: based on the services or ingress objects, it calculates the required DNS records.
3. Update DNS Provider: it then communicates with the DNS provider (such as AWS Route53, Google Cloud DNS, or Cloudflare) to create, update, or delete DNS records as needed.
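The watch, compute, and update cycle boils down to comparing the records ExternalDNS wants with the records the provider already has, then producing a change plan. The much-simplified sketch below does only that diff; the hostnames and IPs are hypothetical, and the real tool also tracks record ownership (typically with TXT records) so that it never modifies records it did not create.

```python
def plan(desired: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare hostname -> target maps and return create/update/delete lists."""
    return {
        "create": sorted(h for h in desired if h not in current),
        "update": sorted(h for h in desired if h in current and desired[h] != current[h]),
        "delete": sorted(h for h in current if h not in desired),
    }


desired = {"app.example.com": "203.0.113.10", "api.example.com": "203.0.113.11"}
current = {"app.example.com": "203.0.113.9", "old.example.com": "203.0.113.5"}
print(plan(desired, current))
# {'create': ['api.example.com'], 'update': ['app.example.com'], 'delete': ['old.example.com']}
```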

Use Cases

- Automatically manage DNS entries for applications exposed through Ingress or LoadBalancer services.
- Keep DNS records up to date when service IPs or endpoints change.
- Use annotations (such as `external-dns.alpha.kubernetes.io/hostname`) to control which resources get DNS records managed by ExternalDNS.

Conclusion

In this article, we explored various aspects of Kubernetes, including Services, networking fundamentals, StatefulSets, the NodePort, LoadBalancer, and ExternalName Service types, and the related Ingress and ExternalDNS components. We looked at how each one works and its use cases, with practical examples of its implementation. Understanding these components is crucial for effectively managing and deploying applications in a Kubernetes environment, and mastering them helps you keep your applications scalable, resilient, and well managed within your clusters.
