A pod is the smallest compute object you can create in Kubernetes, and it is how you group one or more containers. Containers in a pod share the same network and storage, and are scheduled on the same host. A pod with a single container feels and behaves much like that container would on its own.

Thus, a pod represents a unit of deployment: a single instance of an application in Kubernetes, which might consist of either a single container or a small number of containers that are tightly coupled and share resources.
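As a rough illustration of the single-container case, a pod manifest might look like the sketch below. The pod name and label are placeholders chosen for this example, and `httpd:2.4` is just one possible web server image.

```yaml
# Minimal single-container pod (illustrative sketch).
apiVersion: v1
kind: Pod
metadata:
  name: apache-pod          # placeholder name
  labels:
    app: apache             # placeholder label
spec:
  containers:
    - name: apache
      image: httpd:2.4      # Apache HTTP Server image
      ports:
        - containerPort: 80 # port the web server listens on
```

Assuming the manifest is saved as `apache-pod.yaml`, it could be created with `kubectl apply -f apache-pod.yaml`.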

Application-specific "logical host"

You can think of a pod as something like a virtual machine, although it isn't actually one.

Let us understand what we mean by that.

If an application needs other applications or components that run on the same VM (or physical machine), we can call that machine an application-specific "logical host". Basically, it holds all the relatively tightly coupled components the app needs to run. An example would be running a Redis cache alongside the web app on the same VM.

A pod models an application-specific "logical host": it contains one or more application containers (e.g., Redis and an app running on Node.js) that are relatively tightly coupled. In a pre-container world, being executed on the same physical or virtual machine would mean being executed on the same logical host and communicating over localhost.

Why do we need multiple containers to run inside a pod? Why can't we put all of these in a single container?

Let us take the example of a web application that runs on Node.js and uses a Redis cache.

On a Linux machine, init (short for initialization) is the first process started when the system boots. Init is a daemon process that keeps running until the system is shut down.

Containers, however, are generally designed to run a single process. A container doesn't have an init system that manages all of its processes the way a Linux VM does. So if we run multiple processes inside one container, it is our (the developers') responsibility to keep both the Node.js and Redis processes running, manage their logs, handle signals, and so on. That is not easy. Hence it is recommended to run only one process per container: the process can then be monitored through its logs, and all other management becomes easier.

Hence, with pods, we can run multiple containers together. Thus the pod of containers models an application-specific "logical host".

Containers that share a pod have the following advantages:

- They communicate with each other over localhost, i.e., they are on the same network and have the same IP address, and differ only by port.

- They share the storage volumes.

- They form a cohesive unit of service.
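The points above can be sketched with a two-container pod for the Node.js and Redis example. This is only an illustrative manifest under a few assumptions: the pod name, the `example/node-web-app:1.0` image, port 3000, the `REDIS_URL` variable, and the `/shared` mount path are all hypothetical and not taken from any real application.

```yaml
# Two tightly coupled containers in one pod (illustrative sketch).
apiVersion: v1
kind: Pod
metadata:
  name: web-with-cache                  # hypothetical pod name
spec:
  containers:
    - name: web
      image: example/node-web-app:1.0   # hypothetical Node.js app image
      ports:
        - containerPort: 3000           # assumed app port
      env:
        - name: REDIS_URL               # hypothetical variable the app is assumed to read
          value: "redis://localhost:6379"  # same pod, so Redis is reachable on localhost
      volumeMounts:
        - name: shared-data
          mountPath: /shared            # same volume, visible to both containers
    - name: cache
      image: redis:7
      ports:
        - containerPort: 6379           # default Redis port
      volumeMounts:
        - name: shared-data
          mountPath: /shared
  volumes:
    - name: shared-data
      emptyDir: {}                      # scratch volume that lives as long as the pod
```

Because both containers share one IP address, they cannot both listen on the same port, which is why the web app and Redis use different ones here.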

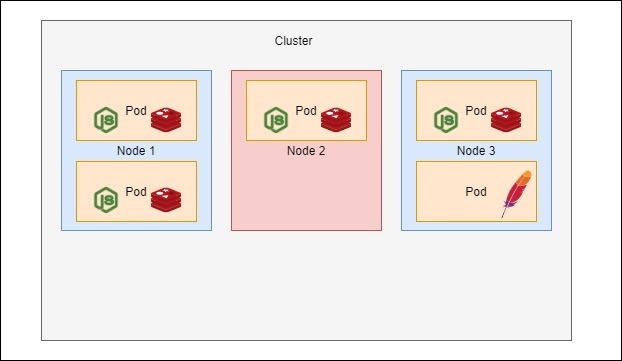

As you can see, I have changed the single-container pod style used in the Kubernetes architecture post to the grouped-container pod style shown here. Though the previous depiction is also correct, this is an easier, more standard way to handle and scale the application. You can also see that the pod containing Apache is a single-container pod.

Check out this post for more info on the pod networking: https://dev.to/kanapuli/what-are-kubernetes-pods-anyway-1app
