In today's article, I'll show you how to use LlamaIndex's AgentWorkflow to read the reasoning process from DeepSeek-R1's output and how to enable function call features for DeepSeek-R1 within AgentWorkflow.

All the source code discussed is available at the end of this article for you to read and modify freely.

Introduction

DeepSeek-R1 is incredibly useful. Most of my work is now done with its help, and major cloud providers support its deployment, making API access even easier.

Most open-source models expose an OpenAI-compatible API, and DeepSeek-R1 is no exception. Unlike most other generative models, however, DeepSeek-R1 adds a reasoning_content key to its output, which holds the Chain-of-Thought (CoT) reasoning process.
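To make that concrete, here is a minimal sketch of where the key sits in a non-streaming response. The payload is hand-written for illustration (the text values are invented, not captured from the API), and split_message is a hypothetical helper name; only the reasoning_content and content keys come from the description above.

```python
import json
from typing import Optional, Tuple

# Hypothetical, hand-written payload shaped like an OpenAI-compatible
# chat completion; the text values are invented for illustration.
SAMPLE_RESPONSE = json.loads("""
{
  "choices": [
    {
      "message": {
        "role": "assistant",
        "reasoning_content": "The user asks for 2 + 2. That is 4.",
        "content": "2 + 2 = 4"
      }
    }
  ]
}
""")


def split_message(response: dict) -> Tuple[Optional[str], Optional[str]]:
    """Return (reasoning, answer) from the first choice, or (None, None)."""
    choices = response.get("choices") or []
    if not choices:
        return None, None
    message = choices[0].get("message") or {}
    return message.get("reasoning_content"), message.get("content")


reasoning, answer = split_message(SAMPLE_RESPONSE)
print("reasoning:", reasoning)
print("answer:", answer)
```

A client that only reads content silently drops the first value, which is the gap the rest of this article closes.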

Additionally, DeepSeek-R1 doesn't support function calls, which makes developing agents with it quite challenging. (The official documentation claims that DeepSeek-R1 doesn't support structured output, but in my tests, it does. So, I won't cover that here.)

Why should I care?

While you can find a DeepSeek client on llamahub, it only supports DeepSeek-V3 and doesn't read the reasoning_content from DeepSeek-R1.

Moreover, LlamaIndex is now fully focused on building AgentWorkflow. This means that even if you only use the LLM as the final step in your workflow for content generation, it still needs to support handoff tool calls.

In this article, I'll show you two different ways to extend LlamaIndex to read reasoning_content. We'll also figure out how to make DeepSeek-R1 support function calls so it can be used in AgentWorkflow.

Let's get started.

Modifying OpenAILike Module for reasoning_content Support

Building a DeepSeek client

To allow AgentWorkflow to read reasoning_content, we first need to make LlamaIndex's model client capable of reading this key. Let's start with the client module and see how to read reasoning_content.

Previously, I wrote about connecting LlamaIndex to privately deployed models:

How to Connect LlamaIndex with Private LLM API Deployments

In short, you can't directly use LlamaIndex's OpenAI module to connect to privately or cloud-deployed large language model APIs, as it restricts you to GPT models.

OpenAILike extends the OpenAI module by removing model type restrictions, allowing you to connect to any open-source model. We'll use this module to create our DeepSeek-R1 client.

OpenAILike supports several methods, like complete, predict, and chat. Considering DeepSeek-R1's CoT reasoning, we often use the chat method with stream output. We'll focus on extending the chat scenario today.

Since reasoning_content appears in the model's output, we need to find methods handling model output. In OpenAILike, _stream_chat and _astream_chat handle sync and async output, respectively.

We can create a DeepSeek client module, inheriting from OpenAILike, and override the _stream_chat and _astream_chat methods. Here's the code skeleton:

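    # Import paths as found in recent llama-index releases; adjust if your version differs.
    from typing import Any, Sequence

    from llama_index.core.base.llms.types import (
        ChatMessage,
        ChatResponse,
        ChatResponseAsyncGen,
        ChatResponseGen,
    )
    from llama_index.llms.openai.base import llm_retry_decorator
    from llama_index.llms.openai_like import OpenAILike


    class DeepSeek(OpenAILike):
        @llm_retry_decorator
        def _stream_chat(
            self, messages: Sequence[ChatMessage], **kwargs: Any
        ) -> ChatResponseGen:
            ...

        @llm_retry_decorator
        async def _astream_chat(
            self, messages: Sequence[ChatMessage], **kwargs: Any
        ) -> ChatResponseAsyncGen:
            ...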

We'll also need to inherit ChatResponse and create a ReasoningChatResponse model to store the reasoning_content we read:

    class ReasoningChatResponse(ChatResponse):
        reasoning_content: Optional[str] = None

Next, we'll implement the _stream_chat and _astream_chat methods. In the parent class, these methods are similar, using closures to build a Generator for structuring streamed output into a ChatResponse model.

The ChatResponse model contains parsed message, delta, and raw information. Since OpenAILike doesn't parse reasoning_content, we'll extract this key from raw later.

We can first call the parent method to get the Generator, then build our own closure method:

    class DeepSeek(OpenAILike):
        @llm_retry_decorator
        def _stream_chat(
            self, messages: Sequence[ChatMessage], **kwargs: Any
        ) -> ChatResponseGen:
            responses = super()._stream_chat(messages, **kwargs)

            def gen() -> ChatResponseGen:
                for response in responses:
                    if processed := self._build_reasoning_response(response):
                        yield processed

            return gen()
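The async method follows the same shape. Below is a small, library-free sketch of that pattern: upstream and build are stand-ins I made up for the parent's stream and for the response builder, so treat it as an illustration of the closure technique, not as the actual _astream_chat body.

```python
import asyncio
from typing import AsyncIterator, Optional


async def upstream() -> AsyncIterator[dict]:
    """Stand-in for the parent's async stream; yields fake chunks."""
    for chunk in ({"delta": "Hel"}, {"delta": None}, {"delta": "lo"}):
        yield chunk


def build(chunk: dict) -> Optional[str]:
    """Stand-in for the response builder: None means 'skip this chunk'."""
    return chunk["delta"]


async def wrapped_stream() -> AsyncIterator[str]:
    """Mirror of the sync closure: grab the source, then return a generator."""
    source = upstream()

    async def gen() -> AsyncIterator[str]:
        async for chunk in source:
            if (processed := build(chunk)) is not None:
                yield processed

    return gen()


async def collect() -> list:
    stream = await wrapped_stream()
    return [item async for item in stream]


print(asyncio.run(collect()))  # ['Hel', 'lo']
```

The caller awaits the method once to get the generator and then iterates it with async for, which is also how the Chainlit handler later in this article consumes astream_chat.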

Since both methods handle processing similarly, we'll create a _build_reasoning_response method to handle response.raw.

In streamed output, reasoning_content is found in delta, at the same level as content, as incremental output:

    class DeepSeek(OpenAILike):
        ...

        @staticmethod
        def _build_reasoning_response(response: ChatResponse) \
                -> ReasoningChatResponse | None:
            if not (raw := response.raw).choices:
                return None
            if (delta := raw.choices[0].delta) is None:
                return None

            return ReasoningChatResponse(
                message=response.message,
                delta=response.delta,
                raw=response.raw,
                reasoning_content=getattr(delta, "reasoning_content", None),
                additional_kwargs=response.additional_kwargs
            )

With this, our DeepSeek client supporting reasoning_content streamed output is complete. The full code is available at the end of this article.
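Because both fields arrive as increments, the consumer has to stitch them back together. Here is a minimal sketch of that accumulation using made-up chunks; accumulate is a hypothetical helper, and I'm assuming each delta carries either a reasoning fragment or an answer fragment, which is what the Chainlit handler below also relies on.

```python
from types import SimpleNamespace

# Hypothetical deltas: reasoning fragments first, answer fragments after.
chunks = [
    SimpleNamespace(reasoning_content="Let me ", content=None),
    SimpleNamespace(reasoning_content="think.", content=None),
    SimpleNamespace(content="Hi"),  # no reasoning_content attribute at all
    SimpleNamespace(reasoning_content=None, content="!"),
]


def accumulate(deltas):
    """Join incremental fragments into (full_reasoning, full_answer)."""
    reasoning_parts, answer_parts = [], []
    for delta in deltas:
        # getattr with a default tolerates deltas that lack the key entirely.
        reasoning = getattr(delta, "reasoning_content", None)
        if reasoning:
            reasoning_parts.append(reasoning)
        content = getattr(delta, "content", None)
        if content:
            answer_parts.append(content)
    return "".join(reasoning_parts), "".join(answer_parts)


print(accumulate(chunks))  # ('Let me think.', 'Hi!')
```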

Next, let's use Chainlit to create a simple chatbot to verify our modifications.

Verifying reasoning_content output with Chainlit

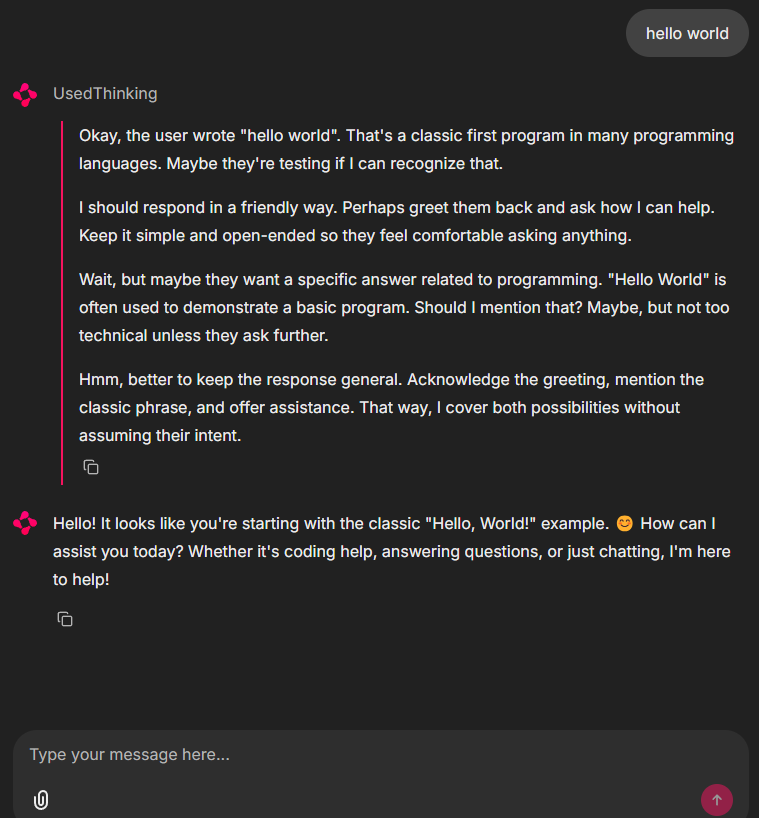

Using Chainlit to display DeepSeek-R1's reasoning process is simple. Chainlit supports step objects, collapsible page blocks perfect for displaying reasoning processes. Here's the final result:

First, we use dotenv to read environment variables, then use our DeepSeek class to build a client, ensuring is_chat_model is set to True:

    load_dotenv("../.env")

    client = DeepSeek(
        model="deepseek-reasoner",
        is_chat_model=True,
        temperature=0.6
    )

Now, let's call the model and use step to continuously output the reasoning process.

Since we're using a Generator to get reasoning_content, we can't use the @step decorator to call the step object. Instead, we use async with to create and use a step object:

    @cl.on_message
    async def main(message: cl.Message):
        responses = await client.astream_chat(
            messages=[
                ChatMessage(role="user", content=message.content)
            ]
        )

        output = cl.Message(content="")
        async with cl.Step(name="Thinking", show_input=False) as current_step:
            async for response in responses:
                if (reasoning_content := response.reasoning_content) is not None:
                    await current_step.stream_token(reasoning_content)
                else:
                    await output.stream_token(response.delta)

        await output.send()

As shown in the previous result, our DeepSeek client successfully outputs reasoning_content, allowing us to integrate AgentWorkflow with DeepSeek-R1.

Integrating AgentWorkflow and DeepSeek-R1

In my previous article, I detailed LlamaIndex's AgentWorkflow, an excellent multi-agent orchestration framework:

Diving into LlamaIndex AgentWorkflow: A Nearly Perfect Multi-Agent Orchestration Solution

In that article, I mentioned that if the model supports function calls, we can use FunctionAgent; if not, we need to use ReActAgent.

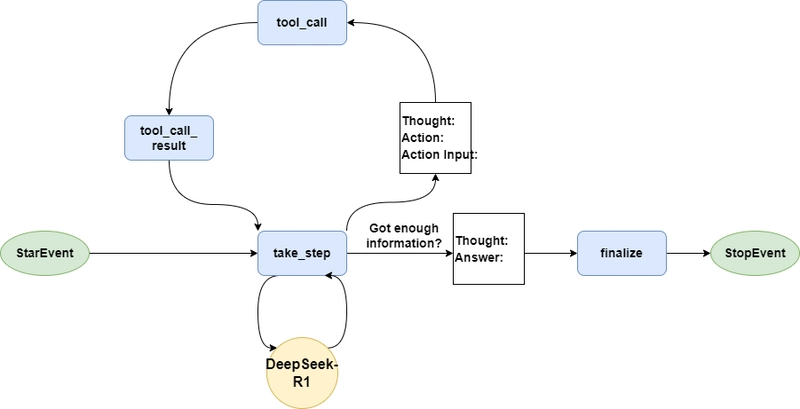

Today, I'll show you how to use LlamaIndex's ReActAgent to enable function call support for the DeepSeek-R1 model.

Enabling function call with ReActAgent

Why insist on function call support? Can't DeepSeek just be a reasoning model for generating final answers?

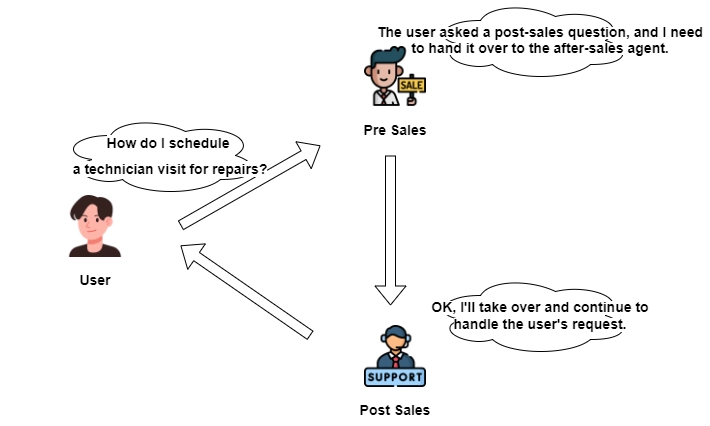

Unfortunately, no, because of the handoff tool.

AgentWorkflow's standout feature is its agent handoff capability. When an agent decides it can't handle a user's request and needs to hand over control to another agent, it uses the handoff tool.

This means an agent must support function call to enable handoff, even if it doesn't need to call any tools.
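To see why handoff depends on tool calling, it helps to model handoff as just another tool. The sketch below is my own toy illustration, not LlamaIndex's implementation: State, make_handoff, and the WriterAgent name are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class State:
    current_agent: str
    log: List[str] = field(default_factory=list)


def make_handoff(state: State, allowed: List[str]) -> Callable[[str, str], str]:
    """Build a handoff tool limited to the agents in `allowed`."""

    def handoff(to_agent: str, reason: str) -> str:
        if to_agent not in allowed:
            return f"Cannot hand off to {to_agent}; choose one of {allowed}"
        state.log.append(f"{state.current_agent} -> {to_agent}: {reason}")
        state.current_agent = to_agent
        return f"Handed off to {to_agent}"

    return handoff


state = State(current_agent="SearchAgent")
tools: Dict[str, Callable[..., str]] = {
    "handoff": make_handoff(state, ["WriterAgent"]),
}

# The model itself has to emit this structure, which is why an agent
# without any form of tool calling cannot hand off.
tool_call = {
    "name": "handoff",
    "kwargs": {"to_agent": "WriterAgent", "reason": "needs drafting"},
}
result = tools[tool_call["name"]](**tool_call["kwargs"])
print(result, "| current agent:", state.current_agent)
```

Control only moves when the model produces a well-formed call to the tool, so the model needs some way to express tool calls.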

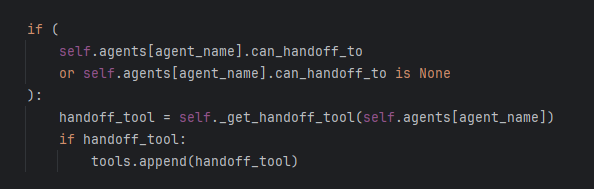

You might point out that, according to the AgentWorkflow source code, an agent whose can_handoff_to list is empty doesn't get the handoff tool attached, so no function call should be needed.

That's not the case. Under normal circumstances, we create agents based on FunctionAgent. FunctionAgent's take_step method calls the model client's astream_chat_with_tools and get_tool_calls_from_response methods, both of which rely on function calling. If our model doesn't support function calls, it'll throw an error.

So what should we do?

Luckily, besides FunctionAgent, AgentWorkflow offers ReActAgent, designed for non-function call models.

ReActAgent implements a "think-act-observe" loop, suitable for complex tasks. But today, our focus is on using ReActAgent to enable DeepSeek-R1's function call feature.
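Before moving to the real agent, here is a toy version of that loop so the mechanics are visible. Everything in it is an assumption made for illustration: the replies are scripted instead of coming from a model, lookup is a made-up tool, and the two regexes are simplified stand-ins, not the ones LlamaIndex uses.

```python
import json
import re

# Scripted replies standing in for a model; a real agent would call the LLM.
SCRIPTED_REPLIES = [
    'Thought: I need to look this up.\nAction: lookup\nAction Input: {"key": "roses"}',
    "Thought: I can answer without using any more tools.\nAnswer: Roses cost 2 each.",
]

PRICES = {"roses": 2}


def lookup(key: str) -> str:
    """Hypothetical tool: return a price from an in-memory table."""
    return f"{key} cost {PRICES[key]} each"


TOOLS = {"lookup": lookup}

# Simplified patterns for this sketch only.
ACTION_RE = re.compile(r"Action: (\w+)\s*\nAction Input: (\{.*\})", re.DOTALL)
ANSWER_RE = re.compile(r"Answer:\s*(.*)", re.DOTALL)


def run(question: str, max_steps: int = 5):
    """Loop think -> act -> observe until the reply contains an answer."""
    transcript = [f"Question: {question}"]
    replies = iter(SCRIPTED_REPLIES)
    for _ in range(max_steps):
        reply = next(replies)                      # think
        transcript.append(reply)
        if (m := ACTION_RE.search(reply)):
            name, kwargs = m.group(1), json.loads(m.group(2))
            observation = TOOLS[name](**kwargs)    # act
            transcript.append(f"Observation: {observation}")  # observe
            continue
        if (m := ANSWER_RE.search(reply)):
            return m.group(1).strip(), transcript
    raise RuntimeError("no answer within max_steps")


answer, transcript = run("How much do roses cost?")
print(answer)
```

The point is that the tool call travels as plain text, so any model that can follow a text format can drive it, with no native function-call API required.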

Let's demonstrate with a simple search agent script:

First, we define a method for web search:

    async def search_web(query: str) -> str:
        """
        A tool for searching information on the internet.
        :param query: keywords to search
        :return: the information
        """
        client = AsyncTavilyClient()
        return str(await client.search(query))

Next, we configure an agent using ReActAgent, adding the search_web method to tools. Since we only have one agent in our workflow, we won't configure can_handoff_to:

    search_agent = ReActAgent(
        name="SearchAgent",
        description="A helpful agent",
        system_prompt="You are a helpful assistant that can answer any questions",
        tools=[search_web],
        llm=llm
    )

We'll set up AgentWorkflow with the agent and write a main method to test it:

    workflow = AgentWorkflow(
        agents=[search_agent],
        root_agent=search_agent.name
    )


    async def main():
        handler = workflow.run(user_msg="If I use a $1000 budget to buy red roses in bulk for Valentine's Day in 2025, how much money can I expect to make?")
        async for event in handler.stream_events():
            if isinstance(event, AgentStream):
                print(event.delta, end="", flush=True)

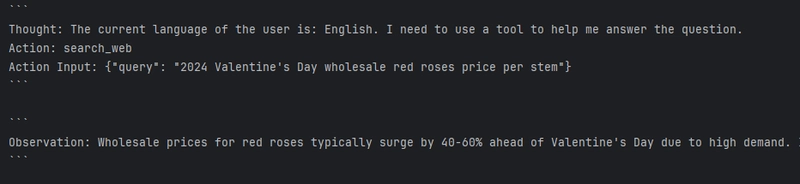

As seen, ReActAgent provides the method name search_web and parameters in Action and Action Input. The method executes successfully, indicating DeepSeek-R1 now supports function calls through ReActAgent. Hooray.

Enabling reasoning_content output with ReActAgent

If you run the previous code, you'll notice that it takes a long time from execution to result return. This is because, as a reasoning model, DeepSeek-R1 undergoes a lengthy CoT reasoning process before generating the final result.

But we extended our DeepSeek client for reasoning_content, and configured it in AgentWorkflow. So why no reasoning output?

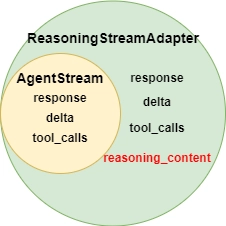

ReActAgent uses AgentStream for streaming model results. However, AgentStream lacks a reasoning_content attribute, preventing client-returned reasoning from being packaged in AgentStream.

Should we modify ReActAgent's source code, like we did with OpenAILike?

Actually, no. Earlier, we modified OpenAILike because the model client is a foundational module, and we needed it to parse reasoning_content from raw text.

But AgentStream has a raw property that includes the original message text, including reasoning_content. So, we just need to parse reasoning_content from raw after receiving the AgentStream message.

We'll create a ReasoningStreamAdapter class, accepting an AgentStream instance in its constructor and exposing AgentStream attributes with @property, adding a reasoning_content attribute:

    class ReasoningStreamAdapter:
        def __init__(self, event: AgentStream):
            self.event = event

        @property
        def delta(self) -> str:
            return self.event.delta

        @property
        def response(self) -> str:
            return self.event.response

        @property
        def tool_calls(self) -> list:
            return self.event.tool_calls

        @property
        def raw(self) -> dict:
            return self.event.raw

        @property
        def current_agent_name(self) -> str:
            return self.event.current_agent_name

        @property
        def reasoning_content(self) -> str | None:
            raw = self.event.raw
            if raw is None or (not raw['choices']):
                return None
            if (delta := raw['choices'][0]['delta']) is None:
                return None

            return delta.get("reasoning_content")

Usage is similar to this:

    async for event in handler.stream_events():
        if isinstance(event, AgentStream):
            adapter = ReasoningStreamAdapter(event)
            if adapter.reasoning_content:
                print(adapter.reasoning_content, end="", flush=True)
        if isinstance(event, AgentOutput):
            print(event)
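One detail worth noting: the client code earlier reads raw with attribute access, while the adapter indexes it like a dict. If you're unsure which shape you'll receive, a tolerant helper along these lines can read both. This is a sketch of my own; extract_reasoning and _get are hypothetical names, not part of LlamaIndex.

```python
from types import SimpleNamespace
from typing import Any, Optional


def _get(obj: Any, key: str) -> Any:
    """Read `key` from a dict or an attribute-style object; None if absent."""
    if obj is None:
        return None
    if isinstance(obj, dict):
        return obj.get(key)
    return getattr(obj, key, None)


def extract_reasoning(raw: Any) -> Optional[str]:
    """Return the first choice's delta.reasoning_content, or None."""
    choices = _get(raw, "choices")
    if not choices:
        return None
    delta = _get(choices[0], "delta")
    return _get(delta, "reasoning_content")


# Dict-shaped and object-shaped inputs give the same result.
as_dict = {"choices": [{"delta": {"reasoning_content": "hmm"}}]}
as_obj = SimpleNamespace(
    choices=[SimpleNamespace(delta=SimpleNamespace(reasoning_content="hmm"))]
)
print(extract_reasoning(as_dict), extract_reasoning(as_obj))
```

It also returns None instead of raising when a chunk has no delta or no reasoning_content key.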

Testing the final result with chat UI

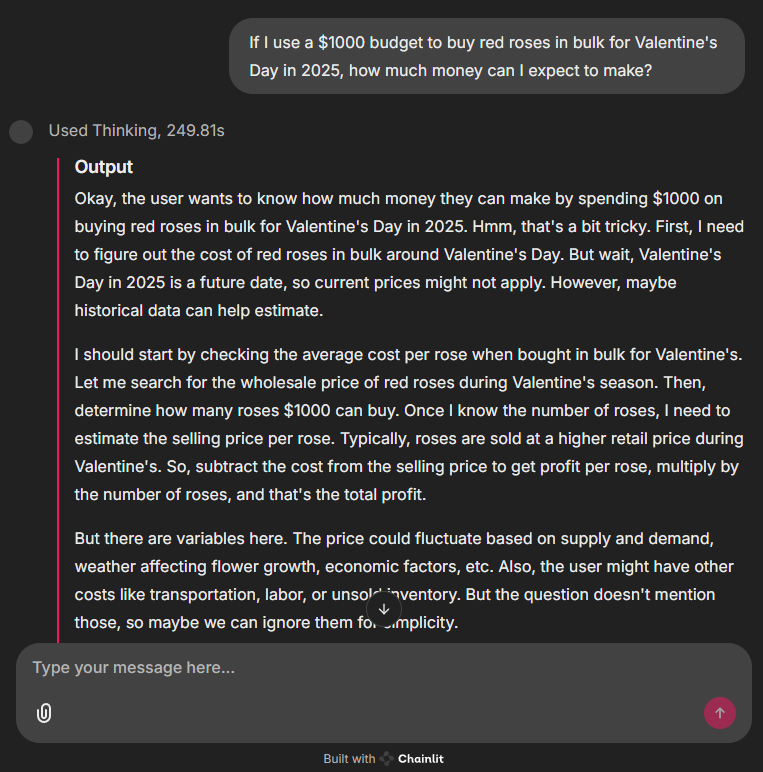

After modifying DeepSeek-R1 for function call and reasoning_content, it's time to test our work. We'll use the Chainlit UI to observe our modifications:

As shown, we ask the agent a complex task, and DeepSeek performs chain-of-thought reasoning while using the search_web tool to gather external information and return a solution.

Due to space constraints, the complete project code isn't included here, but you'll find it at the end of this article.

Further Reading: ReActAgent Implementation and Simplification

If we review the earlier implementation, we'll notice that agents based on ReActAgent generate a lot of output. This is normal because ReActAgent's reasoning-reflection mechanism requires multiple function calls to gather enough information to generate an answer.

But our goal was simply to find a way to make DeepSeek-R1 support function calls. So, is there a way to simplify the agent's output? To achieve this, we should first understand how ReActAgent works.

Reading ReActAgent's source code, you'll find that implementing function calls involves two modules: ReActChatFormatter and ReActOutputParser.

ReActChatFormatter

ReActChatFormatter is built around a system_prompt that follows the ReAct pattern.

The system_prompt has a {tool_desc} placeholder, replaced with current tools and descriptions at runtime.

There's also a {context_prompt} placeholder, replaced with the ReActAgent's original system_prompt.
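The substitution itself is plain string formatting. Here is a rough sketch of the idea with a two-line template and a renderer of my own; TEMPLATE and describe are hypothetical, and the text LlamaIndex generates for each tool will look different.

```python
import inspect


def search_web(query: str) -> str:
    """A tool for searching information on the internet."""
    return ""  # body is irrelevant here; only the metadata is used


def describe(tool) -> str:
    """Hypothetical renderer: name, signature, and docstring on one line."""
    doc = inspect.getdoc(tool) or ""
    return f"{tool.__name__}{inspect.signature(tool)}: {doc}"


# Tiny stand-in template with the two placeholders discussed above.
TEMPLATE = (
    "You have access to the following tools:\n"
    "{tool_desc}\n"
    "\n"
    "Additional rules:\n"
    "{context_prompt}"
)

prompt = TEMPLATE.format(
    tool_desc="\n".join(describe(t) for t in [search_web]),
    context_prompt="You are a helpful assistant that can answer any questions",
)
print(prompt)
```

Since the description is built from the function's name, signature, and docstring, a clear docstring on each tool directly improves what the model sees.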

When tool calls are needed, the system_prompt requires the model to return the following format:

    Thought: The current language of the user is: (user's language). I need to use a tool to help me answer the question.
    Action: tool name (one of {tool_names}) if using a tool.
    Action Input: the input to the tool, in a JSON format representing the kwargs (e.g. {{"input": "hello world", "num_beams": 5}})

Here, Thought holds the reasoning, Action holds the tool name, and Action Input holds the tool's arguments as JSON, for example {"input": "hello world", "num_beams": 5}.

The reasoning process may iterate several times until the model gathers enough information to generate an answer, returning this:

    Thought: I can answer without using any more tools. I'll use the user's language to answer
    Answer: [your answer here (In the same language as the user's question)]

If the model lacks information to answer, it returns:

    Thought: I cannot answer the question with the provided tools.
    Answer: [your answer here (In the same language as the user's question)]

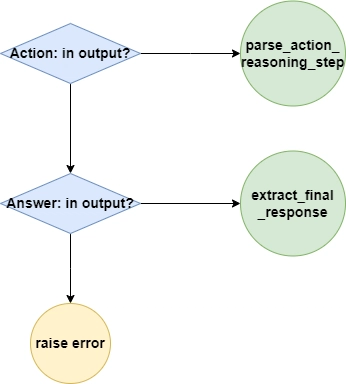

ReActOutputParser

ReActOutputParser is simpler: it parses the result of each ReAct step.

If it finds an Action: marker, it uses regex to extract and return the tool name and input.

    pattern = (
        r"\s*Thought: (.*?)\n+Action: ([^\n\(\) ]+).*?\n+Action Input: .*?(\{.*\})"
    )
    match = re.search(pattern, input_text, re.DOTALL)

If there's no Action: marker but an Answer: marker, it extracts and returns the content after Answer: as the final result.

    pattern = r"\s*Thought:(.*?)Answer:(.*?)(?:$)"
    match = re.search(pattern, input_text, re.DOTALL)
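To check how these two patterns behave, you can run them against sample replies. In the sketch below the patterns are copied from the excerpts above, while the replies and the parse dispatcher are my own simplified stand-ins, not the parser's actual code.

```python
import json
import re

# Patterns copied from the excerpts above.
ACTION_PATTERN = (
    r"\s*Thought: (.*?)\n+Action: ([^\n\(\) ]+).*?\n+Action Input: .*?(\{.*\})"
)
ANSWER_PATTERN = r"\s*Thought:(.*?)Answer:(.*?)(?:$)"

# Hypothetical model replies written to follow the ReAct format.
tool_reply = (
    "Thought: I need to search.\n"
    "Action: search_web\n"
    'Action Input: {"query": "rose prices"}'
)
final_reply = "Thought: I can answer without using any more tools.\nAnswer: Done."


def parse(text: str) -> dict:
    """Simplified dispatcher: prefer a tool call, else fall back to an answer."""
    if "Action:" in text:
        m = re.search(ACTION_PATTERN, text, re.DOTALL)
        if m:
            return {
                "thought": m.group(1).strip(),
                "tool": m.group(2).strip(),
                "kwargs": json.loads(m.group(3)),
            }
    m = re.search(ANSWER_PATTERN, text, re.DOTALL)
    if m:
        return {"thought": m.group(1).strip(), "answer": m.group(2).strip()}
    raise ValueError("reply matches neither format")


print(parse(tool_reply))
print(parse(final_reply))
```

The first reply yields the tool name and a kwargs dict ready to pass to the tool; the second yields only the final answer.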

Now we understand:

- To make the model call a tool, it must generate content with Action: and Action Input: markers carrying the tool name and input.

- To avoid function calls, have the model generate content with an Answer: marker instead of Action:.

Thus, we don't need to change ReActOutputParser, only pass a custom Formatter with a simplified system_prompt.
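As a starting point, a simplified prompt could look something like the sketch below. The wording is entirely my own guess at a shorter header, not the prompt shipped with LlamaIndex, and I haven't measured how well DeepSeek-R1 follows it. It keeps the Thought/Action/Action Input and Thought/Answer markers the parser looks for, plus the same placeholders.

```python
# Hypothetical simplified header; not the prompt shipped with LlamaIndex.
SIMPLE_HEADER = """\
{context_prompt}

You may call one of these tools: {tool_names}
{tool_desc}

To call a tool, reply exactly in this form:
Thought: why the tool is needed
Action: tool name
Action Input: JSON kwargs, e.g. {{"query": "hello world"}}

When no tool is needed, reply in this form:
Thought: I can answer without using any more tools.
Answer: your answer
"""

prompt = SIMPLE_HEADER.format(
    context_prompt="You are a helpful assistant that can answer any questions",
    tool_names="search_web",
    tool_desc="search_web(query: str) -> str: search the internet",
)
print(prompt)
```

Note the doubled braces around the JSON example: str.format treats single braces as placeholders, so literal braces must be written as {{ and }}, which is also why the original prompt excerpt shows them doubled.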

Due to space constraints, I won't implement a simplified ReActAgent. Why not challenge yourself to try it?

Conclusion

DeepSeek-R1, a powerful reasoning model, adds a reasoning_content key to OpenAI API-compatible output for reasoning processes. However, its lack of function call support limits its AgentWorkflow use.

In this article, I made small LlamaIndex modifications to let users access reasoning_content via AgentStream, and combined ReActAgent with DeepSeek-R1 to enable function call support.

I also explored ways to simplify ReActAgent's output by explaining its implementation.

What are your thoughts on integrating LlamaIndex and DeepSeek? Feel free to comment, and I'll respond promptly.


This article was originally published on Data Leads Future.
