Over the past few years, there has been considerable discussion about how AI might replace all our jobs. For a long time, I completely ignored the topic; most of it sounded like pure bu11SH1T. I had already studied neural networks and understood the basics of LLMs, Machine Learning, Deep Learning, Natural Language Processing, and Expert Systems. I had even tested a few generative AI tools, but none of them impressed me; they were buggy, full of hallucinations, and constrained.

Last year (2024), I revisited the subject with more focus. A lot had changed and major improvements had been made, but still nothing came close to replacing a real Software Engineer, despite what many headlines suggested at the time.

Since then, I’ve been following the field closely, running experiments and trying to understand how this tech could impact our daily work. That’s how this experiment came to life: I decided to use AI alone to redesign the visual theme of my website (rafaelhs-tech.com). The idea was simple: change only the look and feel of the site. Here’s how it went.

Tech Stack

I originally built my site in 2021 using Angular (version 7 or 8, can’t remember exactly). It was pretty basic, just a few components with a space-themed design.

The Original Version

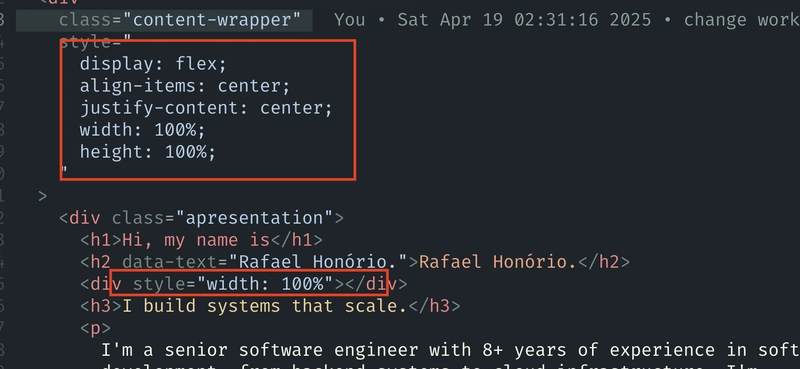

This was the original version. The journey with AI agents began using Claude 3.7. My first prompt went something like this:

“This is an Angular XX application, and I want to change the style to something with a cyberpunk theme. The folders are organized by components, and each component represents a page.”

Claude scanned the project, found the SASS files, and started generating new code… but then it began to hallucinate: it created React files inside an Angular project.

I tried correcting it, but it kept going off track, breaking the app, mixing files, outputting React code again and again. So, I scrapped everything and started over.

This time, I was more specific: I mentioned the Angular version, outlined the folder structure, highlighted key files, and gave a full project overview. The result? Slightly better visually, but still a broken UI. Misaligned pages, inconsistent styles, and worst of all: the code was a mess.

This kind of output was common. For those unfamiliar, mixing HTML, CSS, and JS like this is bad practice in modern web development.

It wasn’t exactly surprising, but it reinforced a key point: these agents still lack a real understanding of good development practices. I kept using Claude 3.7 for a while, but it got frustrating. I’d ask for one thing, and it would return something completely different. After more than 15 prompts just to align a single button, I realized it would’ve been faster to just fix it manually, but I was committed to simulating a non-technical user experience.

I also tested CodeLLM, but it had another issue: it didn’t retain long-term memory. If a prompt failed or hit the token limit, continuing in a new thread reset everything, with no memory of past context. That became really annoying.

Common Hallucinations with Claude 3.7:

- Generated things I didn’t ask for

- Couldn’t revert changes

- Deleted everything and regenerated the code in loops

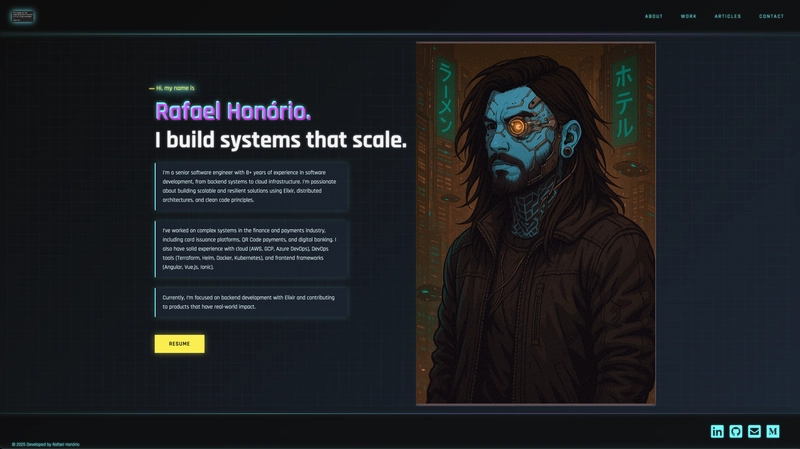

So I switched to Claude 3.5, which behaved more reliably. It followed instructions with better accuracy. I continued tweaking things and eventually got this result:

The Final Version

After about 40 prompts, I reached a decent visual result. But the code was still messy: CSS mixed with SASS, JS inline with HTML, weird file names, useless comments... nothing reusable. I decided to try other LLMs like Gemini 2.5, GPT o1, and GPT o4-mini. The outcome was just as frustrating, and each run produced noticeably different results (as expected).

Reflections

There’s no doubt AI is changing how we code, learn, and approach problem-solving. But replacing a software engineer? We’re still far from that.

Some notes from this experiment:

- I didn’t manually edit any code, even though I knew exactly what to change. That alone made a huge difference.

- The project was based on TS, SASS, and HTML, a well-documented and widely used stack, and the models still hallucinated a lot. Imagine this with Elixir, Clojure, or Crystal. I’ve tried it, and it doesn’t work well.

- Code quality was poor. It "worked", but required major rework.

- Vague prompts produce vague answers. That’s obvious in theory, but it’s a real problem for non-technical users.

- The total cost was around $14, mainly from long conversations and resets. If I hadn’t started over several times, it would’ve been around $8 or $9.

Final Thoughts

After all this, I can confidently say: we’re far from the singularity. LLMs are, at their core, text input → text output. Sure, there’s complex stuff in the middle, but no actual "intelligence" is happening.

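If you repeat the same prompt, results may vary. That’s because, in their usual configuration, LLMs are non-deterministic: they pick each next token by sampling from a probability distribution. Techniques like top-k sampling (keep only the k most likely tokens) and top-p sampling (keep the smallest set of tokens whose combined probability reaches p) narrow the choices, but randomness is part of the deal.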
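To make the sampling idea concrete, here is a minimal Python sketch of top-k and top-p filtering over a toy next-token distribution. Everything in it is an assumption for illustration: the four-word vocabulary, the scores, and the function names are made up, and real models work over vocabularies of tens of thousands of tokens with their own implementation details.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_filter(probs, k):
    """Keep the k most likely tokens, zero out the rest, renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = set(order[:k])
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]


def sample(tokens, probs, rng):
    """Draw one token at random, weighted by its probability."""
    return rng.choices(tokens, weights=probs, k=1)[0]


# Toy vocabulary and scores, invented for illustration only.
tokens = ["Angular", "React", "Vue", "Svelte"]
logits = [2.0, 1.5, 0.3, -1.0]

probs = softmax(logits)
rng = random.Random()  # unseeded on purpose: each run can pick different tokens

print([sample(tokens, top_k_filter(probs, 2), rng) for _ in range(5)])
print([sample(tokens, top_p_filter(probs, 0.9), rng) for _ in range(5)])
```

Running this twice will usually print different lists, which is the same effect I saw when repeating prompts. Setting `k=1` collapses the choice to the single most likely token, so the randomness disappears, but so does the variety.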

Yes, this raises the bar for simpler tasks, and that might affect junior devs, but everything depends on context.

Now imagine a real-world complex system with backend logic, BFF, Terraform, CI/CD pipelines, or microservices, where a small UI tweak could have unexpected side effects elsewhere. That’s a mess waiting to happen. I spoke with some friends in mobile development, and their experience was even worse. Honestly, web development might be the "least bad" scenario.

It’s up to us to adapt. I use AI tools every day now, for studying, writing docs, building PRDs, MRDs, diagrams, etc. But we’re still far from AI solving software engineering on its own.

If you enjoyed this reflection, leave a comment and share your own experience using LLMs.

Until next time!
