In Part 1 of this blog, we explored the LLM classification framework and key technical considerations, and got hands-on by accessing Meta's Llama-3 LLM through the Hugging Face platform and Mistral AI's Mistral LLM. In this blog, we continue by exploring more LLMs on different platforms through their APIs.

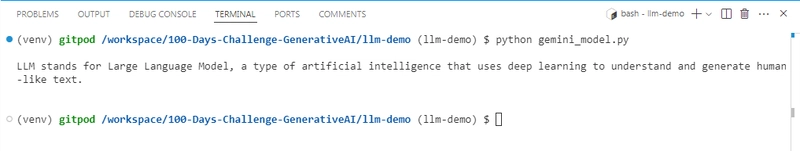

1. Google Gemini LLM

- Google Gemini (formerly Bard) is a large language model (LLM) that can understand, generate, and combine different types of information.

- It's a multimodal AI model that can process text, images, audio, video, and code. Here, we are using the `gemini-2.0-flash` model.

- Gemini models can be accessed via the web application or programmatically using the API. Get your API key here.

- Install the required library using `pip install google-genai`.

- Save your API key in a `.env` file and access it in your code (the examples in this post load it with `python-dotenv`, installed via `pip install python-dotenv`).

```python
import os

from dotenv import load_dotenv
from google import genai

load_dotenv()

GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")

client = genai.Client(api_key=GOOGLE_API_KEY)

response = client.models.generate_content(
    model="gemini-2.0-flash", contents="What is LLM? give a one line answer."
)

print()
print(response.text)
print()
```

Response:

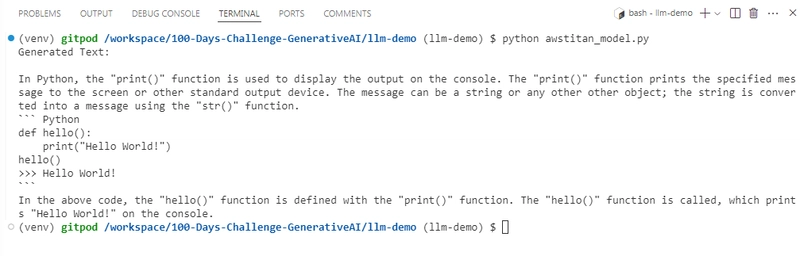
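Every example in this post reads its API key with `os.getenv`, which silently returns `None` when a variable is missing, so a typo in the `.env` file only shows up later as an authentication error from the provider. A small helper can fail fast instead. This is a minimal sketch; `require_env` is a hypothetical helper name, not part of any SDK.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or raise a clear error.

    os.getenv() returns None for a missing variable, which otherwise only
    surfaces later as a confusing authentication error from the API client.
    Empty values are rejected too.
    """
    value = os.getenv(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name!r} is not set. "
            "Add it to your .env file and call load_dotenv() first."
        )
    return value


if __name__ == "__main__":
    # Demo with a throwaway variable name (hypothetical, for illustration only).
    os.environ["DEMO_API_KEY"] = "not-a-real-key"
    print(require_env("DEMO_API_KEY"))
```

For example, `GOOGLE_API_KEY = require_env("GOOGLE_API_KEY")` could replace the `os.getenv` call in the Gemini example, and the same helper works for the AWS, Cohere, and Groq keys used later.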

2. AWS Bedrock

- AWS Bedrock (officially Amazon Bedrock) is a fully managed service from Amazon Web Services that provides access to a variety of high-performing LLMs, such as Claude, Llama 3, and DeepSeek, through a single API.

- It is a unified platform to explore, test, and deploy cutting-edge AI models.

- AWS also has its own set of pre-trained models, the Amazon Titan foundation models, which support a variety of use cases such as text generation, image generation, embeddings, and more.

- In the example below, we use Amazon Titan's `amazon.titan-text-express-v1` LLM.

- To access AWS Bedrock, you need:

  - An AWS account with access to the Bedrock service.

  - An AWS access key and secret key, created under your AWS account's security credentials.

  - The AWS SDK for Python, installed with `pip install boto3`.

```python
import os
import json

import boto3
from dotenv import load_dotenv

# Load environment variables
load_dotenv()

AWS_ACCESS_KEY = os.getenv("AWS_ACCESS_KEY")
AWS_SECRET_KEY = os.getenv("AWS_SECRET_KEY")

# Create a Bedrock client
client = boto3.client(
    service_name="bedrock-runtime",
    aws_access_key_id=AWS_ACCESS_KEY,
    aws_secret_access_key=AWS_SECRET_KEY,
    region_name="us-east-1",
)

# Define the model prompt and model id
prompt = "Write a Hello World function in Python programming language"
model_id = "amazon.titan-text-express-v1"

# Configure inference parameters
inference_parameters = {
    "inputText": prompt,
    "textGenerationConfig": {
        "maxTokenCount": 512,  # Limit the response length
        "temperature": 0.1,  # Control the randomness of the output
    },
}

# Convert the request payload to JSON
request_payload = json.dumps(inference_parameters)

# Invoke the model
response = client.invoke_model(
    modelId=model_id,
    body=request_payload,
    contentType="application/json",
    accept="application/json",
)

# Decode the response body
response_body = json.loads(response["body"].read())

# Extract and print the generated text
generated_text = response_body["results"][0]["outputText"]
print("Generated Text:\n", generated_text)
```

Response:

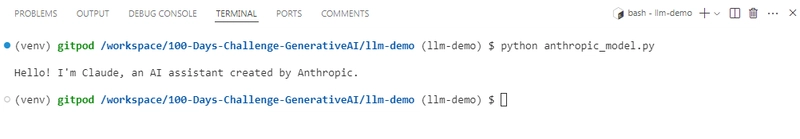
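The Titan request above sets `temperature` to 0.1 to control the randomness of the output. Conceptually, sampling-based decoders divide the model's raw scores (logits) for candidate next tokens by the temperature before applying a softmax, so a low temperature concentrates probability on the top token and a high temperature spreads it out. The sketch below illustrates only that idea with made-up logits; it is not Titan's actual decoding code, and providers may apply additional steps such as top-p filtering.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores (logits) into probabilities, scaled by temperature.

    Lower temperatures sharpen the distribution toward the highest-scoring
    token; higher temperatures flatten it, making sampling more random.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.1, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top token's probability close to 1 at a temperature of 0.1 and noticeably lower at 2.0, which is one reason a low value like 0.1 is a reasonable choice for a code-writing prompt like the one above.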

3. Anthropic Claude LLM

- Anthropic is an AI research company focused on developing safe and reliable AI models.

- Claude is the flagship LLM developed by Anthropic. Claude can generate text, translate languages, write different kinds of creative content, answer questions informatively, and more.

- To use Claude through Anthropic's own API, install the library with `pip install anthropic` and get an Anthropic API key from the console.

- You need to subscribe to a billing plan with Anthropic to use Claude this way. Since I have not chosen a billing plan, let's access Claude through the AWS Bedrock API instead. Luckily, I have a few AWS credits to use.

- You need an AWS account; follow the steps given in the AWS Bedrock section above to get started.

```python
import os
import json

import boto3
from dotenv import load_dotenv

# Load environment variables
load_dotenv()

AWS_ACCESS_KEY = os.getenv("AWS_ACCESS_KEY")
AWS_SECRET_KEY = os.getenv("AWS_SECRET_KEY")

# Create a Bedrock client
client = boto3.client(
    service_name="bedrock-runtime",
    aws_access_key_id=AWS_ACCESS_KEY,
    aws_secret_access_key=AWS_SECRET_KEY,
    region_name="us-east-1",
)

body = json.dumps({
    "max_tokens": 256,
    "messages": [{"role": "user", "content": "Hello, world"}],
    "anthropic_version": "bedrock-2023-05-31",
})

response = client.invoke_model(body=body, modelId="anthropic.claude-3-sonnet-20240229-v1:0")
response_body = json.loads(response.get("body").read())
generated_text = response_body.get("content")[0]["text"]

print()
print(generated_text)
print()
```

Response:

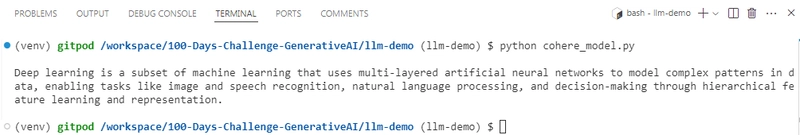
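The example above sends a single user message. Because the request body is plain JSON, multi-turn conversations and a system prompt only change how that body is built: earlier turns go into `messages` with alternating `user` and `assistant` roles, and the system prompt goes in a top-level `system` field. The sketch below assumes that request shape from Anthropic's Messages API on Bedrock; `build_claude_body` and `extract_text` are hypothetical helper names, and the conversation is made up for illustration.

```python
import json


def build_claude_body(messages, system=None, max_tokens=256):
    """Build a Bedrock request body for Anthropic Claude's Messages API.

    `messages` is a list of {"role": "user" | "assistant", "content": str}
    dicts; roles should alternate, starting with "user".
    """
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": messages,
    }
    if system:
        # The system prompt is a top-level field, not a message role.
        body["system"] = system
    return json.dumps(body)


def extract_text(response_body):
    """Join all text blocks from a decoded Claude response body."""
    return "".join(
        block["text"]
        for block in response_body.get("content", [])
        if block.get("type") == "text"
    )


# A hypothetical multi-turn conversation: earlier turns give the model context.
history = [
    {"role": "user", "content": "Hello, world"},
    {"role": "assistant", "content": "Hello! How can I help you today?"},
    {"role": "user", "content": "Say that again in French."},
]
body = build_claude_body(history, system="You are a concise assistant.")
print(body)
```

The resulting `body` can be passed to `client.invoke_model(body=body, modelId=...)` as in the example above, and `extract_text(json.loads(response["body"].read()))` returns the reply even if it spans several text blocks.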

4. Cohere LLMs

- Cohere is a Canadian AI company focused on LLM technologies for enterprise use cases.

- It offers an API that enables developers to build and deploy LLM-powered solutions for tasks like text generation, summarization, and semantic search.

- Cohere offers three primary model families for different use cases:

  - Command - these models are widely used for text generation, summarization, and copywriting, and also for RAG-based applications.

  - Rerank - these models are primarily used for semantic search. They reorder search results to improve their relevance to a given query.

  - Embed - these models generate text embeddings that improve the accuracy of search, classification, and RAG results.

- Sign up, if you have not already, to get your Cohere API key. Cohere offers a free account with trial keys; these are rate-limited and cannot be used for commercial applications. Cohere also provides production keys with pay-as-you-go pricing.

- To get started, get your trial key, save it as `COHERE_API_KEY=` in the `.env` file, and install the Cohere Python client using `pip install cohere`.

```python
import os

import cohere
from dotenv import load_dotenv

# Load environment variables from .env file
load_dotenv()

# Get the Cohere API key
COHERE_API_KEY = os.getenv("COHERE_API_KEY")

# Create the client
client = cohere.ClientV2(api_key=COHERE_API_KEY)

# Generate text
response = client.chat(
    model="command-a-03-2025",
    messages=[
        {
            "role": "user",
            "content": "Explain Deep Learning in one-sentence",
        }
    ],
)

print()
print(response.message.content[0].text)
print()
```

Response:

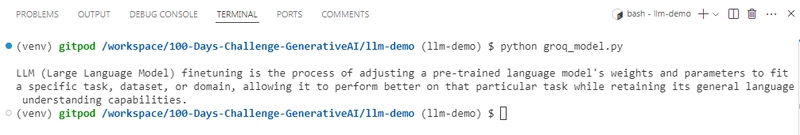
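The Embed models mentioned above turn text into numeric vectors, and semantic search then ranks documents by how close their vectors are to the query's vector, commonly using cosine similarity. The sketch below shows only that ranking step, with hand-made 3-dimensional toy vectors standing in for real embeddings; in practice the vectors would come from a Cohere Embed model, which this sketch does not call.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return document indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


# Toy vectors standing in for real embeddings, which have hundreds or
# thousands of dimensions.
query = [0.9, 0.1, 0.0]
docs = [
    [0.0, 1.0, 0.0],  # unrelated document
    [0.8, 0.2, 0.1],  # closely related document
    [0.5, 0.5, 0.0],  # partially related document
]
print(rank_by_similarity(query, docs))  # prints [1, 2, 0]
```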

5. Groq

- Groq is an American AI company that develops the Language Processing Unit (LPU), a chip and associated hardware designed to accelerate AI inference, with a focus on delivering fast, efficient, and accessible AI solutions across industries.

- Groq claims that its LLM inference is 18x faster than that of top cloud-based providers.

- Groq makes open models such as Meta's Llama 3.3 70B (used in the example below) available via its API.

- Register or log in here to get an API key, and install the Python client with `pip install groq`. Groq also provides a free plan to get started.

```python
import os

from dotenv import load_dotenv
from groq import Groq

# Load environment variables from .env file
load_dotenv()

# Get the Groq API key
GROQ_API_KEY = os.getenv("GROQ_API_KEY")

# Create the Groq client
client = Groq(api_key=GROQ_API_KEY)

# Generate a streamed response
completion = client.chat.completions.create(
    model="llama-3.3-70b-versatile",
    messages=[
        {
            "role": "user",
            "content": "What is LLM finetuning? Answer in a sentence",
        }
    ],
    temperature=1,
    max_completion_tokens=1024,
    top_p=1,
    stream=True,
    stop=None,
)

print()
# Print each streamed chunk as it arrives; a chunk's content may be None
for chunk in completion:
    print(chunk.choices[0].delta.content or "", end="")
print()
```

Response:

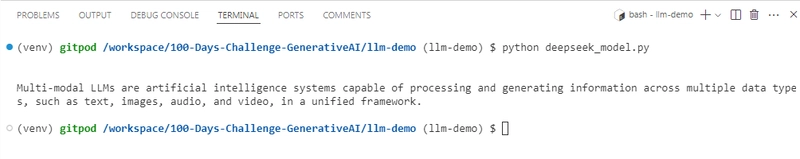
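With `stream=True`, the Groq example prints tokens as they arrive but never keeps the complete answer. A minimal sketch of collecting the stream is below. It assumes only the chunk shape the example already relies on (`chunk.choices[0].delta.content`, possibly `None`); `collect_stream` is a hypothetical helper, and `fake_chunk` builds stand-in objects so the code runs without an API key.

```python
from types import SimpleNamespace


def collect_stream(chunks, on_token=None):
    """Concatenate streamed chat-completion chunks into the full reply.

    Each chunk is expected to expose chunk.choices[0].delta.content, which
    can be None, hence the `or ""`.
    """
    parts = []
    for chunk in chunks:
        token = chunk.choices[0].delta.content or ""
        if on_token:
            on_token(token)  # e.g. print tokens as they arrive
        parts.append(token)
    return "".join(parts)


def fake_chunk(text):
    """Build an object shaped like a streamed chunk (for offline testing only)."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


fake_stream = [fake_chunk("Fine-tuning "), fake_chunk("adapts a model."), fake_chunk(None)]
reply = collect_stream(fake_stream, on_token=lambda t: print(t, end=""))
print()
print(len(reply))
```

With a real stream, `answer = collect_stream(completion, on_token=lambda t: print(t, end=""))` would print tokens live and return the full text for later use.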

6. DeepSeek

- DeepSeek is a Chinese AI company that develops large language models.

- DeepSeek models are open-source and cost-effective, with strong, competitive performance.

- DeepSeek-R1 is one of the models developed by DeepSeek. It is a reasoning model whose training relies heavily on reinforcement learning (RL).

- It is used for text generation and coding tasks.

- Using the DeepSeek API directly requires a paid plan, but the model can also be accessed via other providers such as AWS Bedrock, as the demo code below shows.

```python
import os
import sys

import boto3
from botocore.exceptions import ClientError
from dotenv import load_dotenv

# Load environment variables
load_dotenv()

AWS_ACCESS_KEY = os.getenv("AWS_ACCESS_KEY")
AWS_SECRET_KEY = os.getenv("AWS_SECRET_KEY")

# Create a Bedrock client
client = boto3.client(
    service_name="bedrock-runtime",
    aws_access_key_id=AWS_ACCESS_KEY,
    aws_secret_access_key=AWS_SECRET_KEY,
    region_name="us-east-1",
)

# Set the model ID, e.g., DeepSeek-R1 Model.
model_id = "us.deepseek.r1-v1:0"

# Start a conversation with the user message.
user_message = "What are multi-modal LLMs? Give the response in a sentence"
conversation = [
    {
        "role": "user",
        "content": [{"text": user_message}],
    }
]

try:
    # Send the message to the model, using a basic inference configuration.
    response = client.converse(
        modelId=model_id,
        messages=conversation,
        inferenceConfig={"maxTokens": 512, "temperature": 0.5, "topP": 0.9},
    )

    # Extract and print the response text. The content list may also hold
    # non-text blocks, so keep only the blocks that carry plain text.
    content_blocks = response["output"]["message"]["content"]
    response_text = "".join(block["text"] for block in content_blocks if "text" in block)
    print(response_text)
    print()

except ClientError as e:
    print(f"ERROR: Can't invoke '{model_id}'. Reason: {e}")
    sys.exit(1)
```
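DeepSeek-R1 is a reasoning model, and a Converse response can contain more than one content block, for example a reasoning block alongside the final answer, so `content[0]` is not guaranteed to be the answer text. The sketch below separates the two. It assumes reasoning blocks have the shape `{"reasoningContent": {"reasoningText": {"text": ...}}}`; please check this against the current Bedrock Converse API reference. `split_converse_content` is a hypothetical helper, and the sample response is hand-written for offline testing, not real model output.

```python
def split_converse_content(response):
    """Separate the final answer from any reasoning in a Converse response.

    ASSUMPTION: reasoning blocks look like
    {"reasoningContent": {"reasoningText": {"text": ...}}}; text blocks look
    like {"text": ...}. Any other block types are ignored.
    """
    answer_parts, reasoning_parts = [], []
    for block in response["output"]["message"]["content"]:
        if "text" in block:
            answer_parts.append(block["text"])
        elif "reasoningContent" in block:
            reasoning = block["reasoningContent"].get("reasoningText", {})
            reasoning_parts.append(reasoning.get("text", ""))
    return "".join(answer_parts), "".join(reasoning_parts)


# A hand-written response dict in the assumed shape, for offline testing.
sample_response = {
    "output": {
        "message": {
            "role": "assistant",
            "content": [
                {"reasoningContent": {"reasoningText": {"text": "The user wants one sentence..."}}},
                {"text": "Multi-modal LLMs process several data types, such as text and images."},
            ],
        }
    }
}
answer, reasoning = split_converse_content(sample_response)
print("Answer:", answer)
print("Reasoning:", reasoning)
```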

Conclusion

Each of these LLM providers offers unique capabilities, and choosing the right one depends on your use case. Whether you need general knowledge, multilingual support, safety-focused AI, or open-weight models, there is an LLM available for you.

This blog post has provided a starting point for exploring the exciting world of LLMs. By understanding how to access and use these powerful models, you can unlock a wide range of possibilities in natural language processing.

This is my small attempt to explore different LLMs from different platforms. With Python and simple API integrations, you can start leveraging the power of LLMs. Remember to consult the official documentation for each platform for the most accurate and up-to-date information. Happy coding!

References

- Repository for code files used in this blog.

- Amazon Bedrock: A Complete Guide to Building AI Applications by DataCamp

- AWS Documentation

- Claude on Amazon Bedrock - Anthropic Documentation
