We see the world as our brain interprets it. We can manage the fractal complexity of the world because the human brain (and, to a degree, animal brains too) relies on mental shortcuts to make sense of everything. Such shortcuts are called heuristics: mental tricks, often used subconsciously, that let us quickly find a good-enough solution to a problem.

Life would be impossible without heuristics, as we'd have to think through every choice as if it were the first time. Think of all the tiny decisions we make every day on autopilot, and imagine having to deliberate over each one consciously. It would be incredibly taxing, and we'd be mentally exhausted within a few hours.

However, heuristics don't always help us make good, objective decisions. Our brain can also develop mental shortcuts that make us see the world in a distorted, inaccurate, and illogical way. A harmful mental shortcut like this is called a cognitive bias.

Over the last six decades, behavioral psychologists have identified more than 200 cognitive biases, and the list keeps growing. Here are 12 of the most common and harmful ones. At the end of this article, I share the single most important tip for overcoming them.

Confirmation Bias

Confirmation bias leads you to favor information that confirms your pre-existing beliefs. It also leads you to discard the evidence that doesn't confirm those beliefs. Ever browsed Google to prove a point to someone, skipping over the links that seem to prove their point instead of yours? That's confirmation bias.

Everyone has some degree of confirmation bias. What matters is how strongly you cling to your existing beliefs despite evidence to the contrary; that determines how much confirmation bias skews your decisions.

A variant of this bias is the backfire effect, where evidence against your existing beliefs only makes you hold them more strongly. The modern world, unfortunately, is rife with examples of the backfire effect: the anti-vaxxer movement and the flat Earth society are two of the best-known.

Availability Heuristic

The availability heuristic (which should really be called the availability bias) leads you to place greater value on what comes to mind quickly. You base the likelihood of an event on how easily an example comes to mind. This is irrational, because the likelihood of something happening has nothing to do with how quickly you can recall it.

The availability heuristic explains why many people think flying is unsafe: a plane crash comes to mind far more readily than a safe flight. It also explains why people fear shark attacks, even though you're statistically more likely to be killed by a champagne cork than by a shark.

Self-Serving Bias

Do you blame external forces when something bad happens, but take credit when something good happens? That's the self-serving bias. You might think this is an easy one to spot, but that's not necessarily true. For example, it's self-serving bias if you think you won at poker because you're skilled, but lost because you were dealt a bad hand.

Another example: your favorite soccer team wins because they're a wonderful team of great players, but loses because the referee was bought and they should've been given a penalty. Self-serving bias, I'm sorry.

Anchoring Bias

The anchoring bias leads us to rely too heavily on the first piece of information we receive. Salespeople often use (exploit?) this bias by starting negotiations with a high price to make the actual price of the product more palatable. Be wary when someone gives you a price that seems prohibitively high, then offers a lower price as a "concession."

There's no reason you can't use this bias yourself. When negotiating salary, for example, open with a figure higher than what you actually want. This is your anchor, and it will make your actual salary request seem more reasonable.

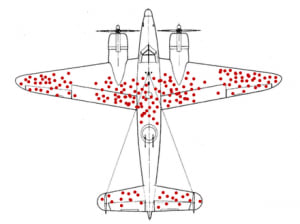

Survivorship Bias

Survivorship bias leads you to focus on what remains standing while overlooking what you can't see. For example, during the Second World War the US military wanted to better protect its planes against enemy fire. This led US scientists to propose reinforcing the areas of returning planes where they found bullet holes (see the pic above).

However, the Hungarian mathematician Abraham Wald argued they should do the exact opposite: reinforce the planes where there were no bullet holes, i.e. in the middle of the wings, the middle of the tail, and the cockpit. After all, the returning planes only showed where a plane could be hit and still make it home. The planes that were hit in the undamaged areas simply didn't make it back.

Zero-Risk Bias

If you prefer to eliminate all the risk in one part of a problem instead of reducing the overall risk of the whole problem, you're subject to zero-risk bias. It's why we decide to cut every unhealthy snack out of our diet instead of focusing on exercise and eating more vegetables. The latter is a much more effective route to better health, and yet many of us feel compelled to do the former.

The Bandwagon Effect

The Bandwagon Effect leads us to favor certain things or beliefs simply because other people do. While you can't have an original opinion on everything, it's dangerous to believe or do something blindly, without your own reasons for doing so.

This, to me, is particularly obvious in contemporary politics. It's no longer uncommon to hear a politician say they'll vote against a proposal of the opposing party without even having read the proposal. They vote against it because their party told them to.

This kind of dogmatic groupthink is dangerous. The modern world would benefit from more original thinkers who can respectfully form their own opinion (based on facts, of course).

Loss Aversion

In 1979, the behavioral scientists Amos Tversky and Daniel Kahneman found that losing something hurts us more than gaining the same thing makes us happy. It's why we hold on to things longer than we need them: losing hurts.

Loss aversion is related to the endowment effect: we value something more simply because we own it. Losing it then feels like losing more than it was worth to us before we owned it, which is why it hurts more.

Sunk Cost Fallacy

This is one of the better-known biases, partly because it's taught in economics. The Sunk Cost Fallacy leads us to stick with an irrational course of action just because we've already invested so much time and/or money in it.

This is in part because we want to avoid admitting that we've made a mistake or that we've wasted resources. Of course, the faster we can get over this fallacy, the fewer resources we'll waste.

The Law of Triviality

The Law of Triviality refers to the tendency of people in an organization to spend disproportionate amounts of time on trivial issues and details instead of spending that time on the things that will make a big difference in the organization.

This is why people can spend hours deliberating over the colors of a logo or the position of an element on a page. The Law of Triviality is also known as bikeshedding.

Clustering Illusion

Technical analysis traders will love this one. The clustering illusion is our tendency to see patterns in random events. We love linking events simply because they happen closely together (in time or space).

The clustering illusion is closely related to apophenia and pareidolia. We all experience it from time to time. It's a dangerous bias, particularly if you build your career on it. Correlation does not imply causation.

Conservatism Bias

The conservatism bias is our tendency to favor prior evidence over new evidence. It partly explains why the idea of a round Earth took so long to win acceptance: people had long assumed the Earth was flat, and their brains were reluctant to accept new evidence that it wasn't.

Conservatism bias also describes our tendency to revise our beliefs insufficiently in the face of new evidence: we might shift our beliefs a little, but not enough.

How to Avoid Being Biased

Let me start by saying that we're all biased in one way or another. No one is ever a fully rational human being. However, we can make an effort to be less biased.

Here's the one trick you need to know to become less biased: awareness. Being aware of a cognitive bias and keeping it at the front of your mind when you make a decision will help you recognize it and ultimately avoid it.

Of course, you can't do this with all biases at once. That's why I suggest focusing on one bias at a time, for a set period. If you keep a diary, turn it into a question you reflect on daily. For example:

- "Did I fall for the confirmation bias today?"

Eventually, you'll grow less susceptible to the bias that you're targeting. Over time, you'll start recognizing cognitive biases in yourself and others. Your brain will recalibrate and it will help you make better, more rational decisions.

Top comments (6)

Loved reading through this. I'm pretty sure almost everyone can relate to each and every one of these at some point in their lives.

Thank you for the post!

Good post! If you liked this article, you may also enjoy Gladwell's book: gladwellbooks.com/titles/malcolm-g...

There are a couple things missing here which are worth considering.

The first is that cognitive biases exist because they serve us well, as far as evolution goes. Confirmation bias is based on the idea that our first approximation of a situation is good enough and therefore we don't need to re-evaluate everything every time we see something new (as that would lead to analysis paralysis). That isn't to say that these aren't sometimes harmful, but rather that they actually serve purposes for us.

So the question isn't how to become less biased -- that is actually impossible. The goal is to catch the problems biases cause before they do damage. In practice this means developing a number of good habits:

Treat disagreement as a possible check on biases. When two people disagree about just about anything, that disagreement is a check on biases. If we treat it as an opportunity to explore the disagreement in the interest of a shared search for truth, we can avoid being victims of our own biases. This is even (or particularly!) true where the disagreement sparks strong emotions.

Seek out conversations with people who disagree with you. And treat these as checks on cognitive biases too.

Also there are a few cognitive biases that are missing here but can also be extremely damaging in some cases:

Continuation bias -- the tendency not to consider changing plans as circumstances change.

Expectation bias -- the tendency to filter perceptions based on what you expect to see/hear/etc.

Mission Syndrome -- Basically what happens when you combine continuation bias with stress. You get fixated on "completing the mission" to the exclusion of any other considerations.

A final point: a good way to avoid being bitten by these is to make a habit of stopping and re-evaluating whenever an action does not have the expected result.

Pretty accurate and interesting observations. Thank you for your knowledge.

Correlation does not imply causation, not the other way around

Ha, of course! I've corrected it in the article. Thanks for spotting!