My team uses a code review checklist to prevent stupid mistakes from causing us problems and wasting time. In this post, I want to share the reasons we decided to implement a code review checklist, what's on our checklist, how we created, use, and improve our checklist, and how it's improved our effectiveness.

Why create a code review checklist?

My team is on a mission to increase our effectiveness. We're following the Theory of Constraints model where we identify our constraint and find ways of overcoming it.

When we looked at the flow of stories across our sprint board, we immediately zeroed in on our review process as our bottleneck. Stories ended up in our "reopened" status (failed code review) more often than in our "done" status. And when we tracked why our stories failed code review, we found all kinds of reasons related to the quality of the code itself. But we were surprised to find just how often we made a stupid mistake. Examples include forgetting to run the unit tests or missing a requirement. In fact, "stupid mistakes" caused the vast majority of our failed code reviews.

But we'd also occasionally end up with a defect in production when we missed a step or performed an ineffective code review. It's really embarrassing to tell your boss that you took down the website because you messed up something simple.

I had read The Checklist Manifesto: How to Get Things Right (Atul Gawande) a few years ago and immediately recognized that this would be an excellent opportunity to use a checklist.

Benefits of a code review checklist

Checklists are a great way to ensure you cover all the steps in a complicated task, like a code review. Instead of having to remember what to look for and review the code, you can just review the code and trust the checklist to ensure you cover all the important points. As long as you actually use your code review checklist (and the checklist is well-constructed), you should catch the vast majority of stupid mistakes.

What's on our code review checklist?

Our code review checklist has evolved over time. Here's what my personal version looks like right now.

DO-CONFIRM

Code Review Checklist:

- scope - story is high priority, small, minimize creep, no stray changes, off-task changes added to backlog

- works correctly - specification is correct and complete, implementation follows specification, testing plan created, unit tests created and/or updated, master merged into branch, all changes tested, edge cases covered, cannot find a way to break code, cannot find any ways these changes break some other part of the system, all tasks 'done', ZERO KNOWN DEFECTS

- defensiveness - all inputs to public methods validated, fails loudly if used incorrectly, all return codes checked, security

- easy to read and understand - appropriate abstraction and problem decomposition, minimum interface exposed, information hiding, command-query principle, good naming, meaningful documentation and comments, fully refactored (use judgement with existing code)

- style and layout - all inspections pass, code formatter run, no smelly code styling, line length, styling consistent with project guidelines

- final considerations - YOU FULLY UNDERSTAND THE CODE AND THE IMPACT OF THE CHANGES YOU HAVE MADE AND IT... is unbreakable, is actually done, will pass code review, would make you proud to present your changes to other programmers in public, is easy to review, correct branch, no stray code, schema changes documented, master merged into branch, unit tests pass, manual testing plan complete and executed, all changes committed and pushed, pull request created and reviewed, Jira updated

My team's official checklist doesn't include the sixth item (final considerations). That's my own personal reminder of things that I find important to check at the very end of the code review.

Some people might say that our checklist contains way too much detail. That's probably a valid complaint. However, this is the checklist our team developed and it works for us.
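For readers who would rather keep a checklist like this next to their code, here is a minimal Python sketch of the DO-CONFIRM idea. It is not how my team runs the checklist (we use a Google form); the names `CHECKLIST`, `unconfirmed_items`, and `review_passes` are hypothetical, and the items are an abbreviated, illustrative selection from the list above.

```python
# Hypothetical, abbreviated version of the checklist above. The section
# names come from the post; the item selection is illustrative only.
CHECKLIST = {
    "scope": [
        "no stray changes",
        "off-task changes added to backlog",
    ],
    "works correctly": [
        "unit tests created and/or updated",
        "master merged into branch",
        "edge cases covered",
    ],
    "defensiveness": [
        "all inputs to public methods validated",
        "all return codes checked",
    ],
}


def unconfirmed_items(checklist, confirmed):
    """Return (section, item) pairs that have not been confirmed yet."""
    return [
        (section, item)
        for section, items in checklist.items()
        for item in items
        if item not in confirmed
    ]


def review_passes(checklist, confirmed):
    """A DO-CONFIRM review passes only when nothing is left unconfirmed."""
    return not unconfirmed_items(checklist, confirmed)


if __name__ == "__main__":
    # The author did the work first, then confirms each item afterwards.
    done = {
        "no stray changes",
        "off-task changes added to backlog",
        "unit tests created and/or updated",
        "edge cases covered",
        "all inputs to public methods validated",
        "all return codes checked",
    }
    for section, item in unconfirmed_items(CHECKLIST, done):
        print(f"NOT CONFIRMED [{section}]: {item}")
```

The point of the sketch is the shape of the process: you do the work first, then confirm every item, and anything left unconfirmed is listed explicitly instead of relying on memory.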

How we created our code review checklist

We created our checklist by looking at the steps in our code review process and the problems we were having getting pull requests to pass review. Then we gathered all those details into an initial code review checklist. We organized and refined our initial checklist over several weeks.

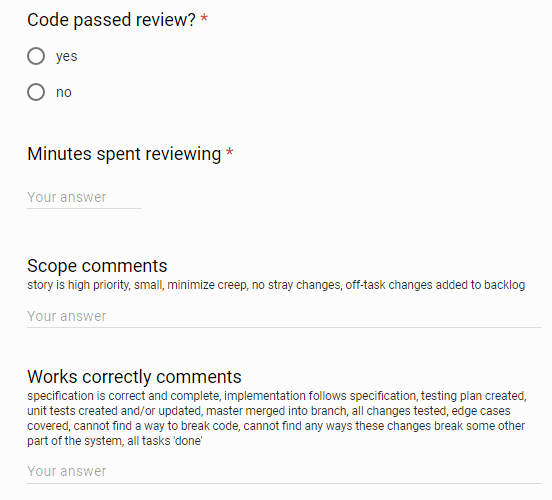

Once we were happy with our code review checklist we converted it into a Google form. We also included some additional fields for data that we wanted to capture about our code reviews such as:

- the name of the author and reviewer

- how long the code review took

- the outcome (pass/fail)

These fields are important because they give us the feedback we need to monitor the success of our code review improvement program.

Here's what the Google form looks like:

How we use our code review checklist

The author of a pull request does a self-review on his code using the code review checklist. He corrects any issues he catches and then releases his pull request for review.

The reviewer uses the Google form version of the checklist to guide the review and capture a summary of the outcome. We typically complete the Google form in under two minutes. We attach specific and detailed feedback for the author about the code itself in BitBucket.

The Google form stores the results of our code reviews in a Google spreadsheet. We look at the data every two weeks as part of our retrospective meeting where we evaluate the effectiveness of our code review process and see how it's changing over time.
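If you export a spreadsheet like this as CSV, a few lines of Python are enough to get the numbers for a retrospective. This is only a sketch under assumptions: the column names (`author`, `reviewer`, `minutes`, `outcome`), the sample rows, and the function name `summarize_reviews` are made up for illustration and are not our actual form fields or data.

```python
import csv
import io
from statistics import mean


def summarize_reviews(csv_text):
    """Summarize code review results from CSV text.

    Assumes hypothetical columns: author, reviewer, minutes, outcome,
    where outcome is "pass" or "fail".
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {"reviews": 0, "pass_rate": None, "avg_minutes": None}
    passed = sum(1 for r in rows if r["outcome"].strip().lower() == "pass")
    return {
        "reviews": len(rows),
        "pass_rate": passed / len(rows),
        "avg_minutes": mean(float(r["minutes"]) for r in rows),
    }


# Made-up sample data, only to show the expected input shape.
SAMPLE = """author,reviewer,minutes,outcome
alice,bob,30,pass
bob,carol,45,fail
carol,alice,15,pass
dave,bob,30,pass
"""

if __name__ == "__main__":
    print(summarize_reviews(SAMPLE))
```

Comparing the pass rate and average review time from one retrospective to the next is one simple way to see whether the process is actually changing over time.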

We've found self-review to be just as important as the peer review. It's surprising how many issues you catch when you step back from the details and subject your code to a checklist. Our checklist is especially good at catching things we forget to do. For example, everyone occasionally forgets to implement a requirement. Without a checklist to remind us, we find it hard to see what's missing from a pull request.

How we improve our code review checklist

Our code review checklist is a living document. We review it periodically and add or remove issues as necessary. We also encourage programmers to keep their own version of the code review checklist. Personalized checklists contain reminders that are important only to the person who wrote them (like section 6 is for me - see above).

Results

We couldn't be happier with the results of our code review checklist. We've made a serious dent in the number of code reviews that fail for stupid reasons. Every failed code review costs us a serious amount of time. And code reviews that fail for stupid reasons--like a missed requirement--are an extremely preventable form of waste. Furthermore, we conduct our code reviews more quickly and the process is more consistent because we use a checklist. We've significantly increased our effectiveness.

We also use our code review checklist to identify automation opportunities. When we first started doing code reviews we had lots of issues with code formatting, styling, naming, method complexity, etc. We quickly realized that these issues were costing us lots of time and that they would be worth standardizing/automating. So we configured a code formatting tool and various static analyzers. They run automatically right in our IDE. Automating those steps gave us a huge boost in effectiveness--really outstanding.

These days our checklist is showing us the high cost of our manual testing procedures. So we're putting more effort into testing automation in cases where we think there's a healthy ROI.

Further reading

There are tons of resources for programmers who want to start using checklists. But I'll just mention a few here:

- book: The Checklist Manifesto: How to Get Things Right (Atul Gawande)

- article by Atul Gawande: The checklist

- book: Code Complete (Steve McConnell) contains many checklists for various software engineering activities

- article: Why You Need a Checklist: Practical Takeaways from The Checklist Manifesto

- article: 11 proven practices for more effective, efficient peer code review

Wrapping up

Checklists are a proven way to increase the effectiveness of your code reviews. It took us maybe a dozen hours to get the initial version of our code review checklist up and running. And that tiny investment pays us dividends every day.

Do you use a code review checklist? Share your thoughts in the comments.

Latest comments (34)

Excellent, in my team the checklist or final validation is part of the "Done" for each task, we implement cross validation between members of the team, of course my team is around 5 or 6

Glad to hear it. My view of the importance of code reviews guided by checklists has grown considerably since I published this post.

Great Article !

We are doing code review on the basis of coding convention. And some of the issues can be solved by using the tools like codeclimate.

I am not even thinking about code review checklist, we will make those checklist and try it out ! Thanks :)

You're welcome.

Happy coding!

This is weirdly relevant, I just started working on a Node CLI tool for running through checklists because I also found myself making fewer dumb mistakes when I have some kind of checklist before commits or releases.

Here's the repo github.com/SammyIsra/ChecklistChecker

It's still not finished, but it may be worth checking out 😉 I intend to work on it this weekend, so I should have it done sometime then!

Awesome. Thanks for sharing.

Hi Blaine, great article. I think your proposal works, but it misses another use of PRs: instead of focusing on "approve this piece of code", you could use them as a tool to design, discover, and discuss the solution. I think CR is a way to communicate with my team. Anyway, I love the idea for a "merge to prod" proposal.

Of course you can. We do that too but it wasn't the topic of my post. Thanks for commenting.

That's great. I hope you find it as useful as I have.

Great article, thanks for sharing!

When you described how much time was wasted on failed code reviews, I was surprised. Why does a failed code review cause so much time to be lost compared to if they submitted the proper code the first time? Can you elaborate on that?

Thanks. And you're welcome.

I wrote a whole post on why failed pull requests kill productivity.

Thanks for a great article. I'm curious, how long on average does a code review take for your team? How do you handle situations of reviewing "large" PRs?

You're welcome.

The research says that after an hour the effectiveness of code reviews falls off a cliff. But we are running an experiment right now where we are shooting for a max code review time of 40 minutes and that's looking even better.

I wrote about it here.

Thanks for the article! My team finds value in all kinds of checklists, too. The team at my last place found it hard to handle those, especially the ones that should be filled out for every commit. So I went and made an online checklist that could easily integrate with source control and task trackers.

Feel free to use it, too - everyone can create public checklist templates. The results are not public, though:

guess-what.io/c

We've been looking for a great way to integrate the theory of constraints into our programming practices. Love the way you take the books that you read and create actionable steps

Thanks.

If you like Theory of Constraints, you are going to love "The Principles of Product Development Flow" (Donald Reinertsen). It's an amazing book for any software development team.

The basic premise is that agile/scrum/whatever methodologies borrowed concepts from lean and six sigma without realizing that the fundamental assumptions behind these manufacturing methodologies don't hold in product development. This book points out the bad assumptions and gives you remedies that will work for product development. Once you read it, you'll be shocked at how silly we've been for blindly borrowing the ideas from manufacturing.

I've got a whole series of posts planned around this book but they won't be ready for a while.

Thank you kindly for recommending a book that I suspect may blow my mind. I appreciate it!

You're welcome.

He's also got some great overview videos on youtube:

My team of 3 used a checklist very similar to this for over a year. We ended up abandoning it because most of the time the team was just zombie checking off the list. How do you avoid checklist fatigue?

We had the same problem (zombie checking things as done). We use two google forms and our "quality discussions" to solve the problem.

The first is mentioned in the post. The reviewer records the results of the code review checklist in the google form. During the sprint retrospective you basically get teased (gently) if you miss a checklist item in your self review. Stuff like, "Hey Bob, do you need a new checklist to pay attention when you do your self reviews?" That has been surprisingly effective.

Second, we track defects that we catch in production (and that are reported to us in production) in another google form. We look at each of these defects during our retrospective and do a root cause analysis. We want to know how that defect got by our process. Is it a problem with the process or did someone fail to follow the process? Tracking defects in production helps us detect if both the author and the code reviewer missed the same checklist item (it hasn't happened yet).

Third, we frequently talk about quality and how the cost of fixing a defect sky-rockets the more development stages it gets through before it's detected. We can afford to spend an extra few minutes or hours to make sure the code is good (or at least not obviously bad) because we aren't spending 50% of our time chasing defects in production. In other words, we are happy to invest an hour up front for the chance to save 10-20 hours downstream.
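To make that trade-off concrete, here is a small back-of-the-envelope sketch. It assumes a simple expected-value model that is not from our data: the extra review time is worth it whenever the chance that it catches a defect exceeds the up-front cost divided by the downstream cost. The function name `break_even_probability` is hypothetical.

```python
def break_even_probability(upfront_hours, downstream_hours):
    """Minimum chance of catching a defect for the extra time to pay off.

    Assumes a simple expected-value model: spending `upfront_hours` is
    worthwhile when p * downstream_hours >= upfront_hours.
    """
    if downstream_hours <= 0:
        raise ValueError("downstream_hours must be positive")
    return upfront_hours / downstream_hours


if __name__ == "__main__":
    # One hour up front versus 10 to 20 hours downstream, as in the reply.
    for downstream in (10, 20):
        p = break_even_probability(1, downstream)
        print(f"1h up front vs {downstream}h downstream: break-even at {p:.0%}")
```

Under that assumption, an extra hour pays for itself if it catches a defect somewhere between one time in ten and one time in twenty.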