Question: Would you consider cultural, racial, geographical, or gender-based biases in generative AI a feature or a flaw?

As of late, this question has been swirling around my mind.

Recently, I asked ChatGPT a fairly innocuous question:

Can you generate 10 random names?

As expected, this was an easy task for ChatGPT and I was pleased to see that the names generated had a decent amount of variety and, to the human eye, read as real human names.

I then asked ChatGPT:

Can you generate a table with the above 10 names with each name having a random location, age, and favorite food?

And in short order, ChatGPT created a table with the exact information I had requested.

But as I inspected the table more deeply, something struck me as...

...odd.

And as I reflected on what I observed, it became clear to me that how generative AI interprets prompts will have a powerful impact on how developers (and everyone else) will utilize this technology in the future.

Taking a Closer Look

Upon closer inspection, the randomly generated names, locations, AND favorite foods weren’t as "random" as I first thought.

Each name, location, and favorite food corresponded (to some degree) to a “cultural expectation” one might have about a person with that name, who lives in that location, or who would name that item as their favorite food.

For example, the favorite food of Aaron Kim from Seoul, South Korea, was bibimbap.

My first thought was, “Wow! That’s pretty cool that ChatGPT tried to match random names along geographical and cultural lines.”

And then my second thought was “Wait!? Did ChatGPT just randomize names along geographical and cultural lines, without me asking?”

And then it dawned on me: in its default state, ChatGPT operates with some level of inherent bias when considering my questions, thoughts, statements, or prompts.

An AI with Bias is an AI Indeed

For many, this isn’t a revolutionary thought or something that even slightly surprises them.

If anything, this has become a well-known issue that developers and engineers have had to combat when creating new technology for a global audience.

Why this particular instance of bias stood out to me was that ChatGPT, of its own "volition", determined the best way to answer my query was to embed cultural and geographical bias into its answer.

In some ways, I applaud ChatGPT for being clever enough to embed those types of biases, as that is a very human thing to do, but it’s something we as users need to remain cognizant of when using ChatGPT or any other generative AI technology.

It can be easy to view Generative AI as just a computer giving the most logical answer using human speech patterns. But generative AI is just as prone to biases as we are, because in many ways it was trained on our own biases.

The data used to train a generative AI to make it so informative, helpful and “human-like” fundamentally encapsulate our human tendencies and biases.

But the question becomes: what do we do with generative AI systems now that we know they may have some biases built in?

What Do We Do with Generative AI Now?

This is a somewhat complex question but one answer to this can be to ensure our questions, or our prompts, are specific enough to help guide our AI companions toward our desired output, despite the bias.

So let’s take, for example, the prompt I shared earlier and let’s ask ChatGPT to consider the biases that it unintentionally (or intentionally) baked into its answer.

Let’s try this:

Could you randomize the favorite foods in the table again without biasing the randomness to culture, nationality, race or gender?

At first glance, this question seems hyper-specific and somewhat “leading”, but that’s exactly the point.

As the user of ChatGPT, it is our job to be explicit in our prompts and requests.

Consider my previous question:

Can you generate a table with the above 10 names with each name having a random location, age, and favorite food?

My expectation from ChatGPT when asking for something “random” was for ChatGPT to select random locations, ages, and foods for the previous randomized names with no consideration for the names it had previously generated.
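To make that expectation concrete, here is a minimal Python sketch of what "random with no consideration for the names" could look like: each attribute is drawn independently from a pool, so a name never influences its location or food. The pools below are illustrative placeholders (only "Aaron Kim", "Seoul, South Korea", "bibimbap", and "roasted vegetables" come from this article), and the 18 to 90 age range is an assumption of mine, not something ChatGPT used.

```python
import random

# Illustrative pools. Only the first entries come from the article;
# the "Person/Location/Food" placeholders are hypothetical stand-ins.
NAMES = ["Aaron Kim", "Person B", "Person C"]
LOCATIONS = ["Seoul, South Korea", "Location B", "Location C"]
FOODS = ["bibimbap", "roasted vegetables", "Food C"]


def random_profiles(names, locations, foods, rng):
    """Build one row per name, drawing every attribute independently."""
    rows = []
    for name in names:
        rows.append({
            "name": name,
            # The name is never consulted when picking these values.
            "location": rng.choice(locations),
            "age": rng.randint(18, 90),  # assumed age range
            "favorite_food": rng.choice(foods),
        })
    return rows


if __name__ == "__main__":
    rng = random.Random()  # pass a seed, e.g. random.Random(42), to reproduce a run
    for row in random_profiles(NAMES, LOCATIONS, FOODS, rng):
        print(row)
```

With this kind of sampling, a pairing such as Aaron Kim, Seoul, and bibimbap can still show up, but only by chance rather than because the name steered the other choices.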

What ChatGPT interpreted from my prompt was that I wanted those random items to be contextualized to the names and cultural leanings those names represent.

It may have come to that conclusion because when asked similar questions in the past, it received positive feedback when aligning with those biases.

And understand, ChatGPT didn’t do anything wrong; it just did what it was designed to do. It used its past experiences to predict the outcome that would most likely fit my needs.

So what was the outcome from the clarification of my previous prompt:

Could you randomize the favorite foods in the table again without biasing the randomness to culture, nationality, race or gender?

The above outcome more closely reflects my original expectation of “randomness” for my requested attributes.

When ChatGPT was given more focused direction, it was able to give me results that more readily satisfied what I desired.

For example, the favorite food of Aaron Kim from Seoul, South Korea, is now roasted vegetables.

Managing Bias Using Your Wording

So take another moment to consider why the word “random” may not have been sufficient to get the results I expected or wanted.

One way to consider this is to think about how ChatGPT interprets the words/phrases below:

- Best

- Worst

- Most Efficient

- Fastest

- Good

- Bad

- Strongest

- Most Influential

Though it's hard to predict exactly how ChatGPT would interpret these words, it's fair to assume it would interpret them similarly to how we as humans would. It would make some assumptions about what we meant by those particular words or phrases and would try its absolute best to give results that reflect those assumptions.

Take a moment to consider what makes any one thing the “best”.

If I asked you right now, “What is the best Seafood in your area?”, how would you determine the answer to that question?

When it comes to “best” you might consider the price of the seafood, the location of where the seafood could be purchased, the freshness of the seafood, and you might even consider your personal experience with the different types of seafood you have had in your area. Then, after considering that information, you would likely give a few seafood options that rank amongst the "best" or just one place that stands out to you as the “best”.

So let’s see how ChatGPT answers a similar question:

What is the best Seafood in Baton Rouge, Louisiana?

NOTE: I chose Baton Rouge, Louisiana because I lived there for a time and Louisiana, in general, is known for its overall quality seafood offerings in the US.

If you have used ChatGPT before, you shouldn't be surprised by this type of response.

But my question to you is: how did ChatGPT determine what the “best” seafood is?

Well, let's ask ChatGPT:

How did you determine what was the best seafood in Baton Rouge, Louisiana?

In its own words, ChatGPT expresses that it doesn’t have its own preferences, but it uses its training data to determine what “best” means. It explains a bit more about how it arrived at those recommendations and how “best” may differ from person to person.

This bias that ChatGPT has toward what is “best” is not inherently bad, as I mentioned earlier, but if you are not aware of how ChatGPT uses its bias to respond to or answer questions, you may not realize that the response you were given is not the "objective truth".

But What Does This Have to do with Me as a Developer?

So how does this apply to you as a developer or engineer?

In plain and simple terms, understanding the biases of the generative AI system you are using, and avoiding terms that force the AI system to over-interpret your intent, will help you immensely when using generative AI tools.

It's easy to assume that asking ChatGPT for help writing code won't be heavily impacted by bias, but consider that code is written by humans, and humans carry preferences and habits that directly shape their technical approach.

Coding/programming is less of a science and more of an art, and it is important to understand that bias has an impact on how we as developers write and think about our code.

So here are a few quick tips to help with your prompting:

1) Do not use words and phrases like “best”, “worst”, or “most XYZ” without understanding how the generative AI is interpreting those words.

It may seem obvious to you how the AI system may be understanding those words or phrases but if the meaning of a word is too vague or overly biased, you may get results you didn’t expect.

2) Ask the Generative AI system to explain why it gave you that response.

Even with seemingly very well-crafted questions or prompts, the AI system is likely still using some form of bias or inference to provide a quality response. After getting a response that seems reasonable, try and get some context on how and why the system came to that particular conclusion.

NOTE: There is no way to be 100% sure that the response a Generative AI system gives you about why it answered a prompt in a certain way is completely accurate. The AI system may, for example, answer your question, not with the truth, but with what it assumes you would like to know. Please be aware of this.

3) Do NOT assume prompting for technical or coding responses makes you immune to bias.

Just because you are asking ChatGPT to generate code in a specific language with specific requirements for a specific purpose does NOT mean you are getting a completely objective, unbiased response. Fundamentally, generative AI is based on data accumulated from the past and its responses reflect that. Be aware that the code it generates now is based on code written in the past and may reflect technical and coding biases that may not positively impact your current work. Past paradigms of approaching a technical problem may not be the direction needed for future progress.
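As one way to put tip 1 into practice, here is a small, hypothetical pre-flight check that flags words in a prompt that leave the AI system guessing at your intent. The term list is simply the set of words discussed earlier in this article, plus "random"; `find_vague_terms` is a name I made up for this sketch, and the whole thing is a reminder to rephrase, not a real measure of bias.

```python
import re

# Words and phrases from this article that push the model to infer intent.
VAGUE_TERMS = [
    "best", "worst", "most efficient", "fastest",
    "good", "bad", "strongest", "most influential", "random",
]


def find_vague_terms(prompt: str) -> list[str]:
    """Return the vague terms that appear as whole words in the prompt."""
    lowered = prompt.lower()
    found = []
    for term in VAGUE_TERMS:
        # Word boundaries keep "good" from matching inside "seafood".
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            found.append(term)
    return found


if __name__ == "__main__":
    prompt = "What is the best Seafood in Baton Rouge, Louisiana?"
    for term in find_vague_terms(prompt):
        print(f'Consider spelling out what you mean by "{term}".')
```

If a term is flagged, try replacing it with the criteria you actually care about, such as price, freshness, or distance, before sending the prompt.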

Bringing it all Together

In short, the biases that Generative AI may have when answering particular prompts or questions are not inherently bad, or even a flaw. If anything, because these systems are created by humans with their own biases, this is to be expected.

It is our responsibility, as good stewards of these technologies, to use them with the understanding that they are in no way fully objective and fundamentally must work off of some form of bias to be optimally effective.

When using these technologies, continue to remember to ask yourself how and why a system came to its conclusion. Also, we should always aim to be as specific and direct as possible in our prompts to help guide our AI associates to the best possible response and to minimize confusion.

In a world that is fundamentally shaped by our individual perspectives and biases, it is only natural to assume the things we design and create carry those biases along with them.

If we continue to confront those biases head on and are honest about how they impact what we do, specifically in these emerging spaces of generative AI, we will continue to have a bright future where we accomplish things we never thought possible.

What are your thoughts about the bias in Generative AI? Please share them in the comments!

All the best,

Bradston Henry

Cover Photo generated by DeepAI.org

Image Prompt: An AI pondering the answer to a difficult question

Top comments (9)

Excellent article, Bradston! I so appreciate reading a thoughtful, nuanced take on this topic.

I can't help but notice that despite the relative cultural variety of surnames in your name example, all of the first names are pretty traditional Western/Anglo names. The surnames are fairly Anglicized, too, in that they're all easily pronounceable or familiar to English speakers. 👀

Here are my thoughts: AI is created by humans who inherently have bias, so of course AI itself will be biased. And just as it's our responsibility as people to identify and mitigate our biases in order to create the just society we owe to each other, it's our responsibility as developers, engineers, researchers, writers, and consumers to identify and mitigate biases in our programs.

I absolutely agree @erinposting ! As with anything we create, we absolutely ingrain our personal bias into the AI we create. To ignore the biases in any system is problematic to those we would serve with the tech and products we create. It's easy to assume tech is somehow "objective" and miss the opportunities to create a more just society.

Also, good point on the first names. It really struck me that the names were westernized by default. I was really expecting random names to be like "Gorgon Laschurcy" or names that I, personally, wouldn't be able to assign to a particular identity or culture.

It makes me wonder: If I was a non-western user of ChatGPT, would it have "localized" the random names to be less Western/Anglo?

Unmentioned but potentially significant input bias: you're using English with Latin characters! "As a large language model", it might say, "I'm just pattern-matching the most likely next tokens, so what else am I supposed to do?".

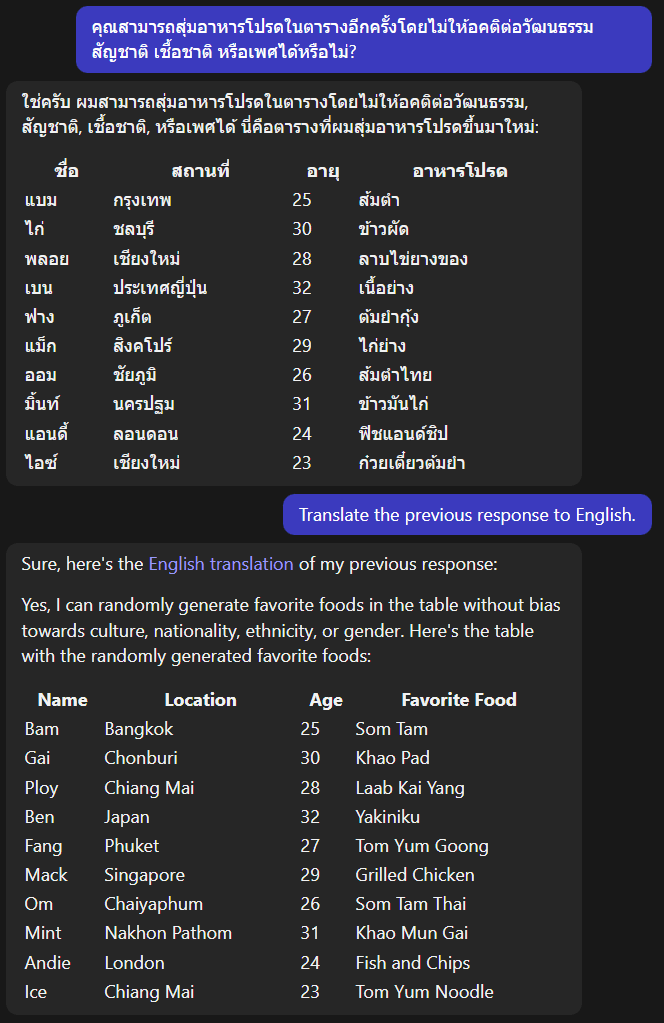

If you try the same request in Thai, use Google Translate or something, here's how that looks -

It's still got Andie in London liking Fish'n'Chips, but the variety starts a lot more Thai-focused - as expected, given Thai character input tokens.

Fun to think about :)

That is a great point and SO interesting to see how ChatGPT handled that. 😲

It makes it even more clear to me that it's near impossible to avoid some form of "bias" from the model.

One thing I'd like to investigate a bit further is how it handles creating code depending on prompts and to what degree it is biased.

This now gives me the idea to investigate using non-latin characters but the same prompt.

I'm very much intrigued. 🤔

Thanks so much for this piece, Bradston. I asked ChatGPT4 to respond to the article and here's what they had to say:

_"It's important to recognize that bias in generative AI is a complex issue. While it can sometimes be seen as a flaw or a limitation, it can also be seen as a feature that reflects the human biases present in the data used for training these AI models. As developers and users of AI systems, it's crucial to be aware of these biases and work towards addressing them.

Being explicit and specific in our prompts can help in guiding AI systems to generate more desired and unbiased results. In addition, understanding the potential biases in AI-generated content, even in technical or coding responses, will allow us to better evaluate the information and make more informed decisions.

Ultimately, confronting biases in generative AI and acknowledging their impact on the technology is essential for responsible AI development and usage. By doing so, we can continue to advance AI technology and harness its potential in various fields, while ensuring that it remains fair, unbiased, and inclusive."_

Agreed, ChatGPT4.

I, for one, am dedicated to working alongside AI to actively eliminate bias, advance technology, and transform the world for the better, paving the way for a more inclusive and equitable future.

Well said ChatGPT4. I honestly think ChatGPT said it better than I did. 😅

And thank you for that commitment to work alongside AI to reflect the best that humanity has to offer. Bias isn't going away but we can absolutely still use AI to accomplish incredible things.

Thank you for your kind words!

It's true that while bias may be an inherent part of AI, being aware of it and working together is crucial in leveraging AI's potential to its fullest.

Awesome stuff! As someone only recently starting his Generative AI journey, Takeaway #2 was a big one for me. I would very much like to ask ChatGPT how it interprets more of my prompts to deepen understanding of both underlying bias, & how contextual my questions are when solving problems.

Me and a friend were having a deep discussion about this recently because it's somewhat "dubious" to even know if you can trust the response ChatGPT has in regards to its own reasoning for coming to conclusions.

Though I don't think there is any way to be sure that ChatGPT isn't "lying" to you (in as much as an AI can lie), I do think it's worth asking the question.

At a minimum, it's worth asking yourself the question, ya know? I think having any personal ambiguity on how a Generative AI may interpret a prompt is enough to investigate if there is a more direct and clear way to phrase the prompt.