Hi, I’m Viktor. Twelve years ago, I joined a web studio in my hometown. That day marked the start of my career as a developer. Back then we had no Git, no CI/CD, no test beds… And I saw how that held back the growth of our team and business. We had to do a lot of trial and error, discover new practices, and implement them all on our own. Since then, I’ve been a senior dev at a Russian financial holding and a German B2B startup. I’ve also been a team lead on a food tech project and a CTO of educational projects for the Russian and LatAm markets… And in most of those projects, I saw similar issues. I recently moved to Israel and found a position as a consultant for a startup. Guess what I found? Right.

I wrote this piece to offer you some examples of businesses shooting themselves in the foot (while any startup’s goal is to “run fast”). I’m also going to provide a chart I use myself to help fix those issues. The chart was actually the reason for writing this text. You can find a link to it at the end of the article.

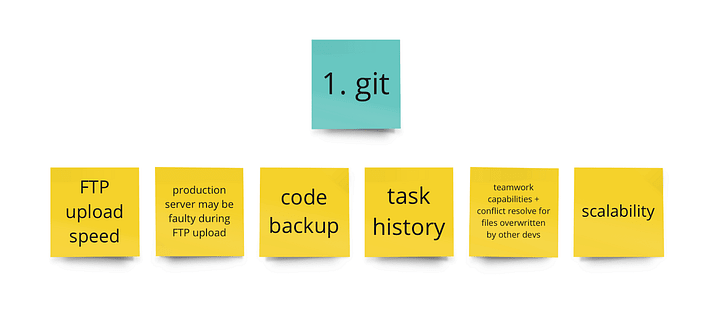

No Git; development done in production

A classic case: a project starts with a single dev. They make something, upload it via FTP, test it, and fix it right in prod. They might even have repositories, but those are often empty or outdated.

Then, the business scaling part begins, with more devs joining. A most curious thing often happens next… For instance, one such dev who worked alone and ignored Git managed to wipe a couple of days’ worth of my work. Fortunately, I use a version control system in every project I do. You can probably guess what would’ve happened to my code otherwise. I wanted to sell the “you need Git for backups and better scaling” idea to the team, so I made some slides and presented them at a Friday meeting over some pizza. It worked! Later, when our prod went down and the second dev was unavailable, I could at least see what they’d changed. The fix didn’t take much time.

No code review

I once joined a project that had no original devs left. One of my tasks was to change the pagination method. Doesn’t seem too complicated, right? Just open the function and swap it out in a couple of minutes. But I couldn’t do that. My predecessor used a “sophisticated” approach: wherever pagination was needed, they copied the same piece of code and tweaked the copy. That left 200 occurrences to correct by hand, which took 3 days. I think any code review done by a colleague would’ve prevented this from happening in the first place.

Thankfully, I had some time to sit down, think it through, and improve it.

Here’s another case where having no code review was a major blunder. It was a new project. The CRM, the foundation of the whole business, was getting slow. It needed a fix, and the management was getting pushy. The dev decided to skip all the standard practices: code review, manual testing, even autotests. They just pushed their code to Git and released it. The CRM simply went down. It was a common human mistake made under heavy stress and with a tight deadline. Having someone else check the commit would’ve been way safer. I believe you CAN skip the autotests if they’re taking too long and you have a critical situation on your hands, but you should never skip code review.

No CI/CD

In a different project, the team released manually via git pull. The website would regularly go down. For example, they once simply forgot to run the package install. Support, the business side, and even the users began questioning the team. Introducing CI/CD worked like a charm.
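To make the “forgot the package install” failure concrete, here’s a minimal sketch of what any CI/CD pipeline boils down to: a fixed list of steps that always run in the same order and stop at the first failure. The concrete commands (`npm ci`, `npm run build`) and the names `RELEASE_STEPS`, `run_command`, and `release` are my assumptions for illustration; the project in the story may have used a different stack and different tooling.

```python
import subprocess
from typing import Callable, Sequence

# Hypothetical release steps. Only `git pull` and a "package install" come
# from the story; the exact commands below are assumptions.
RELEASE_STEPS: list[list[str]] = [
    ["git", "pull", "--ff-only"],  # fetch the new code
    ["npm", "ci"],                 # the step a human once forgot
    ["npm", "run", "build"],       # assumed build step
]


def run_command(command: Sequence[str]) -> int:
    """Run one command and return its exit code."""
    return subprocess.run(list(command)).returncode


def release(
    steps: Sequence[Sequence[str]],
    runner: Callable[[Sequence[str]], int] = run_command,
) -> list[str]:
    """Run every step in order and stop at the first non-zero exit code."""
    completed: list[str] = []
    for step in steps:
        exit_code = runner(step)
        if exit_code != 0:
            raise RuntimeError(f"step failed ({exit_code}): {' '.join(step)}")
        completed.append(" ".join(step))
    return completed
```

Calling `release(RELEASE_STEPS)` on a server would run the real commands. The point is not the script itself but that the list of steps lives in code, so nobody has to remember it under pressure.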

In another case, having Git and CI/CD played a major role in meeting the good old “release faster” demand. We needed to make a German version of the website ASAP, as a landing page for potential investors. With an automated deploy process and a way to quickly spin up new test servers with CI/CD, we cut off the fluff, created dedicated DBs, uploaded the translations, and had a test bed for the investors in no time.

No transparency in task descriptions

I “love it” when the task is buried somewhere in 150 chat messages. Or worse, in DMs.

We once had a big chat with call center employees. We had a QA team whose task was to screen that chat for issues, ask for additional details, and create a task in a tracker if they managed to find a bug. However, the call center employees quickly realized that writing in the chat meant answering all those standard questions, sending screenshots or links, and doing other “complicated” things. If they wrote directly to some “tester Jack” instead, Jack would usually fix it much quicker via admin access.

QA was confident they were doing the right thing, but in reality, we were losing money on all the manual labor and missed bugs. All hell broke loose once a certain employee left the company. We were flooded with an influx of new info and tiny, pesky bugs that took a lot of urgent effort to fix.

Once, though, we managed to prevent that from happening. We had this “major” task that involved an integration with a big client. The manager decided to bypass all the processes and went to a dev directly to give them the task verbally: “Just do what I tell you, don’t overthink it”. Thankfully, the team was already used to following the standard practice. We discussed the task at a daily meeting and added it to the tracker. The research stage led to quite a few uncomfortable questions. As it turned out, we could’ve leaked our entire commercial database if we’d followed this “don’t overthink it” way.

No “1 task = 1 code branch” rule

We were building a large new piece for a system, with paying clients expecting it. The launch promised a good profit, and each day of delay was eating into that profit. So, we decomposed the task into smaller, independent pieces so that we could release it faster and add the extra features along the way.

I created 10 tasks in the tracker, but the team decided to do them all in a single branch for some reason. So, the deadline passed, and we still couldn’t release: one of the minor tasks had a critical bug that couldn’t be fixed quickly. Had we had separate branches, we could’ve released the other 9 tasks and launched the system. Thankfully, the team learned from this and switched to my proposed method. When I joined a different team, however, we had to go through this “enlightening” experience all over again.

No autotests introduced with growth

Some startups have a long life and build up legacy practices. Here’s how I started using autotests: I joined projects with some history where testing was hard. I wanted something automatic to make sure my changes wouldn’t break anything.

I knew a dev once who wanted to “rewrite everything from scratch” instead of writing autotests for legacy code. There was no convincing him otherwise, so I gave him a piece of code to rewrite. Once he released it, some non-crucial functionality of the website went down. The new code had to be discarded because restoring the old code was cheaper, faster, and simpler. Thankfully, he had a controlled environment to learn what a post-mortem is. As a lesson of sorts, the dev then had to write autotests.

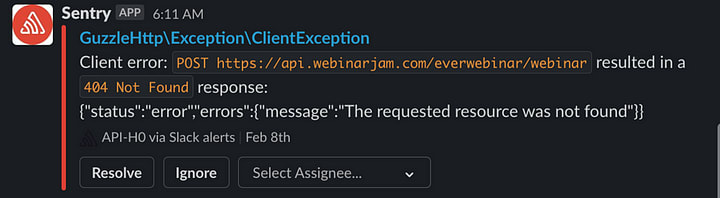

No error monitoring

Startups often have chats with early or active users. So, a support agent or a PM comes to you and says: “Hey, there’s a thing that doesn’t work for our user”, with details usually including wonderful descriptions like “I don’t know what it is” or “this is it but I have no idea how it breaks”. If that feature works for you, trying to find out what’s wrong and why it doesn’t work for that particular user is a pain. All you usually have is a cropped screenshot or just another “it still won’t work fix it ASAP” from that user.

Before we added a monitoring system, it took us 10 minutes at best to learn our website was down. Aaand it was the users who told us. With monitoring, we started fixing bugs before getting told about them. As a bonus, the logs helped us discover some extra bugs multiple times, like when an order was created on the website but not added to the database.
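For a feel of what even the simplest error monitoring gives you, here’s a minimal sketch using only Python’s standard `logging` module: a decorator that records every unhandled exception together with the function name and arguments, then re-raises it. The names `report_errors` and `save_order` and the dict standing in for a database are hypothetical; a real setup would more likely ship these records to a dedicated monitoring service, which I’m not showing here.

```python
import functools
import logging

logger = logging.getLogger("monitoring_sketch")


def report_errors(func):
    """Log any unhandled exception with its context, then re-raise it."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception:
            # logger.exception logs at ERROR level and attaches the traceback.
            logger.exception(
                "unhandled error in %s args=%r kwargs=%r",
                func.__name__, args, kwargs,
            )
            raise
    return wrapper


@report_errors
def save_order(order_id: int, database: dict) -> None:
    """Hypothetical handler: store an order, fail loudly on a duplicate."""
    if order_id in database:
        raise ValueError(f"order {order_id} already exists")
    database[order_id] = "created"
```

With something like this in place, a failed `save_order` call leaves a log record with the exact arguments, instead of a cropped screenshot from the user.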

Conclusion

You might ask: why are you still working for startups?! Well, I like forming streamlined processes from chaos. During my career, I’ve noticed two major issues in such projects: they don’t work with people, and they don’t have automation. I see only two ways out. One, educate. Two, implement trusted tools and practices everywhere you can. If it’s really bad, start with a CI/CD release pipeline. That usually also involves using Git and adding code review. All together, it’s a great foundation for further improvements like pipelining, monitoring, etc.

Once you finish both, you’ll get a completely different speed and quality of development. I myself usually follow this chart. Discussion and additions are welcome. And of course, we’re all looking forward to your stories!
