Universal Sentence Encoder

Cer et al. demonstrated that sentence embeddings transfer to downstream tasks better than word embeddings do. The traditional way of building a sentence embedding is to average, sum or concatenate a set of word vectors. This method is easy to compute but loses a lot of information. Cer et al. evaluated two well-known network architectures: a transformer-based model and a deep averaging network (DAN) based model.
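
To make the traditional approach concrete, here is a minimal sketch of the three aggregation methods in plain Python. The toy vectors and the helper names (`sum_pool`, `average_pool`, `concatenate`) are made up for illustration and do not come from the paper.

```python
# Toy 3-dimensional word vectors; the values are invented for illustration.
word_vectors = {
    "dog": [1.0, 0.0, 0.5],
    "bites": [0.0, 1.0, 0.25],
    "man": [0.5, 0.5, 0.0],
}


def sum_pool(vectors):
    """Element-wise sum of equal-length word vectors."""
    return [sum(column) for column in zip(*vectors)]


def average_pool(vectors):
    """Element-wise mean of equal-length word vectors."""
    return [total / len(vectors) for total in sum_pool(vectors)]


def concatenate(vectors):
    """Join word vectors end to end; the length grows with the sentence."""
    return [value for vector in vectors for value in vector]


first = [word_vectors[w] for w in ["dog", "bites", "man"]]
second = [word_vectors[w] for w in ["man", "bites", "dog"]]

# Averaging ignores word order, so two different sentences collapse
# into the same embedding.
print(average_pool(first) == average_pool(second))  # True
```

Concatenation keeps the order but produces a vector whose size depends on sentence length, which is one reason a learned, fixed-size sentence encoder is attractive.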

This story discusses the Universal Sentence Encoder (Cer et al., 2018) and covers the following:

- Data

- Architecture

- Implementation

Data

Because the encoder is designed to support multiple downstream tasks, multi-task learning is adopted. Cer et al. train the model on several web sources (Wikipedia, web news, question-answer pages and discussion forums) together with supervised data from the SNLI corpus. They then evaluate transfer on movie review, customer review, sentiment classification, question classification, semantic textual similarity and Word Embedding Association Test (WEAT) data.

Architecture

Text is tokenized with the Penn Treebank (PTB) method and passed to either the transformer architecture or the deep averaging network. As both models are designed to be general purpose, a multi-task learning approach is adopted. The training objectives include:

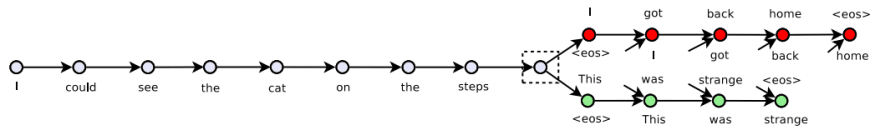

- As in Skip-Thought, predicting the previous and next sentences given the current sentence.

- Conversational response suggestion, which allows parsed conversational data to be included.

- Classification tasks on supervised data.

The Transformer architecture was introduced by Google researchers in 2017 (Vaswani et al., 2017). It stacks multiple self-attention blocks to learn context-aware word representations.
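
The core of self-attention can be sketched in a few lines of plain Python. This is a simplified, single-head version under a stated assumption: queries, keys and values are the token vectors themselves, whereas a real Transformer first applies learned linear projections and stacks several such blocks. The function names are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(token_vectors):
    """Scaled dot-product self-attention without learned projections.

    Assumption for brevity: queries, keys and values are all the raw
    token vectors.
    """
    dim = len(token_vectors[0])
    outputs = []
    for query in token_vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in token_vectors
        ]
        weights = softmax(scores)
        # Context-aware vector: weighted average of all token vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, token_vectors))
            for i in range(dim)
        ])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(tokens)
print(contextual)
```

Each output vector mixes information from every token in the sentence, which is what makes the resulting word representations context aware.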

The deep averaging network (DAN) averages the embeddings of words and bi-grams and feeds the result to a feedforward neural network.
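
A rough sketch of the DAN idea is shown below. The embedding size, the two-layer network, the ReLU activations and the random (untrained) weights are all assumptions chosen to keep the example small; they are not the configuration used by Cer et al.

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible


def bigrams(tokens):
    """Adjacent word pairs, e.g. ['a', 'b', 'c'] -> ['a b', 'b c']."""
    return [f"{left} {right}" for left, right in zip(tokens, tokens[1:])]


def average(vectors):
    """Element-wise mean of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def dense(inputs, weights, biases):
    """Fully connected layer followed by ReLU."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def random_vector(size):
    return [rng.uniform(-1.0, 1.0) for _ in range(size)]


def random_layer(n_in, n_out):
    """Untrained layer: random weights and zero biases."""
    return [random_vector(n_in) for _ in range(n_out)], [0.0] * n_out


def dan_encode(tokens, embeddings, layers):
    """Average word and bi-gram embeddings, then apply the feedforward layers."""
    units = tokens + bigrams(tokens)
    hidden = average([embeddings[unit] for unit in units])
    for weights, biases in layers:
        hidden = dense(hidden, weights, biases)
    return hidden


tokens = ["the", "movie", "was", "great"]
embeddings = {unit: random_vector(4) for unit in tokens + bigrams(tokens)}
layers = [random_layer(4, 4), random_layer(4, 3)]
sentence_embedding = dan_encode(tokens, embeddings, layers)
print(len(sentence_embedding))  # 3
```

In a trained model the embeddings and layer weights are learned jointly, so the averaging step stays cheap while the feedforward layers recover some of the information a plain average would lose.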

Two models are introduced because they address different concerns. The transformer architecture achieves better performance but needs more resources to train. DAN does not perform as well as the transformer architecture, but it is a simpler model and requires fewer training resources.

Implementation

To explore the Universal Sentence Encoder, you can simply follow the instructions from TensorFlow Hub.
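
As a starting point, the sketch below loads the encoder with `hub.load` and compares sentences by cosine similarity. It assumes that `tensorflow` and `tensorflow_hub` are installed and that the module handle `https://tfhub.dev/google/universal-sentence-encoder/4` is still current; neither the handle nor the helper names come from the original article, so check TensorFlow Hub before relying on them.

```python
import math

# Assumed module handle; check TensorFlow Hub for the current version.
MODULE_URL = "https://tfhub.dev/google/universal-sentence-encoder/4"


def load_encoder(url=MODULE_URL):
    """Load the encoder from TensorFlow Hub (downloads the model on first use)."""
    # Imported lazily so the helper below stays usable without TensorFlow.
    import tensorflow_hub as hub
    return hub.load(url)


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def demo():
    """Embed a few sentences and print their pairwise similarity."""
    sentences = [
        "How old are you?",
        "What is your age?",
        "The weather is nice today.",
    ]
    encoder = load_encoder()
    # The encoder returns one fixed-size vector per input sentence.
    embeddings = encoder(sentences).numpy().tolist()
    for i in range(len(sentences)):
        for j in range(i + 1, len(sentences)):
            score = cosine_similarity(embeddings[i], embeddings[j])
            print(f"{sentences[i]!r} vs {sentences[j]!r}: {score:.3f}")
```

Calling `demo()` downloads the model, so it needs network access the first time it runs. The two questions about age would be expected to score closer to each other than to the weather sentence.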

Take Away

- Multi-task learning is important for learning text representations. Many modern NLP model architectures use multi-task learning rather than a standalone data set.

- Rather than aggregating multiple word vectors to represent a sentence, learning the sentence embedding from those word vectors achieves better results.

About Me

I am a Data Scientist in the Bay Area, focusing on the state of the art in Data Science and Artificial Intelligence, especially NLP and platform-related topics. Feel free to connect with me on LinkedIn or follow me on Medium or Github. I offer short advice on machine learning problems or data science platforms for a small fee.

Reference

D. Cer, Y. Yang, S. Y. Kong, N. Hua, N. Limtiaco, R. St. John, N. Constant, M. Guajardo-Cespedes, S. Yuan, C. Tar, Y. H. Sung, B. Strope and R. Kurzweil. Universal Sentence Encoder. 2018

A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser and I. Polosukhin. Attention Is All You Need. 2017

M. Iyyer, V. Manjunatha, J. Boyd-Graber and H. Daume III. Deep Unordered Composition Rivals Syntactic Methods for Text Classification. 2015
