AI is often hailed (by me, no less!) as a powerful tool for augmenting human intelligence and creativity. But what if relying on AI actually makes us less capable of formulating revolutionary ideas and innovations over time? That’s the alarming argument put forward by a new research paper that went viral on Reddit and Hacker News this week.

The paper’s central claim is that our growing use of AI systems like language models and knowledge bases could lead to a civilization-level threat the author dubs “knowledge collapse.” As we come to depend on AIs trained on mainstream, conventional information sources, we risk losing touch with the wild, unorthodox ideas on the fringes of knowledge — the same ideas that often fuel transformative discoveries and inventions.

You can find my full analysis of the paper, some counterpoint questions, and the technical breakdown below. But first, let’s dig into what “knowledge collapse” really means and why it matters so much…

AIModels.fyi is a reader-supported publication. To receive new posts and support my work subscribe and be sure to follow me on Twitter!

AI and the problem of knowledge collapse

The paper, authored by Andrew J. Peterson at the University of Poitiers, introduces the concept of knowledge collapse as the “progressive narrowing over time of the set of information available to humans, along with a concomitant narrowing in the perceived availability and utility of different sets of information.”

In plain terms, knowledge collapse is what happens when AI makes conventional knowledge and common ideas so easy to access that the unconventional, esoteric, “long-tail” knowledge gets neglected and forgotten. It’s not about making us dumber as individuals, but rather about eroding the healthy diversity of human thought.

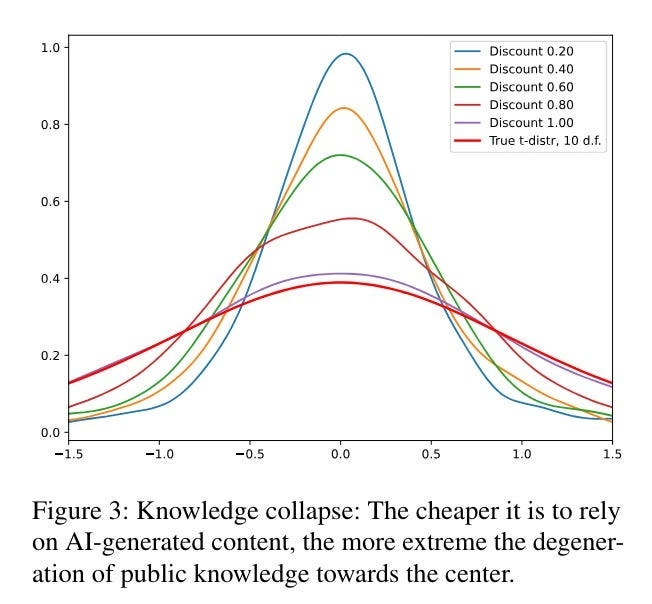

Figure 3 from the paper, illustrating the central concept of knowledge collapse.

Peterson argues this is an existential threat to innovation because interacting with a wide variety of ideas, especially non-mainstream ones, is how we make novel conceptual connections and mental leaps. The most impactful breakthroughs in science, technology, art and culture often come from synthesizing wildly different concepts or applying frameworks from one domain to another.

But if AI causes us to draw from an ever-narrower slice of “normal” knowledge, those creative sparks become increasingly unlikely. Our collective intelligence gets trapped in a conformist echo chamber and stagnates. In the long run, the scope of human imagination shrinks to fit the limited information diet optimized by our AI tools.

To illustrate this, imagine if all book suggestions came from an AI trained only on the most popular mainstream titles. Fringe genres and niche subject matter would disappear over time, and the literary world would be stuck in a cycle of derivative, repetitive works. No more revolutionary ideas from mashing up wildly different influences.

Or picture a scenario where scientists and inventors get all their knowledge from an AI trained on a corpus of existing research. The most conventional, well-trodden lines of inquiry get reinforced (being highly represented in the training data), while the unorthodox approaches that lead to real paradigm shifts wither away. Entire frontiers of discovery go unexplored because our AI blinders cause us to ignore them.

That’s the insidious risk Peterson sees in outsourcing more and more of our information supply and knowledge curation to AI systems that prize mainstream data. The very diversity of thought required for humanity to continue making big creative leaps gradually erodes away, swallowed by the gravitational pull of the conventional and the quantitatively popular.

Peterson’s model of knowledge collapse

To further investigate the dynamics of knowledge collapse, Peterson introduces a mathematical model of how AI-driven narrowing of information sources could compound across generations.

The model imagines a community of “learners” who can choose to acquire knowledge by sampling from either 1) the full true distribution of information using traditional methods or 2) a discounted AI-based process that samples from a narrower distribution centered on mainstream information.

This is actually a screencap from Primer’s video on voting systems but I pictured that the simulated “learners” in their “community” looked like this when reading the paper, and now you will too.
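To make the two ways of acquiring knowledge more concrete, here's a minimal sketch in Python. It's my own simplification, not the paper's code. I'm assuming the "true" distribution is a standard normal, that the AI returns only samples within `cutoff` standard deviations of the center, and that a fixed fraction `ai_share` of learners use the AI (in the paper, learners weigh costs and benefits instead). All function and parameter names are hypothetical.

```python
import random
import statistics


def sample_full(rng):
    """Traditional learning: draw from the full (assumed standard normal) distribution."""
    return rng.gauss(0.0, 1.0)


def sample_ai(rng, cutoff=1.0):
    """AI-mediated learning: same distribution, but the tails are cut off.

    Uses rejection sampling: keep drawing until the value lands in the center.
    """
    while True:
        x = rng.gauss(0.0, 1.0)
        if abs(x) <= cutoff:
            return x


def public_knowledge(n_learners, ai_share, rng, cutoff=1.0):
    """Pool one sample per learner; each learner uses the AI with probability ai_share."""
    samples = []
    for _ in range(n_learners):
        if rng.random() < ai_share:
            samples.append(sample_ai(rng, cutoff))
        else:
            samples.append(sample_full(rng))
    return samples


def tail_fraction(samples, cutoff=1.0):
    """Share of pooled knowledge that comes from the 'fringe' (beyond the cutoff)."""
    return sum(abs(x) > cutoff for x in samples) / len(samples)


rng = random.Random(0)
for share in (0.0, 0.5, 1.0):
    pool = public_knowledge(20000, share, rng)
    spread = statistics.pstdev(pool)
    print(f"ai_share={share}: spread={spread:.3f}, fringe={tail_fraction(pool):.3f}")
```

As `ai_share` rises, both the spread of the pooled samples and the share of fringe samples shrink. With everyone on the AI, the fringe share drops to zero by construction.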

Peterson then simulates how the overall “public knowledge distribution” evolves over multiple generations under different scenarios and assumptions. Some key findings:

In the paper's default model, a 20% discount on AI-generated content leaves public beliefs 2.3 times further from the truth than when there is no discount. Fringe knowledge gets rapidly out-competed.

Recursive interdependence between AI systems (e.g. an AI that learns from the outputs of another AI and so on) dramatically accelerates knowledge collapse over generations. Errors and biases toward convention compound at each step.

Offsetting collapse requires very strong incentives for learners to actively seek out fringe knowledge. They must not only recognize the value of rare information but go out of their way to acquire it at personal cost.
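The recursive effect in particular is easy to see in a toy simulation. The sketch below is an illustration under my own assumptions, not Peterson's model. Each "generation" fits a normal distribution to the previous generation's samples, then produces new samples from that fit with the tails beyond `cutoff` standard deviations dropped. The names `next_generation` and `simulate` and the default parameters are hypothetical.

```python
import random
import statistics


def next_generation(samples, rng, cutoff=1.5):
    """Fit a normal to the previous samples, then resample with the tails dropped."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    out = []
    while len(out) < len(samples):
        x = rng.gauss(mu, sigma)
        # Keep only "mainstream" values close to the fitted center.
        if abs(x - mu) <= cutoff * sigma:
            out.append(x)
    return out


def simulate(generations=5, n=5000, seed=0):
    """Return the spread (standard deviation) of knowledge at each generation."""
    rng = random.Random(seed)
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]  # generation 0: full distribution
    spreads = [statistics.pstdev(samples)]
    for _ in range(generations):
        samples = next_generation(samples, rng)
        spreads.append(statistics.pstdev(samples))
    return spreads


spreads = simulate()
for generation, spread in enumerate(spreads):
    print(f"generation {generation}: spread {spread:.3f}")
```

Because every generation trims the tails of a distribution that was already trimmed, the spread shrinks multiplicatively rather than by a fixed amount. That is the compounding the finding on recursive interdependence describes.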

Peterson also connects his model to concepts like “information cascades” in social learning theory and the economic incentives for AI companies to prioritize the most commercially applicable data. These all suggest strong pressures toward the conventional in an AI-driven knowledge ecosystem.

Critical Perspective and Open Questions

Peterson’s arguments about knowledge collapse are philosophically provocative and technically coherent. The paper’s formal model provides a helpful framework for analyzing the problem and envisioning solutions.

However, I would have liked to see more direct real-world evidence of these dynamics in action, beyond just a mathematical simulation. Empirical metrics for tracking diversity of knowledge over time might help test and quantify the core claims. The paper is also light on addressing potential counterarguments.
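As one example of what such a metric could look like, here is a small sketch that scores the diversity of a set of topic labels with Shannon entropy. This is my own suggestion rather than anything proposed in the paper. The topic labels and the two "years" of data are made up purely for illustration, and `shannon_entropy` and `effective_topics` are hypothetical helper names.

```python
import math
from collections import Counter


def shannon_entropy(labels):
    """Entropy in bits of the label distribution; higher means more diverse."""
    counts = Counter(labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def effective_topics(labels):
    """Number of equally common topics that would give the same entropy."""
    return 2 ** shannon_entropy(labels)


# Made-up topic labels for what a community reads in two different years.
year_a = ["ml", "ml", "biology", "history", "folklore", "physics", "poetry", "ml"]
year_b = ["ml", "ml", "ml", "ml", "ml", "physics", "ml", "ml"]

for name, labels in (("year_a", year_a), ("year_b", year_b)):
    print(f"{name}: entropy={shannon_entropy(labels):.2f} bits, "
          f"effective topics={effective_topics(labels):.2f}")
```

A falling entropy over time in real reading, citation, or query data would be consistent with the narrowing the paper describes, though on its own it would not show that AI is the cause.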

Some key open questions in my mind:

Can’t expanded AI access to knowledge still be a net positive in terms of innovation even if it skews things somewhat toward convention? Isn’t lowering the barriers to learning more important?

What collective policies, incentives or choice architectures could help offset knowledge collapse while preserving the efficiency gains of AI knowledge tools? How can we merge machine intelligence with comprehensive information?

Might the economic incentives of AI companies shift over time to place more value on rare data and edge cases as mainstream knowledge commoditizes? Could market dynamics actually encourage diversity?

Proposed solutions like reserving AI training data and individual commitment to seeking fringe knowledge feel only partially effective to me. Solving this seems to require coordination at a social and institutional level, not just individual choices. We need shared mechanisms to actively value and preserve the unconventional.

I’m also curious about the role decentralized, open knowledge bases might play as a counterweight to AI-driven narrowing. Could initiatives like Wikidata, arXiv, or IPFS provide a bulwark against knowledge collapse by making marginal information more accessible? There’s lots of room for further work here.

The stakes for our creative future

Ultimately, Peterson’s paper is a powerful warning about the hidden dangers lurking in our rush to make AI the mediator of human knowledge, even for people like me who are very pro-AI. In a world reshaped by machine intelligence, preserving the chaotic, unruly diversity of thought is an imperative for humanity’s continued creativity and progress.

We might be smart to proactively design our AI knowledge tools to nurture the unconventional as well as efficiently deliver the conventional. We need strong safeguards and incentives to keep us connected to the weirdness on the fringes. Failing to do so risks trapping our collective mind in a conformist bubble of our own design.

A conformist bubble of our own design!

So what do you think — are you concerned about knowledge collapse in an AI-driven culture? What strategies would you propose to prevent it? Let me know your thoughts in the comments!

And if this intro piqued your interest, consider becoming a paid subscriber to get the full analysis and support my work clarifying critical AI issues. If you share my conviction that grappling with these ideas is essential for our creative future, please share this piece and invite others to the discussion.

The diversity of human knowledge isn’t some abstract nice-to-have — it’s the essential catalyst for humanity’s most meaningful breakthroughs and creative leaps. Preserving that vibrant range of ideas in the face of hyper-efficient AI knowledge curation is a defining challenge for our future as an innovative species!


Top comments (13)

I guess it's a "standing on the shoulders of giants" argument for me: if massive amounts of knowledge are black-boxed and easy to use, then that gives people the potential to build on it and innovate on the fringes. If you can't innovate because you don't understand the basic principles, then that would create an issue.

It does feel like a moment of societal revolution, with many more capabilities in the hands of more people, but also potentially a changing of social status and a "globalisation" of thought.

Maths used to be about abacuses and times tables. Those skills are lost or reducing because they are irrelevant to solving problems today.

If I can tell my stories in 2035 using Sora V10.5, in a way that looks like a Hollywood blockbuster, then success will be about the story and not the budget required to create it - that feels like an avenue to great creative expression.

Even if AI in the future was itself creative, I'm not sure that's even a problem, creativity isn't a hierarchy - things touch people or they don't. If every technical challenge is solved by AI; if every physical job is done by a robot; humanity can still have purpose and that purpose won't be about buying enough food to eat, or paying the rent. Now that has extreme societal change written all over it.

"If I can tell my stories in 2035 using Sora V10.5, in a way that looks like a Hollywood blockbuster, then success will be about the story and not the budget required to create it - that feels like an avenue to great creative expression." - This right here is a very good point!

Exactly, apart from the problem solving and the technical challenges, you have forgotten the SM. Imagine that maybe one day all posting and tweeting will be done by AI and people will finally talk to each other again. I can't say that would be a loss.

I read something a few days ago that got me thinking:

And I am not 100% innocent here, as I have even written a plugin for WordPress that drafts posts for you automatically using AI, but it's hard to see how little we understand AI and how to use it for the better. If I need to ask an AI to write a comment on a LinkedIn post, I shouldn't even be commenting, as I have nothing to say.

Maybe when the hype settles down and we start seeing startups falling apart, AI will be put to better use. For now, it's something like where blogs and Twitter were in 2006: "great, but what do I do with it?"

The VC "community" has already shown us what happens in a kind of capital collapse where funding is often directed to support existing entities rather than to enable new ones to be developed. So I suspect it would be something like that, only amplifying it further.

I appreciate the argument for education, but hear me out: If a person already has access to state-of-the-art educational AI technologies, doesn't that imply that they have access to the rest of the Internet? And if so, they have access to what is, in my opinion, a much better source of information than AI. Thus, I would argue that AI does not lower the barriers to education, and it instead makes it easier to receive a more skewed education.

Also, I'm not just concerned about knowledge collapse, but I'm also concerned about the homogenization of our culture. You mentioned Reddit, which is a perfect example, but there are many other platforms that "force" their users to conform to a specific design, ideology, or something else. I don't think this is entirely AI driven, but I think that AI has shed a good deal of light on it.

Good concern, bro, but I think it will instead boost creativity as time goes on.

Right now a friend of mine is conducting a research project he calls "plastecity": how plastic materials can generate electricity and conduct it as well as metals do.

When I ask an AI whether plastic materials can conduct electricity, it tells me by default that it's impossible,

based on the facts it has. But thanks to human desire and ambition, even a dream can be made real.

Just don't be worried, and keep thinking outside the box.

I’m not personally worried. As things get skewed and homogenized it will just trigger a deep inner urge to strike out and touch something real.

The internet today has already shown how this will play out with AI to some degree. The internet used to be a much more creative place! You used to “stumble upon” strange and interesting websites. Why? Because the cost of creating that content was much higher, and fewer people had an interest in crossing those technical barriers. You could say the internet was indeed “fringe.”

Now we have a homogenous internet gobbled up by mega corps in the same way strip malls have strip mined our town squares.

BUT, we have never been more connected and more in touch with global happenings. We have never been in deeper global contact.

I see the pendulum swinging back toward authentic self expression, toward diving head first into intimate contact with the real world

Great post and great questions raised. I'm one of those who believe AI can in some form stunt knowledge and the ability to think creatively towards solutions. But considering that both AI and the Internet are great learning tools with wide sources of information, I believe it all comes down to the individual. Will they simply copy-paste and get an answer? Or will they chat back and forth about how and why the solution came to be? As someone who studies code, I find that learning 'how to learn' is one of the things that will determine if you can be successful or just stubborn. Are you training your brain to get answers, or are you training to solve problems?

Without that mindset people may simply use AI without even thinking of what consequences it could have. There is too much of a good thing sometimes, I suppose.

Society does not need AI to kill unorthodox ideas, that's exactly why it's called orthodox. In the middle ages, they burned the witches. In Islamic states, they kill the sinners. Same principle, only difference: in our brave new world, we tend to overestimate our degrees of freedom.

Thanks for this one. This is one of my concerns about wide use of generative AI - especially if going unchecked.

Complete non-expert here 😄, just passionate about nurturing creativity - I can think of a few reasons this can go wrong:

So we're dealing with human nature, which is really the same problem we're always dealing with as technology and societies advance. And let's remember that we also love novelty and to feel useful, so there's a natural counter-side too.

I like to think we can counter potential pitfalls (the type in the article) of widely adopted generative AI by creating tools which encourage creativity, curiosity and critical thinking. These are qualities we're going to need to adapt well to a changing world, so we should take care to preserve and grow them more than ever before.

I agree. That's what it is meant to be; abusing it is a negative human error.