Stunning shot of the Bernina Range courtesy of Pietro Branca.

Azure CosmosDB and Rust

In this series of blog posts we will explore the power of Azure CosmosDB, using Rust as the programming language. This is not meant to be an authoritative essay on either: we will focus on GettingTheJobDone©.

To have a concrete example, suppose we are tasked with creating a globally distributed shopping platform. We will start by identifying the functionality we need, then see how CosmosDB helps us achieve our goals and, finally, how to interact with CosmosDB using Rust.

For the sake of this blog we will simplify a lot of stuff here - we won't be touching the front-end for example - but in the end you'll hopefully be able to complete the missing pieces on your own.

What we need

Our application will be composed of:

- Goods database: goods name, description, image and so on.

- Inventory service: how many goods we have in storage.

- Shopping basket service: every user can add items to the basket before committing to a purchase.

This is pretty standard stuff. Let's explore which out-of-the-box CosmosDB features can help us.

Geo-replication

Azure CosmosDB can optionally replicate the data in various locations around the world. Turning this on is just a matter of clicking on a setting in the Azure Portal.

This is a great feature to have: if our shop serves customers from around the world, we need a backend close to them, otherwise the latency will kill the user experience. We do not want our Europe-based customers to wait 600 ms on network latency alone just because we happen to have chosen a US-based Azure region. With Azure CosmosDB we can enable and disable this setting at will. We can choose to have a primary read/write region and read-only secondary ones (single master setup) or elect to have many read/write regions at the same time (multi master setup).

For the goods database we will use a single master setup.

For the inventory service we will have a multi master replicated account (we assume we have one big warehouse). The same applies to the shopping basket service. While unusual, this way our customers can start shopping in LA and commit the shopping basket in Rome!

Since we are not used to free lunches in our trade, some of you might be wondering what the downside of having a replicated database is. Generally speaking, we know that a distributed database has either eventual consistency or horrible latency (CAP theorem being what it is).

In CosmosDB, however, we can use eventual consistency when it suits us and elect to have strong consistency only when needed.

The consistency levels of CosmosDB are quite complex because they support multiple scenarios: I will try to explain the more common ones here.

Consistency Levels

For our application we will use just three consistency levels: eventual, session, and strong. For a more precise and comprehensive list of the available consistency levels please consult the official documentation: https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels.

Eventual consistency basically means "give me the answer as fast as you can". This means we may get stale data, or even some data updated while the rest is not. For our goods service we will use eventual consistency: the updates are rare and we do not care if a customer sees a slightly outdated version of our goods. We care about having a fast UI.

Session consistency means "make sure that I see what I've just written". This means we can have outdated data but we are guaranteed to have our writes reflected in subsequent queries. The shopping basket service uses session consistency. We do not care if the other baskets are updated, we just care about our own: when the customer adds an item to the basket they expect it to happen!

Strong consistency means "make sure I get the most accurate data available". This means we will always get the updated data regardless of where the last write happened. The inventory service uses strong consistency. We want to make sure to have the items available before accepting a purchase!

So, why do we not use strong consistency everywhere? The answer is that strong consistency is slow. This is especially true if you have a geo-replicated topology.
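The choices made above can be written down as a small lookup. This is only a sketch: the `Service` enum and the `consistency_for` function are names made up for this post, not part of the SDK. The header name `x-ms-consistency-level` is the one the CosmosDB REST API uses to request a consistency level for a single call.

```rust
/// The three consistency levels used in this post (CosmosDB offers more).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ConsistencyLevel {
    Eventual,
    Session,
    Strong,
}

impl ConsistencyLevel {
    /// Value to send in the `x-ms-consistency-level` request header.
    pub fn as_header_value(self) -> &'static str {
        match self {
            ConsistencyLevel::Eventual => "Eventual",
            ConsistencyLevel::Session => "Session",
            ConsistencyLevel::Strong => "Strong",
        }
    }
}

/// Hypothetical names for the three services of our shop.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Service {
    Goods,
    ShoppingBasket,
    Inventory,
}

/// Returns the consistency level this post picks for each service.
pub fn consistency_for(service: Service) -> ConsistencyLevel {
    match service {
        // Rare updates, stale reads are acceptable: favour speed.
        Service::Goods => ConsistencyLevel::Eventual,
        // Customers must see their own writes.
        Service::ShoppingBasket => ConsistencyLevel::Session,
        // Stock must be accurate before accepting a purchase.
        Service::Inventory => ConsistencyLevel::Strong,
    }
}
```

Keeping the mapping in one place makes it easy to revisit a choice later, for example if strong consistency turns out to be too slow for the inventory service.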

Time to live

In Azure CosmosDB you can optionally set an expiration date for every document. This means that CosmosDB will delete any expired document automagically. If you know that some of your data is useless after some time, setting an expiration date saves you from having a purge procedure in place. You can also ask CosmosDB to set expiration dates on its own without any intervention on your part!

Our shopping basket service stores expiring documents: if the customer does not update a shopping basket for a week we will let CosmosDB clean it up for us. This will keep our database free of useless data without a single line of code.
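To make the one-week rule concrete, here is a minimal sketch of the arithmetic CosmosDB does for us. It assumes TTL is enabled on the collection, that the time to live is expressed in seconds, and that it is counted from the document's last modification time (the `_ts` system property). The `Basket` struct and `is_expired` function are hypothetical and exist only for illustration: with CosmosDB we never run this check ourselves.

```rust
/// One week expressed in seconds, the unit used for TTL values.
pub const ONE_WEEK_SECS: i64 = 7 * 24 * 60 * 60;

/// Hypothetical, trimmed-down view of a shopping basket document.
pub struct Basket {
    /// Last modification time, in seconds since the Unix epoch (`_ts`).
    pub last_modified: i64,
    /// Per-document time to live in seconds (`ttl`), if set.
    pub ttl: Option<i64>,
}

/// Returns true if the basket would be considered expired at `now`.
/// A missing or non-positive TTL is treated as "never expires".
pub fn is_expired(basket: &Basket, now: i64) -> bool {
    match basket.ttl {
        Some(ttl) if ttl > 0 => now - basket.last_modified >= ttl,
        _ => false,
    }
}
```

Note that every update to the basket moves `last_modified` forward, so an active customer keeps pushing the expiration further away.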

Embedded attachments

Azure CosmosDB treats attachments as a first-class part of your document. You can either store the attachments as links or you can ask CosmosDB to store them for you in an automatic blob storage. We can leverage this functionality in the goods database to store images. This is cool because we do not need to write any image storage and retrieval code: we can just add our images as attachments and CosmosDB will store them for us. It will also give us a valid Azure Storage URL for later retrieval. If the blob has public access (more info here), we can use the returned URL directly in our frontend with no additional code: Azure storage URIs are REST compliant!

Show me some code!

Enough jabber, let's see some code! We start off by adding the mandatory reference to the unofficial SDK for Rust in Cargo.toml:

    [dependencies]
    azure_sdk_cosmos = "0.100.3"

This crate is fully async so it is a good idea to use the async main attribute macro offered by Tokio. This is accomplished by adding this dependency to Cargo.toml:

tokio = { version = "0.2", features = ["macros"] }

And by replacing the main function created by Cargo with this one:

    #[tokio::main]
    async fn main() -> Result<(), Box<dyn std::error::Error>> {
        println!("Hello, world!");
        Ok(())
    }

Now let's initialize the CosmosDB client. The CosmosDB client is our go-to trait that allows us to interact with our CosmosDB account. The client requires two things in order to successfully interact with CosmosDB: your account name and your authorization token (while there are other ways to authenticate the client, we will use the master key to keep things simple).

We retrieve these parameters from the command line, like this:

    let account = std::env::args()
        .nth(1)
        .expect("pass the CosmosDB account name as first parameter!");
    let master_key = std::env::args()
        .nth(2)
        .expect("pass the CosmosDB master key as second parameter!");
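The expect calls above panic when a parameter is missing. That is fine for a demo, but if you prefer a regular error, the same logic can be sketched as a small function. The name `parse_args` is made up for this post. It takes any list of strings so it can be tried without touching the real command line, and it skips the first item just like we do with `std::env::args()`, where position 0 is the program name.

```rust
/// Extracts the account name and the master key from an argument list.
/// The first item is assumed to be the program name and is skipped.
pub fn parse_args<I>(args: I) -> Result<(String, String), String>
where
    I: IntoIterator<Item = String>,
{
    let mut args = args.into_iter().skip(1);

    // `ok_or` turns a missing argument into an error message,
    // and `?` converts that message into the `String` error type.
    let account = args
        .next()
        .ok_or("pass the CosmosDB account name as first parameter!")?;
    let master_key = args
        .next()
        .ok_or("pass the CosmosDB master key as second parameter!")?;

    Ok((account, master_key))
}
```

In main this would be called as `let (account, master_key) = parse_args(std::env::args())?;`, since a `String` error converts into `Box<dyn std::error::Error>`.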

And then we instantiate the client with these two lines:

    // create an authorization token given a master key
    let authorization_token = AuthorizationToken::new_master(&master_key)?;

    // create a cosmos client for the account, using the authorization token above
    let client = ClientBuilder::new(&account, authorization_token)?;

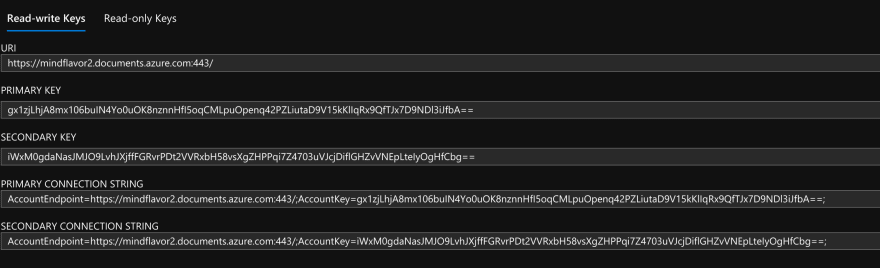

You can retrieve this information in the Keys tab of your CosmosDB blade in the Azure portal. For example:

The account name in my case is mindflavor2 (you can see it as the first part of the URI) and the two keys are shown as Primary Key and Secondary Key. Notice you can find the read only keys in the other tab. Please note that you can regenerate the keys at any time. This is useful if the keys are compromised because, say, you unfortunately posted a screenshot on the web, just like I did right now 😇.

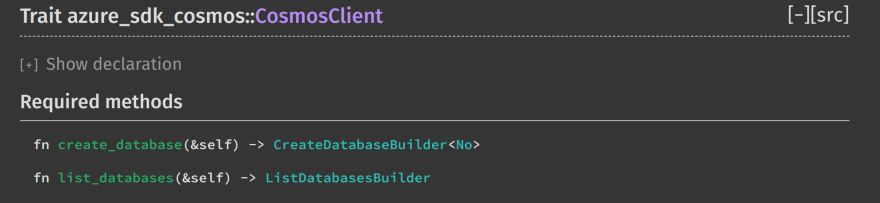

Let's check what we can do with our shiny new client, shall we?

We can check the functions exported by this trait by looking at the docs azure_sdk_cosmos/trait.CosmosClient.html:

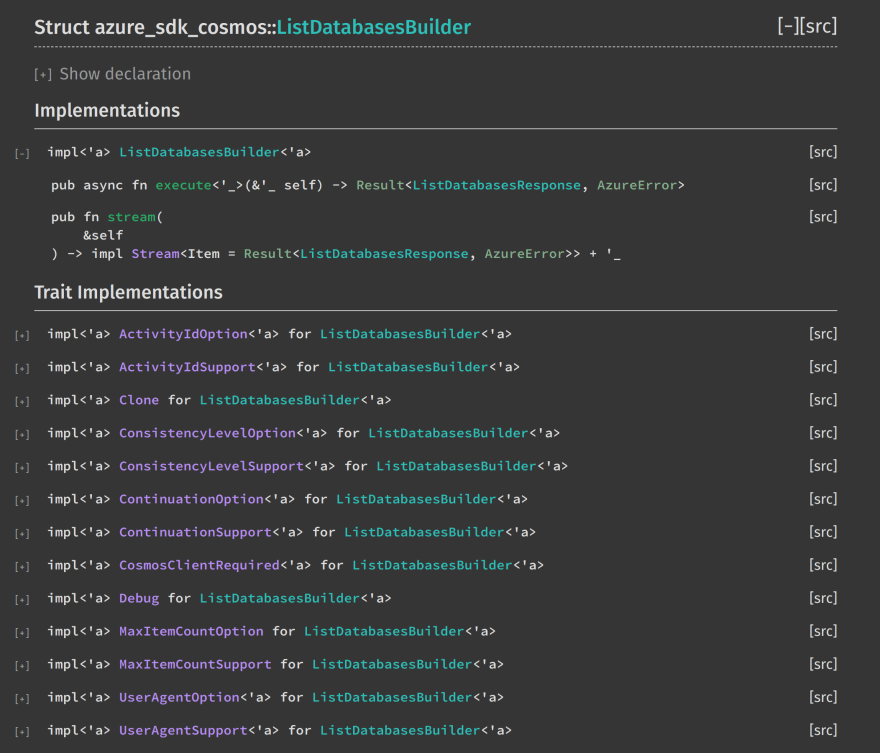

Let's try the list databases function. Clicking on the ListDatabaseBuilder gives us this:

Again, there are just two functions, execute and stream. This is a common theme across the SDK functions: we call the function in the client trait and get back a Builder struct. We then call the execute function in the Builder struct to actually execute the call.

Don't panic, the Rust code is just a one-liner:

let databases = client.list_databases().execute().await?;

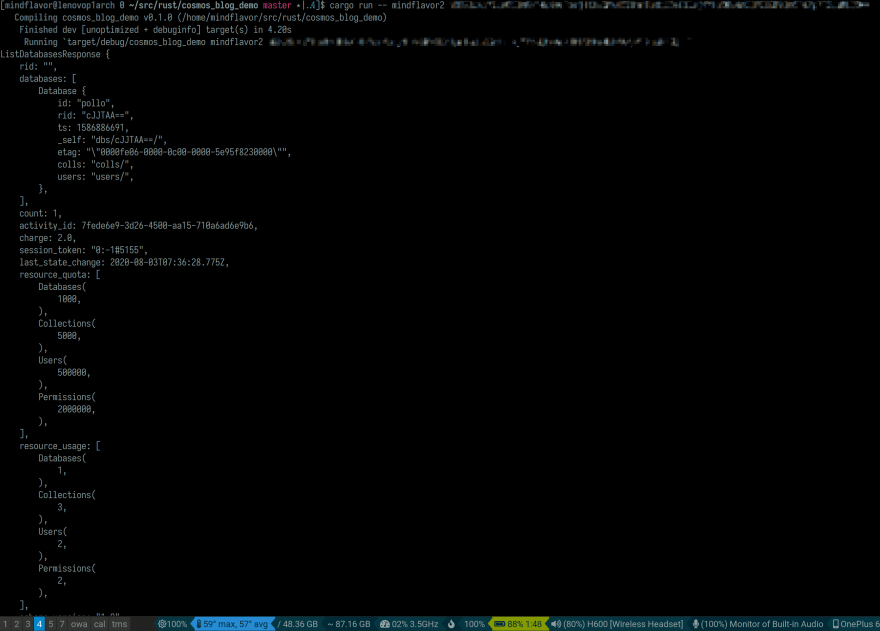

Seems too simple to be true? Go ahead and run it! The complete source code so far is:

    use azure_sdk_cosmos::prelude::*;

    #[tokio::main]
    async fn main() -> Result<(), Box<dyn std::error::Error>> {
        let account = std::env::args()
            .nth(1)
            .expect("pass the cosmosdb account name as first parameter!");
        let master_key = std::env::args()
            .nth(2)
            .expect("pass the cosmosdb master key as second parameter!");

        let authorization_token = AuthorizationToken::new_master(&master_key)?;
        let client = ClientBuilder::new(&account, authorization_token)?;

        let databases = client.list_databases().execute().await?;
        println!("{:#?}", databases);

        Ok(())
    }

If the key is right you will get the list of your databases! Hurrah! This time I even masked my primary key properly in the screenshot 👍.

But, wait, you do not have a database? Just create one with the Azure portal or use the Rust SDK - you might have guessed, the only other Cosmos client function is called create_database 😉.

One last thing before closing this section: we have used the execute() function to get the list of databases, but there is also the stream() function. Why two functions? The thing is, you can potentially have a lot of databases. In that case the execute() function would be very slow, having to return a lot of data. For this reason Azure limits the number of items returned by a single REST call. Don't worry though: Azure gives you a continuation token that you can pass to the same API again in order to keep enumerating the remaining items.

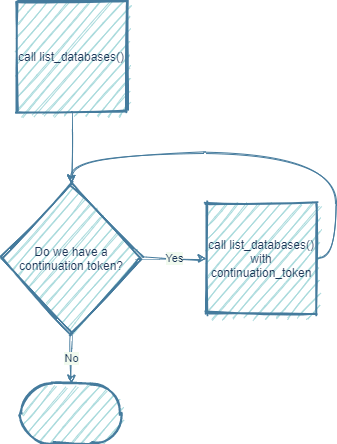

In other words you should keep calling the same API over and over until you are out of items (signaled by the lack of continuation token):

This is where the stream() function comes in. You do not have to code the loop by yourself: the stream() function handles this logic for you. In the following posts we will see examples of how to do this.
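To get a feeling for what stream() spares us, here is a sketch of the continuation token loop written by hand. Nothing here talks to CosmosDB: `list_page` is a hypothetical stand-in for the paged REST call, and its token is simply the position of the next item. A real continuation token is an opaque string that you should pass back untouched.

```rust
/// One page of results plus the token needed to ask for the next page.
pub struct Page {
    pub items: Vec<String>,
    pub continuation_token: Option<String>,
}

/// Hypothetical stand-in for a paged REST call: returns at most
/// `page_size` items, starting where the continuation token points.
pub fn list_page(all: &[String], page_size: usize, token: Option<&str>) -> Page {
    let start = token
        .and_then(|t| t.parse::<usize>().ok())
        .unwrap_or(0)
        .min(all.len());
    // Always advance by at least one item so the loop below terminates.
    let end = (start + page_size.max(1)).min(all.len());
    Page {
        items: all[start..end].to_vec(),
        // No token means there is nothing left to enumerate.
        continuation_token: if end < all.len() {
            Some(end.to_string())
        } else {
            None
        },
    }
}

/// Keeps calling `list_page` until no continuation token comes back.
pub fn list_all(all: &[String], page_size: usize) -> Vec<String> {
    let mut result = Vec::new();
    let mut token: Option<String> = None;
    loop {
        let page = list_page(all, page_size, token.as_deref());
        result.extend(page.items);
        match page.continuation_token {
            Some(next) => token = Some(next),
            None => break,
        }
    }
    result
}
```

The shape of `list_all` is the important part: call, collect, check the token, repeat. That is the loop the SDK hides behind stream().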

Create a database - builder pattern

The Rust CosmosDB SDK is meant to be as rusty as possible. In other words, if the code compiles it should work as expected. That means catching as many errors as possible at compile time.

For example let's try to create a new database with this simple line:

    client
        .create_database()
        .execute()
        .await?;

If we try this, the code won't compile. The compiler will tell us that the method execute does not exist in azure_sdk_cosmos::CreateDatabaseBuilder<'_, azure_sdk_core::No>. The important part is the No. If we look at the definition in the docs azure_sdk_cosmos/struct.CreateDatabaseBuilder.html:

We can clearly see that the struct has a type parameter called DatabaseNameSet: ToAssign. This type, as stated by the compiler, is No.

In other words the compiler is saying: "Is DatabaseNameSet? No! How can I create a database without a name? Go back and check your code!".

Yes, we forgot to give the new database a name! 🤦

The SDK, instead of failing at runtime, prevents us from compiling the code altogether. That's nice!
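If you are curious how a builder can enforce this, here is a stripped-down sketch of the idea. It is not the SDK's actual code: the struct below only borrows the name CreateDatabaseBuilder, the `Yes` and `No` markers are defined locally to mimic the `No` type we saw in the compiler error, and execute just returns a JSON body instead of calling CosmosDB.

```rust
use std::marker::PhantomData;

/// Marker types recording, at the type level, whether a value was set.
pub struct Yes;
pub struct No;

/// Simplified, hypothetical builder. `NameSet` is `No` until a
/// database name is provided, then it becomes `Yes`.
pub struct CreateDatabaseBuilder<'a, NameSet> {
    database_name: Option<&'a str>,
    name_set: PhantomData<NameSet>,
}

impl<'a> CreateDatabaseBuilder<'a, No> {
    /// A fresh builder starts without a name.
    pub fn new() -> Self {
        CreateDatabaseBuilder {
            database_name: None,
            name_set: PhantomData,
        }
    }

    /// Setting the name flips the marker from `No` to `Yes`.
    pub fn with_database_name(self, name: &'a str) -> CreateDatabaseBuilder<'a, Yes> {
        CreateDatabaseBuilder {
            database_name: Some(name),
            name_set: PhantomData,
        }
    }
}

impl<'a> CreateDatabaseBuilder<'a, Yes> {
    /// `execute` is only defined for the `Yes` state.
    /// Here it returns the request body instead of sending it.
    pub fn execute(&self) -> String {
        // The name is always present once the marker is `Yes`.
        let name = self.database_name.unwrap();
        format!(r#"{{"id":"{}"}}"#, name)
    }
}
```

With this sketch, `CreateDatabaseBuilder::new().execute()` is rejected by the compiler because no execute method exists for `CreateDatabaseBuilder<'_, No>`, which is the same kind of error the SDK gave us.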

Let's fix this by passing the database name:

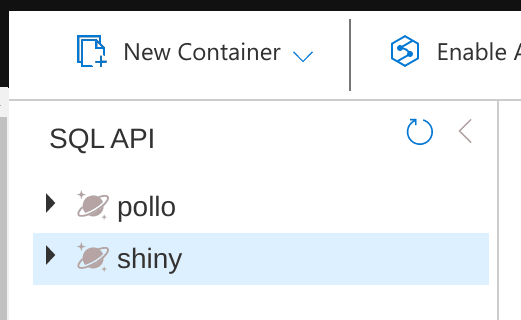

    client
        .create_database()
        .with_database_name(&"shiny") // we must specify a name!!!
        .execute()
        .await?;

Now the compiler is happy and so will be CosmosDB. Sure enough there it is!

Be mindful that errors at runtime can still happen. The SDK prevents you from making the wrong calls according to the documentation (these calls would fail every single time).

Correct calls can still fail for various reasons including service outages, network errors, order 66 and so on. It's important to handle these transient errors appropriately.
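One common way to deal with transient errors is to retry the call a few times, waiting a bit longer after each failure. The sketch below shows the idea with plain blocking code and a made-up helper called `retry_with_backoff`. It is an illustration only: the SDK calls are async, so a real implementation would await an async timer instead of sleeping the thread, and it should retry only errors that are actually transient.

```rust
use std::thread::sleep;
use std::time::Duration;

/// Calls `operation` until it succeeds or `max_attempts` is reached,
/// doubling the waiting time after every failed attempt.
pub fn retry_with_backoff<T, E, F>(
    max_attempts: u32,
    initial_delay: Duration,
    mut operation: F,
) -> Result<T, E>
where
    F: FnMut() -> Result<T, E>,
{
    let mut delay = initial_delay;
    let mut attempt = 1;
    loop {
        match operation() {
            Ok(value) => return Ok(value),
            // Out of attempts: hand the last error back to the caller.
            Err(error) if attempt >= max_attempts => return Err(error),
            Err(_) => {
                sleep(delay);
                delay *= 2;
                attempt += 1;
            }
        }
    }
}
```

The closure passed as `operation` would wrap the call we want to protect, for example the database listing we did earlier.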

Next in series

This concludes this first post. We have learned how easy it is to instantiate a Cosmos client and how to call a basic function. In the next post we will dive into the other functions available.

Happy coding,

Francesco Cogno

Top comments (1)

Hey,

I am trying to follow this guide to connect to CosmosDB and test some range-based query functionality. However, I am blocked at connecting to CosmosDB: I have used the primary key as shown in the Azure Keys blade, but I always end up with the error below.

FailureError(UnsupportedToken { token: "interopUsers=2000", s: "databases=1000;collections=5000;users=500000;permissions=2000000;clientEncryptionKeys=20;interopUsers=2000;authPolicyElements=200000;roleDefinitions=100;roleAssignments=2000;" }

Any pointers that can help me solve this?