In the market for generic RAG frameworks, the different providers are fighting over who can provide 67% accuracy versus 65%. And when you run an off-the-shelf RAG framework on your use case, it will end up closer to 50% accuracy. Is this the best that the industry can do?

Actually, yes, it is. The problem is that these frameworks are meant to be plug-and-play; you are supposed to be able to build the product once and then sell it to thousands of customers in different verticals. But it just doesn’t work well enough, and it’s unclear whether generic RAGs will ever be able to deliver on this promise. Let’s see why.

DIY

The problem with generic RAG frameworks is just that—they are generic and don’t incorporate specific know-how about the vertical or the industry from domain experts. When it comes to information retrieval, the more specific and informed the key/query design of your index, the better accuracy you’ll get. To do that, you need domain experts with continuous refinement processes.

While these generic frameworks don’t deliver, RAG is not that hard to build in-house—and custom implementations are easier to optimize and perform much better than their generic counterparts. At the same time, engineers have every incentive to build RAG frameworks in-house: It doesn’t require extraordinary expertise to achieve good results, and it allows them to play around with interesting technology and improve their skills.

Custom RAGs typically perform dramatically better than generic ones for a simple reason: the more case-specific your RAG's design, the more accurate it will be.

Are We Building Just For Fun?

If we want to build just for fun, that’s fine! But if we’re looking for production in real-world cases, accuracy must go higher.

For example, if you were to build a generic RAG framework for the financial vertical, you might reach 90% accuracy by narrowing down the use case. But is that good enough for a big bank serving millions of customers? Can it afford to get 1 million answers wrong out of every 10 million? Wouldn't it make more sense to build something in-house, quickly and with more control, that could get close to 100% accuracy? In fact, it would be crazy not to.

What It Takes to Reach 99.999% Accuracy

It’s possible to build a 100% accurate RAG if you take the right approach and are willing to put in the effort rather than trying to avoid it. We’ve done so ourselves (and open-sourced it) with parlant-qna. Getting there involved a few strategic sacrifices, described below:

No Chunked Upstream Information

First, unlike generic plug-and-play RAG frameworks, we don't chunk upstream information at all; documents are sent to the LLM whole. This alone prevents many errors caused by context getting lost when a document is split into chunks.
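To make the contrast with chunking concrete, here is a minimal Python sketch of assembling an LLM prompt from whole documents. The `Document` type and the `build_context` and `build_prompt` helpers are hypothetical illustrations, not parlant-qna's API, and the sketch assumes some earlier step has already decided which documents are relevant.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Document:
    """A hypothetical upstream document, always kept whole (never chunked)."""
    doc_id: str
    text: str


def build_context(documents: list[Document], selected_ids: list[str]) -> str:
    """Concatenate the selected documents in full, each under its own header.

    Because nothing is split, a statement that depends on text elsewhere in
    the same document always reaches the LLM together with that text.
    """
    by_id = {doc.doc_id: doc for doc in documents}
    sections = []
    for doc_id in selected_ids:
        doc = by_id[doc_id]  # raises KeyError for an unknown id
        sections.append(f"### Document: {doc.doc_id}\n{doc.text}")
    return "\n\n".join(sections)


def build_prompt(question: str, context: str) -> str:
    """Wrap the whole-document context and the user's question into one prompt."""
    return (
        "Answer the customer's question using only the documents below.\n\n"
        f"{context}\n\n"
        f"Question: {question}"
    )
```

The trade-off is prompt size: whole documents consume far more tokens than a handful of chunks, which is where the latency and cost concerns discussed later come from.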

Independent Q&A

Our second strategic choice was to manage questions and answers independently, rather than heuristically parsing upstream knowledge bases (KBs). Think about it: you don’t write KB docs the same way you speak in a conversation. If you want your RAG framework to be more accurate, or at least want to adjust conversational responses without conflicting with upstream KBs, you have to provide information in the same conversational style you expect your AI agent to respond in. Where we break from convention is in making this hands-on work the core methodology rather than an afterthought. It pays off in the long run.
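Here is a minimal sketch of what managing Q&A independently might look like as a data structure, assuming a simple in-memory store. `QnA` and `QnAStore` are hypothetical names chosen for illustration, not parlant-qna's actual interface; the point is that each answer is authored and edited as its own record instead of being derived from a parsed KB.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class QnA:
    """A curated question/answer pair (hypothetical schema), written in the
    conversational tone the agent is expected to answer in."""
    qna_id: str
    question: str
    answer: str
    tags: set[str] = field(default_factory=set)  # free-form labels, e.g. "fees"


class QnAStore:
    """Minimal in-memory store. Each entry is added, edited, or removed on its
    own, so fixing one answer never requires re-parsing an upstream KB."""

    def __init__(self) -> None:
        self._entries: dict[str, QnA] = {}

    def upsert(self, entry: QnA) -> None:
        # Insert a new entry, or replace the existing one with the same id.
        self._entries[entry.qna_id] = entry

    def remove(self, qna_id: str) -> None:
        # Removing an unknown id is a no-op.
        self._entries.pop(qna_id, None)

    def get(self, qna_id: str) -> QnA | None:
        return self._entries.get(qna_id)

    def entries(self) -> list[QnA]:
        return list(self._entries.values())
```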

Dynamic Manual Tagging

The next decision we made was to support dynamic, manual tagging for every question. One of the big problems with RAG frameworks is what to do when they get answers wrong. With plug-and-play frameworks, fixing a wrong answer usually means rerunning the entire pipeline to re-parse your KBs, and you’ll often end up breaking something else. So we added a marker that lets you force a particular Q&A response to be returned for specific queries. This lets you deploy a fix to production quickly while buying time to improve how your automatic, predictive retrieval handles that question.
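The tagging idea can be sketched as a thin override layer in front of an automatic retriever. This is a hypothetical illustration, not parlant-qna's mechanism: `PinnedRetriever` is an invented name, the fallback retriever is assumed to be any function mapping a query to an answer ID, and matching is assumed to be exact after lowercasing and collapsing whitespace.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical type: any automatic retriever that maps a query to an answer id.
Retriever = Callable[[str], str]


class PinnedRetriever:
    """Wrap an automatic retriever with manually pinned query -> answer mappings."""

    def __init__(self, fallback: Retriever) -> None:
        self._fallback = fallback
        self._pins: dict[str, str] = {}

    @staticmethod
    def _normalize(query: str) -> str:
        # Lowercase and collapse whitespace so trivial variations still match.
        return " ".join(query.lower().split())

    def pin(self, query: str, answer_id: str) -> None:
        # A manual fix: this query will always return this answer.
        self._pins[self._normalize(query)] = answer_id

    def unpin(self, query: str) -> None:
        # Hand the query back to automatic retrieval once it has been improved.
        self._pins.pop(self._normalize(query), None)

    def retrieve(self, query: str) -> str:
        pinned = self._pins.get(self._normalize(query))
        if pinned is not None:
            return pinned  # the manual pin takes precedence
        return self._fallback(query)
```

Because a pin is just a data change, it can ship immediately, and removing it later hands the query back to the automatic retriever without touching any other answer.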

Knowledge-Base Optimization (rather than a perfected RAG)

Lastly, we advise our users to spend time curating and optimizing their knowledge base. When we discuss building RAG frameworks, and AI agents in general, we talk a lot (perhaps too much) about perfecting the algorithms that make them work.

But the key to creating RAG that actually returns highly accurate answers lies in curating the knowledge base, not in creating a perfect generic algorithm. Engineering teams will often spend months trying to create the ideal RAG framework when, in reality, dedicating a tenth of the time to manual knowledge base curation would get them better, more easily maintainable results—much faster. Alas, while some engineers may find this approach uninspiring, from a resource management and time-to-market perspective it’s often the smarter choice.

Disclaimer: It Does Come At A Cost

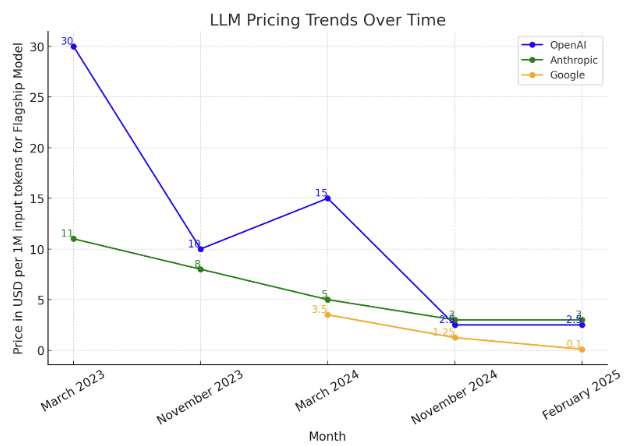

Some of the practices mentioned above do make RAG more accurate, but they can also increase latency and cost. That said, new hardware such as Cerebras and newer LLMs such as Gemini 2.0 Flash are making this approach increasingly practical.

Here’s a framework for assessing whether an approach like Parlant QnA’s is right for your use case.

Risk assessment: How severe are the consequences of an incorrect response?

Volume considerations: How many queries will your system handle daily? (As noted above, recent hardware and newer LLMs are making this approach more practical regardless.)

Expertise availability: Do you have domain experts ready to curate and edit the QnAs properly?

Time constraints: How quickly do you need to deploy?

Is 70% 'Good Enough'?

Perhaps the most frustrating aspect of the conversation about RAG frameworks is the industry's expectation that 70% accuracy is 'good enough'. That expectation is flat-out absurd.

I've heard many say, "70% is great because human representatives get even less than that." While that may well be true for some use cases (note that industry-average CSAT and FCR rates are around 80%), there are two important points to consider for GenAI agents.

First of all, if a human agent makes an error, various types of insurance can cover the business's losses.

Second, even when a human agent is only 70% accurate, the remaining 30% of errors are typically limited in impact. It's not often that a human representative will agree to sell a customer a truck for $1, but low-accuracy AI agents are known to do so. Businesses are therefore much more apprehensive about GenAI agents getting it wrong.

For real-life use cases, we need to start talking about AI accuracy the way we talk about most service-level agreements (SLAs): in terms of the number of nines. Instead of 67% versus 65%, we should build systems that compete between 99.99% and 99.999%, and accept the necessary trade-offs in the meantime, such as higher response latency, while AI hardware catches up and lets us pair that accuracy with the desired response speed.

As I illustrated earlier, a large bank handling 100 million conversations a month can’t afford 10 million faulty responses every month. Especially in regulated industries, where each faulty response carries reputational and legal risk, such low-accuracy approaches are simply not good enough for real-world applications.
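The arithmetic behind the "nines" framing is easy to check. This small sketch uses the figure of 100 million conversations a month from the bank example and assumes accuracy means the fraction of responses that are correct; each additional nine cuts the expected number of faulty responses by a factor of ten.

```python
def faulty_responses(volume: int, accuracy: float) -> int:
    """Expected number of incorrect answers for a given monthly volume."""
    return round(volume * (1 - accuracy))


MONTHLY_CONVERSATIONS = 100_000_000  # figure from the bank example

for accuracy in (0.70, 0.90, 0.9999, 0.99999):
    errors = faulty_responses(MONTHLY_CONVERSATIONS, accuracy)
    print(f"{accuracy:.3%} accurate -> {errors:,} faulty responses/month")

# 70.000% accurate -> 30,000,000 faulty responses/month
# 90.000% accurate -> 10,000,000 faulty responses/month
# 99.990% accurate -> 10,000 faulty responses/month
# 99.999% accurate -> 1,000 faulty responses/month
```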

Retrieving RAG to its full potential

If accuracy is mission-critical, enterprises can't afford to gamble on generic, plug-and-play RAG to power their customer-facing AI agents. A best-effort attempt at retrieval doesn’t cut it—especially when subtle errors can mean financial, legal, or reputational risk.
